Archive

Patches for the code of Peter Turchin’s Attrition Warfare Model

The paper Empirically Testing Predictions of an Attrition Warfare Model for the War in Ukraine, by Peter Turchin, recently showed up during one of my regular searches for software engineering data. A quick scan of the paper found that it is very empirical, and that the analysis coding was done in R; I could not resist checking out the source code.

One of my first jobs was helping academics fix coding issues with the programs they had written to solve scientific/engineering problems, and this R code reminded me of several habits of scientists who code: the single letter variables used in equations are directly mapped to identifier names, and there is no structure to the code. The code is so short (86 lines) that the lack of structure is a minor inconvenience; a few thousand lines, and it becomes a major headache. The code for Imperial’s COVID model was ten times larger.

Two mistakes in the code/paper jumped out at me, leading to this post. First, some background.

The empirical predictions in the paper are intended to provide insight into who is likely to win the ongoing Ukraine/Russia war. Fighting requires soldiers, and these are killed/wounded over time. The country that does not have enough soldiers to at least keep the opposition at bay loses.

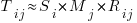

Turchin has proposed what he calls the Attrition War model, based on Lanchester’s laws (various attempts to validate Lanchester’s models, lots of maths to shake a stick at), and the paper solves this model’s set of eight differential equations (each country has the same set of four equations; the connection between the two sets is that one country’s casualty rate and Army size is influenced by the opposing country’s stock of war matériel). The four quantities modelled are casualties, army size, stock of warfare matériel, and production capacity.

Getting predictions out of differential equations requires being able to find a solution to the equations and feeding in numeric values for the various parameters.

Solving the equations is a maths problem, i.e., no knowledge of military matters required. Selecting the equations to solve and the numeric values to feed into the solution is what requires military knowledge. I don’t know anything about military matters; the following analysis is purely related to writing code to solve a set of differential equations, using the equations plus numeric values in Turchin’s November 2023 paper.

For obvious reasons, countries involved in the war do not publish information on the quantities modelled by these equations (which are also likely to be time-dependent). Turchin addresses the changeable nature of the numeric values by introducing various random components into his Attrition model.

From the perspective of solving the eight equations and presenting the results, the following are the two mistakes that jumped out at me (both involving the implementation of the random component):

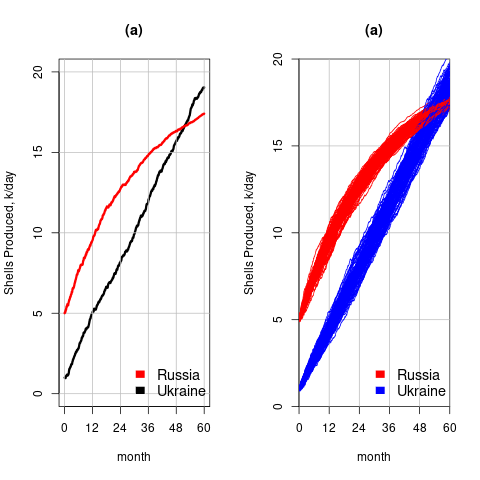

- When a model contains a random component, there will be a huge/infinite number of possible solutions. The takeaway plots in the paper show a single solution (for each of the four variables/two countries), with the width of the plotted lines and their fluctuating appearance suggesting that they contain multiple solutions. The plot below left shows the solution for artillery shell production over time, as it appears in the paper, while the plot below right shows 100 solutions (each line is a different solution; code):

The wedge of lines shows the range of possible solutions (each line drawn overwrites anything previously drawn, and plotting with transparent colors would show the density of solution at a given point; I decided to keep the code simple).
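The idea of plotting many solutions rather than one can be sketched in a few lines. The following is a minimal Python sketch (not the R code from the post), using a hypothetical one-variable stock equation with a Gaussian random term; the function names, the production rate, and the noise level are all assumptions chosen for illustration, and are not Turchin's equations or parameter values.

```python
import random

def simulate_stock(steps, production, noise_sd, rng):
    """Step a toy stock equation: each step adds production plus Gaussian noise."""
    stock = 0.0
    path = [stock]
    for _ in range(steps):
        stock += production + rng.gauss(0.0, noise_sd)
        path.append(stock)
    return path

def ensemble(runs, steps, production, noise_sd, seed=1):
    """Generate many independent solutions of the same stochastic model."""
    rng = random.Random(seed)
    return [simulate_stock(steps, production, noise_sd, rng) for _ in range(runs)]

def envelope(paths):
    """Per-step (min, max) over all runs, i.e., the wedge that the lines fill."""
    return [(min(vals), max(vals)) for vals in zip(*paths)]

# Hypothetical values: 100 runs of 50 steps each.
paths = ensemble(runs=100, steps=50, production=10.0, noise_sd=3.0)
wedge = envelope(paths)
print("range of final values over 100 runs:", wedge[-1])
```

Each run is one possible solution; the envelope widens over time because the random contributions accumulate.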

- All the random components are assumed to have a Gaussian distribution. When distribution information is not available, this is usually a safe choice. However, two of the random components must always have non-negative values (i.e., casualties and matériel used can never be negative). The Poisson distribution is the obvious candidate, and a simple search turned up an empirical paper agreeing with this choice (at least for casualties).

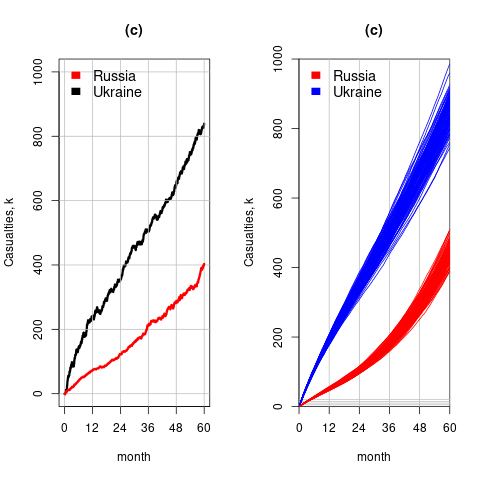

The plot below left shows one solution for the number of casualties over time, using the original code, while the plot below right shows 100 solutions using a Poisson distribution for the random component (code):

With a Poisson random component, the solutions don’t meander as much, and the variance is smaller than when a Gaussian is used. Technically, it is a more accurate model (if more variance is to be expected, a Negative Binomial distribution could be used; see commented out code).
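The non-negativity point can be checked directly. This is a minimal Python sketch (not the R code from the post) comparing draws from a Gaussian and a Poisson distribution with the same mean; the mean, standard deviation, and sample size are hypothetical values, and the Poisson sampler is Knuth's multiplication method, written out because the Python standard library has no Poisson generator.

```python
import math
import random

def poisson(lam, rng):
    """Draw one Poisson(lam) value using Knuth's multiplication method."""
    limit = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1

rng = random.Random(42)
mean, sd, draws = 4.0, 4.0, 10_000  # hypothetical values, not from the paper

gauss_draws = [rng.gauss(mean, sd) for _ in range(draws)]
pois_draws = [poisson(mean, rng) for _ in range(draws)]

# Count physically impossible (negative) values under each distribution.
neg_gauss = sum(1 for d in gauss_draws if d < 0)
neg_pois = sum(1 for d in pois_draws if d < 0)
pois_mean = sum(pois_draws) / draws

print("negative Gaussian draws:", neg_gauss)
print("negative Poisson draws:", neg_pois)
```

A Gaussian term with a mean close to zero regularly produces negative casualties or matériel use, while a Poisson term cannot.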

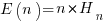

The latest (November) UK government estimate of Russian casualties is 300K, roughly three times larger than predicted by Turchin’s model. Changing the value for the ‘conversion rate of expended matériel to casualties’ brings the casualty prediction in line with current estimates (we have been hearing a lot about the accuracy of the Ukrainian targeting; see code for details).

I have also reworked the code to add some structure, e.g., separating out solving the equations and setting the initial conditions.

Turchin used the traditional approach to solving differential equations, the one we are taught at school. Before seeing the code, I was half expecting to see a System dynamics approach. The advantage of a system dynamics approach is flexibility (i.e., easier to add more components) and visualization (i.e., a chart showing what feeds into/out of what); an example. There is an R-based book: System Dynamics Modelling with R.

Christmas books for 2023

This year’s Christmas book list, based on what I read this year, and for the first time including a blog series that I’m sure will eventually appear in book form.

“To Explain the World: The discovery of modern science” by Steven Weinberg, 2015. Unless you know that Steven Weinberg won a physics Nobel prize, this looks like just another history of science book (the preface tells us that he also taught a history of science course for over a decade). This book is written by a scientist who appears to have read the original material (I’m assuming in translation), who puts the discoveries and the scientists involved at the center of the discussion; this is not the usual historian who sprinkles in a bit about science, while discussing the cast of period characters. For instance, I had never understood why the work of Galileo was considered to be so important (almost as a footnote, historians list a few discoveries of his). Weinberg devotes pages to discussing Galileo’s many discoveries (his mathematics was a bit behind the times, continuing to use a geometric approach, rather than the newer algebraic techniques), and I now have a good appreciation of why Galileo is rated so highly by scientists down the ages.

Chapter 2 of “When Old Technologies Were New: Thinking about electric communication in the late nineteenth century” by Carolyn Marvin, 1988. The book is worth buying just for chapter 2, which contains many hilarious examples of how the newly introduced telephone threw a spanner in to the workings of the social etiquette of the class of person who could afford to install one. Suitors could talk to daughters without other family members being present, public phone booths allowed any class of person to be connected directly to the man of the house, and when phone companies started publishing publicly available directories containing subscriber name/address/number, WELL!?! In the US there were 1 million telephones installed by 1899, and subscribers were sometimes able to listen to live musical concerts and sports events (commercial radio broadcasting did not start until the 1920s).

“The Grand Strategy of the Roman Empire: From the first century A.D. to the third” by Edward Luttwak, 1976; h/t Mr. and Mrs. Psmith’s review. I cannot improve or add to John Psmith’s review. The book contains more details; the review captures the essence. On a related note, for the hard core data scientists out there: Early Imperial Roman army campaigning: observations on marching metrics, energy expenditure and the building of marching camps.

“Innovation and Market Structure: Lessons from the computer and semiconductor industries” by Nancy S. Dorfman, 1987. An economic perspective on the business of making and selling computers, from the mid-1940s to the mid-1980s. Lots of insights, (some) data, and specific examples (for the most part, the historians of computing are, well, historians who can craft a good narrative, but the insights are often lacking). The references led me to: Mancke, Fisher, and McKie, who condensed the 100K+ pages of trial transcript from the 1969–1982 IBM antitrust trial down to 1,500+ pages of Historical narrative.

Worshipping the Future by Helen Dale and Lorenzo Warby. Is “… a series of essays dissecting the social mechanisms that have led to the strange and disorienting times in which we live.” The series is a well written analysis that attempts to “… understand mechanisms of how and the why, …” of Woke.

Honourable mentions

“The Big Con: The story of the confidence man and the confidence trick” by David W. Maurer (source material for the film The Sting).

“Cubed: A secret history of the workplace” by Nikil Saval.

Software engineering’s F word

I know of two conversation topics that are guaranteed to make software developers feel uncomfortable, and even walk away: 1) complaining about ChatGPT’s refusal to say anything nice about Adolf Hitler, 2) enquiring why so few people are willing to talk about software engineering’s three letter F word (I actually say the F word).

Academics don’t like to use the F word, preferring phrases such as recreational value (and then only for Open source; earlier this year, I used hedonistic activity in the title of a blog post). A 2012 review of motivation in open source development found 10, out of 40, papers listing the F word as a motivator (all published in management related venues).

The F word occurs in the title of a 1991 article in the newsletter The Software Practitioner, aimed at professional developers: “Torn Between Fun and Tedium” by Bruce Blum. The article appears to be lost, but is discussed in the book software creativity 2.0 by Robert Glass.

Blum assumes that software development work is either Fun or Tedium, and assigns a Fun/Tedium percentage to the various phases of a Waterfall software lifecycle.

Selling the project concept is assigned 100/0 by Blum, (i.e., 100% Fun; me: 80/20), developing the requirements is 50/50 (me: 20/80), top level design is 40/60 (me: 80/20), detailed design 33/67 (me: no idea), programming 75/25 (me: agree), testing 33/67 (me: 75/25), and maintenance 0/100 (me: 25/75).

I regularly have younger developers tell me that they picked a particular new technology because it looked like fun to learn and use; older developers have been through this new technology phase, and sometimes other phases, and have moved on to being more interested in avoiding grief.

Where is the evidence for this fun seeking? Asking developers about fun in their software engineering day job is not socially acceptable, but it is ok to ask about fun in personal projects.

I suspect that managers who have had little contact with working developers would be shocked by the extent to which fun motivates developer decisions; managers who have had to deal with the practicalities of herding developers less so.

Rounding in reported task implementation time

There is lots of evidence that people often pick a round number when estimating the time needed to implement a task. Parkinson’s law suggests that reported actual implementation time will often also be a round number, e.g., report 30 minutes for a task that actually took 28 minutes.

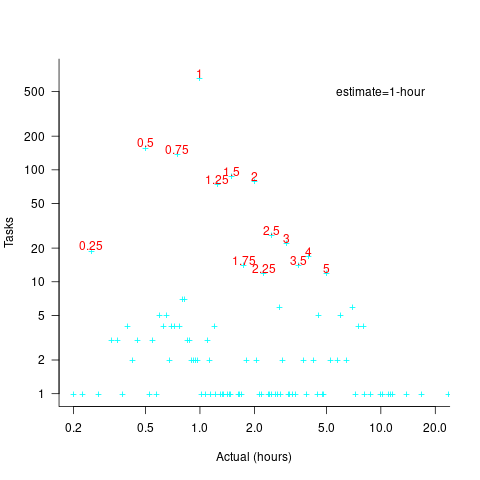

If a task is estimated to take 1 hour, what is the distribution of reported implementation times? The analysis in this article uses the SiP task dataset, and similar patterns occur in other datasets.

The plot below shows the number of tasks having a given reported implementation time, for tasks estimated to take 1 hour, with main peaks labelled in red (reported times rounded to one decimal place and quarter hours; code+data):

With 1-hour estimates, there is limited scope for a wide range of actual times (at least for times less than estimates). The labelled peaks contain 89% of 1-hour estimate tasks (1,525 tasks, 21% less than estimate, 44% equal estimate, 24% greater than estimate).

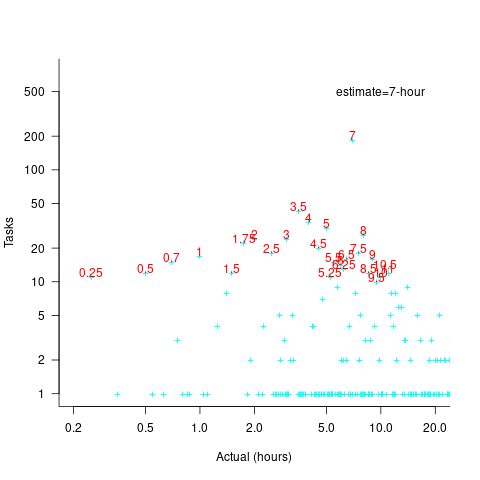

Tasks with larger estimated times are likely to take longer, creating more possible rounding peaks in the implementation time distribution. The plot below shows the number of tasks having a given reported implementation time, for tasks estimated to take 7 hours (i.e., 1 day), with main peaks labelled in red (reported times rounded to one decimal place and quarter hours; code+data):

As expected, there are more peaks and implementation times are distributed over a larger range of values.

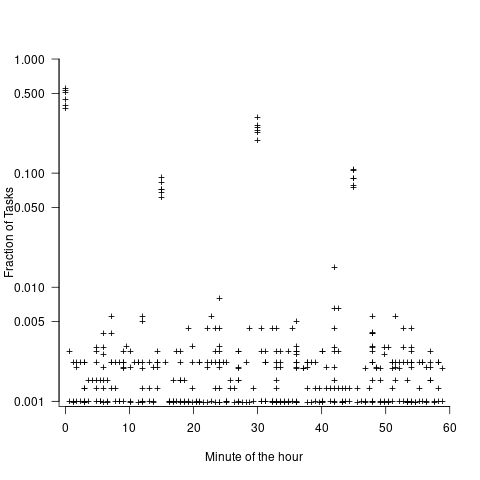

These plots suggest that many actual times are being rounded to 15-minute intervals. The plot below is based on the minute portion of the reported time (i.e., the hour part is ignored), and shows the fraction of tasks, for estimates of 1, 2, 3, 5, 7, and 14 hours, whose minute component of reported time has a given value (code+data):

For estimates of a few hours, around 90% of reported task time is on a 15-minute mark, while for 7- and 14-hour tasks the percentage drops to 80%.
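The 15-minute calculation is straightforward to reproduce. Below is a minimal Python sketch, assuming reported times are available as decimal hours; the sample values are made up for illustration (they are not taken from the SiP dataset), and the function names are hypothetical.

```python
def minute_part(hours):
    """Minute component of a reported time given in decimal hours."""
    total_minutes = round(hours * 60)
    return total_minutes % 60

def fraction_on_quarter_hour(reported_hours):
    """Fraction of reported times whose minute component is 0, 15, 30 or 45."""
    on_mark = sum(1 for h in reported_hours if minute_part(h) % 15 == 0)
    return on_mark / len(reported_hours)

# Hypothetical reported times, in hours, for tasks with the same estimate.
reported = [0.5, 1.0, 1.25, 0.75, 1.1, 2.0, 0.97, 1.5]

print("minute parts:", [minute_part(h) for h in reported])
print("fraction on a 15-minute mark:", fraction_on_quarter_hour(reported))
```

Grouping the reported times by estimate, and applying the same function to each group, would give one fraction per estimate value.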

If staff are manually entering task finish times, then some degree of rounding is to be expected. When the finish time is indirectly calculated, based on the submission of a completed form, there will be some fuzziness in the rounding.

Rereading The Mythical Man-Month

The book The Mythical Man-Month by Fred Brooks, first published in 1975, continues to be widely referenced; my 1995 edition cites over 250K copies in print. In the past I have found it to be a pleasant, relatively content free, read.

Having spent some time analyzing computing data from the 1960s, I thought it would be interesting to reread Brooks in the light of what I had recently learned. I cannot remember when I last read the book, and only keep a copy to be able to check when others cite it as a source.

Each of the 15 chapters, in the 1975 edition, takes the form of a short five/six page management briefing on some project related topic; chapters start with a picture of some work of art on one page, and a short quote from a famous source occupies the opposite page. The 20th anniversary edition adds four chapters, two of which ‘refire’ Brooks’ 1986 paper introducing the term No Silver Bullet (that no single technology will produce an order of magnitude improvement in productivity).

Rereading, I found the 1975 contents to be sufficiently non-specific that my newly acquired knowledge did not change anything. It was a pleasant read, various ideas and some data points are presented, the work of others is covered and cited, a few points are briefly summarised and the chapter ends. The added chapters have a different character than the earlier ones, being more detailed in their discussion and more specific in suggesting outcomes. The ‘No Silver Bullet’ material dismisses some of the various claimed discoveries of a silver bullet.

Why did the book sell so well?

The material is an easy read, and given that no solutions are heavily pushed, there is little to disagree with.

Being involved on a project for the first time can be a confusing experience, and even more experienced people get lost. Brooks can provide solace through his calm presentation of project behaviors as stuff that happens.

What project experience did Brooks have?

Brooks’ PhD thesis The Analytic Design of Automatic Data Processing Systems was completed in 1956, and, aged 25, he joined IBM that year. He was project manager for System/360 from its inception in late 1961 to its launch in April 1964. He managed the development of the operating system OS/360 from February 1964, for a year, before leaving to found the computer science department at the University of North Carolina at Chapel Hill, where he remained.

So Brooks gained a few years of hands-on experience at the start of his career and spent the rest of his life talking about it. A not uncommon career path.

Managing the development of an O/S intended to control a machine containing 16K of memory (i.e., IBM’s System/360 model 30) might not seem like a big deal. Teams of half-a-dozen good people had implemented projects like this since the 1950s. However, large companies create large teams, operating over multiple sites, with ever-changing requirements and interfaces, changing hardware, all with added input from marketing (IBM was/is a sales-driven organization). I suspect that the actual coding effort was a rounding error, compared to the time spent in meetings and on telephone calls.

Brooks looked after the management, and Gene Amdahl looked after the technical stuff (for lots of details see IBM’s 360 and early 370 systems by Pugh, Johnson, and Palmer).

Brooks was obviously a very capable manager. Did the OS/360 project burn him out?

Analyzing mainframe data in light of the IBM antitrust cases

Issues surrounding the decades of decreasing cost/increasing performance of microcomputers, powered by Intel’s x86/Pentium have been extensively analysed. There has been a lot of analysis of pre-Intel computers, in particular Mainframe computers. Is there any major difference?

Mainframes certainly got a lot faster throughout the 1960s and 1970s, and the Computers and Automation monthly census shows substantial decreases in rental prices (the OCR error rate is not yet low enough for me to be willing to spend time extracting 300 pages of tabular data).

During the 1960s and 1970s the computer market was dominated by IBM, whose market share was over 70% (its nearest rivals, Sperry Rand, Honeywell, Control Data, General Electric, RCA, Burroughs, and NCR, were known as the seven dwarfs).

While some papers analyzing the mainframe market do mention that there was an antitrust case against IBM, most don’t mention it. There are some interesting papers on the evolution of families of IBM products, but how should this analysis be interpreted in light of IBM’s dominant market position?

For me, the issue of how to approach the interpretation of IBM mainframe cost/performance/sales data is provided by the book The U.S. Computer Industry: A Study of Market Power by Gerald Brock.

Brock compares the expected performance of a dominant company in a hypothetical computer industry, where anticompetitive practices do not occur, with IBM’s performance in the real world. There were a variety of mismatches (multiple antitrust actions have found IBM guilty of abusing its dominant market power).

Any abuse of market power by IBM does not impact the analysis of computer related issues about what happened in the 1950s/1960s/1970s, but the possibility of this behavior introduces uncertainty into any analysis of why things happened.

Intel also had its share of antitrust cases, which will be of interest to people analysing the x86/Pentium compatible processor market.

Evidence-based Software Engineering book: the last year

It’s now three years since my book, Evidence-based Software Engineering: based on the publicly available data, was released. What has happened in the last year, since I wrote about the first two years, and what might happen in the next year or so?

There is now a Discord channel for discussing evidence-based software engineering. Blog readers and anyone with an interest in the subject are most welcome.

I keep a copy of software related papers that I think might be worth looking at again, and have been meaning to make this list public. A question by ysch, a Discord channel member, asked after ways of checking whether a software paper was worth reading. This prompted me to create a Github repo containing the titles of these 7,756 saved papers, along with some data related annotations. On the more general question of paper quality, my view is that most papers are not worth reading, with a few being very well worth reading. People have to develop techniques for rapidly filtering out the high volume of drivel; techniques I use, and understanding the publication ecosystem.

This last year saw the sudden arrival of a new tool, LLMs. My experience with using ChatGPT (and other such LLMs) as an evidence-based research tool is that the answers are too generic or just plain wrong (for several months, one LLM reported that I had a degree in Divinity Studies). If I was writing a book, I suspect that they would provide a worthwhile copy-editing service.

I was hoping that the recently released GPT-4 vision model would do high quality text extraction from scanned pdfs, but the quality of output I have received is about the same as traditional OCR-based tools. I expect that the data extraction ability of LLM-based tools will get a lot better, because they are at the start of the learning curve and there is a commercial incentive for them to be a lot better.

An LLM is driven by the token weights learned during training. Roughly speaking, the more training data on a topic, the larger the trained weights for that topic. There is not a lot of data (i.e., text) relating to evidence-based software engineering, compared to the huge quantities available for some topics, so responses are generic and often parrot established folklore. The following image was generated by DALL-E3:

There is a tale of software product evolution waiting to be told via the data contained in magazine adverts; the magazines are on bitsavers, we just need LLMs to be good enough to reliably extract advert contents (currently, too many hallucinations).

The book contents continue to survive almost completely unscathed, primarily because reader feedback continues to be almost non-existent. Despite the close to 500k downloads (now averaging 4k-5k downloads per month, from the logs I have, with the mobile friendly version around 10%), most people I meet have not heard of the book. The concept of an evidence-based approach to software engineering continues to be met with blank looks, although a commonly cited listener use case for the book’s data is validating a pet theory (my suggestion that the data may show their pet theory to be wrong is not appreciated).

Analysis/data in the following blog posts, from the last 12-months, belongs in the book in some form or other:

Some human biases in conditional reasoning

Unneeded requirements implemented in Waterfall & Agile

Analysis of Cost Performance Index for 338 projects

Evaluating Story point estimation error

Frequency of non-linear relationships in software engineering data

Analysis of when refactoring becomes cost-effective

An evidence-based software engineering book from 2002

Perturbed expressions may ‘recover’

Predicting the size of the Linux kernel binary

Local variable naming: some previously unexplored factors

Optimal function length: an analysis of the cited data

Some data on the size of Cobol programs/paragraphs

Hardware/Software cost ratio folklore

Criteria for increased productivity investment

Likelihood of encountering a given sequence of statements

Relative performance of computers from the 1950s/60s/70s

What was the range of performance of computers introduced in the 1950s, 1960s and 1970s, and what was the annual rate of increase?

People have been measuring computer performance since they were first created, and thanks to the Internet Archive the published results are sometimes available today. The catch is that performance was often measured using different benchmarks. Fortunately, a few benchmarks were run on many systems, and in a few cases different benchmarks were run on the same system.

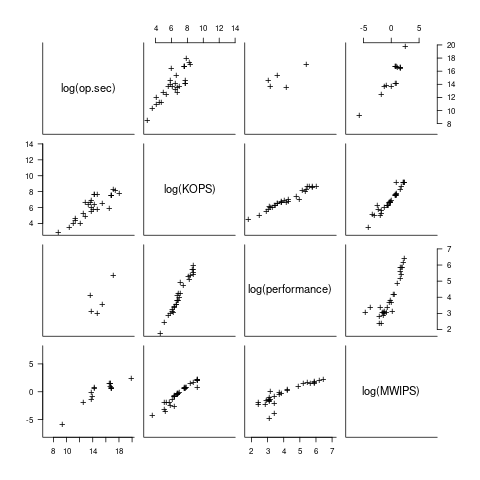

I have found published data on four distinct system performance estimation models, with each applied to 100+ systems (a total of 1,306 systems, of which 1,111 are unique). There is around a 20% overlap between systems across pairs of models, i.e., multiple models applied to the same system. The plot below shows the reported performance for pairs of estimates for the same system (code+data):

The relative performance relationship between pairs of different estimation models for the same system is linear (on a log scale).

Each of the models aims to produce a result that is representative of typical programs, i.e., be of use to people trying to decide which system to buy.

- Kenneth Knight built a structural model, based on 30 or so system characteristics, such as time to perform various arithmetic operations and I/O time; plugging in the values for a system produced a performance estimate. These characteristics were weighted based on measurements of scientific and commercial applications, to calculate a value that was representative of scientific or commercial operation. The Knight data appears in two magazine articles analysing systems from the 1950s and 1960s (the 310 rows are discussed in an earlier post), and the 1985 paper “A functional and structural measurement of technology”, containing data from the late 1960s and 1970s (120 rows).

- Ein-Dor and Feldmesser also built a structural model, based on the characteristics of 209 systems introduced between 1981 and 1984.

- The November 1980 Datamation article by Edward Lias lists what he called the KOPS (thousands of operations per second, i.e., MIPS for slower systems) value for 237 systems. Similar to the Knight and Ein-Dor data, the calculated value is based on weighting various cpu instruction timings.

- The Whetstone benchmark is based on running a particular program on a system, and recording its performance; this benchmark was designed to be representative of scientific and engineering applications, i.e., floating-point intensive. The design of this benchmark was the subject of last week’s post. I extracted 504 results from Roy Longbottom’s extensive collection of Whetstone results going back to the mid-1960s.

While the Whetstone benchmark was originally designed as an Algol 60 program that was representative of scientific applications written in Algol, only 5% of the results used this version of the benchmark; 85% of the results used the Fortran version. Fitting a regression model to the data finds that the Fortran version produced higher results than the Algol 60 version (which would encourage vendors to use the Fortran version). To ensure consistency of the Whetstone results, only those using the Fortran benchmark are used in this analysis.

A fifth dataset is the Dhrystone benchmark, which followed in the footsteps of the Whetstone benchmark, but targeted integer-based applications, i.e., no floating-point. First published in 1984, most of the Dhrystone results apply to more recent systems than the other benchmarks. This code+data contains the 328 results listed by the Performance Database Server.

Sometimes slightly different system names appear in the published results. I used the system names appearing in the Computers Models Database as the definitive names. It is possible that a few misspelled system names remain in the data (the possible impact is not matching systems up across models); please let me know if you spot any.

What is the best statistical technique to use to aggregate results from multiple models into a single relative performance value?

I came up with various possibilities, none of which looked that good, and even posted a question on Cross Validated (no replies yet).

Asking on the Evidence-based software engineering Discord channel produced a helpful reply from Neal Fultz, i.e., use the random effects model: lmer(log(metric) ~ (1|System)+(1|Bench), data=Sall_clean) ; after trying lots of other more complicated approaches, I would probably have eventually gotten around to using this approach.
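To show what the model formula is estimating, here is a minimal Python sketch of a simplified stand-in: an additive model on the log scale, log(metric) = mu + system effect + benchmark effect, fitted by alternately averaging residuals (backfitting). This treats the effects as fixed rather than random, so it is not equivalent to the lmer call above (in particular, there is no shrinkage of the estimates); the system names, benchmark names, and values are all hypothetical.

```python
import math

def fit_additive_log(results, sweeps=100):
    """Fit log(metric) = mu + sys_eff[system] + bench_eff[bench] by backfitting.

    results is a list of (system, bench, metric) tuples; not every system
    needs a result for every benchmark, but the design must be connected.
    """
    logs = [(s, b, math.log(m)) for s, b, m in results]
    mu = sum(v for _, _, v in logs) / len(logs)
    sys_eff = {s: 0.0 for s, _, _ in logs}
    bench_eff = {b: 0.0 for _, b, _ in logs}
    for _ in range(sweeps):
        # System effect: mean residual after removing mu and benchmark effects.
        for s in sys_eff:
            res = [v - mu - bench_eff[b] for s2, b, v in logs if s2 == s]
            sys_eff[s] = sum(res) / len(res)
        # Benchmark effect: mean residual after removing mu and system effects.
        for b in bench_eff:
            res = [v - mu - sys_eff[s] for s, b2, v in logs if b2 == b]
            bench_eff[b] = sum(res) / len(res)
    return mu, sys_eff, bench_eff

# Hypothetical results: BenchY reports values 10 times larger than BenchX,
# SysB is twice as fast as SysA, and SysC is twice as fast as SysB.
results = [
    ("SysA", "BenchX", 1.0),
    ("SysB", "BenchX", 2.0),
    ("SysB", "BenchY", 20.0),
    ("SysC", "BenchY", 40.0),
]

mu, sys_eff, bench_eff = fit_additive_log(results)
relative = {s: math.exp(e - sys_eff["SysA"]) for s, e in sys_eff.items()}
print(relative)
```

SysA and SysC never share a benchmark, yet their relative performance is recovered via SysB, which appears under both; this is the role the overlap between models plays in the real data.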

Does this random effects model produce reliable values?

I don’t have a good idea how to evaluate the fitted model. Looking at pairs of systems where I know which is faster, the relative model values are consistent with what I know.

A csv of the calculated system relative performance values. I have yet to find a reliable way of estimating confidence bounds on these values.

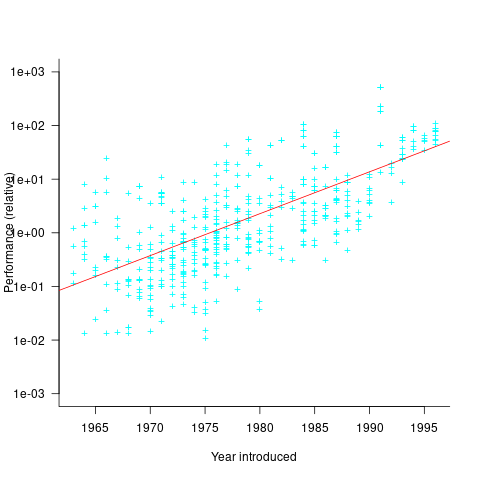

The plot below shows the performance of systems introduced in a given year, on a relative scale, red line is a fitted exponential model (a factor of 5.5 faster, annually; code+data):
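An exponential model of this kind can be fitted by regressing the log of performance on year, with the annual growth factor being the exponential of the slope. The following is a minimal Python sketch using ordinary least squares on synthetic data; the years and the 1.5 growth factor are made-up values used to check the fitting code, and are not the values from the analysis.

```python
import math

def fit_exponential(years, perf):
    """Least-squares fit of log(perf) = intercept + slope * year."""
    n = len(years)
    logs = [math.log(p) for p in perf]
    mean_x = sum(years) / n
    mean_y = sum(logs) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, logs))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

# Synthetic data: performance grows by a factor of 1.5 every year.
years = [1955, 1960, 1965, 1970, 1975]
perf = [1.5 ** (y - 1955) for y in years]

intercept, slope = fit_exponential(years, perf)
annual_factor = math.exp(slope)
print("fitted annual growth factor:", annual_factor)
```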

If you know of a more effective way of analysing this data, or any other published data on system benchmarks for these decades, please let me know.

Design of the Whetstone benchmark

The Whetstone benchmark was once a widely cited measure of computer performance. This benchmark consisted of a single program, originally designed for Algol 60 based applications, and later translated to other languages (over 85% of published results used Fortran). The source used as representative of typical user programs came from scientific applications, which have some characteristics that are very non-representative of non-scientific applications, e.g., use of floating-point, and proportionally more multiplications and multidimensional array accesses. The Dhrystone benchmark was later designed with the intent of being representative of a broader range of applications.

While rooting around for Whetstone result data, I discovered the book Algol 60 Compilation and Assessment by Brian Wichmann. Despite knowing Brian for 25 years and being very familiar with his work on compiler validation, I had never heard of this book (Knuth’s An Empirical Study of Fortran Programs has sucked up all the oxygen in this niche).

As expected, this 1973 book has a very 1960s model of cpu/compiler behavior, much the same as MIX, the idealised computer used by Knuth for the first 30-years of The Art of Computer Programming.

The Whetstone world view is of a WYSIWYG compiler (i.e., each source statement maps to the obvious machine code), and cpu instructions that always take the same number of clock cycles to execute (the cpu/memory performance ratio had not yet moved far from unity, for many machines).

Compiler optimization is all about trying not to generate code, and special casing to eke out many small savings; post-1970 compilers tried hard not to be WYSIWYG. Showing compiler correctness is much simplified by WYSIWYG code generation.

Today, there are application domains where the 1960s machine model still holds. Low power embedded systems may have cpu/memory performance ratios close to unity, and predictable instruction execution times (estimating worst-case execution time is a minor research field).

Creating a representative usage-based benchmark requires detailed runtime data on what the chosen representative programs are doing. Brian modified the Whetstone Algol interpreter to count how many times each virtual machine op-code was executed (see the report Some Statistics from ALGOL Programs for more information).

The modified Algol interpreter was installed on the KDF9 at the National Physical Laboratory and Oxford University, in the late 1960s. Data from 949 programs was collected; the average number of operations per program was 152,000.

The op-codes need to be mapped to Algol statements, to create a benchmark program whose compiled form executes the appropriate proportion of op-codes. Some op-code sequences map directly to statements, e.g., Ld addrof x, Ld value y, Store, maps to the statement: x:=y;.

Counts of occurrences of each language construct in the source of the representative programs provides lots of information about the proportions of the basic building blocks. It’s ‘just’ a matter of sorting out the loop counts.

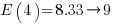

For me, the most interesting part of the book is chapter 2, which attempts to measure the execution time of 40+ different statements running on 36 different machines. The timing model is $T_{ij} = S_i \times M_j \times R_{ij}$, where $S_i$ is the $i$‘th statement, $M_j$ is the $j$‘th machine, $R_{ij}$ an adjustment factor intended to be as close to one as possible, and $T_{ij}$ is execution time. The book lists some of the practical issues with this model, from the analysis of timing data from current machines, e.g., the impact of different compilers, and particular architecture features having a big performance impact on some kinds of statements.

The table below shows statement execution time, in microseconds, for the corresponding computer and statement (* indicates an estimate; the book contains timings on 36 systems):

| ATLAS | MU5 | 1906A | RRE Algol 68 | B5500 | Statement |
|---|---|---|---|---|---|
| 6.0 | 0.52 | 1.4 | 14.0 | 12.1 | x:=1.0 |
| 6.0 | 0.52 | 1.3 | 54.0 | 8.1 | x:=1 |
| 6.0 | 0.52 | 1.4 | *12.6 | 11.6 | x:=y |
| 9.0 | 0.62 | 2.0 | 23.0 | 18.8 | x:=y + z |
| 12.0 | 0.82 | 3.2 | 39.0 | 50.0 | x:=y × z |
| 18.0 | 2.02 | 7.2 | 71.0 | 32.5 | x:=y/z |
| 9.0 | 0.52 | 1.0 | 8.0 | 8.1 | k:=1 |
| 18.0 | 0.52 | 0.9 | 121.0 | 25.0 | k:=1.0 |
| 12.0 | 0.62 | 2.5 | 13.0 | 18.8 | k:=l + m |
| 15.0 | 1.07 | 4.9 | 75.0 | 35.0 | k:=l × m |
| 48.0 | 1.66 | 6.7 | 45.0 | 34.6 | k:=l ÷ m |
| 9.0 | 0.52 | 1.9 | 8.0 | 11.6 | k:=l |
| 6.0 | 0.72 | 3.1 | 44.0 | 11.8 | x:=l |
| 18.0 | 0.72 | 8.0 | 122.0 | 26.1 | l:=y |
| 39.0 | 0.82 | 20.3 | 180.0 | 46.6 | x:=y ^ 2 |
| 48.0 | 1.12 | 23.0 | 213.0 | 85.0 | x:=y ^ 3 |
| 120.0 | 10.60 | 55.0 | 978.0 | 1760.0 | x:=y ^ z |
| 21.0 | 0.72 | 1.8 | 22.0 | 24.0 | e1[1]:=1 |
| 27.0 | 1.37 | 1.9 | 54.0 | 42.8 | e1[1, 1]:=1 |
| 33.0 | 2.02 | 1.9 | 106.0 | 66.6 | e1[1, 1, 1]:=1 |
| 15.0 | 0.72 | 2.4 | 22.0 | 23.5 | l:=e1[1] |
| 45.0 | 1.74 | 0.4 | 52.0 | 22.3 | begin real a; end |
| 96.0 | 2.14 | 80.0 | 242.0 | 2870.0 | begin array a[1:1]; end |
| 96.0 | 2.14 | 86.0 | 232.0 | 2870.0 | begin array a[1:500]; end |
| 156.0 | 2.96 | 106.0 | 352.0 | 8430.0 | begin array a[1:1, 1:1]; end |
| 216.0 | 3.46 | 124.0 | 452.0 | 13000.0 | begin array a[1:1, 1:1, 1:1]; end |
| 42.0 | 1.56 | 3.5 | 16.0 | 31.5 | begin goto abcd; abcd : end |
| 129.0 | 2.08 | 9.4 | 62.0 | 98.3 | begin switch s:=q; goto s[1]; q : end |
| 210.0 | 24.60 | 73.0 | 692.0 | 598.0 | x:=sin(y) |
| 222.0 | 25.00 | 73.0 | 462.0 | 758.0 | x:=cos(y) |
| 84.0 | *0.58 | 17.3 | 22.0 | 14.0 | x:=abs(y) |
| 270.0 | *10.20 | 71.0 | 562.0 | 740.0 | x:=exp(y) |
| 261.0 | *7.30 | 24.7 | 462.0 | 808.0 | x:=ln(y) |
| 246.0 | *6.77 | 73.0 | 432.0 | 605.0 | x:=sqrt(y) |
| 272.0 | *12.90 | 91.0 | 622.0 | 841.0 | x:=arctan(y) |
| 99.0 | *1.38 | 18.7 | 72.0 | 37.5 | x:=sign(y) |
| 99.0 | *2.70 | 24.7 | 152.0 | 41.1 | x:=entier(y) |
| 54.0 | 2.18 | 43.0 | 72.0 | 31.0 | p0 |
| 69.0 | *6.61 | 57.0 | 92.0 | 39.0 | p1(x) |
| 75.0 | *8.28 | 65.0 | 132.0 | 45.0 | p2(x, y) |
| 93.0 | *9.75 | 71.0 | 162.0 | 53.0 | p2(x, y, z) |
| 57.0 | *0.92 | 8.6 | 17.0 | 38.5 | loop time |
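As a rough illustration of the multiplicative timing model (execution time as the product of a per-statement factor, a per-machine factor, and an adjustment factor), the Python sketch below fits the first two factors to four rows of the table and reports the adjustment factor left over for each cell. The fitting method (row and column means of the log timings, which is the least-squares solution for a complete table) is an assumption of this sketch and not necessarily the procedure used in the book; the names `fit_factors`, `stmt`, `mach` and `adjust` are made up for the example.

```python
import math

MACHINES = ["ATLAS", "MU5", "1906A", "RRE Algol 68", "B5500"]

# Four rows copied from the table above (microseconds).
TIMES = {
    "x:=y + z":  [9.0, 0.62, 2.0, 23.0, 18.8],
    "x:=y × z":  [12.0, 0.82, 3.2, 39.0, 50.0],
    "k:=l + m":  [12.0, 0.62, 2.5, 13.0, 18.8],
    "x:=sin(y)": [210.0, 24.60, 73.0, 692.0, 598.0],
}

def fit_factors(times):
    """Fit time ~= stmt * mach by averaging log timings (assumed method)."""
    logs = {s: [math.log(t) for t in row] for s, row in times.items()}
    n_stmt = len(logs)
    n_mach = len(next(iter(logs.values())))
    grand = sum(sum(row) for row in logs.values()) / (n_stmt * n_mach)
    # Statement factor: row mean relative to the grand mean (dimensionless).
    stmt = {s: math.exp(sum(row) / n_mach - grand) for s, row in logs.items()}
    # Machine factor: column mean (carries the microsecond scale).
    mach = [math.exp(sum(logs[s][j] for s in logs) / n_stmt)
            for j in range(n_mach)]
    # Adjustment factor: whatever is left over; ideally close to one.
    adjust = {s: [times[s][j] / (stmt[s] * mach[j]) for j in range(n_mach)]
              for s in times}
    return stmt, mach, adjust

stmt, mach, adjust = fit_factors(TIMES)
print(" " * 10, " ".join(f"{m[:5]:>5s}" for m in MACHINES))
for s, row in adjust.items():
    print(f"{s:10s}", " ".join(f"{r:5.2f}" for r in row))
```

Adjustment factors that sit far from one mark statement/machine pairs that a two-factor model cannot explain, which is the kind of disparity the book attributes to compilers and architecture features.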

The performance studies found wide disparities between expected and observed timings. Chapter 9 does a deep dive on six Algol compilers.

A lot of work and idealism went into gathering the data for this book (36 systems!). Unfortunately, the computer performance model was already noticeably inaccurate, and advances in compiler optimization and cpu design meant that the accuracy was only going to get worse. Anyway, there is lots of interesting performance data on 1960s-era computers.

Whetstone lived on into the 1990s, when the SPEC benchmark started its rise to benchmark dominance.

Likelihood of encountering a given sequence of statements

How many lines of code have to be read to be likely to encounter every meaningful sequence of $s$ statements (a non-meaningful sequence would be the three statements break;break;break;)?

First, it is necessary to work out the likelihood of encountering a given sequence of statements within $N$ lines of code.

If we just consider statements, then the following shows the percentage occurrence of the four kinds of C language statements (detailed usage information):

| Statement | % occurrence |
|---|---|
| expression-stmt | 60.3 |
| selection-stmt | 21.3 |
| jump-stmt | 15.0 |
| iteration-stmt | 3.4 |

The following analysis assumes that one statement occupies one line (I cannot locate data on percentage of statements spread over multiple lines).

An upper estimate can be obtained by treating this as an instance of the Coupon collector’s problem (which assumes that all items are equally likely), i.e., treating each sequence of $s$ statements as a coupon.

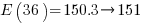

The average number of items that need to be processed before encountering all $n$ distinct items is: $n H_n$, where $H_n$ is the n-th harmonic number. When we are interested in at least one instance of every kind of C statement (i.e., the four listed above), we have: $4 H_4 = 4(1 + 1/2 + 1/3 + 1/4) \approx 8.3$.

There are $4^3 = 64$ distinct sequences of three statements, but only $3^2 \times 4 = 36$ meaningful sequences (when statements are not labelled, a jump-stmt can only appear at the end of a sequence). If we treat each of these 36 sequences as distinct, then $36 H_{36} \approx 150.3$, i.e., 151 3-line sequences (rounding up), or 453 LOC.
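The coupon collector arithmetic can be checked with a few lines of Python. This is a minimal sketch: the helper names (`harmonic`, `expected_draws`, `meaningful`) are invented here, and the only rule encoded for a "meaningful" sequence is the one stated above, that an unlabelled jump-stmt may only come last.

```python
from fractions import Fraction
from itertools import product
import math

KINDS = ["expression-stmt", "selection-stmt", "jump-stmt", "iteration-stmt"]

def harmonic(n):
    """n-th harmonic number, as an exact fraction."""
    return sum(Fraction(1, k) for k in range(1, n + 1))

def expected_draws(n):
    """Coupon collector: expected draws to see all n equally likely items."""
    return n * harmonic(n)

def meaningful(seq):
    """An unlabelled jump-stmt may only appear as the last statement."""
    return "jump-stmt" not in seq[:-1]

all_seqs = list(product(KINDS, repeat=3))
good_seqs = [s for s in all_seqs if meaningful(s)]
print(len(all_seqs), len(good_seqs))            # 64 36

print(float(expected_draws(len(KINDS))))        # 25/3, roughly 8.3

seqs_needed = math.ceil(expected_draws(len(good_seqs)))
print(seqs_needed, 3 * seqs_needed)             # 151 453
```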

This approach both under- and over-estimates.

One or more statements may be part of different distinct sequences (causing the coupon approach to overestimate LOC). For instance, the following sequence of four statements:

| Statement |
|---|
| expression-stmt |
| selection-stmt |
| expression-stmt |
| expression-stmt |

contains two distinct sequences of three statements, i.e., the following two sequences:

| First sequence | Second sequence |
|---|---|
| expression-stmt | selection-stmt |
| selection-stmt | expression-stmt |
| expression-stmt | expression-stmt |
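The overlap is easy to see by sliding a three-statement window along the lines. A small Python sketch (`windows` is a hypothetical helper, not part of any linked script):

```python
def windows(statements, length):
    """All contiguous runs of `length` statements, in order of appearance."""
    return [tuple(statements[i:i + length])
            for i in range(len(statements) - length + 1)]

four_lines = ["expression-stmt", "selection-stmt",
              "expression-stmt", "expression-stmt"]

for seq in windows(four_lines, 3):
    print(seq)

# 100 lines hold 98 overlapping three-statement windows, where the
# coupon approach counts them as 33 separate 3-line sequences.
print(len(windows(list(range(100)), 3)))
```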

There is a factor of 20-to-1 in percentage occurrence between the most/least common kind of statement. Does subdividing each kind of statement reduce this difference?

If expression-stmt, selection-stmt, and iteration-stmt are subdivided into their commonly occurring forms, we get the following percentages (where -other is the subdivision holding all cases whose occurrence is less than 1%, and the text to the right of sls- indicates the condition in an if-statement; data):

| Statement | % occurrence |
|---|---|
| exs-func-call | 22.3 |
| exs-object=object | 9.6 |
| exs-object=func-call | 6.0 |
| exs-object=constant | 4.2 |
| exs-object_v++ | 2.4 |
| exs-other | 15.7 |
| sls-object | 3.3 |
| sls-object==object | 1.9 |
| sls-!object | 1.6 |
| sls-func-call | 1.6 |
| sls-expression | 1.2 |
| sls-other | 11.7 |
| jump-stmt | 15.0 |
| its-for | 2.1 |
| its-while | 1.1 |
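One way to answer the question is to compare the ratio between the largest and smallest percentage before and after subdividing. A minimal Python sketch, with the figures copied from the two tables in this post (`spread` is a name made up for this example, and the -other rows are left in even though they aggregate several rarer forms):

```python
coarse = {
    "expression-stmt": 60.3, "selection-stmt": 21.3,
    "jump-stmt": 15.0, "iteration-stmt": 3.4,
}

fine = {
    "exs-func-call": 22.3, "exs-object=object": 9.6,
    "exs-object=func-call": 6.0, "exs-object=constant": 4.2,
    "exs-object_v++": 2.4, "exs-other": 15.7,
    "sls-object": 3.3, "sls-object==object": 1.9, "sls-!object": 1.6,
    "sls-func-call": 1.6, "sls-expression": 1.2, "sls-other": 11.7,
    "jump-stmt": 15.0, "its-for": 2.1, "its-while": 1.1,
}

def spread(percentages):
    """Ratio of the most common to the least common entry."""
    return max(percentages.values()) / min(percentages.values())

print(f"coarse: {spread(coarse):.1f}-to-1")   # coarse: 17.7-to-1
print(f"fine:   {spread(fine):.1f}-to-1")     # fine:   20.3-to-1
```

On these figures the spread stays at roughly 20-to-1, so subdividing does not bring the kinds of statement any closer to being equally likely.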

Function calls could be further broken down by number of arguments, but this would not have much impact because zero and one arguments are very common.

A more accurate model of the problem is needed.

A Markov chain approach handles both overlapping sequences, and statements having difference occurrence probabilities. For a sequence of length  , the calculation involves an

, the calculation involves an  by

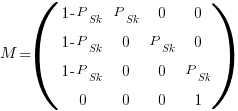

by  matrix. For a sequence of three of the same kind of statement (chosen because ‘same kind’ of sequences are least likely to match, for a given length), the transition matrix is:

matrix. For a sequence of three of the same kind of statement (chosen because ‘same kind’ of sequences are least likely to match, for a given length), the transition matrix is:

where  is the probability that statement

is the probability that statement  will occur. The last row is the absorbing state. For the general case, see: Pattern Markov chains: Optimal Markov chain embedding through deterministic finite automata.

will occur. The last row is the absorbing state. For the general case, see: Pattern Markov chains: Optimal Markov chain embedding through deterministic finite automata.

To calculate the probability that a sequence of the same kind of statement, of length $s$, occurs within a sequence of $N$ statements, this transition matrix is multiplied $N$ times (i.e., raised to the power $N$). The following code is an implementation in R (python script handling the general case):

```r
seq_prob = function(N, s_len, Sk)
{
Sp=rep(Sk, s_len)

P=matrix(0, nrow = s_len+1, ncol = s_len+1) # Transition matrix
P[ , 1]=1-c(Sp, 1)             # Probability of not occurring: first column
op=cbind(1:s_len, (1:s_len)+1) # superdiagonal for occurrence probabilities
P[op]=Sp                       # assign occurrence probabilities
P[s_len+1, s_len+1]=1          # absorbing state

R=P                            # result value
if (N > 1)                     # 2:N counts down when N is 1
   for (n in 2:N)
      R=R %*% P                # matrix multiply

return(R)
}

# Calculate probability for N equiprobable occurrences
# Result in last column of first row
N = 100
seq_len=3
sk=0.01

likelihood=seq_prob(N, seq_len, sk)[1, seq_len+1]
```

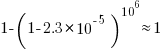

If the occurrence likelihood of $S_k$ is 0.01 (i.e., 1%), then the likelihood of encountering a sequence of three such statements in a sequence of 3 lines is $10^{-6}$ (i.e., 0.0001%), while for a sequence of 100 lines it is $9.7 \times 10^{-5}$.
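The same-kind calculation can be cross-checked without a matrix library by pushing a probability vector through the chain one line at a time, which is equivalent to reading the first row of the matrix power. This Python sketch covers only the same-kind case; `run_probability` and its parameter names are assumptions of this example, not the linked script.

```python
def run_probability(n_lines, run_len, p):
    """Probability that run_len consecutive lines all hold a statement
    of occurrence probability p, somewhere within n_lines lines."""
    # state[i]: probability of currently being i statements into a run;
    # state[run_len] is the absorbing "run already seen" state.
    state = [1.0] + [0.0] * run_len
    for _ in range(n_lines):
        nxt = [0.0] * (run_len + 1)
        nxt[run_len] = state[run_len]         # absorbed stays absorbed
        for i in range(run_len):
            nxt[i + 1] += state[i] * p        # run extended by one line
            nxt[0] += state[i] * (1.0 - p)    # run broken, start again
        state = nxt
    return state[run_len]

print(run_probability(3, 3, 0.01))     # the 3-line case, p cubed
print(run_probability(100, 3, 0.01))   # the 100-line case
```

Working with a vector rather than the full matrix keeps the cost at `n_lines * run_len` multiplications, which matters once the calculation is repeated for every function length.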

The number of statements contained in a function varies. To calculate the likelihood of encountering a particular sequence of three statements in a program, or collection of programs, we need to find the likelihood over all function lengths, adjusting for the probability of encountering functions containing a given number of statements.

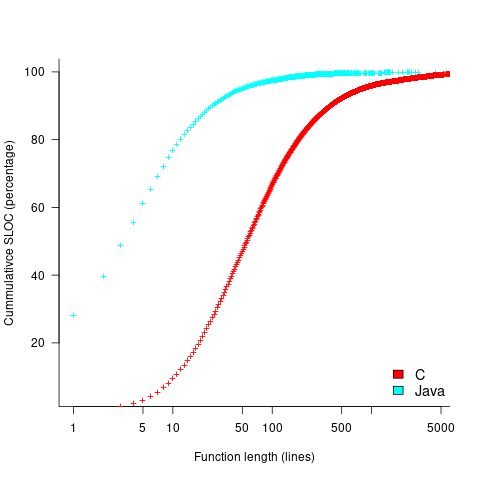

The plot below shows the cumulative percentage of code as function LOC increases (data from Landman, Serebrenik, Bouwers and Vinju; plot code):

Calculating the likelihood of encountering a given sequence length in a function containing a given number of LOC (ignoring local definitions and blank lines), and then adjusting for the probability of a function containing a given number of LOC, the likelihood of encountering a sequence containing 3-to-10 of the same kind of statement (whose likelihood of occurrence is 1%) is given by the following table (calculation code):

| seq length | Occurrence likelihood |
|---|---|
| 3 | 2.3e-05 |
| 4 | 2.2e-07 |
| 5 | 2.1e-09 |
| 6 | 2.0e-11 |
| 7 | 2.0e-13 |
| 8 | 1.9e-15 |
| 9 | 1.8e-17 |
| 10 | 1.8e-19 |

These values can be used to calculate the likelihood of encountering this ‘1%’ statement sequence in a repo containing $F$ C functions. For instance, in 1 million functions the likelihood of at least one instance of a three ‘1%’ same-kind statement sequence is: $1-(1-2.3 \times 10^{-5})^{10^6} \approx 1$. For a repo containing one billion functions of C, there is an 88% chance of encountering a sequence of five such statements.
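The repo-level figures follow from treating each function as an independent trial, so the chance of at least one occurrence in a given number of functions is one minus the chance of none. A minimal Python sketch using the per-function likelihoods from the table above; `at_least_once` is a name invented for this example, and independence between functions is an assumption.

```python
import math

# Per-function likelihoods copied from the table above ('1%' statements).
PER_FUNCTION = {3: 2.3e-05, 4: 2.2e-07, 5: 2.1e-09, 6: 2.0e-11}

def at_least_once(p, functions):
    """1 - (1 - p)**functions, computed stably for very small p."""
    return -math.expm1(functions * math.log1p(-p))

print("seq length  1e6 functions  1e9 functions")
for length, p in PER_FUNCTION.items():
    print(f"{length:10d}  {at_least_once(p, 1e6):13.3g}"
          f"  {at_least_once(p, 1e9):13.3g}")
```

Using `log1p` and `expm1` avoids the rounding loss that `1 - (1 - p)**functions` suffers when `p` is close to the floating-point resolution.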

The sequence occurrence likelihood for Java will be smaller because Java functions contain fewer LOC.

At the subdivision level of kind-of-statement that has been found to occur in 1% of all statements, sequences up to five long are likely to be encountered at least once in a billion functions, with sequences containing more common statements occurring at a greater rate.

Sequences of kind-of-statements whose occurrence rate is well below 1% are unlikely to be encountered.

This analysis has assumed that the likelihood of occurrence of each statement in a sequence is independent of the other statements in the sequence. For some kind-of-statement this is probably not true, but no data is available.

The pervasive use of common statement sequences enables LLMs to do a good job of predicting what comes next.
