
The age of the Algorithm is long gone

I date the age of the Algorithm from roughly the 1960s to the late 1980s.

During the age of the Algorithm, developers spent a lot of time figuring out the best algorithm to use and writing code to implement algorithms.

Knuth’s The Art of Computer Programming (TAOCP) was the book that everybody consulted to find an algorithm to solve their current problem (wafer-thin paper, containing tiny handwritten corrections and updates, was glued into the library copies of TAOCP held by my undergraduate university; updates to Knuth were news).

Two developments caused the decline of the age of the Algorithm (and the rise of the age of the Ecosystem and the age of the Platform; topics for future posts).

- The rise of Open Source (it was not called this for a while) meant it became less and less necessary to spend lots of time dealing with algorithms; an implementation that was good enough was available. TAOCP is something that developers suggest other people read, while they search for a package that does something close enough to what they want.

- Software systems kept getting larger, driving down the percentage of time developers spent working on algorithms (the bulk of the code in commercially viable systems deals with error handling and the user interface). Algorithms are still essential (like the bolts holding a bridge together), but don’t take up a lot of developer time.

Algorithms are still being invented, and some developers spend most of their time working with algorithms, but peak Algorithm is long gone.

Perhaps academic researchers in software engineering would do more relevant work if they did not spend so much time studying algorithms. But, as several researchers have told me, algorithms are what people in their own and other departments think computing-related research is all about. They remain shackled to the past.

Premium mediocrity is software engineering’s demographic

Software engineering is one of the skills needed to write software, but outside of student coursework is rarely an end in itself. Software is written to do something and the person writing the code needs to know about the something.

If enough people are involved in something, a job title gets created by inserting the appropriate application domain name before ‘software engineer’, e.g., the something software engineer; systems software engineering was one of the first recorded uses of ‘software engineering’, ‘embedded software engineer’ is a common usage and more recently ‘research software engineer’ has been trending.

Customers want the software systems they use to fulfill their needs. Implementing a software system involves figuring out what the needs are, how best to implement them using the available resources and producing usable software; all within a given amount of time and money.

How much software engineering knowledge and skill does a something software engineer need? The obvious answer is: enough to get the something done. Ok, how much is needed to get the something done?

There are only so many hours in a day: what percentage of available time is best spent learning about software engineering, what percentage learning about the something and what percentage doing rather than learning?

The only data I have for answering this question is my own experience of talking to people, from a wide range of business and application areas, whose job includes writing software. My background is compilers (from C to Cobol) and static analysis; my knowledge of end-user application domains is derived from talking to the developers who were using the compilers or static analysis tools I was working on at the time.

I have always been struck by the minimalist knowledge of most developers, when it comes to the programming language they were using. It took a while, but eventually I accepted the obvious: most developers don’t need to know much about the language they are using to get their job done.

By a process that resembles incentivized trial and error, people learn how to write code that does what they want; the compiler does not complain and the output looks ok. For some languages, I used to be able to work out which books a relatively new developer had used to guide their learning, by matching a book’s example code snippets with the code they had written.

This minimalist knowledge approach to programming languages is cost effective because most code is simple and has a short lifetime; the cost of learning lots of language details does not provide enough benefit to be worthwhile.

I am a minimalist-knowledge Python developer. Why would I spend time learning more about the semantics of Python than I need to?

What are the benefits of being a language expert? Compiler writers get paid to learn the ins and outs of a language and I know a few people who became language experts without being compiler writers (they got hooked on knowing the language). I have found it useful for keeping my code simple (I am not tempted to write complicated code, or use obscure constructs, in the mistaken belief that they are better than the simple stuff); it is also useful for figuring out other people’s complicated or obscure usage (created intentionally or accidentally).

These benefits are not enough to convince me to learn more about Python, the language. I am content to wait until I need to learn more.

I have occasionally taught advanced programming courses, aimed at developers with a few years’ experience working in industry. These courses had to include the word ‘advanced’ in their title, otherwise developers with a few years’ experience would never have signed up; ‘advanced’ is a necessary marketing signal (others who have run such courses report the same behavior). The course contents were essentially a review of basic material, with lots of examples; most of those attending did not know enough to follow real advanced material. The courses were really about uncovering and correcting bad habits that attendees had picked up over time (often, a technique was discovered to fix a problem and then subsequently adopted for more general use).

What about general software engineering skills? A minimalist knowledge approach to software engineering is cost effective because most code does not exist long enough to make it worthwhile investing in reducing future maintenance costs. Yes, it is more expensive for those that survive to become commonly used, but think of all the savings from not investing in those that did not survive. Software engineering decisions should not be driven by survivorship bias.

The first requirement of any commercial software system is to attract paying customers. In a rapidly changing market, being first with a saleable product can be the difference between life and death. Minimizing software engineering effort saves time and money (in the short term). If the product is a success, there will be money to pay for what needs to be done; if the product fails, nobody cares. I have seen a lot of software systems that are a commercial success and a complete software engineering mess; successful, well engineered software is less common (or perhaps they just don’t need me to help them out).

Software engineering mediocrity is not only viable, for most people it’s the outcome of making a cost/benefit decision to invest their learning time in the application domain, not software engineering (or computer language).

Of course, nobody wants to be seen as being mediocre (for some people, mediocre overstates their skill level); their behavior is premium mediocre.

There are a few application areas where software engineering skills are needed, e.g., safety critical software and warehouse scale computing. A few high profile cases are hiding the reality that whatever works is cost effective for most software solutions.

Replicating results using research software

The reproducibility of results, from scientific studies, has always been an important issue. Over the last few years software has become a hot topic in reproducibility circles; many researchers have an expectation that if they run the original researcher’s software, they will replicate the results. Reality has not lived up to their expectations and there has been a lot of flapping around looking for a solution. There is a solution, but first, why does the problem exist?

I have spent a lot of time porting software to different compilers (when I was in the compiler business, I wanted everybody to port their applications to the compiler I was working on), different hardware (oh, the days when every major vendor had at least one distinct cpu; not like today where it’s x86, ARM, or embedded), different operating systems (umpteen flavors of Unix, all with slightly different header file contents and library behavior; the Unix wars were good for those in the porting business) and every now and again different languages (by translating).

The Wintel alliance wiped out variation in cpus and operating systems (they can still be found lurking in dark corners) and open source compilers created a near monoculture of compilers for the major languages.

The major software portability problems of 30 years ago have become rather minor. But software portability problems that once tended to be minor (at least for scientific software), have grown to become a major headache. Today’s major portability problems center around evolution of the libraries/packages being used, and longer term the evolution of the language(s) used.

Evolution has created development ecosystems where there are rampant dependencies on specific, or earlier than, or later than versions of libraries/packages. I have been out of the porting business for several decades, but talking to those doing it today, the story is the same; experience in porting from A to B is everything, second best is talking to somebody else who has gone in that direction and third best are the online forums such as Stack Overflow.

Researchers are doing research on who-knows-what and probably have need-to-know knowledge of software and the libraries they are using; the researchers receiving a copy of the original software might know less. What is the probability that the originating and receiving researchers have exactly the same versions of libraries installed? The receiving researcher may not have any of the required libraries installed, and promptly install the latest version (which may well be more recent than the ones used by the original researcher).

A solution is available; distribute a duplicate of the researcher’s complete system as a container, e.g., a Docker image.

Containers solve the replication problem. But these days people want more, they actually think it should be possible to take research software and modify it to suit their own needs. Good luck with that.

Research software is written to solve a problem, often by people writing their first non-trivial programs (i.e., they are novices), with no incentive to produce something that is easy for others to use. When software is written by experienced developers, who have an incentive to build something that is easy for others to work with, multiple reimplementations are often still required to achieve something of decent quality. Creating robust software, that others can use, is very hard.

The problem with software is its invisibility; the difficulties are not visible. When the internal operations are visible, the difficulties of making changes are easier to see.

James Albert Bonsack’s cigarette rolling machine (from Wikipedia).

Type compatibility: name vs structural equivalence

What are the rules for deciding when two types are the same, or compatible?

This question needs to be answered to decide whether an object of type T1 can be assigned to an object of type T2, whether they can be compared, added together, etc.

A wide collection of rules have been combined, by various languages, for type compatibility of scalar types (e.g., integer, character), but for aggregate types two rules dominate: name equivalence, and structural equivalence, or some combination.

With name equivalence, two types are the same if they are declared using the same name (e.g., the name of the tag for a union type, in C).

With structural equivalence, two types are compatible if they have a compatible structure, i.e., their internal contents are type compatible (this requires walking over each field/member checking that it is compatible). For instance, an object declared to have an aggregate type containing three integers is compatible with another aggregate type containing three integers (assuming any type modifiers, such as const’ness or mutability, are the same).
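As a minimal sketch of the difference, the C fragment below declares two aggregate types that each contain three integers. The type names (`struct point`, `struct rgb`), the `TYPE_ID` macro and the `point_as_rgb` function are made up for illustration. Under structural equivalence the two types would be interchangeable; within a single C translation unit they are distinct types, because the tags differ.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical types: same structure (three ints), different tag names. */
struct point { int x, y, z; };
struct rgb   { int r, g, b; };

/* _Generic selects on the static type of its operand, so it reports
   which named type the compiler sees, whatever the layout is. */
#define TYPE_ID(v) _Generic((v), struct point: 1, struct rgb: 2, default: 0)

/* Within one translation unit C uses name equivalence for struct types,
   so `struct rgb c = p;` would be rejected by the compiler.
   Copying the bytes relies on the two layouts matching, which is
   assumed here (and is the case on mainstream implementations). */
struct rgb point_as_rgb(struct point p)
{
    static_assert(sizeof(struct rgb) == sizeof(struct point),
                  "layouts differ in size");
    struct rgb c;
    memcpy(&c, &p, sizeof c);
    return c;
}
```

A language using structural equivalence would accept the direct assignment; here the byte copy makes the rule bending explicit.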

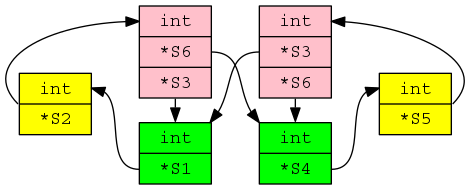

Structural compatibility becomes interesting when pointer types are involved; the pointed to types need to be checked and loops can occur, e.g., type S1 contains a field that has a type pointer to S2, which contains a field that has type pointer to S1.

While most types are easily checked for structural compatibility, every now and again aggregate types connect together in a way that makes it non-trivial to figure out which types are structurally compatible (dot file; needs graphviz).

Handling the edge cases requires maintaining a stack of information about which pairs of types are currently being compared.
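One way of maintaining that information is sketched below, for a toy type representation; this is not how any particular compiler does it. The `struct type` representation, the `kind` enumeration and the function names are all assumptions made for the example. Each comparison of a pair of aggregate types pushes that pair onto a stack; meeting a pair that is already on the stack means a cycle has been followed, and the pair is provisionally treated as compatible (the outer comparison, still in progress, decides the final answer).

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical, minimal type representation. */
enum kind { K_INT, K_CHAR, K_POINTER, K_STRUCT };

struct type {
    enum kind kind;
    const struct type *target;        /* K_POINTER: pointed-to type */
    size_t nfields;                   /* K_STRUCT: number of fields */
    const struct type *const *fields; /* K_STRUCT: field types      */
};

/* One stack entry per pair of aggregate types being compared. */
struct pair {
    const struct type *a, *b;
    const struct pair *outer;         /* next entry down the stack */
};

static bool in_progress(const struct pair *stack,
                        const struct type *a, const struct type *b)
{
    for (; stack != NULL; stack = stack->outer)
        if (stack->a == a && stack->b == b)
            return true;
    return false;
}

static bool equiv(const struct type *a, const struct type *b,
                  const struct pair *stack)
{
    if (a == b)
        return true;
    if (a->kind != b->kind)
        return false;
    switch (a->kind) {
    case K_POINTER:
        return equiv(a->target, b->target, stack);
    case K_STRUCT: {
        if (a->nfields != b->nfields)
            return false;
        if (in_progress(stack, a, b))
            return true;              /* cycle: provisionally compatible */
        struct pair here = { a, b, stack };   /* push this pair */
        for (size_t i = 0; i < a->nfields; i++)
            if (!equiv(a->fields[i], b->fields[i], &here))
                return false;
        return true;                  /* pair is popped on return */
    }
    default:
        return true;                  /* same scalar kind */
    }
}

bool structurally_equivalent(const struct type *a, const struct type *b)
{
    assert(a != NULL && b != NULL);
    return equiv(a, b, NULL);
}
```

The stack lives in the call frames of the recursion, so no separate allocation is needed; type modifiers and field names are ignored to keep the sketch short.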

In C, type compatibility is a combination of name equivalence (for aggregate types in the same translation unit) and structural equivalence (for function types and aggregate types across translation units).

Function types have to use structural equivalence because the type in a function definition is anonymous (the function name that appears in the definition has this anonymous type); there is no name to compare.
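A small illustration, using made-up names (`binop`, `combiner`, `add`, `apply`, `demo`): the function `add` is defined without reference to any named type, yet it can be used through either typedef, because compatibility is decided by the return and parameter types alone. The `IS_COMBINER` macro is an assumption of this sketch, used only to show what the compiler considers the type to be.

```c
#include <assert.h>
#include <stddef.h>

/* Two differently named typedefs for the same function pointer structure. */
typedef int (*binop)(int, int);
typedef int (*combiner)(int, int);

/* Yields 1 when the operand's type is compatible with combiner. */
#define IS_COMBINER(v) _Generic((v), combiner: 1, default: 0)

/* The type of add is anonymous: int (int, int). */
static int add(int a, int b) { return a + b; }

int apply(combiner f, int a, int b)
{
    assert(f != NULL);
    return f(a, b);
}

int demo(void)
{
    binop op = add;           /* matched on return/parameter types */
    return apply(op, 2, 3);   /* a binop is accepted as a combiner, no cast */
}
```

Note that a typedef in C only introduces an alias, so this shows the lack of a name to compare rather than two independently created types.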

Cycles cannot appear in function types (in C), because the identifier being defined in a typedef is not in scope until just after the completion of its declarator. It is not possible to refer to the identifier being defined inside its own definition (e.g., it is not possible to define a function that takes its own type as a parameter; in typedef int (*f)(f); the second f is a redundant parameter name, the scope of the type denoted by the first f begins just before the semicolon).

Structural equivalence across translation units is a hangover from the early days of C, when developers were sloppy when using (or not) tag names (with different people having different rules for using upper/lower case tag names); developers knew what the layout in memory was and created the necessary types for their use of this data.
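By contrast, a struct tag is in scope as soon as it appears, which is why cycles can be built through aggregate types. The sketch below, with hypothetical names (`struct handler`, `handler_fn`, `count_and_increment`), shows a commonly used way of getting a function that receives something able to call it again: the self-reference goes through the tag, not through the function type.

```c
#include <assert.h>
#include <stddef.h>

struct handler;   /* the tag is visible from this point onwards */

/* The function type refers to the struct by tag, not to itself. */
typedef int (*handler_fn)(struct handler *self, int value);

/* The struct closes the cycle by holding a pointer to such a function. */
struct handler {
    handler_fn fn;
    int calls;
};

int count_and_increment(struct handler *self, int value)
{
    assert(self != NULL);
    self->calls++;            /* record that the handler was invoked */
    return value + 1;
}
```

A caller might write `struct handler h = { count_and_increment, 0 };` and then call `h.fn(&h, 41)`.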

Type compatibility via name equivalence is easy to explain and makes it explicit when developers are bending the rules (i.e., pointer to struct casts appear in the code).

Type compatibility via structural equivalence is the wild west, which still exists in some development environments.
