
Overview of broad US data on IT job hiring/firing and quitting

Software developers are employed by organizations and people change jobs, either voluntarily or not; every year a new batch of people join the workforce, e.g., new graduates. Governments track employment activities for a variety of reasons, e.g., tax collection, and monitoring labour supply and demand (for the purposes of planning).

The US Bureau of Labor Statistics publishes a monthly summary of its Job Openings and Labor Turnover Survey. What can be learned about software development employment from this data (description)?

The data starts in December 2000, with each row containing a monthly count of Job Openings, Hires, Quits, Layoffs and Discharges, and totals, along with one of 21 major non-farm industry codes or one of the 5 government codes (the counts are broken out by State). I’m guessing that software developers are assigned the Information code (i.e., 510000), but who is to say that some have not been classified with the code for, say, Construction or Education and health services. The Information code will cover a lot more than just software developers; I’m trading off broad IT coverage for monthly details on employment turnover (software developer specific information is available, but it comes without the turnover information). The Bureau of Labor Statistics makes available a huge quantity of information, and understanding how it all fits together would probably require me to spend several months learning my way around (I have already spent a week or two over the years), so I’m sticking with a prebuilt dataset.

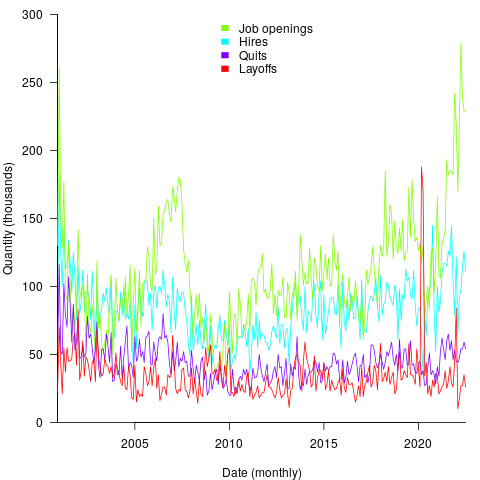

The plot below shows the aggregated monthly counts (i.e., all states) of Job Openings, Hires, Quits, Layoffs and Discharges for the Information industry code (code+data):

The general trend follows the ups and downs of the economy; there is a huge spike in layoffs in early 2020 (the start of COVID), and Job Openings often exceed Hires (which I did not expect).
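The aggregation over states described above can be sketched in a few lines. This is a minimal illustration, not the code behind the plot: the row layout `(month, state, industry_code, hires)`, the made-up counts, and the placeholder code `999999` are all assumptions; only the Information code 510000 comes from the text.

```python
from collections import defaultdict

INFORMATION_CODE = "510000"  # industry code mentioned in the text

# Hypothetical rows: (month, state, industry_code, hires). All counts are made up.
rows = [
    ("2020-03", "CA", "510000", 12),
    ("2020-03", "NY", "510000", 7),
    ("2020-03", "CA", "999999", 30),  # placeholder for some other industry; ignored
    ("2020-04", "CA", "510000", 9),
    ("2020-04", "NY", "510000", 5),
]

# Sum the per-state counts into one value per month for the chosen industry.
monthly_hires = defaultdict(int)
for month, _state, industry_code, hires in rows:
    if industry_code == INFORMATION_CODE:
        monthly_hires[month] += hires

for month in sorted(monthly_hires):
    print(month, monthly_hires[month])
```

The same loop works for Job Openings, Quits, and Layoffs and Discharges by swapping the count column.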

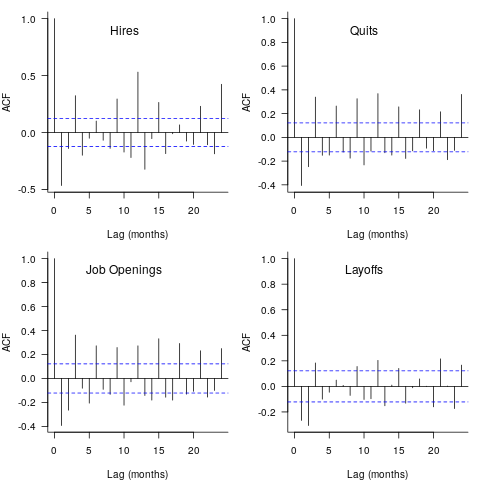

These counts have the form of a time-series, which leads to the questions about repeating patterns in the sequence of values? The plot below shows the autocorrelation of the four employment counts (code+data):

The spike in Hires at 12-months is too large to just be new graduates entering the workforce; perhaps large IT employers have annual reviews for all employees at the same time every year, causing some people to quit and obtain new jobs (Quits has a slightly larger spike at 12-months). Why is there a regular 3-month cycle for Job Openings? The negative correlation in Layoffs at one & two months is explained by companies laying off a batch of workers one month, followed by layoffs in the following two months being lower than usual.
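For readers unfamiliar with autocorrelation, here is a minimal sketch of the calculation, using the usual sample estimator (deviations from the mean, normalised by the lag-0 sum of squares). The function name and the made-up series, which has an artificial bump every twelfth month, are assumptions for illustration; this is not the code used to produce the plot.

```python
from statistics import fmean

def autocorrelation(series, max_lag):
    """Sample autocorrelation of `series` for lags 0..max_lag."""
    n = len(series)
    mean = fmean(series)
    deviations = [x - mean for x in series]
    denom = sum(d * d for d in deviations)
    acf = []
    for lag in range(max_lag + 1):
        # Pair each month with the month `lag` steps later.
        num = sum(deviations[t] * deviations[t + lag] for t in range(n - lag))
        acf.append(num / denom)
    return acf

# Made-up monthly counts: a flat series with a bump every twelfth month.
monthly_hires = [100 + (30 if month % 12 == 0 else 0) for month in range(120)]
acf = autocorrelation(monthly_hires, 24)

# A yearly pattern shows up as a large value at lag 12 and small values elsewhere.
print(round(acf[12], 2), round(acf[6], 2))
```

With real counts, a spike at lag 12 like the one discussed for Hires would stand out in the same way, although noise and trend make it less clean.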

I don’t know much about employment practices, so I won’t speculate any more. Comments welcome.

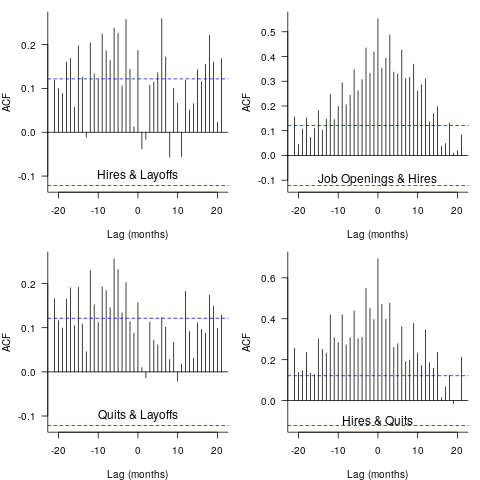

Are there any interest cross-correlations between the pairs of time-series?

The plot below shows four pairs of cross correlations (code+data):

Hires & Layoffs shows a scattered pattern of Hires preceding Layoffs (to be expected), and the bottom left shows there is a pattern of Quits preceding Layoffs (are people searching for steadier employment when layoffs loom?). Top right shows a pattern of Job Openings following Hires (I’m clutching at straws for this; is Hires a proxy for Quits? The cross correlation of Job Openings & Quits does have Job Openings leading), and the bottom right shows the pattern of Hires leading Quits.
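A lead/lag relationship of this kind can be illustrated with a small cross-correlation sketch. The convention used here (a positive lag means the first series leads the second) is my choice, and plotting tools differ on the sign; the two series are made up so that the second is the first delayed by two months.

```python
from statistics import fmean

def cross_correlation(x, y, lag):
    """Correlation between x[t] and y[t + lag]; positive lag means x leads y."""
    n = len(x)
    mean_x, mean_y = fmean(x), fmean(y)
    dx = [v - mean_x for v in x]
    dy = [v - mean_y for v in y]
    denom = (sum(d * d for d in dx) * sum(d * d for d in dy)) ** 0.5
    if lag >= 0:
        num = sum(dx[t] * dy[t + lag] for t in range(n - lag))
    else:
        num = sum(dx[t - lag] * dy[t] for t in range(n + lag))
    return num / denom

# Made-up data: bursts of quits, each followed two months later by a burst of layoffs.
quits = [0] * 30
for month in (3, 10, 12, 21):
    quits[month] = 1
layoffs = [0] * 30
for month in (5, 12, 14, 23):
    layoffs[month] = 1

by_lag = {lag: cross_correlation(quits, layoffs, lag) for lag in range(-6, 7)}
best_lag = max(by_lag, key=by_lag.get)
print(best_lag)  # the lag with the strongest correlation
```

In this toy case the strongest correlation is at a lag of two months, i.e., the first series leads the second.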

Nothing in this analysis surprised me, but then it is rather basic and broad brush. These results are the start of an analysis of the IT employment ecosystem; one that probably won’t progress far because of a lack of data and interest on my part.

Optimal sizing of a product backlog

Developers working on the implementation of a software system will have a list of work that needs to be done, a to-do list, known as the product backlog in Agile.

The Agile development process differs from the Waterfall process in that the list of work items is intentionally incomplete when coding starts (discovery of new work items is an integral part of the Agile process). In a Waterfall process, it is intended that all work items are known before coding starts (as work progresses, new items are invariably discovered).

Complaints are sometimes expressed about the size of a team’s backlog, measured in number of items waiting to be implemented. Are these complaints just grumblings about the amount of work outstanding, or is there an economic cost that increases with the size of the backlog?

If the number of items in the backlog is too low, developers may be left twiddling their expensive thumbs because they have run out of work items to implement.

A parallel is sometimes drawn between items waiting to be implemented in a product backlog and hardware items in a manufacturer’s store waiting to be checked-out for the production line. Hardware occupies space on a shelf, a cost in that the manufacturer has to pay for the building to hold it; another cost is the interest on the money spent to purchase the items sitting in the store.

For over 100 years, people have been analyzing the problem of the optimum number of stock items to order, and at what stock level to place an order. The economic order quantity gives the optimum number of items to reorder, $Q$ (the derivation assumes that the average quantity in stock is $Q/2$), it is given by:

$$Q=\sqrt{\frac{2DK}{h}}$$

where $D$ is the quantity consumed per year, $K$ is the fixed cost per order (e.g., cost of ordering, shipping and handling; not the actual cost of the goods), $h$ is the annual holding cost per item.

What is the likely range of these values for software?

$D$ is around 1,000 per year for a team of ten’ish people working on multiple (related) projects; based on one dataset,

$K$ is the cost associated with the time taken to gather the requirements, i.e., the items to add to the backlog. If we assume that the time taken to gather an item is less than the time taken to implement it (the estimated time taken to implement varies from hours to days), then the average should be less than an hour or two,

$h$: While the cost of a post-it note on a board, or an entry in an online issue tracking system, is effectively zero, there is the time cost of deciding which backlog items should be implemented next, or added to the next Sprint.

If the backlog starts with $Q$ items, and it takes $T$ seconds to decide whether a given item should be implemented next, and $f$ is the fraction of items scanned before one is selected: the average decision time per item is: […] seconds. For example, if […], pulling some numbers out of the air, […], and […], then […], or 5.4 minutes. The Scrum approach of selecting a subset of backlog items to completely implement in a Sprint has a much lower overhead than the one-at-a-time approach.

If we assume that $K/h \approx 1$, then $Q=\sqrt{2 \times 1000} \approx 45$.

An ‘order’ for 45 work items might make sense when dealing with clients who have formal processes in place and are not able to be as proactive as an Agile developer might like, e.g., meetings have to be scheduled in advance, with minutes circulated for agreement.
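The arithmetic behind the 45 figure can be checked with the standard economic order quantity formula, $Q=\sqrt{2DK/h}$. The sketch below assumes the per-order cost and the annual holding cost are equal (a ratio of 1, in whatever time units are used), which is one choice that reproduces the figure; the function and parameter names are mine.

```python
from math import sqrt

def economic_order_quantity(demand_per_year, cost_per_order, holding_cost_per_item):
    """Standard EOQ: the order size minimising ordering plus holding costs."""
    return sqrt(2 * demand_per_year * cost_per_order / holding_cost_per_item)

# About 1,000 work items per year, with an assumed cost ratio K/h of 1.
q = economic_order_quantity(1000, 1.0, 1.0)
print(round(q))  # 45

# The result scales with the square root of the cost ratio:
# quadrupling K/h only doubles the optimum batch size.
q_expensive_orders = economic_order_quantity(1000, 4.0, 1.0)
```

The square root means the optimum batch size is fairly insensitive to errors in the estimated costs, which matters here because both costs are rough guesses.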

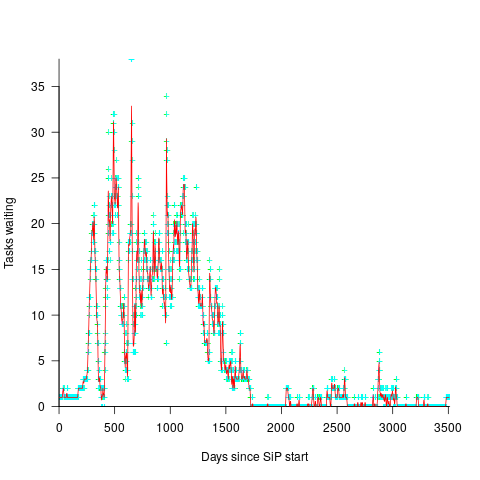

In a more informal environment, with close client contacts, work items are more likely to trickle in or appear in small batches. The SiP dataset came from such an environment. The plot below shows the number of tasks in the backlog of the SiP dataset, for each day (blue/green) and seven-day rolling average (red) (code+data):
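A seven-day rolling average of a daily backlog count can be sketched as follows. This assumes a trailing window (each value averages the current day and the six before it); whether the plot uses a trailing or a centred window is not stated, and the daily counts here are made up rather than taken from the SiP dataset.

```python
from collections import deque

def rolling_mean(values, window=7):
    """Trailing rolling mean; None until `window` values have been seen."""
    recent = deque(maxlen=window)  # automatically drops the oldest value
    means = []
    for value in values:
        recent.append(value)
        means.append(sum(recent) / window if len(recent) == window else None)
    return means

# Made-up number of tasks in the backlog on nine consecutive days.
daily_backlog = [10, 12, 11, 15, 14, 13, 16, 18, 17]
smoothed = rolling_mean(daily_backlog)
print(smoothed[6])  # mean of the first seven days: 13.0
```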

Career progression: an invisible issue in software development

Career progression is an important issue in the development of some software systems, but its impact is rarely discussed, let alone researched. A common consequence of career progression is that a project loses a member of staff, e.g., they move to work on a different project, or leave the company. Hiring staff and promoting staff are related neglected research areas.

Understanding the initial and ongoing development of non-trivial software systems requires an understanding of the career progression, and expectations of progression, of the people working on the system.

Effectively working on a software system requires some amount of knowledge of how it operates, or is intended to operate. The loss of a person with working knowledge of a system reduces the rate at which a project can be further developed. It takes time to find a suitable replacement, and for that person’s knowledge of the behavior of the existing system to reach a workable level.

We know that most software is short-lived, but know almost nothing about the involvement-lifetime of those who work on software systems.

There has been some research studying the durations over which people have been involved with individual Open source projects. However, I don’t believe the findings from this research, because I think that non-paid involvement on an Open source project has very different duration and motivation characteristics than a paying job (there are also data cleaning issues around the same person using multiple email addresses, and people working in small groups with one person submitting code).

Detailed employment data, in bulk, has commercial value, and so is rarely freely available. It is possible to scrape data from the adverts of job websites, but this only provides information about the kinds of jobs available, not the people employed.

LinkedIn contains lots of detailed employment history, and the US courts have ruled that it is not illegal to scrape this data. It’s on my list of things to do, and I keep an eye out for others making such data available.

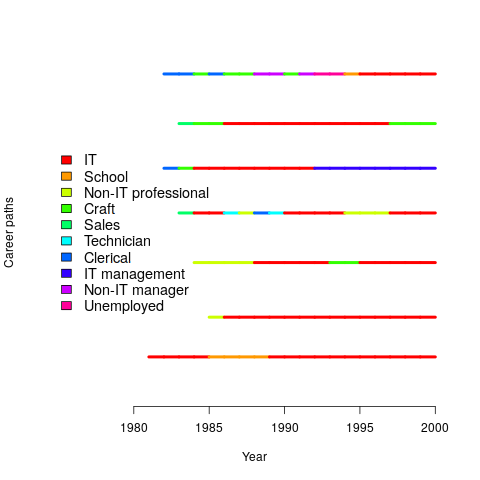

The National Longitudinal Survey of Youth has followed the lives of 10k+ people since 1979 (people were asked to detail their lives in periodic surveys). Using this data, Joseph, Boh, Ang, and Slaughter investigated the job categories within the career paths of 500 people who had worked in a technical IT role. The plot below shows the career paths of people who had spent at least five years working in an IT role (code+data):

Employment history provides an upper bound for the time that a person is likely to have worked on a project (being employed to work on an Open source project while, over time, working at multiple companies is an edge case).

A company may have employees simultaneously working on multiple projects, spending a percentage of their time on each. How big a resource impact is the loss of such a person? Were they simply the same kind of cog in multiple projects, or did they play an important synchronization role across projects? Details on all the projects a person worked on would help answer some questions.

Building a software system involves a lot more than writing the code. Technical managers work on high level, broad brush, issues. The project knowledge that technical managers have contributes to ongoing work, and the impact of losing a technical manager is probably more of a longer term issue than losing a coding-developer.

There are systems that are developed and maintained by essentially one person over many years. These get written about and celebrated, but are comparatively rare.

One of the more reliable ways of estimating developer productivity is to measure the impact of them leaving a project.

Programming Languages: History and Fundamentals

Programming Languages: History and Fundamentals by Jean E. Sammet is often cited in discussions of language history, but very rarely read (I appreciate that many oft cited books have not been read by those citing them, but age further reduces the likelihood that anybody has read this book; it was published in 1969). I read this book as an undergraduate, but did not think much of it. For around five years it has been on my list of books to buy, should a second-hand copy become available below £10 (I buy anything vaguely interesting below this price, with most ending up left on trains or the book table of coffee shops).

Thanks to Adam Gashlin the Internet Archive now contains a downloadable copy.

The list of 120 languages covered contains a handful of the 28 languages covered in an article from 1957. Sammet says that of the 120, 20 are already dead or on obsolete computers (i.e., it is unlikely that another compiler will be written), and that about 15 are widely used/implemented.

Today, the book is no longer a discussion of the recent past, but a window into the Cambrian explosion of programming languages that happened in the 1960s (almost everything since then has been a variation on a theme); languages from the 1950s are also included.

How does the material appear to me from a 2022 vantage-point?

The organization of the book reminded me that programming languages were once categorized by application domain, i.e., scientific/engineering users, business users, and string & list processing (i.e., academic users). This division reflected the market segmentation for computer hardware (back then, personal computers were still in the realm of science fiction). Modern programming language books (e.g., Scott’s “Programming Language Pragmatics”) often organize material based on implementation details, e.g., lexical analysis, and scoping rules.

The overview of programming languages given in the first three chapters covers nearly all the basic issues that beginners are taught today, but the emphasis is different (plus typographical differences, such as keyword spelt ‘key word’).

Two major language constructs are missing. Dynamic storage allocation is not discussed (Wirth’s book Algorithms + Data Structures = Programs is seven years in the future, and Kernighan and Ritchie’s The C Programming Language nine years); Simula gets a paragraph, but there is no mention of the object-oriented concepts it introduced.

What is a programming language, and what are the distinguishing features that make some of them high-level programming languages?

These questions may sound pointless or obvious today, but people used to spend lots of time arguing over what was, or was not, a high-level language.

Sammet says: “… the first characteristic of a programming language is that the user can write a program without knowing much—if anything—about the physical characteristics of the machine on which the program is to be run.”, and goes on to infer: “… a major characteristic of a programming language is that there must be a reasonable potential of having a source program written in that language run on two computers with different machine codes without rewriting the source program. … In most programming languages, some—but often very little—rewriting of the source program is necessary.”

The reason that some rewriting of the source was likely to be needed is that there were often a lot of small variations between compilers for the same language. Compilers tended to be bespoke, i.e., the Fortran compiler for the X cpu running OS Y was written specifically for that combination. Retargeting an existing compiler to a new cpu or OS was much talked about, but it was more fun to write a new compiler (and anyway, support for new features was needed, and it was simpler to start from scratch; page 149 lists differences in Fortran compilers across IBM machines). It didn’t help that there was also a lot of variation in fundamental quantities such as word length, e.g., 16, 18, 20, 24, 32, 36, 40, 48, 60 bit words; see page 18 of Dictionary of Computer Languages.

Sammet makes the distinction: “One of the prime differences between assembly and higher level languages is that to date the latter do not have the capability of modifying themselves at execution time.”

Sammet then goes on to list the advantages and disadvantages of what she calls higher level languages. Most of the claimed advantages will be familiar to readers: “Ease of Learning”, “Ease of Coding and Understanding”, “Ease of Debugging”, and “Ease of Maintaining and Documenting”. The disadvantages included: “Time Required for Compiling” (the issue here is that converting assembler source to object code is much faster than compiling a high-level language), “Inefficient Object Code” (the translation process was often a one-to-one mapping of what was written, e.g., little reuse of register contents), and “Difficulties in Debugging Without Learning Machine Language” (symbolic debuggers are still in the future).

Sammet’s observation: “In spite of the fact that higher level languages have been with us for over 10 years, there has been relatively little quantitative or qualitative analysis of their advantages and disadvantages.” is still true 50 years later.

If you enjoy learning about lots of different languages, you will like this book. The discussion of specific languages contains copious examples, which for me brought things to life.

Sites such as the Internet Archive and Bitsavers make the book’s references accessible (there are a few I had not seen before), and offer readers a path to pre-Cambrian times.

Saul Rosen’s 1967 book “Programming Systems and Languages” is sometimes cited in discussions of programming language history. This book is a collection of papers that discuss a variety of languages and the operating systems that support them. Fewer languages are covered, but in more depth, along with lots of implementation details. Again, lots of interesting references.
