Archive

Analysis of when refactoring becomes cost-effective

In a cost/benefit analysis of deciding when to refactor code, which variables are needed to calculate a good enough result?

This analysis compares the excess time-cost of future work against the time-cost of refactoring the code. Refactoring is cost-effective when the reduction in future work time is greater than the time spent refactoring. The analysis finds a relationship between work/refactoring time-costs and the number of future coding sessions.

Linear, or supra-linear case

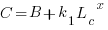

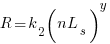

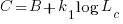

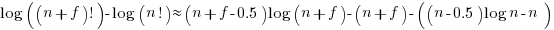

Let’s assume that the time needed to write new code grows at a linear, or supra-linear, rate as the amount of code increases. The quantities involved are: the base time for writing new code on a freshly refactored code base, the number of lines of code that have been written since the last refactoring, and two constants to be decided.

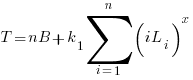

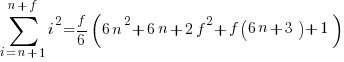

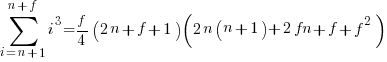

The total time spent writing code over a number of sessions is the sum of the time taken by each session.

If the same number of new lines is added in every coding session,  , and

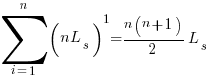

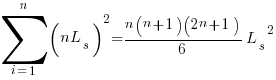

, and  is an integer constant, then the sum has a known closed form, e.g.:

is an integer constant, then the sum has a known closed form, e.g.:

x=1,  ; x=2,

; x=2,

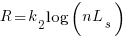
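The closed forms for integer exponents can be checked numerically. The following is a minimal sketch under assumed forms, not necessarily the exact equations used in this analysis: per-session time is taken to be `t_b + k1 * q**x`, where `q` is the number of lines written since the last refactoring, and session `i` is assumed to see `i * lines_per_session` lines. All function and parameter names are illustrative.

```python
def session_time(q, t_b, k1, x):
    """Assumed time to write new code when q lines exist since the last refactoring."""
    return t_b + k1 * q**x


def total_time(sessions, lines_per_session, t_b, k1, x):
    """Sum the assumed per-session time over the given number of sessions."""
    return sum(
        session_time(i * lines_per_session, t_b, k1, x)
        for i in range(1, sessions + 1)
    )


def closed_form(sessions, lines_per_session, t_b, k1, x):
    """Closed form of the same sum for x=1 and x=2 (standard power-sum identities)."""
    s, n = sessions, lines_per_session
    if x == 1:
        return s * t_b + k1 * n * s * (s + 1) / 2
    if x == 2:
        return s * t_b + k1 * n**2 * s * (s + 1) * (2 * s + 1) / 6
    raise ValueError("closed form only provided for x = 1 or x = 2")
```

For instance, `total_time(10, 100, 2.0, 0.001, 1)` and `closed_form(10, 100, 2.0, 0.001, 1)` both come to 25.5, up to floating-point rounding.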

Let’s assume that the time taken to refactor the code written over a number of sessions is given by an expression containing two further constants to be decided.

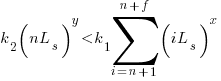

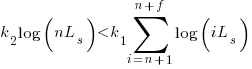

The reason for refactoring is to reduce the time-cost of subsequent work; if there are no subsequent coding sessions, there is no economic reason to refactor the code. If we assume that, after refactoring, the time taken to write new code is reduced to the base cost, and that coding will continue at the same rate for at least some number of further sessions, then refactoring the existing code is cost-effective when the time saved over those further sessions exceeds the time spent refactoring.

assuming that  is much smaller than

is much smaller than  , setting

, setting  , and rearranging we get:

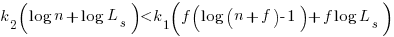

, and rearranging we get:

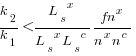

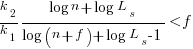

After rearranging, we obtain a lower limit on the number of future coding sessions that must be completed for refactoring to be cost-effective after a given session.
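The lower limit can also be found by direct search. This is a sketch under assumed forms rather than the equations of the analysis: writing time per session is `t_b + k1 * q**x`, refactoring time is `k2 * q**y`, and `done`, `lines` and `limit` are hypothetical parameter names (sessions completed so far, lines added per session, and a search cap).

```python
def session_time(q, t_b, k1, x):
    """Assumed time to write new code when q lines exist since the last refactoring."""
    return t_b + k1 * q**x


def refactor_time(q, k2, y):
    """Assumed time to refactor q lines of code."""
    return k2 * q**y


def min_future_sessions(done, lines, t_b, k1, x, k2, y, limit=10_000):
    """Smallest number of future sessions for which refactoring now pays off.

    Returns None if the saving never exceeds the refactoring cost within `limit`.
    """
    cost = refactor_time(done * lines, k2, y)
    saved = 0.0
    for future in range(1, limit + 1):
        # Session time if the code is left as it is...
        without_refactor = session_time((done + future) * lines, t_b, k1, x)
        # ...and if the line count was reset by refactoring.
        with_refactor = session_time(future * lines, t_b, k1, x)
        saved += without_refactor - with_refactor
        if saved > cost:
            return future
    return None
```

Under these assumptions with `x = 1`, each future session saves a constant `k1 * lines * done`, so the loop simply counts how many such savings are needed to exceed the refactoring cost.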

A particular relationship between the constants is expected: the contribution of code size, at the end of every session, is equal in the calculation of the two time-costs, and the overhead of adding new code is very unlikely to be less than refactoring all the newly written code.

Given that relationship, one of the terms must be close to zero; otherwise, the likely relatively large value of another (e.g., 100+) would produce surprisingly high results.

Sublinear case

What if the time overhead of writing new code grows at a sublinear rate, as the amount of code increases?

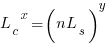

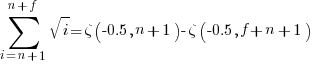

Various attributes have been found to strongly correlate with the logarithm of lines of code. In this case, the expressions for the time to write new code and the time to refactor become:

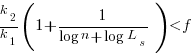

and the cost/benefit relationship becomes:

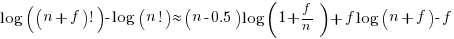

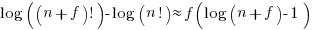

applying Stirling’s approximation and simplifying (see Exact equations for sums at end of post for details) we get:

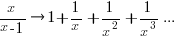

A series expansion is then applied to simplify this expression further.
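For the sublinear case, here is a minimal numerical sketch assuming logarithmic growth: per-session writing time `t_b + k1 * log(q)` and refactoring time `k2 * log(q)`. These forms, and all names, are assumptions for illustration. The base time and the lines-per-session factor cancel in the per-session saving, which reduces to `k1 * log(1 + done / future)`.

```python
import math


def min_future_sessions_log(done, lines, k1, k2, limit=100_000):
    """Smallest number of future sessions for which refactoring pays off,
    under the assumed logarithmic time-costs; None if not reached within `limit`.
    """
    cost = k2 * math.log(done * lines)
    saved = 0.0
    for future in range(1, limit + 1):
        # log((done + future) * lines) - log(future * lines) simplifies to this.
        saved += k1 * math.log(1 + done / future)
        if saved > cost:
            return future
    return None
```

The saving per session shrinks as more sessions are completed after the refactoring, so raising `k2` pushes the break-even point out faster than in the linear case.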

Discussion

What does this analysis of the cost/benefit relationship show that was not obvious (i.e., beyond the basic relationship, which is obviously true)?

What the analysis shows is that when real-world values are plugged into the full equations, all but two factors have a relatively small impact on the result.

A factor not included in the analysis is that source code has a half-life (i.e., code is deleted during development), and the amount of code existing after a given number of sessions is likely to be less than the amount used in the analysis (see Agile analysis).
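The effect of a half-life on the amount of surviving code can be sketched as follows. The exponential decay model, the function name `surviving_lines`, and the unit of the half-life (sessions) are assumptions made for illustration, not part of the analysis.

```python
def surviving_lines(sessions, lines_per_session, half_life):
    """Lines still present after `sessions` sessions, assuming the code added
    in each session decays exponentially with the given half-life (in sessions).
    """
    return sum(
        lines_per_session * 0.5 ** ((sessions - i) / half_life)
        for i in range(1, sessions + 1)
    )
```

With any finite half-life the result is below `sessions * lines_per_session`, the amount of code the analysis assumes.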

As a project nears completion, the likelihood of there being the required number of further coding sessions decreases; there is also the ever-present possibility that the project is shut down.

The values of the constants encode information on the skill of the developer, the difficulty of writing code in the application domain, and other factors.

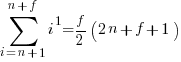

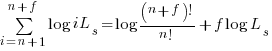

Exact equations for sums

The exact sums can be expressed using the Hurwitz zeta function.

Sum of a log series: using Stirling’s approximation, simplifying, and assuming that one of the quantities is much smaller than the other, gives the approximation used in the sublinear case.
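How good Stirling’s approximation is for a sum of logs can be checked numerically. This sketch uses the identity that the sum of ln(i) for i from 1 to n equals ln(n!), computed here with `math.lgamma`; the function names are illustrative.

```python
import math


def log_sum_exact(first, last):
    """Sum of ln(i) for i = first..last, using ln(n!) = lgamma(n + 1)."""
    return math.lgamma(last + 1) - math.lgamma(first)


def ln_factorial_stirling(n):
    """Stirling's approximation: ln(n!) ~ n ln(n) - n + 0.5 ln(2 pi n), for n >= 1."""
    return n * math.log(n) - n + 0.5 * math.log(2 * math.pi * n)


def log_sum_stirling(first, last):
    """Approximate sum of ln(i) for i = first..last (requires first >= 2)."""
    return ln_factorial_stirling(last) - ln_factorial_stirling(first - 1)
```

The error in each Stirling term is roughly 1/(12n), so the approximation improves as the session counts grow.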

My new laptop

I have been using the same MacBook Pro for almost nine years; yes, I’m a sporadic user of laptops, much preferring to work on a decent desktop system. I’ve had zero hardware problems, and have often been able to install programs (often by compiling from source) from the weird and wonderful ecosystems I frequent. Performance does seem to have gotten slower with every OS upgrade (it ‘only’ has 8G of memory). I’m very happy with the eight years of software support provided by Apple; while I’m usually happy to stay with older versions of software, package vendors eventually stop supporting them, ‘forcing’ me to upgrade.

My decision about which laptop to buy next is software driven: Over the next, say, five years which of Apple’s OS X or Linux is most likely to best support the software ecosystems containing the kind of programs I am going to want to run (given a Windows laptop, my first action would be to install the Linux subsystem)?

Diehard Mac fans complain that Apple has lost its way, with regard to Mac upgrades; this does not bother me too much. I am more concerned with the increasing number of features that smack of a walled garden approach to software that is permitted to run on Apple hardware.

My need for a new laptop has not been urgent, and for the last 18 months I have been keeping an eye out for the hardware options that are available for a laptop running Linux.

The most obvious option is buying a Windows laptop from a major supplier, and doing a clean Linux installation; yes, some larger suppliers offer laptops with Linux preinstalled.

About a year ago, I saw a review of the StarBook, which is essentially a 14″ hackers’ laptop, built by a bunch of hackers who do this for a living. The hardware specs looked good, with plenty of upgrade options, and a choice of preinstalled Linux distribution. While I continue to build desktop systems, I don’t plan to get involved with laptop hardware; however, it’s good to see that Starlab Systems publish complete disassembly instructions.

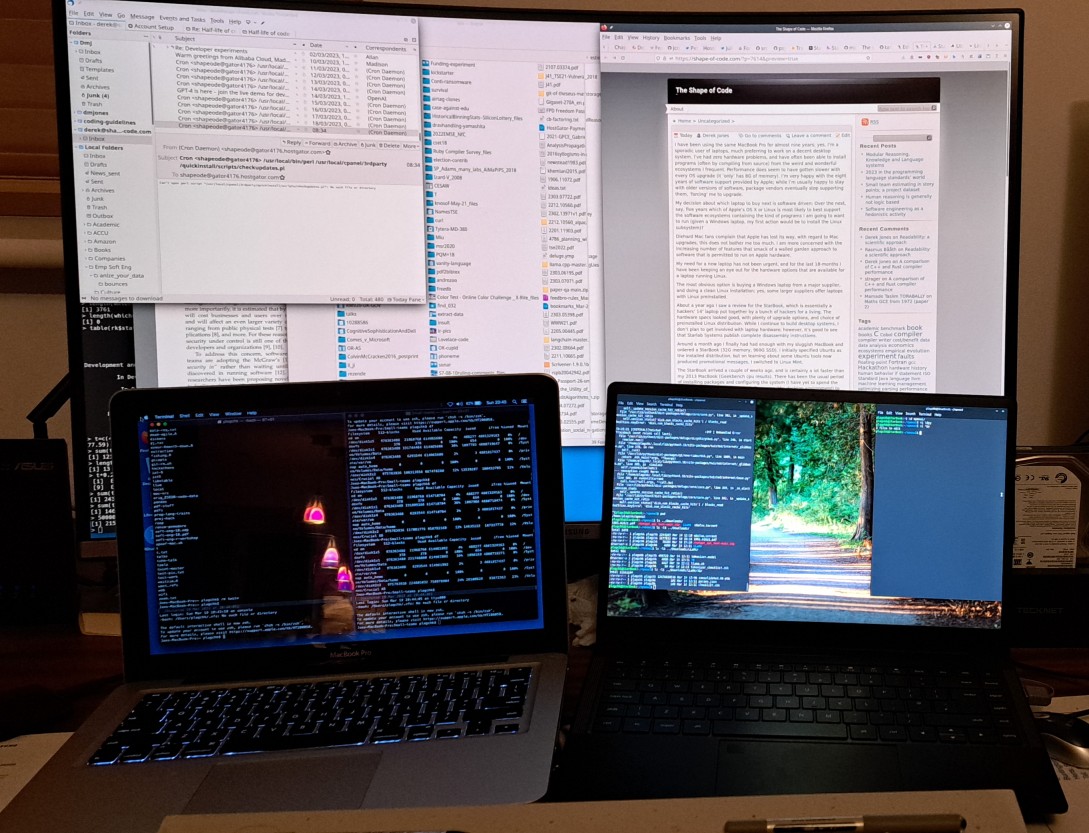

Around a month ago I had finally had enough of my sluggish MacBook and ordered a StarBook (32G memory, 960G SSD). I initially specified Ubuntu as the installed distribution, but on learning that some Ubuntu tools now produced promotional messages, I switched to Linux Mint.

The StarBook arrived a couple of weeks ago, and is certainly a lot faster than my 2013 MacBook (Geekbench cpu results). There has been the usual period of installing packages and configuring the system; I have yet to spend the time needed to figure out how to get Cinnamon (the desktop environment) to save/restore the terminal windows across shutdown (the one OSX feature I miss).

All being well, I may be writing again about my new laptop in 2032.

My MacBook, StarBook and Samsung 32-inch curved monitor. While the laptops have very similar length/width, the screen size of the StarBook is 1-inch greater.

Modular Reasoning, Knowledge and Language systems

The spectrum of models of the human mind runs from it being a general purpose computer to it being a collection of integrated specialist modules (each performing one function, e.g., speech or language). The Modularity of mind hypothesis offers a halfway house.

ChatGPT sits at the general purpose computer end of the spectrum; there is a single ‘processor’ that accepts a particular kind of input and produces a particular kind of output.

While predict-the-next-token systems like ChatGPT have proven to be good at analysing and constructing sentences, they are often unable to carry out the actions described by these sentences; for instance, they are capable of describing mathematical operations that they are incapable of performing (unless the answer happens to be in their training data).

A Modular Reasoning, Knowledge and Language system (MRKL; the suggested pronunciation is miracle) is, as the name suggests, a system built from specialist modules. In this approach, a large language model (LLM), such as ChatGPT, is the language processing module.

In a MRKL system, the input is processed (by an LLM) to figure out which specialist modules have to be queried to obtain the information needed to answer the question, the appropriate text (generated by an LLM) is fed as input to the corresponding modules, and the module outputs are collected and fed to an LLM to generate an answer to the question.

A user question may involve querying multiple modules in some sequence. For instance, the question “What is the average age of the last five British Prime ministers?” might involve querying Google/Alexa answers to obtain a list of previous Prime ministers, followed by extracting individual ages from Wikipedia, followed by querying a maths module to obtain the average of the five ages obtained.
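The sequencing described above can be sketched as a toy pipeline. Everything here is a hypothetical stand-in: the module functions, the fixed `plan`, and the placeholder names and ages are invented for illustration (they are not real data, and this is not the API of any particular library). In a real MRKL system, an LLM would produce the plan and the module inputs.

```python
from statistics import mean

# Placeholder values only; a real module would look these up.
PLACEHOLDER_AGES = {"PM-1": 40, "PM-2": 50, "PM-3": 60, "PM-4": 45, "PM-5": 55}


def list_module(query):
    """Stand-in for a search/answers service returning a list of names."""
    return list(PLACEHOLDER_AGES)


def age_module(name):
    """Stand-in for an encyclopedia lookup of one person's age."""
    return PLACEHOLDER_AGES[name]


def maths_module(values):
    """Stand-in for a maths module."""
    return mean(values)


def plan(question):
    """Stand-in for the LLM step that decides which modules to query, in order."""
    return ["list", "age", "maths"]


def answer(question):
    """Run the planned modules in sequence, feeding each output to the next."""
    data = question
    for step in plan(question):
        if step == "list":
            data = list_module(data)
        elif step == "age":
            data = [age_module(name) for name in data]
        elif step == "maths":
            data = maths_module(data)
    return data
```

The hard part, which this sketch hard-codes, is deriving the plan from the question and generating suitable input text for each module.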

The extent to which an application using an LLM might be said to be a MRKL system is a matter of degree. The following shell script is unlikely to qualify:

    # Request a completion; the API key is read from the OPENAI_API_KEY variable.
    curl https://api.openai.com/v1/completions \
      -H 'Content-Type: application/json' \
      -H "Authorization: Bearer ${OPENAI_API_KEY}" \
      -d '{
        "model": "text-davinci-003",
        "prompt": "Say I found The Shape of Code to be an interesting blog",
        "temperature": 0
      }'

The OpenAI API documentation focuses on how to drive their various language models, and includes lots of examples. There is no API offering a higher-level abstraction or functionality.

An API designed for building MRKL systems, and one that is starting to gain traction, is langchain: a collection of Python packages, with JavaScript libraries playing catch-up.

langchain module categories include: LLM interaction (e.g., specifying which LLM to use, API keys, and changing default values), document loaders (e.g., readers for pdf, HTML, Gitbook, and Microsoft Word), Agents (these use an LLM to process the input text to find out what actions need to be performed, and to create the inputs that the selected modules need), Memory (store information from previous interactions; other modules can be stateless), and Chat (handle the mechanics of holding a conversation).

What does langchain offer that is making it attractive to a growing number of developers?

- Making use of an LLM within an application will involve some subset of the functionality provided by langchain. The advantage of using langchain is that it provides a framework, MRKL, along with an existing (sometimes skeleton) implementation.

- First mover advantage for an Open source implementation has enabled langchain to attract a growing number of active contributors; it also helps that the core developers have been making regular updates (almost daily), and half-decent documentation is available.

Given the current volume of discussion around LLMs, why has there been so little written about MRKL systems?

Building a MRKL system requires coding ability, and developers are a small percentage of those contributing to the discussion avalanche.

Building a MRKL system takes a lot of time and work. Being able to break down a question into subcomponents that can be answered by the available modules, and sequencing them appropriately, is a non-trivial problem.

Once Apps solving real-world problems start becoming widely used, and the novelty of generic chat systems wears off, the discussion will switch to more grounded issues.

2023 in the programming language standards’ world

Two weeks ago I was on a virtual meeting of IST/5, the committee responsible for programming language standards in the UK. IST/5 has a new chairman, Guy Davidson, whose efficiency is very un-standards-like.

It’s been 18 months since I last reported on the programming language standards’ world; what has been going on?

2023 is going to be a bumper year for the publication of revised Standards of long-established programming languages: COBOL, Fortran, C, and C++ (a revised Standard for Ada was published last year).

Yes, COBOL; a new COBOL Standard was published in January. Reports of its death were premature, e.g., my 2014 post suggesting that the latest version would be the last version of the Standard, and the closing of PL22.4, the US Cobol group, in 2017. There has even been progress on the COBOL front end for gcc, which now supports COBOL 85.

The size of the COBOL Standard has leapt from 955 to 1,229 pages (around 200 new pages in the normative text, 100 in the annexes). Comparing the 2014/2023 documents, I could not see any major additions, just lots of small changes spread throughout the document.

Every Standard has a project editor, the person tasked with creating a document that reflects the wishes/votes of its committee; the project editor sends the agreed upon document to ISO to be published as the official ISO Standard. The ISO editors would invariably request that the project editor make tiresome organizational changes to the document, and then add a front page and ISO copyright notice; from time to time an ISO editor took it upon themselves to reformat a document, sometimes completely mangling its contents. The latest diktat from ISO requires that submitted documents use the Cambria font. Why Cambria? What reason could there be, other than it being the font used by the Microsoft Word template promoted by ISO as the standard format for Standards documents?

All project editors have stories to tell about shepherding their document through the ISO editing process. With three Standards (COBOL lives in a disjoint ecosystem) up for publication this year, ISO editorial issues have become a widespread topic of discussion in the bubble that is language standards.

Traditionally, anybody wanting to be actively involved with a language standard in the UK had to find the contact details of the convenor of the corresponding language panel, and then ask to be added to the panel mailing list. My, and others’, understanding was that provided the person was a UK citizen or worked for a UK domiciled company, their application could not be turned down (not that people were/are banging on the door to join). BSI have slowly been computerizing everything, and, as of a few years ago, people can apply to join a panel via a web page; panel members are emailed the CV of applicants and asked if “… applicant’s knowledge would be beneficial to the work programme and panel…”. In the US, people pay an annual fee for membership of a language committee ($1,340/$2,275). Nobody seems to have asked whether the criteria for being accepted as a panel member have changed. Given that BSI had recently rejected somebody’s application to join the C++ panel, the C++ panel convenor accepted the action to find out if the rules have changed.

In December, BSI emailed language panel members asking them to confirm that they were actively participating. One outcome of this review of active panel membership was the disbanding of panels with ‘few’ active members (‘few’ might be one or two, IST/5 members were not sure). The panels that I know to have survived this cull are: Fortran, C, Ada, and C++. I did not receive any email relating to two panels that I thought I was a member of; one or more panel convenors may be appealing their panel being culled.

Some language panels have been moribund for years, being little more than bullet points on the IST/5 agenda (those involved having retired or otherwise moved on).
