Archive

Benchmarking string search algorithms

Searching a sequence of items for occurrences of a specific pattern is a common operation, and researchers are still discovering faster string search algorithms.

While skimming the paper Efficient Exact Online String Matching Through Linked Weak Factors by M. N. Palmer, S. Faro, and S. Scafiti, Tables 1, 2 and 3 jumped out at me. The authors put a lot of effort into benchmarking the performance of 21 search algorithms representing a selection of go-faster techniques (they wanted to show that their new algorithm, using hash chains, was the fastest; Grok explains the paper). I emailed the authors for the data, and Matt Palmer kindly added it to the HashChain Github repo. Matt was also generous with his time answering my questions.

The benchmark search involved three sequences of items, each of 100MB, a genome sequence, a protein sequence and English text. The following analysis if based on results from searching the English text. The search process returned the number of occurrences of the pattern in the character sequence.

The patterns to search for were randomly extracted from the sequence being searched. Eleven pattern lengths were used, ranging from 4 to 4,096 items in powers of 2 (the tables in the paper list lengths in the range 8 to 512). There were 500 runs for each length, with a different pattern used each time, i.e., a total of 5,500 patterns. Every search algorithm matched the same 5,500 patterns.

Some algorithms have tuneable parameters (e.g., the number of characters hashed), and 107 variants of the 21 algorithms were run, giving a total of 555,067 timing measurements (a 200ms time limit sometimes prevented a run completing).

To understand some of the patterns present in the timing results, it’s necessary to know something about how industrial strength string search algorithms work. The search pattern is first processed to create either a finite state machine or some other data structure. The matching process starts at the right of the pattern and moves left, comparing items. This approach makes it possible to skip over sequences of text that cannot match. For instance, if the current text character is X, this is fed into the finite state machine created from the pattern, and if X is not in the pattern, the matching process can move forward by the length of the pattern (if the pattern contains an X, the matching process skips forward the appropriate number of characters). This pattern shift technique was first implemented in the Boyer-Moore algorithm in 1977.

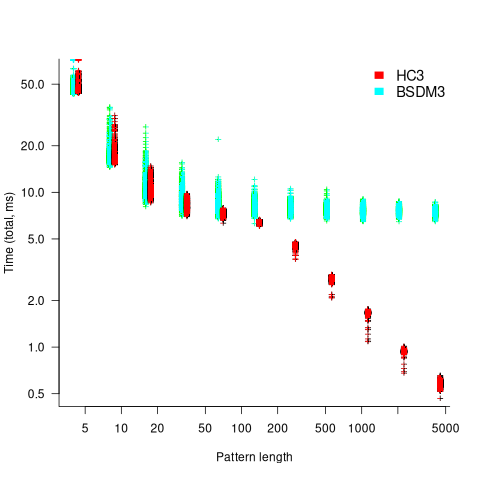

One consequence of skipping over sequences that cannot match, is that longer pattern enable longer skips, resulting in faster searches. The following plot shows the search time against pattern length for two algorithms; total time includes preprocessing the pattern and searching the text (red plus symbols have been offset by 10% to show both timings; code+data):

Why does the search time for HC3 (one variant of the author’s new algorithm) start to plateau and then start decreasing again? One possible cause of this saddle point is the cpu cache line width, which is 64 bytes on the system that ran the benchmarks. When the skip length is at least twice the cache line width, it is not necessary to read characters that would cause the filling of at least one cache line. As always, further research is needed.

What is the most informative method for comparing the algorithm/tool performance?

The most commonly used method is to compare mean performance times, and this is what the authors do for each pattern length. A related alternative, given that different patterns are used for each run, is to compare median times, on the basis that users are interested in the fastest algorithm across many patterns. When variance in the search times is taken into account, there is no clear winner across all pattern lengths (the uncertainty in the mean value is sometimes larger than the difference in performance).

Comparing performance across pattern lengths is interesting for algorithm designers, but users want a single performance value. The traditional approach to building a single model, that would include pattern length and algorithm, is to fit a regression model. However, this approach requires that the change in performance with pattern length roughly have the same form across all algorithms. The plot above clearly shows two algorithms with different forms of change of timing behavior with pattern length (other algorithms exhibit different behaviors).

A completely different modelling approach is to treat each pattern search as a competition between algorithms, with algorithms ranked by search time (fastest first). In this approach, the relative performance of each algorithm is ranked; there are 500 ranks for each pattern length. The fitted model can be used to calculate the probability, over all patterns, that algorithm A1 will be faster than algorithm A2.

Readers might be familiar with the Bradley-Terry model, where two items are ranked, e.g., the results from football games played between pairs of teams during a season. When more than two items are ranked, a Plackett-Luce model can be fitted. The output from fitting these models is a value,  , for each of the algorithms, e.g.,

, for each of the algorithms, e.g.,  . The probability that algorithm

. The probability that algorithm A1 will rank higher than Algorithm A2 is calculated from the expression:

, this expression can also be written as:

, this expression can also be written as:

Switch A1 and A2 to calculate the opposite ranking.

The probability that algorithm A1 ranks higher than Algorithm A2 which in turn ranks higher than Algorithm A3 is calculated as follows (and so on for more algorithms):

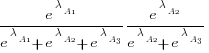

For ease of comparison, only the 53 algorithms with 500 sets of timing data for all pattern lengths was fitted. The following plot shows the fitted  values for each algorithm (blue/green lines are standard errors, and names containing HC are some variant of the authors’ hash chain technique; code+data):

values for each algorithm (blue/green lines are standard errors, and names containing HC are some variant of the authors’ hash chain technique; code+data):

The EPSM algorithm makes use of the SSE and AVX instructions, supported by modern x86 processors, that can operate on up to 512 bits at a time.

The following table lists the fitted lambda values for the top eight ranked algorithm implementation:

Algorithm Lambda

EPSM 9.103007

THC3 8.743377

HC3 8.567715

FHC3 8.381220

THC4 8.079716

FHC4 7.991929

HC4 7.695787

TWFR4 7.557796 |

The likelihood that EPSM will rank higher than THC3 is  .

.

This is better than random, and reflects the variability in algorithm performance, i.e., there is no clear winner.

It might be possible to extract more information from the data.

Some algorithms use of the same technique for part of their search process. Information on the techniques shared by algorithms can be added to the model as covariates. Assuming that a suitable fit is obtained, the model coefficients would indicate the relative impact of each technique on performance. I don’t know enough to select the techniques, and Matt is thinking about it.

Half-life of Microsoft products is 7 years

I get a lot of pushback from developers/managers when I tell them that the average application has a relatively short lifetime, i.e., half-life of 4-8 years. The pushback kicks in when I start citing data, up until then my listeners appear surprised/skeptical. The fact that source code has a brief and lonely existence is accepted, but telling them about the (one study) evidence that a coding mistake is more likely to disappear because of an unrelated coding change than as a result of fixing a fault report appears to make them feel uncomfortable.

Some applications live a long time, and most developers will spend most of their time working on long-lived applications. Short-lived applications are not around long enough to acquire significant developer/manager mind share.

I think the pushback is rooted in more than developer experience; developers don’t like the thought of their work disappearing from the world. The desire for permanence in what we create may be a human characteristic. Extolling the creation of reliable, maintainable, readable code creates an implicit assumption that applications are going to live long enough for the cost of these activities to be paid back.

How accurate are these half-life estimates?

The 4-8 year half-life range is derived from two datasets. A while ago I spotted another dataset: Fabiano Riccardi‘s Killed by Microsoft, currently containing information on 141 killed products.

All three datasets list just the products that have been killed, i.e., they are not a list of all products. A half-life calculation based only on killed products could underestimate the actual lifetime, it depends on whether the rate of killed products remains roughly the same percentage of all products or not. If the number of products killed, in any period, is always roughly the same percentage of all current products, then the calculated lifetime is not affected by the lack of data on the number of live products. Uncertainty in the calculated lifetime is created when the number of products killed is unconnected with the current number of live products.

It’s possible, to save money, products are more likely to be killed when a company is going through a period of poor performance, or the economy is in recession, compared to when business is booming.

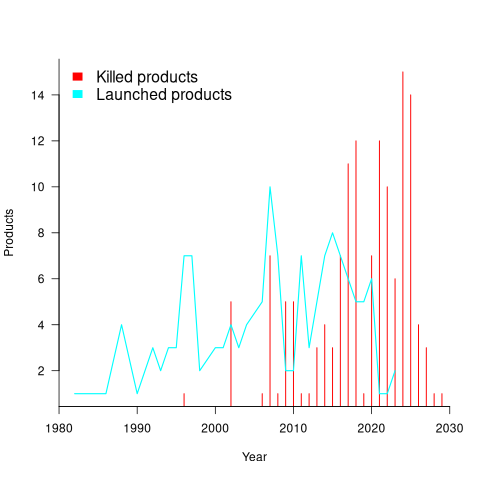

Another source of uncertainty is sampling bias. Companies announce when products are released/withdrawn, creating recency bias because it’s easier to monitor the news than actively search for data on past product releases/withdraws. The plot below shows the number of products Microsoft killed in each year (red bars; post 2025 are to be killed-by dates) and number of new products launched each year blue/green line (code+data):

I’m sure that Microsoft killed more than one product before 2000. The Dot-com bubble burst in March 2000, and I would expect this to have resulted in lots of killed products. The lack of data on products killed before 2000 means that shorter lived products are undercounted.

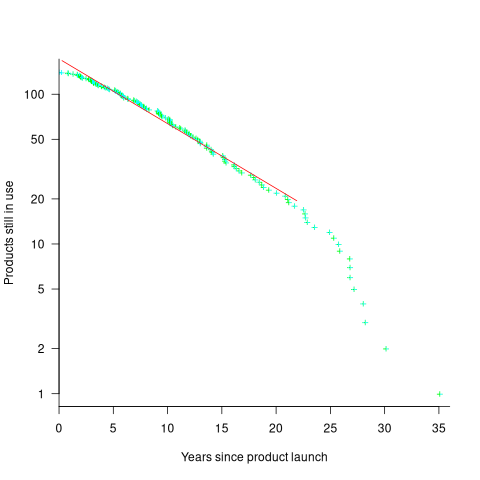

The plot below shows the number of Microsoft (eventually killed) products still supported a given number of years after launch, the red line is a regression fit for products aged between 4 months and 22 years (code+data):

The half-life of the Microsoft products in this dataset, aged between 4 months and 22 years, is 7 years. Is the sharp decline in half-life after 22 years a real thing, or a consequence of the small amount of data before 2005? As always, more data is needed.

How has the price of a computer changed over time?

We are told that computers are now orders of magnitude cheaper than they once were. Computers have changed an awful lot over the last 70 years; how is the functionality supported by different computers normalised such that the price of computers from long ago can be compared with today’s computers?

One approach is to narrow the question down to calculating the cost of performing some basic operation, e.g., numerical calculation or sorting a list of values. Nordhaus’s famous paper: Two Centuries of Productivity Growth in Computing uses this approach.

The primary advantage of the cost-of-operation approach is that it can be made to work across the complete range of computing platforms. The major disadvantage of this approach is that it focuses on the performance of the cpu/memory, ignoring the ability to store large amounts of data and perform I/O (which is most of the cost of some computer systems).

The US consumer price index (CPI) uses Hedonic regression to adjust the average price of a product family whose quality changes over time (the CPI is used to track inflation, and so every price needs to be for a product identical to the exemplar chosen at some start date). Hedonic pricing models are also used to understand how specific features within a product category (e.g., housing, automobiles, or electronics) contribute to the price. The term hedonic is derived from hedonism, and was first used in a 1939 paper analysing the price/quality of automobiles.

The 1979 paper “Hedonic Prices and the Structure of the Digital Computer Industry” by R. Michaels (cannot find a downloadable copy) appears to have kick-started the computer/hedonic research rabbit hole (the 1969 book “The Economics of Computers” by W. F. Sharpe covers computer costings in detail). Hedonic regression estimates the value of a product by braking it down into its major components which are used as the explanatory variables in a regression model that predicts the product price on given dates, e.g., for mainframe computers: price, cpu speed, amount of memory, number/size of hard disks, number of tape drives and card readers.

While mainframes and microcomputers share some characteristics (e.g., cpu, memory, and discs), they address different markets with very different requirements (e.g., mission-critical requires high reliability and tape backups). Different Hedonic regression models need to be fitted for each.

Gathering a representative sample of information on all the major components of a product, preferably for each year, is a lot of work. Many papers make use of information from proprietary databases. A lot of historical information is now available in scanned trade publications, but LLMs are not yet good enough to reliably extract detailed information from scanned documents (e.g., they sometimes ignore information, rather than hallucinating). I am waiting for the error rate to decrease.

The analysis in the book chapter Computer Processors and Peripherals by R. J. Gordon spends a lot of time dealing with the issues around potentially inconsistent data sources. The final price index (table 6.7) shows that the normalised price of mainframes decreased by a factor of 922 between 1951 and 1983 (23% per year, for 33 years). That is, the equivalent 1951 mainframe purchased in 1983 would have been cheaper by a factor of 922. In practice, prices did not decrease by a factor of 922, rather some combination of price/quality of the average mainframe changed by this factor (where quality is some combination of faster cpus, more memory and other factors). For an analysis of computer related products see the book “Price Measurements and Their Uses” by Foss, Manser, and Young.

The price of microcomputers (or computers as we call them today, as there is little public perception of any other type of computer) has decreased, but by how much (in the Hedonic sense)?

The first Hedonic analysis of microcomputers was Cohen’s 1988 Master’s thesis, for personal computers between 1976 and 1987. I think Cohen is pushing product family boundaries to treat the 8-bit computers introduced before the IBM PC in August 1981 (which did not include a hard disk until 1983, and did not really become a 16-bit computer until the IBM AT in 1987) as being comparable with later microcomputers. Cohen’s analysis found positive/negative swings in the adjusted prices of the microcomputers.

The paper Price and quality of desktop and mobile personal computers: A quarter-century historical overview by Berndt, Dulberger and Rappaport claim that there was an average annual 27% decline in microcomputer prices between 1976 and 1999. Again, my comments on pre-1987 microcomputers applies. A later paper (appendix table 1) shows average actual prices increasing until 1991 and decreasing thereafter, with the averages of cpu frequency, memory capacity and hard disk size continually increasing. There was little difference in the prices at the start(1976)/end(2002) of the period analysed, but a huge difference in the quality characteristics. While writing my Evidence-based software engineering book, I emailed Berndt for the data, which a co-author kindly made available. Unfortunately, I found that much of the data was confidential (the name of a company that sold computer sales data appeared in the files), and could not be publicly shared 🙁

Hedonic analysis of computers appears to have become unfashionable around the start of 2000. More recent papers analyse products such as mobile phones and cloud services. Please leave a comment if you know of any recent hedonic analysis of computer prices.

The only detailed microcomputer price data I know of consists of 6,259 detailed prices collected by Stengos, and Zacharias over 35 months, starting in January 1993, from the adverts in PC Magazine.

CPU frequency not relevant to SPEC benchmark performance

Despite the end of Dennard scaling around 2005-7 computer performance, as measured by the SPEC cpu benchmarks, continues to improve. What is driving this ongoing increase in performance, given that cpu clock rates have stopped increasing?

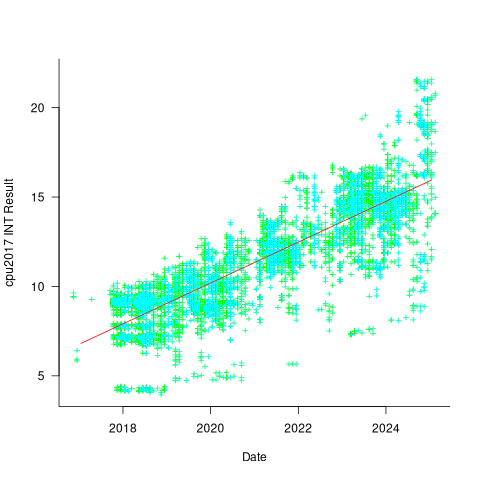

The plot below shows 9,161 results from the SPEC CPU integer benchmark, plus the fitted regression line  (code+data):

(code+data):

There is a scattering of benchmark results because manufacturers offer systems having a range of performance.

Possible factors driving the ongoing increases in system performance include increased DRAM-memory bandwidth, and cpu improvements such as larger caches and more accurate branch prediction. While Moore’s law (i.e., rate of growth of the number of transistors on a chip) has slowed down a lot, the number of transistors in a processor chip has continued to increase (many of these transistors have been used to build chips that contain multiple cpu cores).

The SPEC benchmark result data includes a lot of information about the system that ran the benchmarks, including: processor family/model, number of cpu cores, clock frequency, amount of memory installed and its type.

Results from SPEC CPU2017, the current version of the benchmark, are available from the start of 2017 to now. The following analysis uses these results. Results from the SPEC CPU2006 benchmark are also available, and a regression model fitted to the results from the 780 systems that ran both benchmarks, gives the mapping from CPU INT 2006 to CPU INT 2017 as:  .

.

The processor information in the results file usually specifies family name plus model number/name. The model information usually correlates with clock frequency, perhaps cache size, or gpu support; some examples below.

AMD EPYC 4464P AMD EPYC 4564P

AMD Ryzen 9 7950X AMD Ryzen 7 5800X

Intel Xeon Platinum 8490H Intel Xeon Gold 6438N

Intel Xeon E3-1220 v3 Intel Xeon E5-2697 v3 |

The family name is sufficient for an initial analysis. Details of any cache size differences between models can always be included in a later analysis. The following table shows the number of processor x86 based families present in the 2017 INT results (total 9,161):

AMD EPYC AMD Ryzen Intel

1475 7 1

Intel Celeron Intel Core i3 Intel Core i5

16 31 2

Intel Core i7 Intel Pentium Intel Xeon

1 30 605

Intel Xeon Bronze Intel Xeon D Intel Xeon E3

167 12 16

Intel Xeon E5 Intel Xeon E7 Intel Xeon Gold

3 2 3994

Intel Xeon Platinum Intel Xeon Silver Intel Xeon W

1822 969 8 |

The memory information usually includes total bytes, number of memory sticks and interface standard (e.g., DDR2/3/4/5); some examples below.

64 GB (2 x 32 GB 2Rx4 PC5-5600B-R, running at 5200)

64 GB (2 x 32 GB 2Rx8 PC4-3200AA-E)

256 GB (8*1GB DDR2-400 DIMMS per 4 core module)

192 GB (4 x 12 x 4 GB DDR3-1333R, ECC, CL9)

32 GB (8 x 4 GB Dual-rank PC2-6400 CL5-5-5 FB-DIMMs)

24 GB (6 x 4 GB DDR3-1333 downclocked to 1066 MHz) |

The memory bandwidth can be calculated from the interface standard used. The names of modern DRAM interface standards start with either DDR or PC, and a number, a hyphen and then another number. The values appearing in the SPEC results don’t always follow the naming rules listed in the standard (e.g., last number of a PC name using the corresponding DDR number), and in a few cases a digit was dropped from the last number. Where possible the ‘obvious’ edits were made (sometimes values were just wrong), see code for details. The following table shows the number of interface standards represented in the 2017 CPU INT results (total 9,161; in the 2006 results DDR names predominated):

PC4-2400 PC4-2666 PC4-2933 PC4-3200 PC4-4800 PC5-11200 PC5-12800

26 2248 2163 2080 6 2 3

PC5-4800 PC5-5200 PC5-5600 PC5-6400 PC5-8 PC5-8800

1735 5 653 233 2 5 |

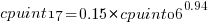

Once the memory is identified, its bandwidth can be looked up (bespoke memory stick clock rates were ignored). Fitting a regression model to the data, with the CPU INT (cpu integer benchmark) result as the outcome, we get (using a multiplicative model allows each factor to have a percentage impact; code+data):

where:  is the memory bandwidth in megabytes per second,

is the memory bandwidth in megabytes per second,  is cpu frequency in MHz, and

is cpu frequency in MHz, and  is the fitted constant for each processor family.

is the fitted constant for each processor family.

The cpu frequency varies between 1.7 and 4.7 GHz (a ratio of 1:2.8), the memory bandwidth between 19,200 and 51,200 MB/s (a ratio of 1:2.7), and processor family performance impact ratio was 1:2.2. Given the fitted power laws, this range of cpu frequencies could impact performance by around 22%, while the range of memory bandwidth could impact performance by a factor of two.

This fitted model implies that cpu frequency changes, over the range supported by systems since 2017, have almost no impact on the performance of integer-based programs, i.e., no floating point.

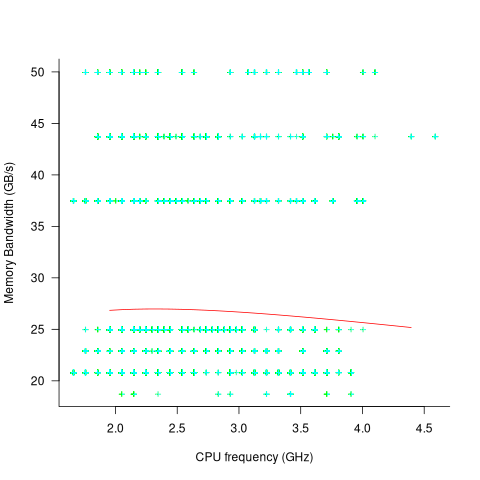

I thought there might be a correlation between memory bandwidth and cpu frequency (because vendors would use faster memory in systems with faster cpus). The plot below shows CPU frequency against memory bandwidth (both axis use linear scales), plus a fitted regression line in red (code+data):

I was wrong. There does not appear to be any connection between a system’s cpu frequency and its memory bandwidth.

These days, most x86 chips include multiple processors, with each processor taking a share of memory bandwidth. Increasing memory bandwidth is essential, if all cores are to be kept busy.

The SPEC CPU benchmark measures the performance of a single processor. If only one of the cpu cores available on a system is being used, that core has the benefit of memory bandwidth that usually has to be shared.

To what extent is a single core benchmark relevant today? I suspect that most programs run on a single core, but developers sometimes attempt to spread cpu intensive programs over multiple cores. As always, data is needed.

The SPEC benchmark is useful for cpu designers (the original target market) and compiler writers wanting to measure the impact of fancy new optimizations.

Repo of software estimation datasets

I have finally gotten around to creating a GitHub repository for the publicly available software estimation datasets. My reasons for doing this include increasing the visibility of the large datasets, having something to reference when I tell people about the miniscule size of most of the datasets modeled in research papers (one of my most popular posts explains why software estimation is mostly fake research), and to help me remember what datasets I do have.

There is a huge disparity in dataset sizes. The main reason for this is that some datasets contain one row for each task within a project, while others contain one row for the whole project.

The Albrecht dataset from 1983 contains 24 rows, and I’m treating it as the minimum size for a dataset to be included in this repo. Smaller datasets have been published, but I don’t see any value including them. Albrecht is only included because it is used by earlier papers.

The current state of knowledge about the characteristics of individual task estimates is discussed in an earlier post.

What of the row per project datasets? Other than overestimates being common, there is not enough data to reliably spot/claim recurring project patterns across datasets. The estimates have probably occurred in a competitive environment, i.e., there is an incentive to bid low. The common techniques used to estimate projects are either based on counting Function points, or on estimating the number of lines of code contained in the delivered system (this value, plus other values, is plugged in to a cost estimation model, e.g., COCOMO).

The problem with estimating using LOC (which is itself estimated) is that there can be large differences in the number of LOC written by different developers to implement the same functionality.

The datasets in the initial upload include those that are commonly cited in research papers, and those analysed on this blog. I will probably discover (i.e., remember) more datasets in the coming weeks, as happened for the repository of reliability datasets created a few months ago.

Recent Comments