Archive

Delphi and group estimation

A software estimate is a prediction about the future. Software developers were not the first people to formalize processes for making predictions about the future. Starting in the last 1940s, the RAND Corporation’s Delphi project created what became known as the Delphi method, e.g., An Experiment in Estimation, and Construction of Group Preference Relations by Iteration.

In its original form experts were anonymous; there was a “… deliberate attempt to avoid the disadvantages associated with more conventional uses of experts, such as round-table discussions or other milder forms of confrontation with opposing views.”, and no rules were given for the number of iterations. The questions involved issues whose answers involved long term planning, e.g., how many nuclear weapons did the Soviet Union possess (this study asked five questions, which required five estimates). Experts could provide multiple answers, and had to give a probability for each being true.

One of those involved in the Delphi project (Helmer-Hirschberg) co-founded the Institute for the Future, which published reports about the future based on answers obtained using the Delphi method, e.g., a 1970 prediction of the state-of-the-art of computer development by the year 2000 (Dalkey, a productive member of the project, stayed at RAND).

The first application of Delphi to software estimation was by Farquhar in 1970 (no pdf available), and Boehm is said to have modified the Delphi process to have the ‘experts’ meet together, rather than be anonymous, (I don’t have a copy of Farquhar, and my copy of Boehm’s book is in a box I cannot easily get to); this meeting together form of Delphi is known as Wideband Delphi.

Planning poker is a variant of Wideband Delphi.

An assessment of Delphi by Sackman (of Grant-Sackman fame) found that: “Much of the popularity and acceptance of Delphi rests on the claim of the superiority of group over individual opinions, and the preferability of private opinion over face-to-face confrontation.” The Oracle at Delphi was one person, have we learned something new since that time?

Group dynamics is covered in section 3.4 of my Evidence-based software engineering book; resource estimation is covered in section 5.3.

The likelihood that a group will outperform an individual has been found to depend on the kind of problem. Is software estimation the kind of problem where a group is likely to outperform an individual? Obviously it will depend on the expertise of those in the group, relative to what is being estimated.

What does the evidence have to say about the accuracy of the Delphi method and its spinoffs?

When asked to come up with a list of issues associated with solving a problem, groups generate longer lists of issues than individuals. The average number of issues per person is smaller, but efficient use of people is not the topic here. Having a more complete list of issues ought to be good for accurate estimating (the validity of the issues is dependent on the expertise of those involved).

There are patterns of consistent variability in the estimates made by individuals; some people tend to consistently over-estimate, while others consistently under-estimate. A group will probably contain a mixture of people who tend to over/under estimate, and an iterative estimation process that leads to convergence is likely to produce a middling result.

By how much do some people under/over estimate?

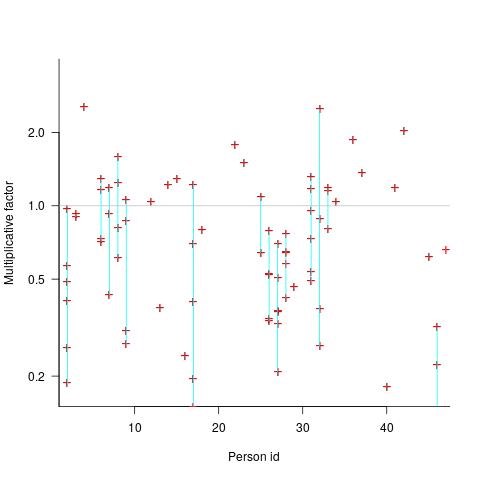

The multiplicative factor values (y-axis) appearing in the plot below are from a regression model fitted to estimate/actual implementation time for a project involving 13,669 tasks and 47 developers (data from a study Nichols, McHale, Sweeney, Snavely and Volkmann). Each vertical line, or single red plus, is one person (at least four estimates needed to be made for a red plus to occur); the red pluses are the regression model’s multiplicative factor for that person’s estimates of a particular kind of creation task, e.g., design, coding, or testing. Points below the grey line are overestimation, and above the grey line the underestimation (code+data):

What is the probability of a Delphi estimate being more accurate than an individual’s estimate?

If we assume that a middling answer is more likely to be correct, then we need to calculate the probability that the mix of people in a Delphi group produces a middling estimate while the individual produces a more extreme estimate.

I don’t have any Wideband Delphi estimation data (or rather, I only have tiny amounts); pointers to such data are most welcome.

Estimate variability for the same task

If 100 people estimate the time needed to implement a feature, in software, what is the expected variability in the estimates?

Studies of multiple implementations of the same specification suggest that standard deviation of the mean number of lines across implementations is 25% of the mean (based on data from 10 sets of multiple implementations, of various sizes).

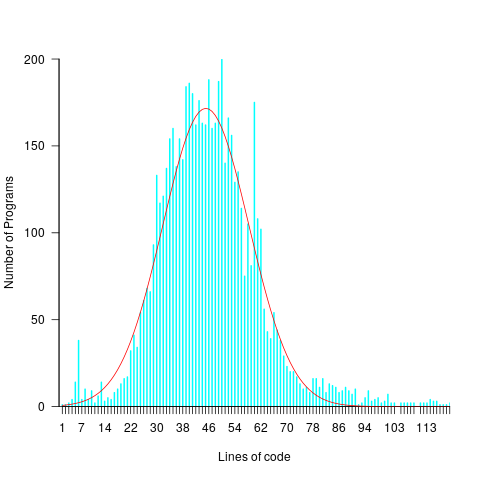

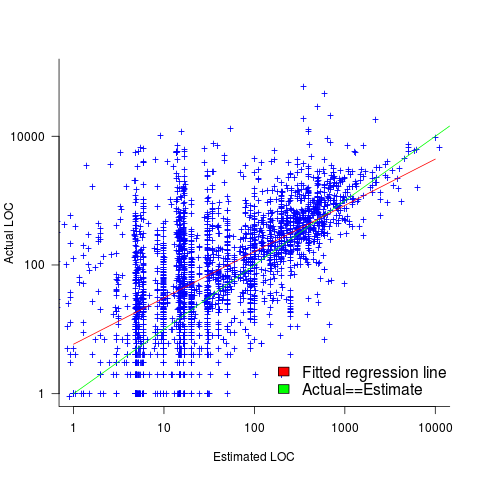

The plot below shows lines of code against the number of programs (implementing the 3n+1 problem) containing that many lines (red line is a Normal distribution fitted by eye, code and data):

Might any variability in the estimates for task implementation be the result of individuals estimating their own performance (which is variable)?

To the extent that an estimate is based on a person’s implementation experience, a developer’s past performance will have some impact on their estimate. However, studies have found a great deal of variability between individual estimates and their corresponding performance.

One study asked 14 companies to bid on implementing a system (four were eventually chosen to implement it; see figure 5.2 in my book). The estimated elapsed time varied by a factor of ten. Until the last week this was the only study of this question for which the data was available (and may have been the only such study).

A study by Alhamed and Storer investigated crowd-sourcing of effort estimates, structured by use of planning poker. The crowd were workers on Amazon’s Mechanical Turk, and the tasks estimated came from the issue trackers of JBoss, Apache and Spring Integration (using issues that had been annotated with an estimate and actual time, along with what was considered sufficient detail to make an estimate). An initial set of 419 issues were whittled down to 30, which were made available, one at a time, as a Mechanical Turk task (i.e., only one issue was available to be estimated at any time).

Worker estimates were given using a time-based category (i.e., the values 1, 4, 8, 20, 40, 80), with each value representing a unit of actual time (i.e., one hour, half-day, day, half-week, week and two weeks, respectively).

Analysis of the results from a pilot study were used to build a model that detected estimates considered to be low quality, e.g., providing a poor justification for the estimate. These were excluded from any subsequent iterations.

Of the 506 estimates made, 321 passed the quality check.

Planning poker is an iterative process, with those making estimates in later rounds seeing estimates made in earlier rounds. So estimates made in later rounds are expected to have some correlation with earlier estimates.

Of the 321 quality check passing estimates, 153 were made in the first-round. Most of the 30 issues have 5 first-round estimates each, one has 4 and two have 6.

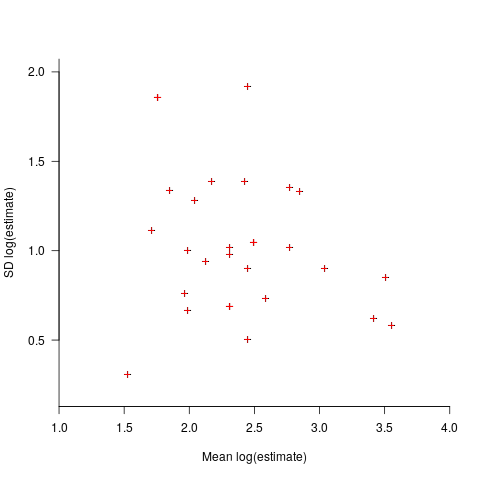

Workers have to pick one of five possible value as their estimate, with these values being roughly linear on a logarithmic scale, i.e., it is not possible to select an estimate from many possible large values, small values, or intermediate values. Unless most workers pick the same value, the standard deviation is likely to be large. Taking the logarithm of the estimate maps it to a linear scale, and the plot below shows the mean and standard deviation of the log of the estimates for each issue made during the first-round (code+data):

The wide spread in the standard deviations across a spread of mean values may be due to small sample size, or it may be real. The only way to find out is to rerun with larger sample sizes per issue.

Now it has been done once, this study needs to be run lots of times to measure the factors involved in the variability of developer estimates. What would be the impact of asking workers to make hourly estimates (they would not be anchored by experimenter specified values), or shifting the numeric values used for the categories (which probably have an anchoring effect)? Asking for an estimate to fix an issue in a large software system introduces the unknown of all kinds of dependencies, would estimates provided by workers who are already familiar with a project be consistently shifted up/down (compared to estimates made by those not familiar with the project)? The problem of unknown dependencies could be reduced by giving workers self-contained problems to estimate, e.g., the 3n+1 problem.

The crowdsourcing idea is interesting, but I don’t think it will scale, and I don’t see many companies making task specifications publicly available.

To mimic actual usage, research on planning poker (which appears to have non-trivial usage) needs to ensure that the people making the estimates are involved during all iterations. What is needed is a dataset of lots of planning poker estimates. Please let me know if you know of one.

Claiming that software is AI based is about to become expensive

The European Commission is updating the EU Machinery Directive, which covers the sale of machinery products within the EU. The updates include wording to deal with intelligent robots, and what the commission calls AI software (contained in machinery products).

The purpose of the initiative is to: “… (i) ensuring a high level of safety and protection for users of machinery and other people exposed to it; and (ii) establishing a high level of trust in digital innovative technologies for consumers and users, …”

What is AI software, and how is it different from non-AI software?

Answering these questions requires knowing what is, and is not, AI. The EU defines Artificial Intelligence as:

- ‘AI system’ means a system that is either software-based or embedded in hardware devices, and that displays behaviour simulating intelligence by, inter alia, collecting and processing data, analysing and interpreting its environment, and by taking action, with some degree of autonomy, to achieve specific goals;

- ‘autonomous’ means an AI system that operates by interpreting certain input, and by using a set of predetermined instructions, without being limited to such instructions, despite the system’s behaviour being constrained by and targeted at fulfilling the goal it was given and other relevant design choices made by its developer;

‘Simulating intelligence’ sounds reasonable, but actually just moves the problem on, to defining what is, or is not, intelligence. If intelligence is judged on an activity by activity bases, will self-driving cars be required to have the avoidance skills of a fly, while other activities might have to be on par with those of birds? There is a commission working document that defines: “Autonomous AI, or artificial super intelligence (ASI), is where AI surpasses human intelligence across all fields.”

The ‘autonomous’ component of the definition is so broad that it covers a wide range of programs that are not currently considered to be AI based.

The impact of the proposed update is that machinery products containing AI software are going to incur expensive conformance costs, which products containing non-AI software won’t have to pay.

Today it does not cost companies to claim that their systems are AI based. This will obviously change when a significant cost is involved. There is a parallel here with companies that used to claim that their beauty products provided medical benefits; the Federal Food and Drug Administration started requiring companies making such claims to submit their products to the new drug approval process (which is hideously expensive), companies switched to claiming their products provided “… the appearance of …”.

How are vendors likely to respond to the much higher costs involved in selling products that are considered to contain ‘AI software’?

Those involved in the development of products labelled as ‘safety critical’ try to prevent costs escalating by minimizing the amount of software treated as ‘safety critical’. Some of the arguments made for why some software is/is not considered safety critical can appear contrived (at least to me). It will be entertaining watching vendors, who once shouted “our products are AI based”, switching to arguing that only a tiny proportion of the code is actually AI based.

A mega-corp interested in having their ‘AI software’ adopted as an industry standard could fund the work necessary for the library/tool to be compliant with the EU directives. The cost of initial compliance might be within reach of smaller companies, but the cost of maintaining compliance as the product evolves is something that only a large company is likely to be able to afford.

The EU’s updating of its machinery directive is the first step towards formalising a legal definition of intelligence. Many years from now there will be a legal case that creates what later generation will consider to be the first legally accepted definition.

Software engineering experiments: sell the idea, not the results

A new paper investigates “… the feasibility of stealthily introducing vulnerabilities in OSS via hypocrite commits (i.e., seemingly beneficial commits that in fact introduce other critical issues).” Their chosen Open source project was the Linux kernel, and they submitted three patches to the kernel review process.

This interesting idea blew up in their faces, when the kernel developers deduced that they were being experimented on (they obviously don’t have a friend on the inside). The authors have come out dodging and weaving.

What can be learned by reading the paper?

Firstly, three ‘hypocrite commits’ is not enough submissions to do any meaningful statistical analysis. I suspect it’s a convenience sample, a common occurrence in software engineering research. The authors sell three as a proof-of-concept.

How many of the submitted patches passed the kernel review process?

The paper does not say. The first eight pages provide an introduction to the Open source development model, the threat model for introducing vulnerabilities, and the characteristics of vulnerabilities that have been introduced (presumably by accident). This is followed by 2.5 pages of background and setup of the experiment (labelled as a proof-of-concept).

The paper then switches (section VII) to discussing a different, but related, topic: the lifetime of (unintended) vulnerabilities in patches that had been accepted (which I think should have been the topic of the paper. This interesting discussion is 1.5 pages; also see The life and death of statically detected vulnerabilities: An empirical study, covered in figure 6.9 in my book.

The last two pages discuss mitigation, related work, and conclusion (“…a proof-of-concept to safely demonstrate the practicality of hypocrite commits, and measured and quantified the risks.”; three submissions is not hard to measure and quantify, but the results are not to be found in the paper).

Having the paper provide the results (i.e., all three commits spotted, and a very negative response by those being experimented on) would have increased the chances of negative reviewer comments.

Over the past few years I have started noticing this kind of structure in software engineering papers, i.e., extended discussion of an interesting idea, setup of experiment, and cursory or no discussion of results. Many researchers are willing to spend lots of time discussing their ideas, but are unwilling to invest much time in the practicalities of testing them. Some reviewers (who decide whether a paper is accepted to publication) don’t see anything wrong with this approach, e.g., they accept these kinds of papers.

Software engineering research remains a culture of interesting ideas, with evidence being an optional add-on.

Another nail for the coffin of past effort estimation research

Programs are built from lines of code written by programmers. Lines of code played a starring role in many early effort estimation techniques (section 5.3.1 of my book). Why would anybody think that it was even possible to accurately estimate the number of lines of code needed to implement a library/program, let alone use it for estimating effort?

Until recently, say up to the early 1990s, there were lots of different computer systems, some with multiple (incompatible’ish) operating systems, almost non-existent selection of non-vendor supplied libraries/packages, and programs providing more-or-less the same functionality were written more-or-less from scratch by different people/teams. People knew people who had done it before, or even done it before themselves, so information on lines of code was available.

The numeric values for the parameters appearing in models were obtained by fitting data on recorded effort and lines needed to implement various programs (63 sets of values, one for each of the 63 programs in the case of COCOMO).

How accurate is estimated lines of code likely to be (this estimate will be plugged into a model fitted using actual lines of code)?

I’m not asking about the accuracy of effort estimates calculated using techniques based on lines of code; studies repeatedly show very poor accuracy.

There is data showing that different people implement the same functionality with programs containing a wide range of number of lines of code, e.g., the 3n+1 problem.

I recently discovered, tucked away in a dataset I had previously analyzed, developer estimates of the number of lines of code they expected to add/modify/delete to implement some functionality, along with the actuals.

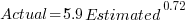

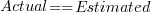

The following plot shows estimated added+modified lines of code against actual, for 2,692 tasks. The fitted regression line, in red, is:  (the standard error on the exponent is

(the standard error on the exponent is  ), the green line shows

), the green line shows  (code+data):

(code+data):

The fitted red line, for lines of code, shows the pattern commonly seen with effort estimation, i.e., underestimating small values and over estimating large values; but there is a much wider spread of actuals, and the cross-over point is much further up (if estimates below 50-lines are excluded, the exponent increases to 0.92, and the intercept decreases to 2, and the line shifts a bit.). The vertical river of actuals either side of the 10-LOC estimate looks very odd (estimating such small values happen when people estimate everything).

My article pointing out that software effort estimation is mostly fake research has been widely read (it appears in the first three results returned by a Google search on software fake research). The early researchers did some real research to build these models, but later researchers have been blindly following the early ‘prophets’ (i.e., later research is fake).

Lines of code probably does have an impact on effort, but estimating lines of code is a fool’s errand, and plugging estimates into models built from actuals is just crazy.

Pricing by quantity of source code

Software tool vendors have traditionally licensed their software on a per-seat basis, e.g., the cost increases with the number of concurrent users. Per-seat licensing works well when there is substantial user interaction, because the usage time is long enough for concurrent usage to build up. When a tool can be run non-interactively in the cloud, its use is effectively instantaneous. For instance, a tool that checks source code for suspicious constructs. Charging by lines of code processed is a pricing model used by some tool vendors.

Charging by lines of code processed creates an incentive to reduce the number of lines. This incentive was once very common, when screens supporting 24 lines of 80 characters were considered a luxury, or the BASIC interpreter limited programs to 1023 lines, or a hobby computer used a TV for its screen (a ‘tiny’ CRT screen, not a big flat one).

It’s easy enough to splice adjacent lines together, and halve the cost. Well, ease of splicing depends on programming language; various edge cases have to be handled (somebody is bound to write a tool that does a good job).

How does the tool vendor respond to a (potential) halving of their revenue?

Blindly splicing pairs of lines creates some easily detectable patterns in the generated source. In fact, some of these patterns are likely to be flagged as suspicious, e.g., if (x) a=1;b=2; (did the developer forget to bracket the two statements with { }).

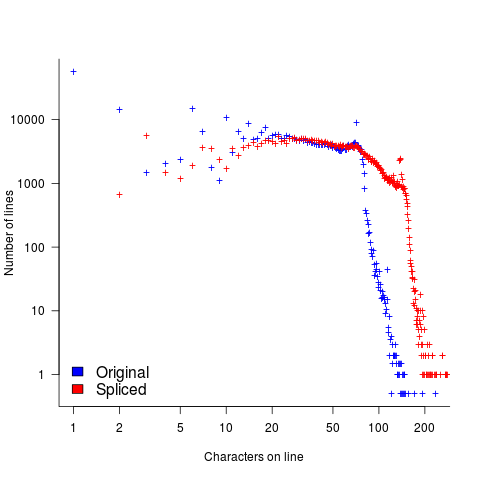

The plot below shows the number of lines in gcc 2.95 containing a given number of characters (left, including indentation), and the same count after even-numbered lines (with leading whitespace removed) have been appended to odd-numbered lines (code+data, this version of gcc was using in my C book):

The obvious change is the introduction of a third straight’ish line segment (the increase in the offset of the sharp decline might be explained away as a consequence of developers using wider windows). By only slicing the ‘right’ pairs of lines together, the obvious patterns won’t be present.

Using lines of codes for pricing has the advantage of being easy to explain to management, the people who sign off the expense, who might not know much about source code. There are other metrics that are much harder for developers to game. Counting tokens is the obvious one, but has developer perception issues: Brackets, both round and curly. In the grand scheme of things, the use/non-use of brackets where they are optional has a minor impact on the token count, but brackets have an oversized presence in developer’s psyche.

Counting identifiers avoids the brackets issue, along with other developer perceptions associated with punctuation tokens, e.g., a null statement in an else arm.

If the amount charged is low enough, social pressure comes into play. Would you want to work for a company that penny pinches to save such a small amount of money?

As a former tool vendor, I’m strongly in favour of tool vendors making a healthy profit.

Creating an effective static analysis requires paying lots of attention to lots of details, which is very time-consuming. There are lots of not particularly good Open source tools out there; the implementers did all the interesting stuff, and then moved on. I know of several groups who got together to build tools for Java when it started to take-off in the mid-90s. When they went to market, they quickly found out that Java developers expected their tools to be free, and would not pay for claimed better versions. By making good enough Java tools freely available, Sun killed the commercial market for sales of Java tools (some companies used their own tools as a unique component of their consulting or service offerings).

Could vendors charge by the number of problems found in the code? This would create an incentive for them to report trivial issues, or be overly pessimistic about flagging issues that could occur (rather than will occur).

Why try selling a tool, why not offer a service selling issues found in code?

Back in the day a living could be made by offering a go-faster service, i.e., turn up at a company and reduce the usage cost of a company’s applications, or reducing the turn-around time (e.g., getting the daily management numbers to appear in less than 24-hours). This was back when mainframes ruled the computing world, and usage costs could be eye-watering.

Some companies offer bug-bounties to the first person reporting a serious vulnerability. These public offers are only viable when the source is publicly available.

There are companies who offer a code review service. Having people review code is very expensive; tools are good at finding certain kinds of problem, and investing in tools makes sense for companies looking to reduce review turn-around time, along with checking for more issues.

The first computer I owned

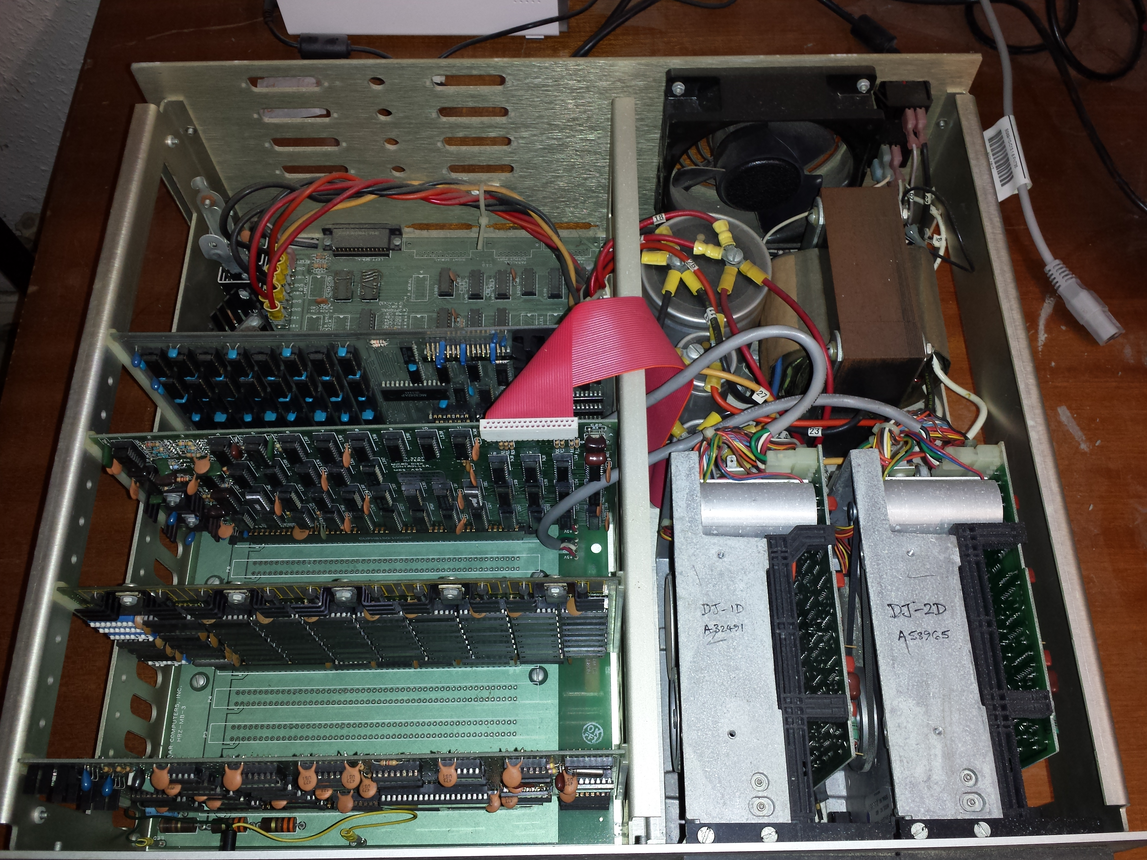

The first computer I owned was a North Star Horizon. I bought it in kit form, which meant bags of capacitors, resistors, transistors, chips, printed circuit boards, etc, along with the circuit diagrams for each board. These all had to be soldered in the right holes, the chips socketed (no surface mount soldering for such a low volume system), and wires connected. I was amazed when the system booted the first time I powered it up; debugging with the very basic equipment I had would have been a nightmare. The only missing component was the power supply transformer, and a trip to the London-based supplier sorted that out. I saved a months’ salary by building the kit (which cost me 4-months salary, and I was one of the highest paid people in my circle).

The few individuals who bought a computer in the late 1970s bought either a Horizon or a Commodore Pet (which was more expensive, but came with an integrated monitor and keyboard). Computer ownership really started to take off when the BBC micro came along at the end of 1981, and could be bought for less than a months’ salary (at least for a white-collar worker).

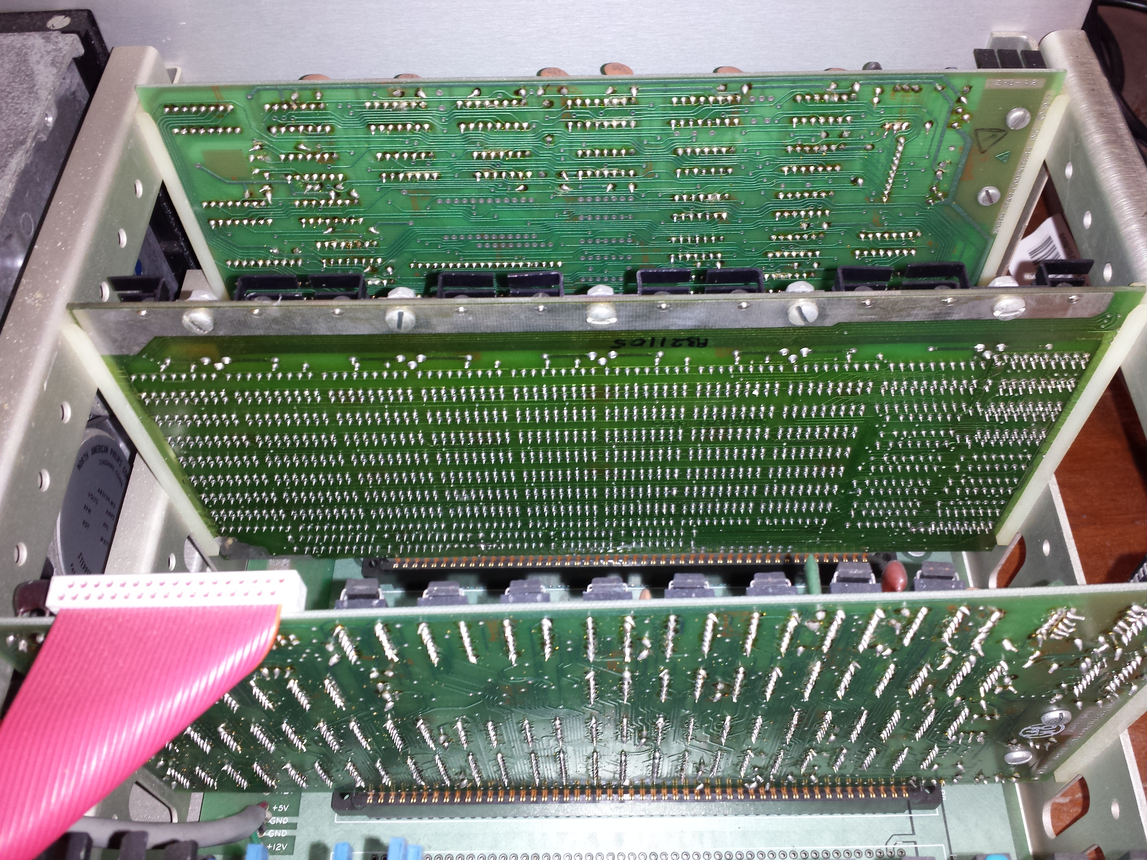

My Horizon contained a Z80A clocking at 4MHz, 32K of RAM, and two 5 1/4-inch floppy drives (each holding 360K; the Wikipedia article says the drives held 90K, mine {according to the labels on the floppies, MD 525-10} are 40-track, 10-sector, double density). I later bought another 32K of memory; the system ROM was at 56K, and contained 4K of code, various tricks allowed the 4K above 60K to be used (the consistent quality of the soldering on one of the boards below identifies the non-hand built board).

The OS that came with the system was CP/M, renamed to CP/M-80 when the Intel 8086 came along, and will be familiar to anybody used to working with early versions of MS-DOS.

As a fan of Pascal, my development environment of choice was UCSD Pascal. The C compiler of choice was BDS C.

Horizon owners are total computer people 🙂 An emulator, running under Linux and capable of running Horizon disk images, is available for those wanting a taste of being a Horizon owner. I didn’t see any mention of audio emulation in the documentation; clicks and whirls from the floppy drive were a good way of monitoring compile progress without needing to look at the screen (not content with using our Horizon’s at home, another Horizon owner and I implemented a Horizon emulator in Fortran, running on the University’s Prime computers). I wonder how many Nobel-prize winners did their calculations on a Horizon?

The Horizon spec needs to be appreciated in the context of its time. When I worked in application support at the University of Surrey, users had a default file allocation of around 100K’ish (memory is foggy). So being able to store stuff on a 360K floppy, which could be purchased in boxes of 10, was a big deal. The mainframe/minicomputers of the day were available with single-digit megabytes, but many previous generation systems had under 100K of RAM. There were lots of programs out there still running in 64K. In terms of cpu power, nearly all existing systems were multi-user, and a less powerful, single-user, cpu beats sharing a more powerful cpu with 10-100 people.

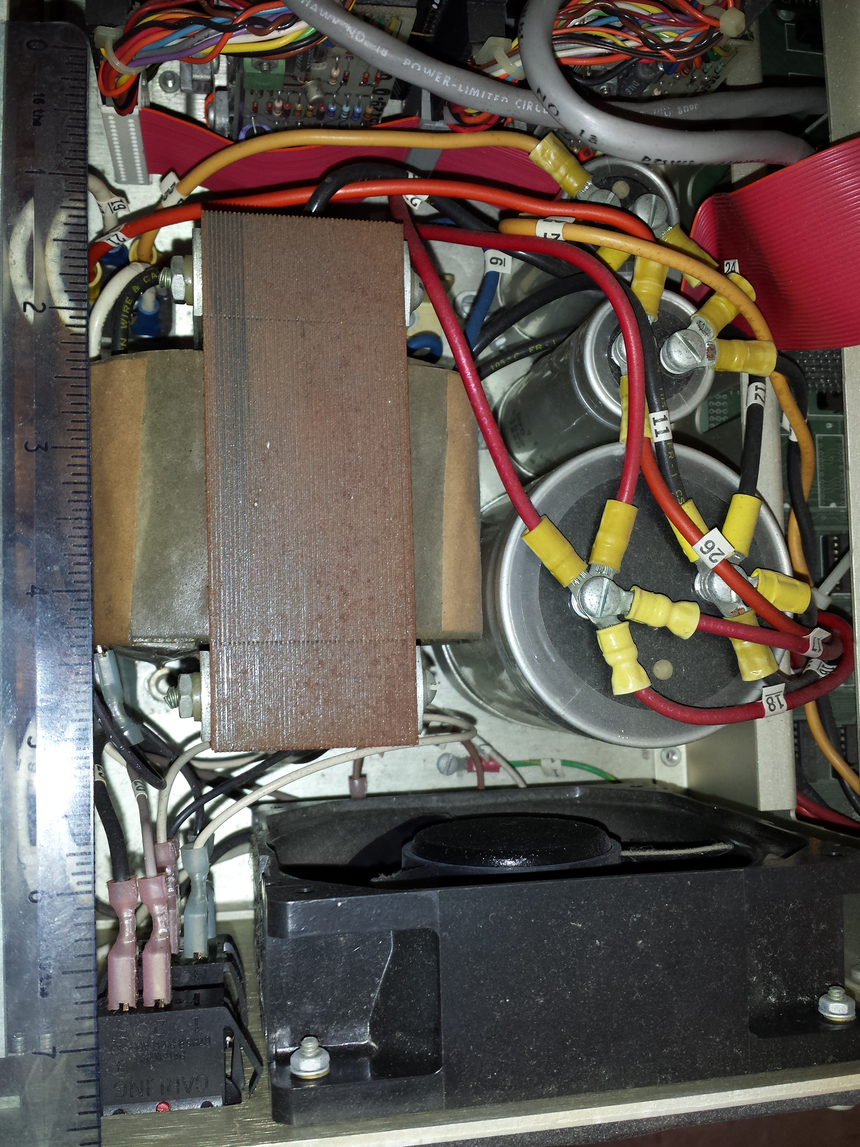

In terms of sheer weight, visual appearance and electrical clout, the Horizon power supply far exceeds those seen in today’s computers, which look tame by comparison (two of those capacitors are 4-inches tall):

My Horizon has been sitting in the garage for 32-years, and tucked away in unused rooms for years before that. The main problem with finding out whether it still works is finding a device to connect to the 25-pin serial port. I have an old PC with a 9-pin serial port, but I have spent enough of my life fiddling around with serial-port cables and Kermit to be content trying a simpler approach. I connect the power supply and switched it on. There was a loud crack and a flash on the disk-controller board; probably a tantalum capacitor giving up the ghost (easy enough to replace). The primary floppy drive did spin up and shutdown after some seconds (as expected), but the internal floppy engagement arm (probably not its real name) does not swing free when I open the bay door (so I cannot insert a floppy).

I am hoping to find a home for it in a computer museum, and have emailed the two closest museums. If these museums are not interested, the first person to knock on my door can take it away, along with manuals and floppies.

Update: This North Star can now be seen at the Retro Computer Museum.

Linux has a sleeper agent working as a core developer

The latest news from Wikileaks, that GCHQ, the UK’s signal intelligence agency, has a sleeper agent working as a trusted member of the Linux kernel core development team should not come as a surprise to anybody.

The Linux kernel is embedded as a core component inside many critical systems; the kind of systems that intelligence agencies and other organizations would like full access.

The open nature of Linux kernel development makes it very difficult to surreptitiously introduce a hidden vulnerability. A friendly gatekeeper on the core developer team is needed.

In the Open source world, trust is built up through years of dedicated work. Funding the right developer to spend many years doing solid work on the Linux kernel is a worthwhile investment. Such a person eventually reaches a position where the updates they claim to have scrutinized are accepted into the codebase without a second look.

The need for the agent to maintain plausible deniability requires an arm’s length approach, and the GCHQ team made a wise choice in targeting device drivers as cost-effective propagators of hidden weaknesses.

Writing a device driver requires the kinds of specific know-how that is not widely available. A device driver written by somebody new to the kernel world is not suspicious. The sleeper agent has deniability in that they did not write the code, they simply ‘failed’ to spot a well hidden vulnerability.

Lack of know-how means that the software for a new device is often created by cutting-and-pasting code from an existing driver for a similar chip set, i.e., once a vulnerability has been inserted it is likely to propagate.

Perhaps it’s my lack of knowledge of clandestine control of third-party computers, but the leak reveals the GCHQ team having an obsession with state machines controlled by pseudo random inputs.

With their background in code breaking, I appreciate that GCHQ have lots of expertise to throw at doing clever things with pseudo random numbers (other than introducing subtle flaws in public key encryption).

What about the possibility of introducing non-random patterns in randomised storage layout algorithms (he says, waving his clueless arms around)?

Which of the core developers is most likely to be the sleeper agent? His codename, Basil Brush, suggests somebody from the boomer generation, or perhaps reflects some personal characteristic; it might also be intended to distract.

What steps need to be taken to prevent more sleeper agents joining the Linux kernel development team?

Requiring developers to provide a record of their financial history (say, 10-years worth), before being accepted as a core developer, will rule out many capable people. Also, this approach does not filter out ideologically motivated developers.

The world may have to accept that intelligence agencies are the future of major funding for widely used Open source projects.

Update

Turns out the sleeper agent was working on xz.

The aura of software quality

Bad money drives out good money, is a financial adage. The corresponding research adage might be “research hyperbole incentivizes more hyperbole”.

Software quality appears to be the most commonly studied problem in software engineering. The reason for this is that use of the term software quality imbues what is said with an aura of relevance; all that is needed is a willingness to assert that some measured attribute is a metric for software quality.

Using the term “software quality” to appear relevant is not limited to researchers; consultants, tool vendors and marketers are equally willing to attach “software quality” to whatever they are selling.

When reading a research paper, I usually hit the delete button as soon as the authors start talking about software quality. I get very irritated when what looks like an interesting paper starts spewing “software quality” nonsense.

The paper: A Family of Experiments on Test-Driven Development commits the ‘crime’ of framing what looks like an interesting experiment in terms of software quality. Because it looked interesting, and the data was available, I endured 12 pages of software quality marketing nonsense to find out how the authors had defined this term (the percentage of tests passed), and get to the point where I could start learning about the experiments.

While the experiments were interesting, a multi-site effort and just the kind of thing others should be doing, the results were hardly earth-shattering (the experimental setup was dictated by the practicalities of obtaining the data). I understand why the authors felt the need for some hyperbole (but 12-pages). I hope they continue with this work (with less hyperbole).

Anybody skimming the software engineering research literature will be dazed by the number and range of factors appearing to play a major role in software quality. Once they realize that “software quality” is actually a meaningless marketing term, they are back to knowing nothing. Every paper has to be read to figure out what definition is being used for “software quality”; reading a paper’s abstract does not provide the needed information. This is a nightmare for anybody seeking some understanding of what is known about software engineering.

When writing my evidence-based software engineering book I was very careful to stay away from the term “software quality” (one paper on perceptions of software product quality is discussed, and there are around 35 occurrences of the word “quality”).

People in industry are very interested in software quality, and sometimes they have the confusing experience of talking to me about it. My first response, on being asked about software quality, is to ask what the questioner means by software quality. After letting them fumble around for 10 seconds or so, trying to articulate an answer, I offer several possibilities (which they are often not happy with). Then I explain how “software quality” is a meaningless marketing term. This leaves them confused and unhappy. People have a yearning for software quality which makes them easy prey for the snake-oil salesmen.

Software engineering research problems having worthwhile benefits

Which software engineering research problems are likely to yield good-enough solutions that provide worthwhile benefits to professional software developers?

I can think of two (hopefully there are more):

- what is the lifecycle of software? For instance, the expected time-span of the active use of its various components, and the evolution of its dependency ecosystem,

- a model of the main processes involved in a software development project.

Solving problems requires data, and I think it is practical to collect the data needed to solve these two problems; here is some: application lifetime data, and detailed project data (a lot more is needed).

Once a good-enough solution is available, its practical application needs to provide a worthwhile benefit to the customer (when I was in the optimizing compiler business, I found that many customers were not interested in more compact code unless the executable was at least a 10% smaller; this was the era of computer memory often measured in kilobytes).

Investment decisions require information about what is likely to happen in the future, and an understanding of common software lifecycles is needed. The fact that most source code has a brief existence (a few years) and is rarely modified by somebody other than the original author, has obvious implications for investment decisions intended to reduce future maintenance costs.

Running a software development project requires an understanding of the processes involved. This knowledge is currently acquired by working on projects managed by people who have successfully done it before. A good-enough model is not going to replace the need for previous experience, some amount of experience is always going to be needed, but it will provide an effective way of understanding what is going on. There are probably lots of different good-enough ways of running a project, and I’m not expecting there to be a one-true-way of optimally running a project.

Perhaps the defining characteristic of the solution to both of these problems is lots of replication data.

Applications are developed in many ecosystems, and there is likely to be variations between the lifecycles that occur in different ecosystems. Researchers tend to focus on Github because it is easily accessible, which is no good when replications from many ecosystems are needed (an analysis of Github source lifetime has been done).

Projects come in various shapes and sizes, and a good-enough model needs to handle all the combinations that regularly occur. Project level data is not really present on Github, so researchers need to get out from behind their computers and visit real companies.

Given the payback time-frame for software engineering research, there are problems which are not cost-effective to attempt to answer. Suggestions for other software engineering problems likely to be worthwhile trying to solve welcome.

Recent Comments