
Documentation as a signal of program size

Developers and researchers invariably measure program size in lines of code, while senior managers measure by resources consumed per accounting period, e.g., money and people.

What size signals are visible to the users of a program?

Before CDs became generally available at the start of the 1990s, software for desktop computers was delivered on floppy discs, which did not have the capacity (128K to 1.4M) to hold documentation; the documentation was distributed in printed book form.

For instance, the first version of Turbo Pascal came with one 5¼-inch floppy (the compiler+IDE occupied 28K) and a 276-page reference manual.

Today, people are familiar with the intangible nature of software. In previous ages, people wanted to see and feel something for their money, and printed manuals were the substance they received (some products attached the floppies inside the back covers). Physical manuals were also thought to reduce software piracy (when CDs arrived, there was lots of hand-wringing over including electronic manuals).

Microsoft Windows bucked the trend, being distributed with almost no physical paper but many floppies: 13 3½-inch floppies for the initial upgrade to Windows 95, and 26 for Service Release 2 (oh, the fun of spending an afternoon swapping disks to rebuild a machine). Microsoft Office 97 standard edition was available on 45 floppies, the professional edition on 55.

The problem with distributing manuals in printed book form is that updates are costly; customers need a whole new book and the existing inventory needs to be scrapped. Documentation for mainframes, minicomputers and workstations came in ring binders, allowing updates on an individual page basis. The Sun 4 that arrived at my office (to have a COBOL code generator written for the SPARC cpu) came with around three feet of ring binders. I have seen offices with a wall of shelves filled with vendor ring binders.

Is there any correlation between a project’s lines of code and the pages of its documentation?

Most developers hate writing documentation; readmes don’t count. This means that only (well) funded development projects are likely to pay for an author to produce some amount of non-trivial documentation (a widely used application eventually attracts an external author interested in explaining things). Some Open source projects do contain files believed to be documentation; documentation research is primarily focused on accuracy (see section 6.4.4).

The only data I am aware of containing LOC, documentation page counts, and development man months is the 1979 paper *The Characteristics of Large Systems* by Belady and Lehman, which lists values for 37 “… independent programs developed in a large software house.” How much of the documentation was user focused, requirements+business logic, or developer focused? I have no idea (a fitted regression model, code+data, shows an almost linear relationship between LOC and document pages). Tests are not broken out as a separate item (code, documentation, not recorded?).

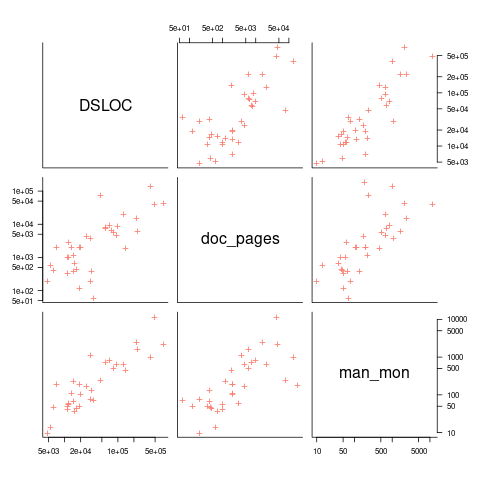

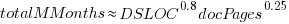

The plot below shows delivered: source lines of code, documentation pages, and total man months, x/y-axis both using log scales (code+data):

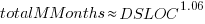

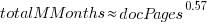

The total man months of implementation for each project is taken up by writing the code and documentation. The following equation is a good fit (explaining just 80% of the variance; code+data): [equation], but is only slightly better than [equation]. Given the high correlation between [variable] and [variable], including both in the same model is probably not a good idea (the equation: [equation] explains just over 50% of the variance).
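For readers who want to try this kind of fit themselves, here is a minimal sketch of fitting a power law, effort ≈ a × size^b, by ordinary least squares on log-transformed values, which is what a straight line on a log-log plot corresponds to. It is not the code behind the analysis above: the function name `fit_power_law` is my own, and the numbers are invented purely for illustration; they are NOT the Belady and Lehman data.

```python
import math


def fit_power_law(xs, ys):
    """Fit y = a * x**b by least squares on (log x, log y).

    Returns (a, b, r2), where r2 is the fraction of variance
    explained on the log scale.
    """
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(lx)
    mean_x = sum(lx) / n
    mean_y = sum(ly) / n

    # Slope and intercept of the straight line through the logged points.
    sxx = sum((x - mean_x) ** 2 for x in lx)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(lx, ly))
    b = sxy / sxx
    log_a = mean_y - b * mean_x

    # Variance explained, measured on the log scale.
    ss_tot = sum((y - mean_y) ** 2 for y in ly)
    ss_res = sum((y - (log_a + b * x)) ** 2 for x, y in zip(lx, ly))
    r2 = 1 - ss_res / ss_tot

    return math.exp(log_a), b, r2


# Hypothetical values for illustration only (not from the 1979 paper).
doc_pages = [200, 450, 900, 1500, 3000, 5200]
man_months = [30, 55, 100, 150, 280, 420]

a, b, r2 = fit_power_law(doc_pages, man_months)
print(f"man_months ~ {a:.2f} * doc_pages^{b:.2f}  (R^2 on log scale: {r2:.2f})")
```

Swapping `doc_pages` for a list of DSLOC values gives the competing single-variable model, and comparing the two `r2` values is one rough way of seeing which explanatory variable does better.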

There are a few possible outliers in the data. Perhaps removing these would make the picture clearer.

For me, what stands out, compared to today’s projects, is the relatively low DSLOC (a few tens of thousands) and high pages of documentation (thousands). Projects could be smaller/simpler in the 1970s because they were often replacing humans doing the work, not previously written systems; or, perhaps projects were limited by available computer memory, often well under a megabyte. Perhaps I think the page count is high because I don’t have an accurate idea of how much online documentation is created these days.

The first computer I owned

The first computer I owned was a North Star Horizon. I bought it in kit form, which meant bags of capacitors, resistors, transistors, chips, printed circuit boards, etc., along with the circuit diagrams for each board. These all had to be soldered in the right holes, the chips socketed (no surface mount soldering for such a low volume system), and wires connected. I was amazed when the system booted the first time I powered it up; debugging with the very basic equipment I had would have been a nightmare. The only missing component was the power supply transformer, and a trip to the London-based supplier sorted that out. I saved a month’s salary by building the kit (which cost me four months’ salary, and I was one of the highest paid people in my circle).

The few individuals who bought a computer in the late 1970s bought either a Horizon or a Commodore PET (which was more expensive, but came with an integrated monitor and keyboard). Computer ownership really started to take off when the BBC Micro came along at the end of 1981, and could be bought for less than a month’s salary (at least for a white-collar worker).

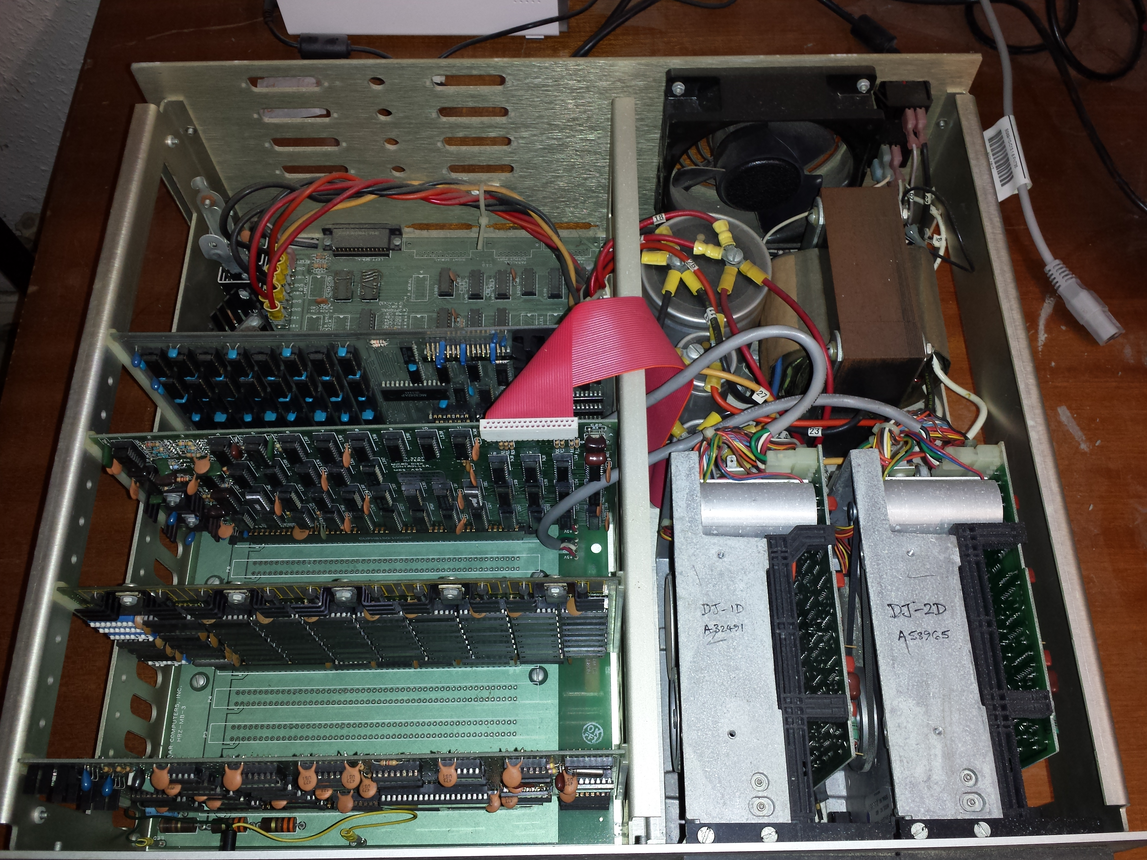

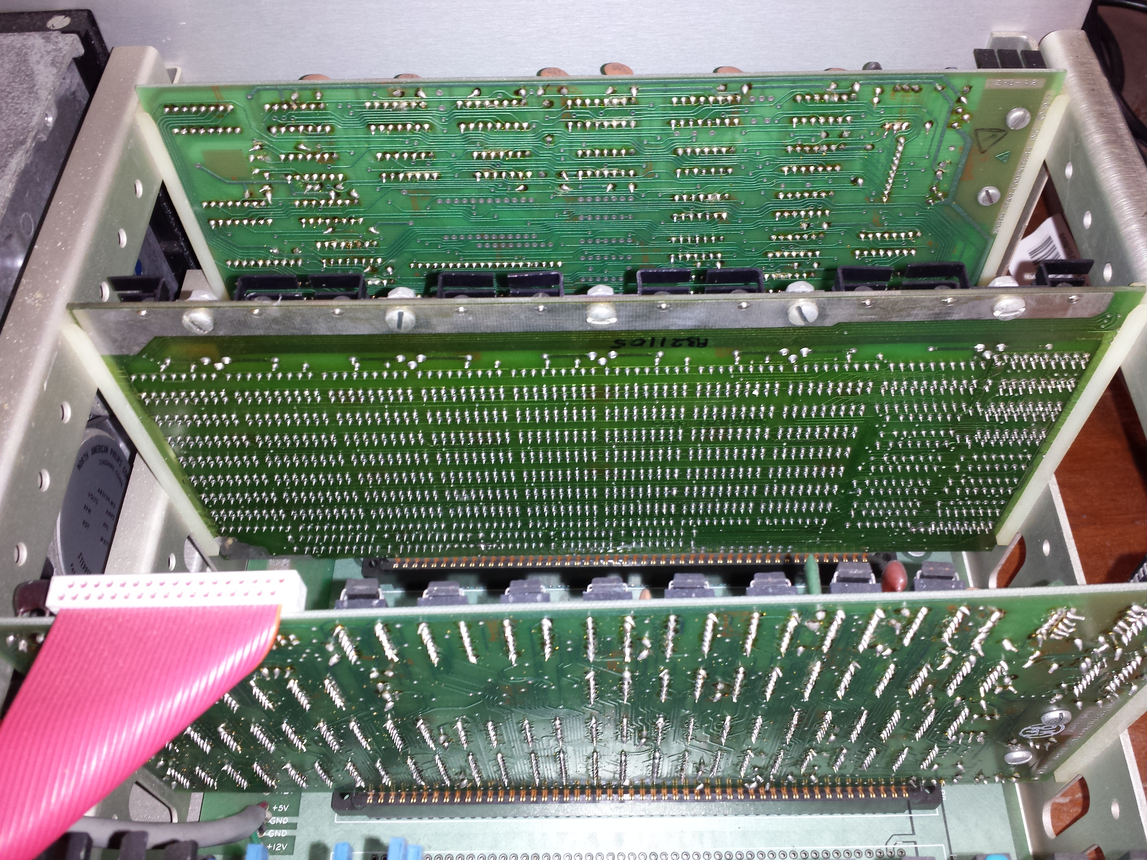

My Horizon contained a Z80A clocking at 4MHz, 32K of RAM, and two 5¼-inch floppy drives (each holding 360K; the Wikipedia article says the drives held 90K, mine {according to the labels on the floppies, MD 525-10} are 40-track, 10-sector, double density). I later bought another 32K of memory; the system ROM was at 56K, and contained 4K of code; various tricks allowed the 4K above 60K to be used (the consistent quality of the soldering on one of the boards below identifies the non-hand built board).

The OS that came with the system was CP/M, renamed to CP/M-80 when the Intel 8086 came along, and will be familiar to anybody used to working with early versions of MS-DOS.

As a fan of Pascal, my development environment of choice was UCSD Pascal. The C compiler of choice was BDS C.

Horizon owners are total computer people 🙂 An emulator, running under Linux and capable of running Horizon disk images, is available for those wanting a taste of being a Horizon owner. I didn’t see any mention of audio emulation in the documentation; clicks and whirrs from the floppy drive were a good way of monitoring compile progress without needing to look at the screen (not content with using our Horizons at home, another Horizon owner and I implemented a Horizon emulator in Fortran, running on the University’s Prime computers). I wonder how many Nobel-prize winners did their calculations on a Horizon?

The Horizon spec needs to be appreciated in the context of its time. When I worked in application support at the University of Surrey, users had a default file allocation of around 100K (memory is foggy). So being able to store stuff on a 360K floppy, which could be purchased in boxes of 10, was a big deal. The mainframe/minicomputers of the day were available with single-digit megabytes of memory, but many previous generation systems had under 100K of RAM. There were lots of programs out there still running in 64K. In terms of cpu power, nearly all existing systems were multi-user, and a less powerful, single-user cpu beats sharing a more powerful cpu with 10-100 people.

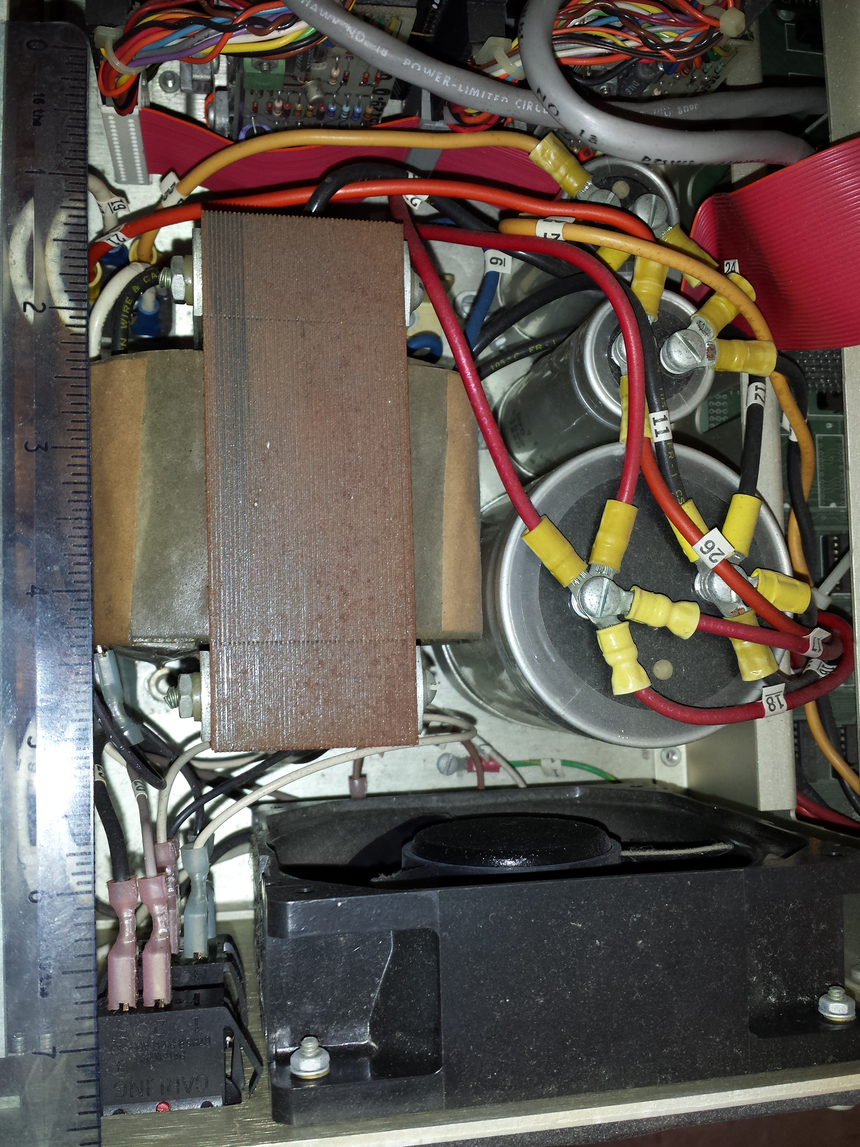

In terms of sheer weight, visual appearance and electrical clout, the Horizon power supply far exceeds those seen in today’s computers, which look tame by comparison (two of those capacitors are 4-inches tall):

My Horizon has been sitting in the garage for 32 years, and was tucked away in unused rooms for years before that. The main problem with finding out whether it still works is finding a device to connect to the 25-pin serial port. I have an old PC with a 9-pin serial port, but I have spent enough of my life fiddling around with serial-port cables and Kermit to be content trying a simpler approach. I connected the power supply and switched it on. There was a loud crack and a flash on the disk-controller board; probably a tantalum capacitor giving up the ghost (easy enough to replace). The primary floppy drive did spin up and shut down after some seconds (as expected), but the internal floppy engagement arm (probably not its real name) does not swing free when I open the bay door (so I cannot insert a floppy).

I am hoping to find a home for it in a computer museum, and have emailed the two closest museums. If these museums are not interested, the first person to knock on my door can take it away, along with manuals and floppies.

Update: This North Star can now be seen at the Retro Computer Museum.
