
Median system cpu clock frequency over last 15 years

We are all familiar with graphs showing the growth of cpu clock frequency over time. The data for these plots is based on vendor announcements listing the characteristics of their latest products, and invariably focuses on the product that is the fastest, contains the most transistors, or has the lowest power consumption.

Some customers buy the cpu with the highest/most/lowest, but many are happy to pay less for good enough. What does a graph of average customer cpu clock frequency over time look like?

Vendors sometimes publish general sales figures, but I have never seen any broken down by clock frequency. However, a few sites collect user system data, including:

- A subset of the Linux Counter project data is available. This does not contain explicit date information, but a must-be-later-than date can be inferred from the listed Linux kernel version,

- Hardware for BSD has data going back to December 2014, but there is no obvious way to extract it (I have not tried that hard),

- the BSDstats project (variable website availability) has been collecting data on machines running some derivative of BSD since August 2008; it contains around 200 times more cpu data than the known Linux Counter data. While the raw data is not available, approximately monthly reports are available on the Wayback Machine.

A BSDstats cpu history was obtained using waybackpack to download the available stored cpu summary pages, followed by html2text, and an awk script to extract the cpu frequency/count data.

BSDstats obtains the cpu information via a call to the sysctl command. For many Intel processors, but not AMD processors, the returned string includes the frequency (to see your cpu information on Linux systems type: more /proc/cpuinfo), for instance:

| cpu string | count |
|---|---|
| Celeron(R) CPU 2.80GHz | 336 |
| Pentium(R) 4 CPU 3.00GHz | 258 |
| Pentium(R) 4 CPU 2.40GHz | 170 |
| Athlon(tm) 64 Processor 3000+ | 43 |
| Athlon(tm) 64 X2 Dual Core Processor 4200+ | 28 |
| Athlon(tm) 64 Processor 3500+ | 27 |

For simplicity, only those rows containing frequency information were used in this analysis; 67% of the strings explicitly included a frequency (this saved me having to build a table to map AMD cpu strings to their corresponding frequency).
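The extraction step can be sketched in a few lines of Python. This is not the awk script used for the analysis; it is a minimal illustration that assumes each row has the form "cpu string | count", and the names parse_row and frequency_counts are hypothetical.

```python
import re
from collections import Counter

# Matches a clock frequency such as "2.80GHz" or "800MHz" inside a cpu string.
FREQ_RE = re.compile(r"(\d+(?:\.\d+)?)\s*([GM])Hz", re.IGNORECASE)


def parse_row(row):
    """Return (frequency in GHz, count), or None if the row has no frequency."""
    name, _, count = row.rpartition("|")
    match = FREQ_RE.search(name)
    if match is None or not count.strip().isdigit():
        return None  # e.g., AMD strings such as "Athlon(tm) 64 Processor 3000+"
    value = float(match.group(1))
    if match.group(2).upper() == "M":
        value /= 1000.0  # convert MHz to GHz
    return value, int(count)


def frequency_counts(rows):
    """Sum the system counts for each distinct frequency."""
    totals = Counter()
    for row in rows:
        parsed = parse_row(row)
        if parsed is not None:
            freq, count = parsed
            totals[freq] += count
    return totals


rows = [
    "Celeron(R) CPU 2.80GHz | 336",
    "Pentium(R) 4 CPU 3.00GHz | 258",
    "Pentium(R) 4 CPU 2.40GHz | 170",
    "Athlon(tm) 64 Processor 3000+ | 43",
]
print(frequency_counts(rows))
```

Rows without an explicit frequency are silently dropped, which mirrors the simplification described above.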

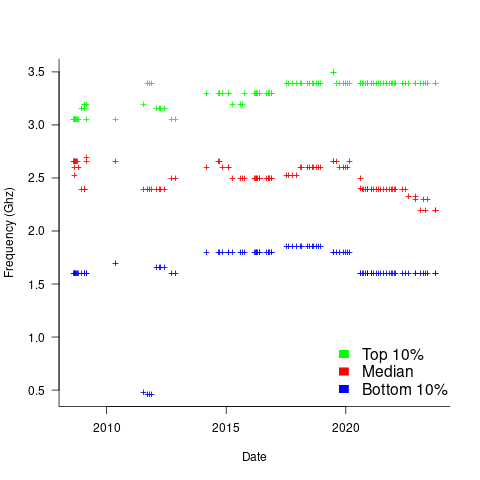

The plot below shows median cpu frequency (in red), along with the top/bottom 10% cpu frequencies, based on the Wayback Machine’s copy of the webpage on a given date, for a total of 2,304,446 cpu identities (code+data):

Broadly, the plot shows that cpu frequencies have remained essentially unchanged since 2008: systems running BSD have a median frequency of 2.5 GHz, with 10% of systems having a frequency over 3.5 GHz, and 10% a frequency below 1.5 GHz.
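Because each snapshot is a table of frequency/count pairs, the median and the 10%/90% points are weighted quantiles. A minimal sketch follows; the function name weighted_quantile and the snapshot values are made up for illustration, and are not the BSDstats data.

```python
def weighted_quantile(freq_counts, q):
    """Smallest frequency f such that at least a fraction q of systems run at <= f."""
    total = sum(freq_counts.values())
    if total == 0:
        raise ValueError("no systems in snapshot")
    running = 0
    for freq in sorted(freq_counts):
        running += freq_counts[freq]
        if running >= q * total:
            return freq
    return max(freq_counts)  # guard against floating-point shortfall


# Hypothetical snapshot: frequency in GHz -> number of systems reporting it.
snapshot = {1.6: 120, 2.4: 300, 2.8: 250, 3.0: 200, 3.6: 130}

for q in (0.1, 0.5, 0.9):
    print(q, weighted_quantile(snapshot, q))
```

Applying this to every snapshot date gives the three lines of a plot like the one above.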

I was surprised at how many different frequencies were present in the data; often over 50. A look at the large number of different versions of Intel x86 cpus suggests that this is to be expected.

How representative is this sample of BSD systems, compared to the many more systems running Linux and Windows?

This raises the question of what kinds of environments are being compared. Are these desktop systems, local or hosted clusters, cloud systems?

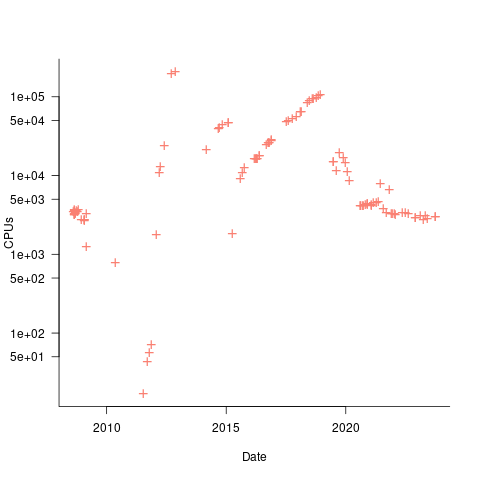

The plot below shows the total number of cpus summarised on each Wayback Machine snapshot (code+data):

A few thousand systems are likely to be personal desktop systems, while the tens of thousands are likely to be clusters or small cloud providers.

Pointers to more data, particularly pre-2000, most welcome.

Testing rounded data for a circular uniform distribution

Circular statistics deals with analysis of measurements made using a circular scale, e.g., minutes past the hour, days of the week. Wikipedia uses the term directional statistics, the traditional use being measurements of angles, e.g., wind direction.

Package support for circular statistics is rather thin on the ground. R’s circular package is one of the best, and the book “Circular Statistics in R” provides the best introduction to the subject.

Circular statistics has a few surprises for those new to the subject (apart from a few name changes, e.g., the von Mises distribution is effectively the ‘circular Normal distribution’), including:

- the mean value contains two components, a direction and a length, e.g., mean wind direction and strength,

- there are several definitions of variance, with angular variance having a value between 0 and 2, and circular variance having a value between 0 and 1. The circular standard deviation is not the square root of variance, but rather:

$\sqrt{-2\ln \bar{R}}$, where $\bar{R}$ is the mean length.
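The quantities in the list above can be computed directly from a set of angles. The following is a minimal Python sketch (not the circular package's implementation); the function name circular_summary is hypothetical, and angles are assumed to be in radians.

```python
import math


def circular_summary(angles):
    """Summary statistics for a non-empty list of angles, in radians."""
    n = len(angles)
    c = sum(math.cos(a) for a in angles) / n
    s = sum(math.sin(a) for a in angles) / n
    # Mean length; clamp to 1 to absorb floating-point overshoot.
    r = min(1.0, math.hypot(c, s))
    return {
        "mean_direction": math.atan2(s, c) % (2 * math.pi),
        "mean_length": r,
        "circular_variance": 1 - r,       # between 0 and 1
        "angular_variance": 2 * (1 - r),  # between 0 and 2
        "circular_sd": math.sqrt(max(0.0, -2 * math.log(r))) if r > 0 else math.inf,
    }


# Two wind directions either side of north: 350 and 10 degrees.
wind = [math.radians(350), math.radians(10)]
print(circular_summary(wind))
```

The arithmetic mean of 350 and 10 is 180, i.e., due south; the circular mean direction is north (0 degrees, up to rounding), with a mean length of cos(10°).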

The basic techniques used in circular statistics are still relatively new, compared to the more well known basic statistical techniques. For instance, it was recently discovered that having more measurements may reduce the reliability of the Rao spacing test (used to test whether a sample has a uniform circular distribution); generally, having more measurements improves the reliability of a statistical test.

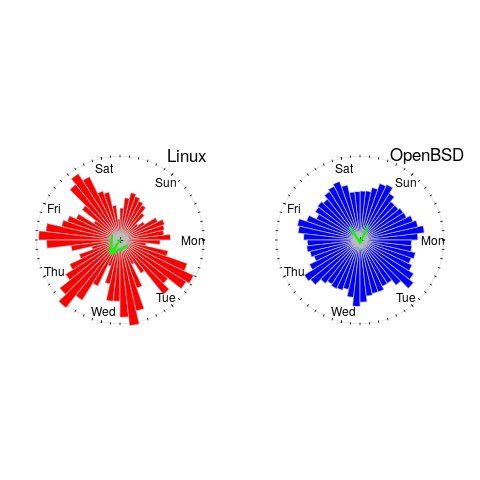

The plot below shows Rose diagrams for the number of commits in each 3-hour period of a day for Linux and FreeBSD (mean direction and length in green; code+data):

The Linux kernel source has far fewer commits at the weekend, compared to working days. Given the number of people whose job is to work on the Linux kernel, compared to the number of people doing it out of interest, this difference is not surprising. The percentage of people working on OpenBSD as a job is small, and there does not appear to be a big difference between weekends and workdays. There is a lot of variation in the number of commits during each 3-hour period of a day, but the number of commits per day does not vary so much; the number of OpenBSD commits per day of week is:

| Mon | Tue | Wed | Thu | Fri | Sat | Sun |
|---|---|---|---|---|---|---|
| 26909 | 26144 | 25705 | 25104 | 24765 | 22812 | 24304 |
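Treating the week as a circle, the daily counts can be summarised by a mean direction and length. The sketch below is only illustrative: it assumes every commit in a day sits at the start of that day's bin (Monday at angle 0), and the name binned_mean is hypothetical.

```python
import math

DAYS = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]
COMMITS = [26909, 26144, 25705, 25104, 24765, 22812, 24304]


def binned_mean(counts):
    """Mean direction (radians) and mean length for equally spaced bins."""
    total = sum(counts)
    k = len(counts)
    # Bin j is placed at angle 2*pi*j/k and weighted by its count.
    c = sum(n * math.cos(2 * math.pi * j / k) for j, n in enumerate(counts)) / total
    s = sum(n * math.sin(2 * math.pi * j / k) for j, n in enumerate(counts)) / total
    return math.atan2(s, c) % (2 * math.pi), math.hypot(c, s)


direction, length = binned_mean(COMMITS)
# Express the direction in days after the start of Monday.
print(direction * len(DAYS) / (2 * math.pi), length)
```

A mean length close to zero indicates that the counts are spread fairly evenly around the week; it does not, by itself, say whether the departure from uniformity is statistically significant.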

Does this distribution of commits per day have a uniform distribution (to some confidence level)?

Like all measurements, those made on a circular scale are rounded to some number of digits. Measurements may also be rounded, or binned, to particular units of the scale, e.g., measured to the nearest degree, or nearest minute.

A recent paper, by Landler, Ruxton and Malkemper, found that for samples containing around five hundred or more measurements, rounding to the nearest degree was sufficient to cause the Rao spacing test to almost always report non-uniformity, i.e., for non-trivial samples the rounding was sufficient to cause the test to detect non-uniformity (things worked as expected for samples containing fewer than 100 measurements).

Landler et al found that adding a small amount of noise (drawn from a von Mises distribution) to the rounded measurements appeared to ‘fix’ the incorrect behavior, i.e., rejecting the hypothesis of a uniform distribution, when a uniform distribution may be present.

The rao.spacing.test function, in the circular package, rejected the null hypothesis that the OpenBSD daily data has a uniform distribution. However, when noise is added to each day value (i.e., adding a random fraction to the day values, using rvonmises(length(c_per_day), circular(0), 2.0), although runif(length(c_per_day)) is probably more appropriate, and produces essentially the same result), the call to rao.spacing.test failed to reject the null hypothesis of uniformity at the 0.05 level (i.e., the daily data is consistent with a uniform distribution).
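The effect of rounding on the Rao spacing statistic can be reproduced with a small simulation. The sketch below is in Python rather than R, and makes several assumptions: it uses a Monte Carlo p-value instead of the tabulated critical values used by rao.spacing.test, it jitters with uniform noise of plus/minus half a degree, and it uses a simulated sample of 1,000 uniform angles rounded to the nearest degree, not the OpenBSD data. All function names are hypothetical.

```python
import random


def rao_spacing_statistic(degrees):
    """Rao's U: half the summed absolute deviations of the gaps between
    neighbouring points (in degrees) from the expected gap, 360/n."""
    n = len(degrees)
    pts = sorted(d % 360.0 for d in degrees)
    expected_gap = 360.0 / n
    gaps = [pts[i + 1] - pts[i] for i in range(n - 1)]
    gaps.append(360.0 - pts[-1] + pts[0])  # wrap-around gap
    return 0.5 * sum(abs(g - expected_gap) for g in gaps)


def monte_carlo_p_value(degrees, reps=200, rng=None):
    """Fraction of simulated uniform samples with U at least as large as observed."""
    rng = rng or random.Random(0)
    n = len(degrees)
    observed = rao_spacing_statistic(degrees)
    exceed = sum(
        rao_spacing_statistic([rng.uniform(0.0, 360.0) for _ in range(n)]) >= observed
        for _ in range(reps)
    )
    return (exceed + 1) / (reps + 1)


def jitter(degrees, width=1.0, rng=None):
    """Add uniform noise spanning one rounding unit to each measurement."""
    rng = rng or random.Random(1)
    return [d + rng.uniform(-width / 2, width / 2) for d in degrees]


# Simulated uniform sample, rounded to the nearest degree.
sample_rng = random.Random(42)
rounded = [float(round(sample_rng.uniform(0.0, 360.0))) for _ in range(1000)]

print("rounded :", rao_spacing_statistic(rounded))
print("jittered:", rao_spacing_statistic(jitter(rounded)))
```

With 1,000 points on at most 360 distinct values, most gaps are exactly zero, which inflates the statistic well beyond anything a continuous uniform sample produces; jittering removes the ties and brings the statistic back to the range expected under uniformity.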

How many research results are affected by this discovery?

I very rarely encounter the use of circular statistics (even though they should probably have been used in places), but then I spend my time reading software engineering papers, whose use of statistics tends to be primitive. I plan to include a brief mention of the use of the Rao spacing test with binned data in the addendum to my Evidence-based software engineering book (which includes the above example).
