Low defect density implies climate code less, not more, reliable

I have just been reading a paper comparing the defect density of three climate modelling systems against software from other application domains. The defect density (total reported defects divided by thousands of lines of code) of the climate modelling software was significantly lower than everything else, leading the researchers to conclude that “… suggests that the models are of high software quality”. I would draw the opposite conclusion: the models have low reliability (I have no idea what software quality is and avoid using the term).
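To make the arithmetic concrete, here is a minimal sketch of the defect density calculation. The function name and the figures are made up for illustration and do not come from the paper; the point is only that two systems of the same size can differ tenfold in defect density purely because of how many defects got reported.

```python
def defect_density(reported_defects: int, lines_of_code: int) -> float:
    """Reported defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return reported_defects / (lines_of_code / 1000)


# Hypothetical figures, chosen only to illustrate the arithmetic.
widely_used = defect_density(reported_defects=4000, lines_of_code=800_000)
single_site = defect_density(reported_defects=400, lines_of_code=800_000)
print(widely_used, single_site)
```

The measure counts reported defects, so it says as much about how hard people have looked as about the code itself.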

I don’t disagree with Pipitone and Easterbrook’s numbers, just their conclusion.

There is a very simple technique for creating software that has a low defect density: don’t try too hard to look for defects. There are two reasons why I think this has happened with the climate model software:

- Three of the non-climate systems compared against were the Apache HTTP daemon, the VTK visualization toolkit and the Eclipse project. These are all widely used projects with many thousands of users, millions for Apache; this volume of usage corresponds to a huge amount of testing, and it is no wonder that so many faults have been reported. Each climate model tends to be used by one site, a tiny amount of testing, and it is not surprising that few faults have been reported.

- Climate models have a big intrinsic testing problem; what is the result of a test supposed to be? With applications such as word processors, browsers, compilers, operating systems, etc the expected behavior is known in many cases so it is possible to write test cases that check for the expected behavior. How does anybody know what the expected behavior of a climate model is? If all the climate models did was to solve the Navier-Stokes equation on a rotating sphere there would be no need for multiple models and the UK Meteorological Office’s Unified model would not have grown from 100 KLOC to 800+ KLOC over the last 15 years.

The one system having a similar defect density to the climate models that Pipitone and Easterbrook compare against is an air traffic control system developed using formal methods, exactly the kind of (expensive and time-consuming) development process that one would expect to have a low defect density.

Software is remarkably fault-tolerant and so, yes, serious faults could exist in the climate models and they would still give answers that looked about right. Based on his experience working on a meteorological model, Les Hatton tells the story of a fault so serious that the answers should be completely wrong, but they were not.

If somebody wants to convince me that the software in any of these climate models really is reliable then I want to know about the test suites used to check the behavior; what coverage of the source does the suite have (a high MC/DC would be very good, but I would settle for a very high statement coverage) and how were the expected behaviors calculated.

My R naming nemesis

When learning a new language I try to make an effort to write it like a native developer. R has one language feature that has been severely testing my desire to write like a native and this afternoon I realized that most of the people reading my code will also experience the same jarring sensation on encountering this construct, so I am not going to use it any more.

What is this language feature that induces a Stroop effect in my mind? It is the use of the period character as part of an identifier’s name (e.g., foo.bar). In almost all of the hundreds of thousands of lines of code I have read over the years this character is used as an operator; it selects a member/field of a struct/record. I’m sure that if I tried long enough and hard enough I could get used to this character being part of an identifier; after a year or so writing Cobol I got used to the arithmetic minus character being permitted within identifiers (e.g., foo-bar), but that was 20 years ago and my neurons will probably take much longer to adapt this time around.

Most of the R I am writing will be distributed with my book Empirical software engineering with R and I think readers will experience the same jarring sensation I do (apart from those who have not yet been exposed to large amounts of non-R code). I have convinced myself that this is a good enough reason to give up trying to figure out how to use . in identifier names (I have been concocting all sorts of rules involving . being used to separate the primary part of the name and _ the secondary parts, e.g., total.red_light [yes, I should get out more often]; the underscore vs. camel case debate still erupts every now and again, let’s avoid creating more debate by introducing more choice).

Those R functions that include a . in their name will stand out from the crowd, [arm waving on] perhaps this will help differentiate them as ‘statistics stuff’ [arm waving off]. There is always plan B if my unilateral naming decision looks too unilateral: a global renaming script.

Perhaps the use of periods in identifiers can be used as a test for being a native R developer. A simple timing test would involve a sequence of characters appearing on a screen, with the developer having to respond as quickly as possible with the number of identifiers being displayed; I’m sure I would be much slower to give a ‘1’ response to total.count than to total_count, and displaying total count and total.count on two separate lines and asking me to quickly specify which line contained the most identifiers would turn me into a nervous wreck. Responses from a dozen or so different sequences ought to be enough to distinguish Johnny Foreigner from the natives.

I don’t have a problem with $, which R uses as the column/list item selection operator, a character permitted by some compilers for commonly used languages as part of an identifier. This is because I have not read lots of code containing this identifier naming usage.

For my previous book I did a survey of the linguistic and cognitive psychology issues involved in identifier naming. This did a good job of debunking existing ideas about what constitutes good naming practices, but did not come up with any concrete recommendations to replace them (nature abhors a vacuum and the existing pop psychology naming ideas remained).

These days people write PhDs on identifier naming issues (method names, (not yet completed) correlation with quality and code comprehension to name a few); there is even a subfield within this field, how best to split an identifier into its component parts (e.g., refPtr is probably an abbreviation of reference pointer).

Distribution of uptimes for high-performance computing systems

Computers break down every now and again and this is a serious problem when an application needs to run on thousands of individual computers (nodes) plugged together; lots more hardware creates lots more opportunity for a failure that renders any subsequent calculations by working nodes possibly wrong. The solution is checkpointing: saving the state of each node every now and again, and rolling back to that point when a failure occurs. Picking the optimal interval between checkpoints requires knowledge of the distribution of node uptimes; what is it?

Short answer: Node uptimes have a negative binomial distribution, or at least five systems at the Los Alamos National Laboratory do.

The longer answer is below as another draft section from my book Empirical software engineering with R. As always comments and pointers to more data welcome. R code and data here.

Distribution of uptimes for high-performance computing systems

Today’s high-performance computing systems are created by connecting together lots of cpus. There is a hierarchy to the connection in that many cpus may populate a single board, several boards may be fitted into a rack unit, several rack units into a cabinet, lots of cabinets lined up in a row within a room and more than one room in a facility. A common operating unit is the node, effectively a computer on which an operating system is running (the actual hardware involved may be a single or multi processor cpu). A high-performance system is built from thousands of nodes and an application program may run on compute nodes from more than one facility.

With so many components, failures occur on a regular basis and long running applications need to recover from such failures if they are to stand a reasonable chance of ever completing.

Applications running on the systems installed at the Los Alamos National Laboratory create checkpoints at regular intervals, writing data needed to do a full restore to storage. When a failure occurs an application is restarted from its most recent checkpoint, one node failure causes all nodes to be rolled back to their most recent checkpoint (all nodes create their checkpoints at the same time).

A tradeoff has to be made between frequently creating checkpoints, which takes resources away from completing execution of the application but reduces the amount of lost calculation, and infrequent checkpoints, which divert fewer resources but incur greater losses when a fault occurs. Calculating the optimum checkpoint interval requires knowing the distribution of node uptimes and the following analysis attempts to find this distribution.
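The shape of this tradeoff can be sketched with a widely quoted first-order approximation (usually credited to Young). It is not part of this analysis and it assumes exponentially distributed uptimes and a checkpoint cost that is small relative to the mean time between failures, which is exactly the kind of assumption the analysis below puts to the test. Function names and numbers are hypothetical.

```python
import math


def wasted_fraction(interval: float, checkpoint_cost: float, mtbf: float) -> float:
    """First-order estimate of the fraction of run time lost.

    checkpoint_cost / interval : time spent writing checkpoints
    interval / (2 * mtbf)      : expected rework, on average half an
                                 interval is lost per failure
    All arguments are in the same time unit (hours here).
    """
    return checkpoint_cost / interval + interval / (2 * mtbf)


def young_interval(checkpoint_cost: float, mtbf: float) -> float:
    """Interval minimising wasted_fraction (Young's approximation)."""
    return math.sqrt(2 * checkpoint_cost * mtbf)


# Hypothetical system: half-hour checkpoints, a failure every 100 hours.
best = young_interval(checkpoint_cost=0.5, mtbf=100)
for hours in (5, best, 20):
    print(hours, wasted_fraction(hours, 0.5, 100))
```

Checkpointing more or less often than the computed interval increases the estimated waste, which is the tradeoff described above; with a non-exponential uptime distribution the optimum would need to be recomputed.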

Data

The data comes from 23 different systems installed at the Los Alamos National Laboratory (LANL) between 1996 and 2005. The total failure count for most of the systems is of the order of a few hundred; there are five systems (systems 2, 16, 18, 19 and 20) that each have several thousand failures and these are the ones analysed here.

The data consists of failure records for every node in a system. A failure record includes information such as system id, node number, failure time, restored to service time, various hardware characteristics and possible root causes for the failure. Schroeder and Gibson <book Schroeder_06> performed the first analysis of the dataset and provide more background details.

Is the data believable?

Failure records are created by operations staff when they are notified by the automated monitoring system that a failure has been detected. Given that several people are involved in the process <book LANL_data_06> it seems unlikely that failures will go unreported.

Some of the failure reports have start times before the given node was returned into service from the previous failure; across the five systems this varied between 0.4% and 2.5%. It is possible that these overlapping failures are caused by an incorrect attempt to fix the first failure, or perhaps they are data entry errors. This error rate is comparable with human error rates for low stress/non-critical work.
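One way such an overlap check could be done is sketched below. The record layout (node, failure time, restored time, all in hours) and the function name are assumptions made for illustration; the actual LANL files have more fields and a different format.

```python
from collections import defaultdict


def overlapping_fraction(records):
    """Fraction of failure records that start before the same node was
    restored from its previous failure.

    records: iterable of (node, failed_at, restored_at) tuples.
    """
    by_node = defaultdict(list)
    for node, failed_at, restored_at in records:
        by_node[node].append((failed_at, restored_at))

    overlaps = 0
    total = 0
    for events in by_node.values():
        events.sort()  # order each node's failures by start time
        total += len(events)
        for (_, prev_restored), (failed_at, _) in zip(events, events[1:]):
            if failed_at < prev_restored:
                overlaps += 1
    return overlaps / total if total else 0.0


# Made-up records: node 1's second failure starts before the first is fixed.
example = [(1, 0, 2), (1, 1, 3), (1, 10, 11), (2, 5, 6)]
print(overlapping_fraction(example))
```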

The failure reports do not include any information about the application software running on the node when it failed; the majority of the programs executed are large-scale scientific simulations, such as simulations of nuclear stockpile stability. Thus it is not possible to accurately calculate the node MTBF for an executing application. LANL say <book LANL_data_06> that the applications “… perform long periods (often months) of CPU computation, interrupted every few hours by a few minutes of I/O for check-pointing.”

Predictions made in advance

The purpose of this analysis is to find the distribution that best fits the node uptime data, i.e., the time interval between failures of the same node.
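A minimal sketch of extracting these intervals, assuming the failure records have been reduced to (node, failure time in hours) pairs; the names are hypothetical. Here an uptime is taken to be the gap between consecutive failure times of a node; measuring from the restored-to-service time instead would be an equally plausible reading.

```python
from collections import defaultdict


def node_uptimes(failures):
    """Intervals between consecutive failures of the same node.

    failures: iterable of (node, failure_time) pairs, in any order.
    """
    times = defaultdict(list)
    for node, failure_time in failures:
        times[node].append(failure_time)

    uptimes = []
    for node_times in times.values():
        node_times.sort()
        uptimes.extend(later - earlier
                       for earlier, later in zip(node_times, node_times[1:]))
    return uptimes


# Made-up data: node 1 fails at hours 0, 30 and 45; node 2 at 5 and 105.
print(sorted(node_uptimes([(1, 0), (1, 30), (2, 5), (1, 45), (2, 105)])))
```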

Your author is not aware of any empirically based theory that predicts the uptime of high performance computing systems. The Poisson and exponential distributions are both frequently encountered in the analysis of hardware failures and it is always comforting to fit in with existing expectations.

Applicable techniques

A [Cullen and Frey test] matches a dataset’s skew and kurtosis against known distributions (in the case of the descdist function in the fitdistrplus package this is a handful of commonly encountered distributions); the fitdist function in the same package can be used to fit the data to a specified distribution.
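The two quantities a Cullen and Frey graph plots are the square of the skewness and the kurtosis. The sketch below computes them with the simple (biased) moment estimators; the R function may apply bias corrections, so its numbers can differ slightly. The function name is hypothetical.

```python
def cullen_frey_point(xs):
    """Return (skewness squared, kurtosis) using plain moment estimators."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n  # variance
    m3 = sum((x - mean) ** 3 for x in xs) / n
    m4 = sum((x - mean) ** 4 for x in xs) / n
    if m2 == 0:
        raise ValueError("all values are identical")
    skewness = m3 / m2 ** 1.5
    kurtosis = m4 / m2 ** 2  # not excess kurtosis: a normal scores 3
    return skewness ** 2, kurtosis


# Known reference points on the graph: normal (0, 3), uniform (0, 1.8),
# exponential (4, 9). A sample is compared against such points and curves.
print(cullen_frey_point([1, 1, 1, 2, 10]))
```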

Results

The table below lists some basic properties of each of the systems analysed. The large difference in mean/median uptimes between some systems is caused by very fat tails in the uptime distribution of some systems, see [LANL-node-uptime-binned].

| System | Nodes | Failures | Mean | Median |
|---|---|---|---|---|
| 2 | 49 | 6997 | 133 | 377 |
| 16 | 16 | 2595 | 89 | 229 |
| 18 | 823 | 3014 | 2336 | 4147 |
| 19 | 738 | 2344 | 2376 | 4069 |
| 20 | 323 | 2063 | 653 | 2544 |

If there are any significant changes in failure rate over time or across different nodes in a given system it could have a significant impact on the distribution of uptime intervals. So we first check for large differences in failure rates.

Do systems experience any significant changes in failure rate over time?

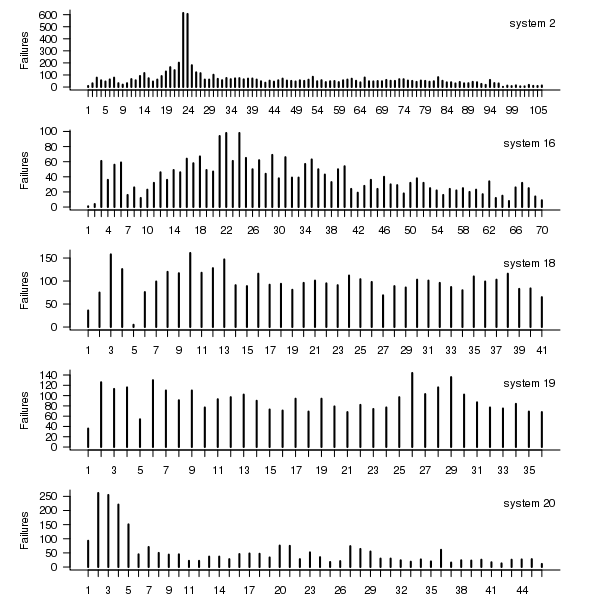

The plot below shows the total number of failures, binned using 30-day periods, for the five systems. Two patterns that stand out are system 20 which experienced many failures during the first few months and then settled down, and system 2’s sudden spike in failures around month 23 before settling down again. This analysis is intended to be broad brush and does not get involved with details of specific systems, but these changes in failure frequency suggest that the exact form of any fitted distribution may change over time in turn potentially leading to a change of checkpoint interval.

Figure 1. Total number of failures per 30-day interval for each LANL system.
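The 30-day binning amounts to integer division of each failure time by the period length. A minimal sketch, assuming failure times expressed as days since the system was installed (the function name is hypothetical):

```python
from collections import Counter


def failures_per_period(failure_days, period_days=30):
    """Count failures in consecutive periods, including empty periods."""
    counts = Counter(int(day // period_days) for day in failure_days)
    last_period = max(counts) if counts else -1
    return [counts.get(period, 0) for period in range(last_period + 1)]


# Made-up failure times: three in the first 30 days, one in the second
# period, none in the third and one in the fourth.
print(failures_per_period([0, 1, 29, 30, 95]))
```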

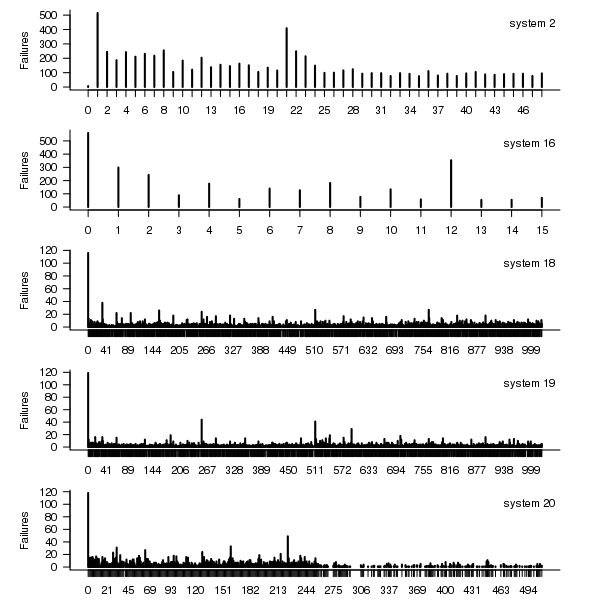

Do some nodes fail more often than others?

The plot below shows the total number of failures for each node in the given system. Node 0 has many more failures than the other nodes (for node 0 of system 2 most of the failure data appears to be missing, so node 1 has the most failures). The distribution suggested by the analysis below is not changed if Node 0 is removed from the dataset.

Figure 2. Total number of failures for each node in the given LANL system.

Fitting node uptimes

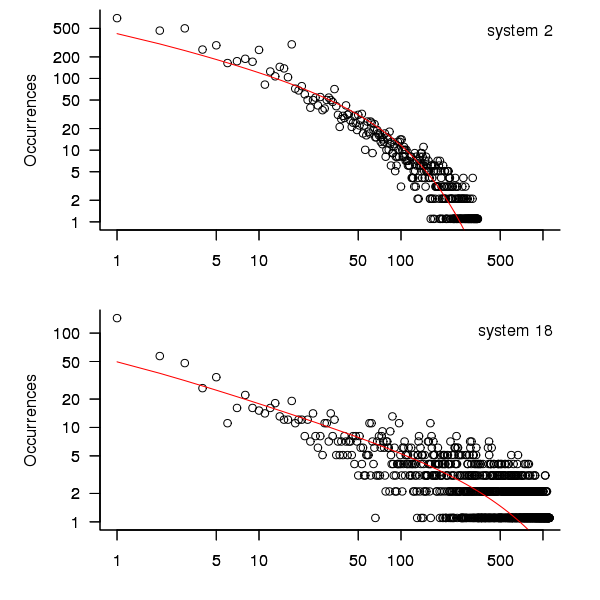

When plotted in units of 1 hour there is a lot of variability and so uptimes are binned into 10-hour units to help smooth the data. The number of uptimes in each 10-hour bin forms a discrete distribution and a [Cullen and Frey test] suggests that the negative binomial distribution might provide the best fit to the data. The Schroeder and Gibson analysis did not try the negative binomial distribution and, of those they tried, found the Weibull distribution gave the best fit; the R functions were not able to fit this distribution to the data.

The plot below shows the 10-hour binned data fitted to a negative binomial distribution for systems 2 and 18. Visually the negative binomial distribution provides the better fit and the Akaike Information Criterion values confirm this (see code for details and for the results on the other systems, which follow one of the two patterns seen in this plot).

Figure 3. For systems 2 and 18, number of uptime intervals, binned into 10-hour intervals; red line is fitted negative binomial distribution.

The negative binomial distribution is also the best fit for the uptime of the systems 16, 19 and 20.

The Poisson distribution often crops up in failure analysis. The quality of fit of a Poisson distribution to this dataset was an order of magnitude worse for all systems (as measured by AIC) than the negative binomial distribution.
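The kind of comparison involved can be sketched without any statistics package. The analysis itself used R's fitdistrplus; what follows is a stand-alone illustration with made-up counts, hypothetical function names and a hand-rolled search over the size parameter (my choice for the sketch, not necessarily what fitdist does internally). Each value is assumed to be an uptime expressed as a whole number of 10-hour units.

```python
import math


def poisson_loglik(counts, mu):
    return sum(k * math.log(mu) - mu - math.lgamma(k + 1) for k in counts)


def nb_loglik(counts, size, mu):
    """Negative binomial log-likelihood, (size, mean) parameterisation."""
    p = size / (size + mu)
    return sum(math.lgamma(k + size) - math.lgamma(size) - math.lgamma(k + 1)
               + size * math.log(p) + k * math.log(1 - p) for k in counts)


def fit_negative_binomial(counts):
    """Maximum likelihood fit; requires a positive sample mean.

    The ML estimate of the mean is the sample mean, so only the size
    parameter is searched (golden-section search on log(size)).
    """
    mu = sum(counts) / len(counts)
    ratio = (math.sqrt(5) - 1) / 2
    a, b = math.log(1e-3), math.log(1e3)
    for _ in range(100):
        c = b - ratio * (b - a)
        d = a + ratio * (b - a)
        if nb_loglik(counts, math.exp(c), mu) > nb_loglik(counts, math.exp(d), mu):
            b = d  # maximum lies in [a, d]
        else:
            a = c  # maximum lies in [c, b]
    return math.exp((a + b) / 2), mu


def aic(loglik, n_params):
    return 2 * n_params - 2 * loglik


# Made-up, heavily over-dispersed counts (variance far above the mean).
counts = [0, 0, 0, 1, 1, 2, 3, 5, 8, 13, 21, 40]
size, mu = fit_negative_binomial(counts)
poisson_aic = aic(poisson_loglik(counts, mu), 1)
nb_aic = aic(nb_loglik(counts, size, mu), 2)
print(poisson_aic, nb_aic)
```

A lower AIC indicates the better tradeoff between fit and number of parameters; for over-dispersed counts like these the negative binomial comes out well ahead despite its extra parameter.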

Discussion

This analysis only compares how well commonly encountered distributions fit the data. The variability present in the datasets for all systems means that the quality of all fitted distributions will be poor and there is no theoretical justification for testing other, non-common, distributions. Given that the analysis is looking for the best fit from a chosen set of distributions no attempt was made to tune the fit (e.g., by forming a zero-truncated distribution).

Of the distributions fitted the negative binomial distribution has the lowest AIC and best fit visually.

As discussed in the section on [properties of distributions] the negative binomial distribution can be generated by a mixture of [Poisson distribution]s whose means have a [Gamma distribution]. Perhaps the many components in a node that can fail have a Poisson distribution and combined together the result is the negative binomial distribution seen in the uptime intervals.
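The mixture construction is easy to check by simulation: draw a Poisson mean from a Gamma distribution, then draw a count from a Poisson with that mean. A minimal sketch using only the standard library; the parameter values and function names are arbitrary choices for illustration.

```python
import math
import random
import statistics


def poisson_sample(rng, lam):
    """Knuth's multiplication method; fine for the modest means used here."""
    threshold = math.exp(-lam)
    k, product = 0, rng.random()
    while product > threshold:
        k += 1
        product *= rng.random()
    return k


def gamma_poisson_samples(size, mean, n, seed=0):
    """Counts whose Poisson means are Gamma(shape=size, scale=mean/size)."""
    rng = random.Random(seed)
    return [poisson_sample(rng, rng.gammavariate(size, mean / size))
            for _ in range(n)]


samples = gamma_poisson_samples(size=2.0, mean=5.0, n=20000, seed=1)
# A negative binomial with these parameters has mean 5 and variance
# mean + mean**2 / size = 17.5; a plain Poisson would have variance 5.
print(statistics.mean(samples), statistics.pvariance(samples))
```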

The Weibull distribution is often encountered with datasets involving some form of time between events but was not seen to be a good fit (for a continuous distribution) by a Cullen and Frey test and could not be fitted by the R functions used.

The characteristics of node uptime for two systems (i.e., 2 and 16) follow what might be thought of as a typical distribution of measurements, with some fattening in the tail, while two systems (i.e., 18 and 19) have very fat tails indeed, and system 20 sits between these two patterns. One system characteristic that matches this pattern is the number of nodes contained within it (with systems 2 and 16 having under 50, 18 and 19 having over 1,000 and 20 having around 500). The significant difference in the size of the tails is reflected in the mean uptimes for the systems, given in the table above.

Summary of findings

The negative binomial distribution, of the commonly encountered distributions, gives the best fit to node uptime intervals for all systems.

There is over an order of magnitude variation in the mean uptime across some systems.

Proposal for a change of approach to programming language teaching

In a previous post I explained why I think developers don’t really know any computer language, and in this post I want to outline how I think we should adapt to this reality and radically change the approach taken to teaching students about using a computer language. First, a couple of points:

- The programming community needs to change its attitude towards language knowledge from being an end in itself to being something that is ok to acquire on an as needed basis. Developers don’t need to know much about the programming language they use in order to get their job done, get over it. Spending time learning the ins and outs of a language’s semantics rarely provides a worthwhile return on investment compared to time spent learning something else, such as the application domain or customer requirements,

- designing a new, ‘simpler’ programming language is not a solution; the existing languages in common use are not going away anytime soon and creating a new general purpose language is only going to overload developers with more stuff to learn and yet another runtime system to interface to,

- we need to concentrate on suggestions about what students and developers should be doing and not what they should not be doing. This is not only a good teaching principle, it avoids the problem of having to come up with a good list of things not to do (coding standard recommendations are very rarely based on any evidence apart from the proposer’s own point of view and even the ones that make it through peer review are little more than group think or a waste of time).

The response to the existing state of affairs should be to approach the teaching of programming languages as an exercise in teaching students only what they need to know to do useful work, rather than acting on the belief that students should strive to be experts in the language they use and burdening them with lots of pointless language details. The exact minimum-set of knowledge could vary across different industries and application domains, so the set might need to be a bit larger than the minimum to be on the safe side.

Invariably some developers will need to know more than the minimum-set, so we also need to figure out what ‘template’ knowledge (or whatever term is used, an alternative is behavior patterns or patterns of behavior) should be included in the next level of language knowledge, this can be documented and made available to anybody who wants to read it; there may or may not be more levels before a developer is told to go and read a reference book or the language reference manual to figure out what they need to know.

This minimum-set approach, with the opportunity to progress to successively more detailed levels, is often used for learning human languages; computer languages are not any different.

I would expect there to be some variation in the minimum-set between different languages, and would resist the temptation to try and create a ‘common minimum’ until some experience had been gained in teaching single languages.

How would the minimum-set of language knowledge be chosen? Simple. Students need to learn those constructs they are likely to use most of the time, and that question can be answered by measuring a large amount of existing source code. Results from measurements that have been made typically show a small number of constructs are used a large percentage of the time. For instance, measurements of C source find that 33.2% of for-loops have the form: for (assignment ; identifier < identifier ; identifier++), where identifier might be two or more different identifiers; allowing the central test to have the form identifier < expression takes the percentage to over 50%. I would expect the same pattern of usage to occur in source written in other languages but don't have any numbers to back up that assertion.
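A crude version of this kind of measurement can be sketched with regular expressions. Real measurements need a proper C parser (comments, strings, macros and nested parentheses all defeat a regexp), so treat this as an illustration of the idea only; the pattern names and the sample source are made up.

```python
import re

IDENT = r"[A-Za-z_]\w*"

# Any for-loop header.
FOR_ANY = re.compile(r"\bfor\s*\(")

# for (assignment ; identifier < identifier ; identifier++)
FOR_COMMON = re.compile(
    rf"\bfor\s*\(\s*{IDENT}\s*=(?!=)[^;]*;"  # simple assignment
    rf"\s*{IDENT}\s*<\s*{IDENT}\s*;"         # identifier < identifier
    rf"\s*{IDENT}\s*\+\+\s*\)"               # identifier++
)


def common_form_fraction(source: str) -> float:
    total = len(FOR_ANY.findall(source))
    common = len(FOR_COMMON.findall(source))
    return common / total if total else 0.0


sample = """
for (i = 0; i < n; i++) a[i] = 0;
for (p = head; p != NULL; p = p->next) count++;
for (j = 0; j < strlen(s); j++) putchar(s[j]);
for (k=0; k<limit; k++) total += k;
"""
print(common_form_fraction(sample))
```

Two of the four loops in the sample have the common form; the third would be picked up by the looser identifier < expression variant.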

Perhaps the most important pattern of (developer) behavior is what its discoverer, Jorma Sajaniemi, calls the roles of variables (each variable is used to hold a particular kind of information, e.g., most wanted holder, stepper, container, etc).

One pattern of behavior that I am more or less completely in the dark about is class/package usage. There is the famous book on design patterns which the authors did a good job of promoting, but I have yet to see any empirical evidence showing the claimed benefits. The analysis of class/package behavioral usage is non-trivial, but it can be done.

Would I insist that developers only use constructs listed in the suggested minimum-set (plus possible extras)? No. The purpose of this proposal is to help students and developers learn what they need to know to get a job done. Figuring out what language constructs, if any, should be avoided at all costs is a very tough problem which at the end of the day might not be worth solving.

A minimum-set knowledge of the language being used does not imply poor quality code. Most code is simple anyway, the complicated stuff invariably revolves around the algorithms that need to be used, and a skillful developer is one who uses straightforward language constructs to create easy to maintain code, not one who writes code that relies on detailed knowledge of some language feature.

I expect this proposal to adopt a minimum-set approach to language teaching will draw an angry reaction from the cottage industry that makes its living from writing and giving seminars on the latest trends in language-X. Don't panic guys, managers are well aware that this kind of knowledge rarely has any impact on developer performance and the actual motivation for sending employees on such seminars is to keep them happy (it can be a much more effective way of keeping staff than simply giving them a pay rise).

Most developers don’t really know any computer language

What does it mean to know a language? I can count to ten in half a dozen human languages, say please and thank you, tell people I’m English and a few other phrases that will probably help me get by; I don’t think anybody would claim that I knew any of these languages.

It is my experience that most developers’ knowledge of the programming languages they use is essentially template based; they know how to write basic instances of the various language constructs such as loops, if-statements, assignments, etc and how to define identifiers to have a small handful of properties, and they know a bit about how to glue these together.

There are many developers who can skilfully weave together useful programs from the hodgepodge of coding knowledge they happen to know (proving that little programming knowledge is needed to write useful programs).

The purpose of this post is not to complain about developers’ lack of knowledge of the programming languages they use; I appreciate that time spent learning about the application domain often gives a better return on investment compared to learning more about a language. The purpose is to suggest that the programming language community (e.g., teachers and tool producers) acknowledge how languages are primarily used and go with the flow rather than maintaining the fiction that developers know anything much about the languages they use and that they should acquire this knowledge to expert level; students should be taught the commonly encountered templates, not the general language rules, and developers should be encouraged to use just the common templates (this will also have the side effect of reducing the effort needed to follow other people’s code since the patterns of usage will be familiar to many).

I suspect that many readers will disagree with the statement in this post’s title, and I need to provide more evidence before proposing (in another post) how we might adapt to the reality to be found in development teams.

The only evidence I can offer is my own experience, which is not a very satisfactory situation; a possible measurement approach is discussed below. So what is this experience-based evidence (I only claim to ‘know’ the handful of languages I have written compiler front ends for; with other languages my usage follows the template form just like everybody else)?

- discussions with developers: individuals and development groups invariably have their own terminology for programming language constructs (my use of terminology appearing in the language definition usually draws blank stares and I have to make a stab at guessing what the local terms mean and using them if I want to be listened to); asking about identifier scoping or type compatibility rules (assuming that either of the terms ‘scope’ or ‘type compatibility’ is understood) usually results in a vague description of specific instances (invariably the commonly encountered situations),

- books that claim to teach a language often provide superficial coverage of the language semantics and concentrate on usage examples (because that is what is useful to their readers). Those books claiming to give insight into the depths of a language often contain many mistakes; perhaps the most well known example is Herbert Schildt’s “The Annotated ANSI C Standard” (see Clive Feather’s review of the 1995 edition and Peter Seebach’s review of later versions),

- the word ‘Advanced’ has to appear in programming courses for professional developers with 3–10 years of experience because potential customers think they have reached an advanced level. In practice, such courses teach the basics and get away with it because most of the attendees don’t know them. My own experience of teaching such courses is that, outside of walking people through the slides, the real teaching is about trying to undo some of the bad habits and misconceptions individuals have picked up over the years.

Recent graduates think they are experts in the language used on their course because they probably have not met anybody who knows a lot more; some professional developers think they are language experts because they have lots of years of experience; in practice they tend to have spent those years essentially using what they originally learned and are now very adept with that small subset.

How might we measure the programming language knowledge of the general developer population?

Software development question/answer sites such as Stack Overflow contain a wealth of information. I think I could write a function that did a reasonably good job of deducing the programming language, if any, being used in the question. Given the language definition (in some cases this might not exist, e.g., Perl and PHP) and the answers to the question, how do I figure out the language expertise of the person who wrote the answer?

First, we need to filter out those questions that are application related, with code being incidental. Latent Semantic Indexing could be used to locate the strongest connections between parts of the language specification and the non-source code answer text. If strong connections are found, the question would be assumed to be programming language related.

Developers only need surface knowledge to sprinkle any answer with phrases related to the language referred to; more in depth analysis is needed.

One idea is to process any code in the question/answer with a compiler capable of generating references to those parts of the language definition used during its semantic processing (ideally ‘part’ would be the sentence level, but I would settle for paragraph level or perhaps couple of paragraph level). A non-trivial overlap between the ‘parts’ references returned by the two searches would be a good indicator of a programming language question. The big problem with this idea is the complete lack of compilers supporting this language reference functionality (somebody please prove me wrong).

I am currently stumped for a practical technique for a non-superficial way of measuring developer language expertise. The 2013 Mining Software Repositories challenge is based on a dump of the questions/answers from Stack Overflow, I’m looking forward to seeing what useful information researchers extract from it.

Superoptimizers are back in vogue

There has always been the need for a few developers with in-depth knowledge of a particular cpu architecture to sit down and think very hard about how best to implement a snippet of code performing some operation in assembly language, e.g., library implementors wanting the tightest code for a critical inner loop or compiler writers who need to map from intermediate code to machine code.

In 1987 Massalin published his now famous paper that introduced the term Superoptimizer; a program that enumerates all possible combinations of instruction sequences until the shortest/fastest one producing the desired output from the given input is found (various heuristics were used to prune the search space e.g., only considering 15 or so opcodes, and the longest sequence it ever generated contained 12 instructions).
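The brute-force idea is small enough to sketch in a few lines. The toy below is not Massalin's program: it uses an invented one-register machine with five made-up opcodes, and it accepts a candidate sequence if it agrees with the target on a handful of test inputs, which is a plausibility check and not a proof of equivalence.

```python
from itertools import product

MASK = 0xFFFFFFFF  # emulate a 32-bit register

# Hypothetical single-register instruction set.
OPS = {
    "neg": lambda a: -a & MASK,
    "not": lambda a: ~a & MASK,
    "inc": lambda a: (a + 1) & MASK,
    "dec": lambda a: (a - 1) & MASK,
    "shl": lambda a: (a << 1) & MASK,
}


def run(program, value):
    for name in program:
        value = OPS[name](value)
    return value


def superoptimize(target, test_inputs, max_length=4):
    """Return the first shortest sequence matching target on all inputs."""
    for length in range(max_length + 1):
        # Enumerate every sequence of this length, shortest lengths first.
        for program in product(OPS, repeat=length):
            if all(run(program, x) == target(x) & MASK for x in test_inputs):
                return list(program)
    return None


print(superoptimize(lambda x: 4 * x + 1, [0, 1, 2, 7, 0xFFFFFFFF]))
```

With 5 opcodes there are 5**n sequences of length n, which is why the search space has to be pruned hard and why generated sequences stay short.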

While the idea was widely talked about, it never caught on in practice (a special purpose branch eliminator was produced for GCC; Hacker’s Delight also includes a stand-alone system). Perhaps the guild of mindbogglingly-obtuse-but-fast-instruction-sequences black-balled it (apprentices have to spend several years doing nothing but writing assembly code for their chosen architecture, thinking about how to make it go faster and/or be shorter and only talk to other apprentices/members and communicate with non-converts exclusively about their latest neat sequence), or perhaps it was just a case of not invented here (writing machine code used to be something that even run-of-the-mill developers got to do every now and again), or perhaps it was not considered cost-effective to build a superoptimizer for a given project (I don’t know of anyone offering a generic tool that could be tailored for specific cases) or perhaps developers were happy to just ride the wave of continually faster processors.

It was not until 2008 with Bansal’s thesis that superoptimizer research started to take off (as in paper publication rate increased from once every five years to more than one a year). Bansal found a new market, binary translation i.e., translating the binary of a program built to run on one kind of cpu to run on a different kind of cpu, for instance the Mac 68K emulator.

Bansal and other researchers’ work was oriented towards relatively short instruction sequences. To be really useful, some way of handling longer sequences was needed.

A few days ago Stochastic Superoptimization arrived on the scene (or rather a paper describing it became available for download). Schkufza, Sharma and Aiken use Markov chain Monte Carlo methods to sample the possible instruction sequences rather than generating all of them. The paper gives a 116 instruction example from which the authors’ tool removed 16 lines to produce code that went 1.6 times faster (only 30 ‘core’ instructions were given in the paper); what is also very interesting is that the tool operates on compiler generated output (gcc/llvm), suggesting the usage pattern: build the program, profile it and then stochastically superoptimize the hot spots.
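Sampling rather than enumerating can be sketched with a Metropolis search. The Python below is a toy under stated assumptions and not the algorithm or cost function from the paper: the opcode set, the fixed number of program slots, the cost weights and every name are hypothetical.

```python
import math
import random

# Hypothetical toy instruction set on an 8-bit accumulator; "nop" lets programs shrink.
OPS = {
    "nop": lambda a: a,
    "neg": lambda a: (-a) & 0xFF,
    "not": lambda a: (~a) & 0xFF,
    "inc": lambda a: (a + 1) & 0xFF,
    "dec": lambda a: (a - 1) & 0xFF,
    "shl": lambda a: (a << 1) & 0xFF,
}
NAMES = list(OPS)
TESTS = list(range(0, 256, 7))       # sampled test inputs, not a proof of equivalence

def run(program, value):
    for op in program:
        value = OPS[op](value)
    return value

def cost(program, target):
    """Assumed cost: heavy penalty per failing test plus one per real instruction."""
    wrong = sum(run(program, x) != (target(x) & 0xFF) for x in TESTS)
    length = sum(op != "nop" for op in program)
    return 10 * wrong + length

def mcmc_search(target, slots=6, steps=20000, beta=1.0, seed=1):
    rng = random.Random(seed)
    current = ["nop"] * slots
    current_cost = cost(current, target)
    best, best_cost = list(current), current_cost
    for _ in range(steps):
        # Symmetric proposal: overwrite one randomly chosen slot.
        proposal = list(current)
        proposal[rng.randrange(slots)] = rng.choice(NAMES)
        proposal_cost = cost(proposal, target)
        delta = proposal_cost - current_cost
        # Metropolis rule: always accept improvements, sometimes accept regressions.
        if delta <= 0 or rng.random() < math.exp(-beta * delta):
            current, current_cost = proposal, proposal_cost
            if current_cost < best_cost:
                best, best_cost = list(current), current_cost
    return best, best_cost

best, best_cost = mcmc_search(lambda x: 2 * x + 2)
print(best, best_cost)
```

Accepting the occasional worse sequence is what lets the search escape local minima; a candidate that passes the sampled tests would still need a separate equivalence check before being trusted.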

Markov chains and Monte Carlo methods are trendy topics that researchers like to write about, so we will certainly see more papers in this area.

These days few developers have had hands-on experience with machine code, so the depth of expertise that was once easy to find is now rare, processors have many more weird and wonderful instructions often interacting with older instructions in obscure ways, and the cpu architecture landscape continues to change regularly. The time may have arrived for superoptimizers to be widely used by industry.

Of course, superoptimizers can work at any level of abstraction, including expression trees built directly from some complicated floating-point calculation that needs to be optimized for accuracy or speed.

Why is Cobol still popular in Japan?

Rummaging around the web for empirical software engineering data, I found a survey of programming language usage in Japan. This survey (based on 505 projects in 24 companies) has Cobol in the number two slot for 2012, a bit higher than I would have expected (it very rarely appears at all in US/UK ‘popularity’ lists):

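| Language | Projects | Percentage |
|---|---|---|
| Java | 822 | 28.2% |
| COBOL | 464 | 15.9% |
| VB | 371 | 12.7% |
| C | 326 | 11.2% |
| Other languages | 208 | 7.1% |
| C++ | 189 | 6.5% |
| Visual Basic.NET | 136 | 4.7% |
| Visual C++ | 105 | 3.6% |
| C# | 101 | 3.5% |
| PL/SQL | 57 | 2.0% |
| Pro*C | 23 | 0.8% |
| Excel(VBA) | 18 | 0.6% |
| Developer2000 | 17 | 0.6% |
| ABAP | 15 | 0.5% |
| HTML | 14 | 0.5% |
| Delphi | 11 | 0.4% |
| PL/I | 10 | 0.3% |
| Perl | 10 | 0.3% |
| PowerBuilder | 7 | 0.2% |
| Shell | 7 | 0.2% |
| XML | 6 | 0.2% |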

A quick overview of Cobol for those readers who have never encountered it.

Cobol is a domain specific language ideally suited for business data processing in the 1960/70/80/90s. During this period computer memory was often measured in kilobytes, data came in an unbelievably wide range of different formats, operations on data mostly involved sorting and basic arithmetic, and output data format was/is very important. By “unbelievably wide range” think of lots of point-of-sale vendors deciding how their devices would write data to punch cards/paper tape/magnetic tape; just handling the different encodings that have been used for the plus/minus sign can make the head spin. Combine the requirement that programs handle different data formats with tiny computer memory capacity, and you get data structure overlays that make C programmers look like rank amateurs; all the real action in Cobol programs occurs in the DATA DIVISION.

So where are we today? Companies use computers to solve a wider range of problems, don’t they (so even if Cobol usage stayed the same, its percentage usage should be low)? If point-of-sale terminals still produce a wide range of weird and wonderful data formats, isn’t it easy enough to write the appropriate libraries to convert (and we have much more storage these days)?

Why might Cobol still be so popular in Japan (and perhaps elsewhere, if anybody over 25 was included in the survey)? Some ideas:

- Cobol is still the best language to use for business data processing,

- the sample is not representative of the Japanese software development industry. As a government body perhaps the Information-Technology Promotion Agency primarily deals with large well established companies; the data came from a relatively small number of companies (i.e., 24),

- the Japanese are known for being conservative and maintaining traditions. Change is almost considered a necessity here in the West; this has led to the use of way too many programming languages in industry (I have previously written about what a mistake it is to invent a new language).

Sampling is now an issue in software engineering research

Data analysis in software engineering often has to make do with measurements extracted from the handful of measurable/measured instances at hand, but every now and again the abundance of stuff to measure is such that a subset has to be selected. How should the subset be selected?

Population sampling is a well established part of statistics, and a variety of terms have sprung up to label the various strategies used. I think ‘Accidental sampling’ accurately describes the provenance of many software engineering datasets seen in research papers and some of my work. It is quite common to see academic papers using exactly the same sample as previously published papers; perhaps a new term is needed to describe using samples that are identical to those used in previous papers: lazy sampling, coat-tail sampling…

Program source code, which was once so hard to obtain in any significant quantity, is now available by the terabyte load, a population so large that only the fastest analyses and the most general of questions warrant processing it as a whole.

The question being asked can itself intrinsically lead to a reduction in the size of the population, e.g., properties of programs written in X, or programs with more than 10 active developers.

What should be the unit of sampling? The package making up a standalone system/library is a common choice (e.g., all the files in the tar or zip archive from which binaries are built); this can result in unexpected source files being included in the measurement process, such as test programs. A less common choice is to use individual source files as the sampling unit (it is so much easier to randomly select a list of packages, download, extract and measure them one by one).

Are the source file characteristics of the contents of 1,000 packages statistically very similar to 25,000 files obtained by randomly selecting 1 file from 25,000 packages? I don’t know.

A recent paper by Nagappan, Zimmermann and Bird proposes a sampling algorithm which looks like quota or coverage sampling in that candidate similarity to the current sample is used to decide whether to add that candidate (too much similarity results in exclusion). The authors misleadingly associate the term ‘representativeness’ with this algorithm, where common statistical usage of representative requires that if 40% of a population have attribute Z then 40% of a sample’s members will have this attribute (within sampling tolerances).
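The statistical sense of representative can be illustrated with proportionate stratified sampling. The Python sketch below is only an illustration and is not the algorithm proposed by Nagappan, Zimmermann and Bird; the function name, the population of 1,000 packages and the attribute Z are all hypothetical.

```python
import random
from collections import defaultdict

def proportional_sample(population, attribute, sample_size, seed=0):
    """Sample so each attribute value has (about) the same share as in the population."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for item in population:
        strata[attribute(item)].append(item)
    sample = []
    for members in strata.values():
        # Rounding means the total can differ slightly from sample_size.
        quota = round(sample_size * len(members) / len(population))
        sample.extend(rng.sample(members, quota))
    return sample

# Hypothetical population: 40% of 1,000 packages have attribute Z.
population = [{"id": n, "lang": "Z" if n < 400 else "other"} for n in range(1000)]
sample = proportional_sample(population, lambda pkg: pkg["lang"], 50)
share = sum(pkg["lang"] == "Z" for pkg in sample) / len(sample)
print(share)  # 0.4
```

A sampler that rejects candidates for being too similar to those already chosen makes no such promise; it deliberately flattens the proportions that this sketch preserves.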

If software engineering research is to be useful to commercial software engineering, any discoveries need to be applicable to samples outside of those used in the original analysis. At the moment researchers are having a hard enough time finding any useful patterns in their data; this is not a reason to continue with the practice of coat-tail sampling, and we all need to start addressing sampling issues.

Break even ratios for development investment decisions

Developers are constantly being told that it is worth making the effort when writing code to make it maintainable (whatever that might be). Looking at this effort as an investment, what kind of return has to be achieved to make it worthwhile?

Short answer: The percentage saving during maintenance has to be twice as great as the percentage investment during development to break even; a higher ratio is needed to do better.

The longer answer is below as another draft section from my book Empirical software engineering with R. As always, comments and pointers to more data are welcome. R code and data here.

Break even ratios for development investment decisions

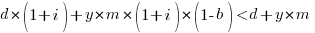

Upfront investments are often made during software development with the aim of achieving benefits later (e.g., reduced cost or time). Examples of such investments include spending time planning, designing or commenting the code. The following analysis calculates the benefit that must be achieved by an investment for that investment to break even.

While the analysis uses years as the unit of time, it is not unit specific, and with suitable scaling months, weeks, hours, etc. can be used. Also, the unit of development is taken to be a complete software system, but could equally well be a subsystem or even a function written by one person.

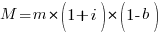

Let $d$ be the original development cost and $m$ the yearly maintenance costs; we start by keeping things simple and assume $m$ is the same for every year of maintenance. The total cost of the system over $y$ years is:

$$d + y m$$

If we make an investment of $i$% in reducing future maintenance costs with the expectation of achieving a benefit of $b$%, the total cost becomes:

$$d(1+i) + y m (1+i)(1-b)$$

and for the investment to break even the following inequality must hold:

$$d(1+i) + y m (1+i)(1-b) < d + y m$$

expanding and simplifying we get:

$$d i + y m i - y m b - y m i b < 0$$

or:

$$\frac{d}{y m} + 1 < \frac{b}{i} + b$$

If the inequality is true the ratio $\frac{b}{i}$ is the primary contributor to the right-hand-side and must be greater than 1.
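The break even condition can be checked numerically. The Python sketch below assumes the cost model d(1+i) + y·m·(1+i)(1−b), with i and b expressed as fractions, which is one reading of the derivation that is consistent with the ratios quoted later in the text; the function names and the example figures are hypothetical.

```python
def total_cost(d, m, y, i=0.0, b=0.0):
    """Cost over y years: development d, yearly maintenance m, investment i, benefit b."""
    return d * (1 + i) + y * m * (1 + i) * (1 - b)

def break_even_benefit(d, m, y, i):
    """Benefit b at which an investment i exactly pays for itself.

    Obtained by solving d/(y*m) + 1 = b/i + b for b.
    """
    return i * (d / (y * m) + 1) / (1 + i)

# Hypothetical system: development cost 100, maintenance 20 per year for 5 years.
i = 0.10
b = break_even_benefit(100, 20, 5, i)
print(round(b / i, 3))  # 1.818
```

Any benefit below the returned value leaves the total cost higher than it would have been without the investment.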

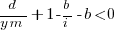

A significant problem with the above analysis is that it does not take into account a major cost factor; many systems are replaced after a surprisingly short period of time. What relationship does the $\frac{b}{i}$ ratio need to have when system survival rate is taken into account?

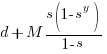

Let $s$ be the percentage of systems that survive each year, total system cost is now:

$$d(1+i) + M s + M s^2 + M s^3 + \cdots$$

where $M = m(1+i)(1-b)$.

Summing the power series for the maximum of $y$ years that any system in a company’s software portfolio survives gives:

$$d(1+i) + M \frac{s(1-s^y)}{1-s}$$

and the break even inequality becomes:

$$\frac{d}{m} \frac{1-s}{s(1-s^y)} + 1 < \frac{b}{i} + b$$

The development/maintenance ratio is now based on the yearly cost multiplied by a factor that depends on the system survival rate, not the total maintenance cost.

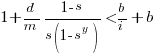

If we take $y \geq 5$ and a survival rate of less than 60% the inequality simplifies to very close to:

$$\frac{d}{m} + 1 < \frac{b}{i} + b$$

telling us that if the yearly maintenance cost is equal to the development cost (a situation more akin to continuous development than maintenance and seen in 5% of systems in the IBM dataset below) then savings need to be at least twice as great as the investment for that investment to break even. Taking the mean of the IBM dataset and assuming maintenance costs spread equally over the 5 years, a break even investment requires savings to be six times greater than the investment (for a 60% survival rate).
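The effect of the survival rate can be explored with a short Python sketch. It assumes that maintenance in year k is only paid by the fraction s^k of systems still in use, so that the break even condition is (d/m)·(1−s)/(s(1−s^y)) + 1 < b/i + b; this is a reading of the analysis rather than a quotation of it, and the function names are hypothetical.

```python
def survival_factor(s, y):
    """Sum of the series s + s**2 + ... + s**y (requires 0 < s < 1)."""
    return s * (1 - s**y) / (1 - s)

def break_even_threshold(d_over_m, s, y):
    """Value that b/i + b has to exceed for an investment to break even."""
    return d_over_m / survival_factor(s, y) + 1

# Development cost equal to one year of maintenance, five year horizon.
for s in (0.9, 0.8, 0.7, 0.6, 0.5):
    print(s, round(break_even_threshold(1.0, s, 5), 2))
```

At s = 0.5 and y = 5 the survival factor is 0.96875, so the threshold is only slightly above d/m + 1; higher survival rates spread the development cost over more maintenance years and lower the threshold.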

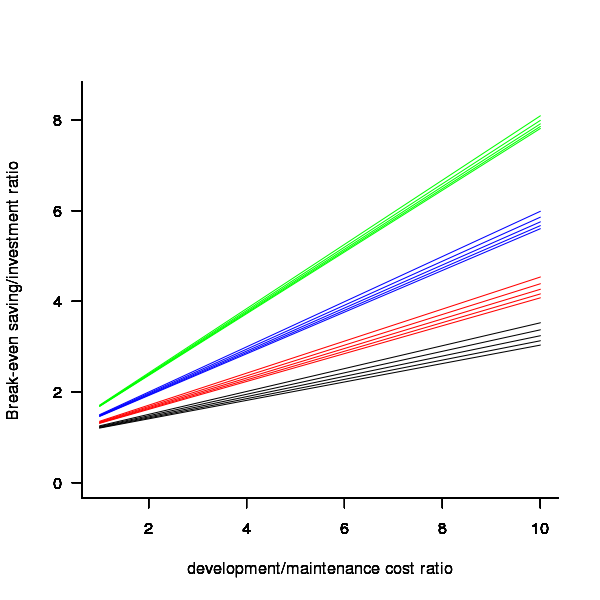

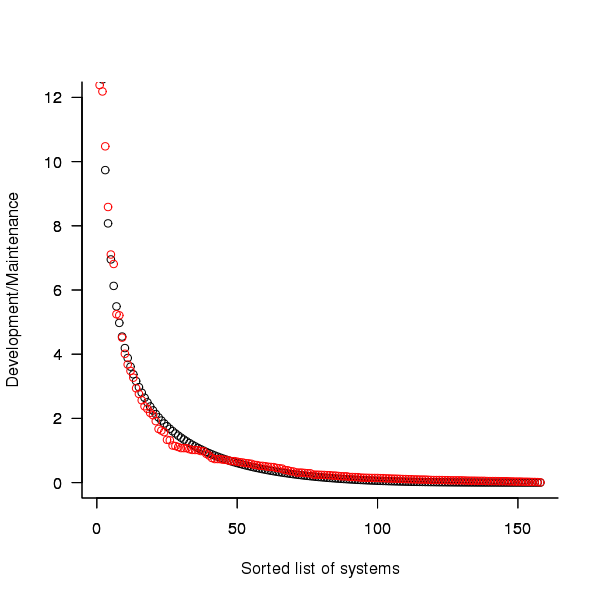

The plot below gives the minimum required saving/investment ratio that must be achieved for various system survival rates (black 0.9, red 0.8, blue 0.7 and green 0.6) and development/yearly maintenance cost ratios; the line bundles are for system lifetimes of 5.5, 6, 6.5, 7 and 7.5 years (ordered top to bottom)

Figure 1. Break even saving/investment ratio for various system survival rates (black 0.9, red 0.8, blue 0.7 and green 0.6) and development/maintenance ratios; system lifetimes are 5.5, 6, 6.5, 7 and 7.5 years (ordered top to bottom)

Development and maintenance costs

Dunn’s PhD thesis <book Dunn_11> lists development and total maintenance costs (for the first five years) of 158 software systems from IBM. The systems varied in size from 34 to 44,070 man hours of development effort and from 21 to 78,121 man hours of maintenance.

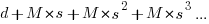

The plot below shows the ratio of development to five year maintenance costs for the 158 software systems. The mean value is around one and if we assume equal spending during the maintenance period then $\frac{d}{m} = 5$.

Figure 2. Ratio of development to five year maintenance costs for 158 IBM software systems sorted in size order. Data from Dunn <book Dunn_11>.

The best fitting common distribution for the maintenance/development ratio is the <Beta distribution>, a distribution often encountered in project planning.

Is there a correlation between development man hours and the maintenance/development ratio (e.g., do smaller systems tend to have a lower/higher ratio)? A Spearman rank correlation test between the maintenance/development ratio and development man hours shows very little connection between the two values.

Is the data believable?

While a single company dataset might be thought to be internally consistent in its measurement process, IBM is a very large company and it is possible that the measurement processes used were different.

The maintenance data applies to software systems that have not yet reached the end of their lifespan and is not broken down by year. Any estimate of total or yearly maintenance can only be based on assumptions or lifespan data from other studies.

System lifetime

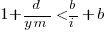

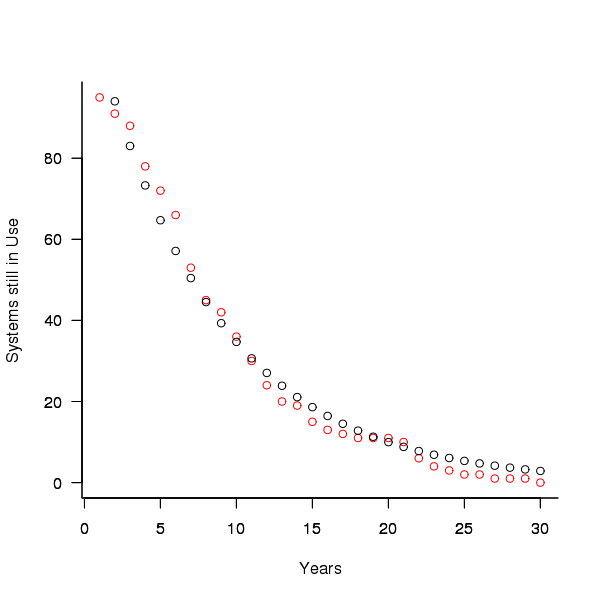

A study by Tamai and Torimitsu <book Tamai_92> obtained data on the lifespan of 95 software systems. The plot below shows the number of systems surviving for at least a given number of years and a fit of an <Exponential distribution> to the data.

Figure 3. Number of software systems surviving to a given number of years (red) and an exponential fit (black, data from Tamai <book Tamai_92>).

The best fit found by the nls function gives a half-life of 5.4 years (time for the number of systems to reduce by 50%), while rounding the fitted value gives a half-life of 6.6 years and reducing it gives a half-life of 4.6 years.

It is worrying that such a small change to the estimated fit can have such a dramatic impact on estimated half-life, especially given the uncertainty in the applicability of the 20 year old data to today’s environment. However, the saving/investment ratio plot above shows that the final calculated value is not overly sensitive to the number of years.
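The link between a fitted decay rate and the half-life can be sketched in Python. The sketch assumes a plain exponential model n0·exp(−rate·t), which may not be the exact form that was fitted, and it fits by least squares on the log of the counts rather than using nls; the data is synthetic, not the Tamai and Torimitsu data, and the names are hypothetical.

```python
import math

def fit_exponential(years, counts):
    """Least-squares fit of log(count) = log(n0) - rate * year; returns (n0, rate)."""
    logs = [math.log(c) for c in counts]
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(logs) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (v - mean_y) for x, v in zip(years, logs))
    slope = sxy / sxx
    return math.exp(mean_y - slope * mean_x), -slope

def half_life(rate):
    """Time for an exponentially decaying quantity to halve."""
    return math.log(2) / rate

# Synthetic survival counts generated from a known rate of 0.13 per year.
years = list(range(1, 11))
counts = [100 * math.exp(-0.13 * t) for t in years]
n0, rate = fit_exponential(years, counts)
print(round(half_life(rate), 2), round(half_life(rate + 0.02), 2), round(half_life(rate - 0.02), 2))
```

Because the half-life is inversely proportional to the rate, a change of a couple of hundredths in a rate this small moves the half-life by a year or so.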

Is the data believable?

The data came from a questionnaire sent to the information systems division of corporations using mainframes in Japan during 1991.

It could be argued that things have stabilised over the last 20 years and complete software replacements are rare, with most being updated over longer periods, or that growing customer demands are driving more frequent complete system replacement.

It could be argued that large companies have larger budgets than smaller companies and so have the ability to change more quickly, or that larger companies are intrinsically slower to change than smaller companies.

Given the age of the data and the application environment it came from, a reasonably wide margin of uncertainty must be assigned to any usage patterns extracted.

Summary

Based on the available data an investment during development must recoup a benefit during maintenance that is at least twice as great in percentage terms to break even:

- systems with a yearly survival rate of less than 90% must have a benefit/investment rate greater than two if they are to break even,

- systems with a development/yearly maintenance rate of greater than 20% must have a benefit/investment rate greater than two if they are to break even.

The available software system replacement data is not reliable enough to suggest any more than that the estimated half-life might be between 4 and 8 years.

This analysis only considers systems that have been delivered and started to be used. Some projects are cancelled before reaching this stage, and including these in the analysis would increase the benefit/investment break even ratio.

Agreement between code readability ratings given by students

I have previously written about how we know nothing about code readability and questioned how the information content of expressions might be calculated. Buse and Weimer ran a very interesting experiment that asked subjects to rate short code snippets for readability (somebody please rerun this experiment using professional software developers).

I’m interested in measuring how well different student subjects agree with each other (I have briefly written about this before).

Short answer: Very little agreement between individual pairs, good agreement between rankings aggregated by year.

The longer answer is below as another draft section from my book Empirical software engineering with R. As always, comments are welcome. R code and data here.

Readability

Source code is often said to have an attribute known as readability.

A study by Buse and Weimer <book Buse_08> asked Computer Science students to rate short snippets of Java source code on a scale of 1 to 5. Buse and Weimer then searched for correlations between these ratings and various source code attributes they obtained by measuring the snippets.

Humans hold diverse opinions, have fragmented knowledge and beliefs about many topics and vary in their cognitive abilities. Any study involving human evaluation that uses an open ended problem on which subjects have had little experience is likely to see a wide range of responses.

Readability is a very nebulous term and students are unlikely to have had much experience working with source code. A wide range of responses is to be expected and the analysis performed here aims to check the degree of readability rating agreement between the subjects.

Data

The data made available by Buse and Weimer are the ratings, on a scale of 1 to 5, given by 121 students to 100 snippets of source code. The student subjects were drawn from those taking first, second and third/fourth year Computer Science degree courses and postgraduates at the researchers’ University (17, 65, 31 and 8 subjects respectively).

The postgraduate data was not used in this analysis because of the small number of subjects.

The source of the code snippets is also available but not used in this analysis.

Is the data believable?

The subjects were not given any instructions on how to rate the code snippets for readability. Also, we don’t know what outcome they were trying to achieve when rating, e.g., were they rating on the basis of how readable they personally found the snippets to be, or rating on the basis of the answer they would expect to give if they were being tested in an exam.

The subjects were students who are learning about software development and many of them are unlikely to have had any significant development experience outside of the teaching environment. Experience shows that students vary significantly in their ability to read and write source code and a non-trivial percentage do not go on to become software developers.

Because the subjects are at an early stage of learning about code it is to be expected that their opinions about readability will change while they are rating the 100 snippets. The study did not include multiple copies of some snippets; doing so would have enabled the consistency of individual subject responses to be estimated.

The results of many studies <book Annett_02> have shown that most subject ratings are based on an ordinal scale (i.e., there is no fixed relationship between the difference between a rating of 2 and 3 and a rating of 3 and 4), that some subjects are overly generous or miserly in their rating and that without strict rating guidelines different subjects apply different criteria when making their judgements (which can result in a subject providing a list of ratings that is inconsistent with every other subject).

Readability is one of those terms that developers use without having much idea what they and others are really referring to. The data from this study can at most be regarded as treating readability to be whatever each subject judges it to be.

Predictions made in advance

Is the readability rating given to code snippets consistent between different students on a computer science course?

The hypothesis is that the between student consistency of the readability rating given to code snippets improves as students progress through the years of attending computer science courses.

Applicable techniques

There are a variety of techniques for estimating rater agreement. <Krippendorff’s alpha> can be applied to ordinal ratings given by two or more raters and is used here.

Subjects do not have to give the same rating to share some degree of consistent response. Two subjects may share a similar pattern of increasing/decreasing/stay the same ratings across snippets. The <Spearman rank correlation> coefficient can be used to measure the correlation between the rank (i.e., relative value within sequence) of two sequences.

Results

When creating the snippets the researchers had no method of estimating what rating subjects would give to them and so there is no reason to expect a uniform distribution of rating values or any other kind of distribution of rating values.

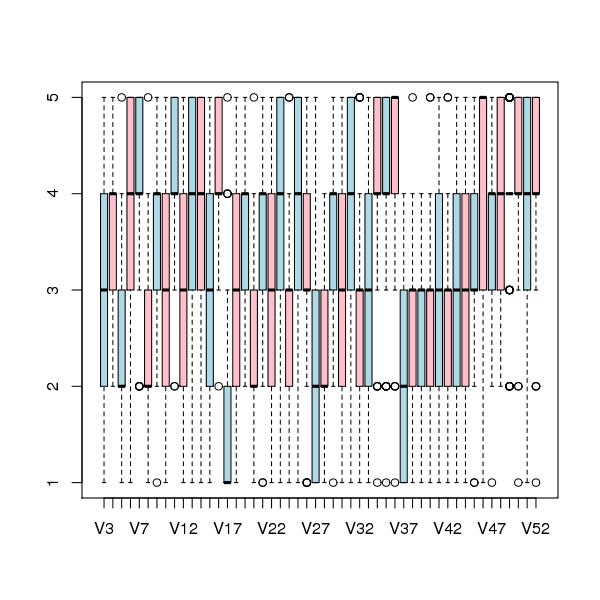

The figure below is a boxplot of the rating of the first 50 code snippets rated by second year students and suggests that many subject ratings are within ±1 of each other.

Figure 1. Boxplot of ratings given to snippets 1 to 50 by second year students (colors used to help distinguish boxplots for each snippet).

Between subject rating agreement

The Krippendorff alpha and mean Spearman rank correlation coefficient (the coefficient is calculated for every pair of subjects and the mean value taken) were obtained using the kripp.alpha and meanrho functions from the irr package (a <Jackknife> was used to obtain the following 95% confidence bounds):

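| Measure | Year | Lower bound | Upper bound |
|---|---|---|---|
| Krippendorff's alpha | cs1 | 0.1225897 | 0.1483692 |
| Krippendorff's alpha | cs2 | 0.2768906 | 0.2865904 |
| Krippendorff's alpha | cs4 | 0.3245399 | 0.3405599 |
| mean Spearman's rho | cs1 | 0.1844359 | 0.2167592 |
| mean Spearman's rho | cs2 | 0.3305273 | 0.3406769 |
| mean Spearman's rho | cs4 | 0.3651752 | 0.3813630 |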
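How a jackknife produces confidence bounds can be sketched in Python. This is a generic leave-one-out illustration and not the R code used for the analysis: which unit was left out (presumably one subject at a time), the normal approximation and the function name are assumptions, and the data is made up.

```python
import math
from statistics import mean

def jackknife_interval(data, statistic, z=1.96):
    """Approximate 95% bounds for statistic(data) from leave-one-out estimates."""
    n = len(data)
    full = statistic(data)
    # Recompute the statistic n times, each time omitting one observation.
    leave_one_out = [statistic(data[:k] + data[k + 1:]) for k in range(n)]
    loo_mean = mean(leave_one_out)
    variance = (n - 1) / n * sum((v - loo_mean) ** 2 for v in leave_one_out)
    half_width = z * math.sqrt(variance)
    # Centred on the full-sample estimate; no bias correction is applied.
    return full - half_width, full + half_width

lower, upper = jackknife_interval([1, 2, 3, 4, 5], mean)
print(round(lower, 3), round(upper, 3))
```

For the mean, the jackknife standard error coincides with the usual standard error of the mean; its value lies in statistics such as rater agreement, where no simple formula is available.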

Taken as a whole there is little agreement. Perhaps there is greater consensus on the readability rating for a subset of the snippets. Recalculating using only those snippets whose rated readability across all subjects, by year, has a standard deviation less than 1 (around 22, 51 and 62% of snippets respectively) shows some improvement in agreement:

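| Measure | Year | Lower bound | Upper bound |
|---|---|---|---|
| Krippendorff's alpha | cs1 | 0.2139179 | 0.2493418 |
| Krippendorff's alpha | cs2 | 0.3706919 | 0.3826060 |
| Krippendorff's alpha | cs4 | 0.4386240 | 0.4542783 |
| mean Spearman's rho | cs1 | 0.3033275 | 0.3485862 |
| mean Spearman's rho | cs2 | 0.4312944 | 0.4443740 |
| mean Spearman's rho | cs4 | 0.4868830 | 0.5034737 |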

Between years comparison of ratings

The ratings from individual subjects are only available for one of their years at University. Aggregating the answers from all subjects in each year is one method of obtaining readability information that can be used to compare the opinions of students in different years.

How can subject ratings be aggregated to rank the 100 code snippets in order of what a combined group considers readable? The relatively large variation in mean value of the snippet ratings across subjects would result in wide confidence bounds for an aggregate based on ratings. Mapping each subject’s rating to a ranking removes the uncertainty caused by differences in mean subject ratings.

With 100 snippets assigned a rating between 1 and 5 by each subject there are going to be a lot of tied rankings. If, say, a subject gave 10 snippets a rating of 5 the procedure used is to assign them all the rank that is the mean of the ranks the 10 of them would have occupied if their ratings had been very slightly different, i.e., (1+2+3+4+5+6+7+8+9+10)/10 = 5.5. This process maps each student’s readability ratings to readability rankings; the next step is to aggregate these individual rankings.
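The tie handling described above can be sketched in Python. The function name is hypothetical, and the sketch assumes that the highest rating receives rank 1, as in the worked example.

```python
def average_ranks(ratings):
    """Rank from the highest rating (rank 1) down; ties share the mean of their ranks."""
    order = sorted(range(len(ratings)), key=lambda k: -ratings[k])
    ranks = [0.0] * len(ratings)
    start = 0
    while start < len(order):
        # Extend end to cover the run of items tied with the one at start.
        end = start
        while end + 1 < len(order) and ratings[order[end + 1]] == ratings[order[start]]:
            end += 1
        shared = (start + 1 + end + 1) / 2      # mean of ranks start+1 .. end+1
        for k in order[start:end + 1]:
            ranks[k] = shared
        start = end + 1
    return ranks

# Ten snippets rated 5 followed by two rated 3.
print(average_ranks([5] * 10 + [3] * 2))
# [5.5, 5.5, 5.5, 5.5, 5.5, 5.5, 5.5, 5.5, 5.5, 5.5, 11.5, 11.5]
```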

The RankAggreg R package contains a variety of functions for aggregating a collection of rankings to obtain a group ranking. However, these functions use the relative order of items in a vector to denote rank, and this form of data representation prevents them supporting ranked lists containing items having the same rank.

For this analysis a simple aggregate ranking algorithm using Borda’s method <book lin_10> was implemented. Borda’s method for creating an aggregate ranking operates on one item at a time, combining all of the subject ranks for that item into a single rank. Methods for combining ranks include taking their mean, their geometric mean and the square-root of the sum of their squares; the mean value was used for this analysis.
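A mean-rank aggregate of this kind can be sketched in Python. This is an illustration rather than the R code used for the analysis; the function names and the three subjects’ rankings of four snippets are hypothetical.

```python
from statistics import mean

def borda_aggregate(rank_lists):
    """rank_lists[s][k] is the rank subject s gave item k; returns the mean rank per item."""
    items = range(len(rank_lists[0]))
    return [mean(ranks[k] for ranks in rank_lists) for k in items]

def order_by_score(scores):
    """Item indexes sorted from best (lowest mean rank) to worst."""
    return sorted(range(len(scores)), key=lambda k: scores[k])

# Hypothetical rankings of four snippets by three subjects (ties already averaged).
subject_ranks = [
    [1.5, 1.5, 3, 4],
    [2, 1, 4, 3],
    [1, 3, 2, 4],
]
scores = borda_aggregate(subject_ranks)
print(order_by_score(scores))  # [0, 1, 2, 3]
```

Because each item is combined independently, tied ranks within a subject need no special treatment, which sidesteps the restriction of representations based on item order.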

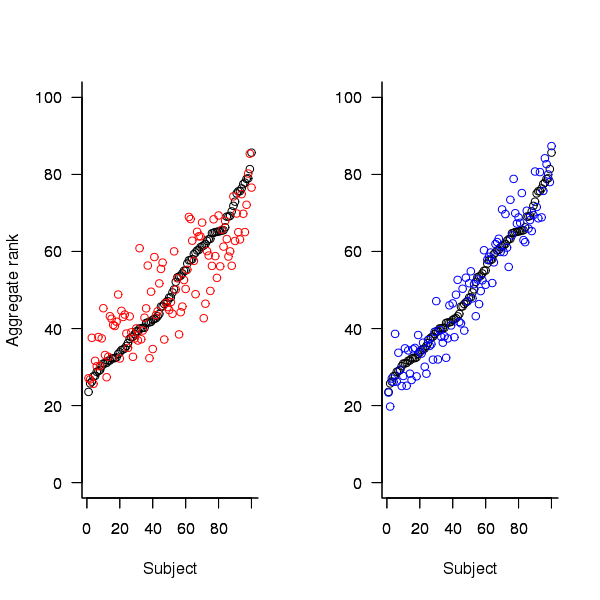

An aggregate ranking was created for subjects in years one, two and four and the plot below compares the ranking between 1st/2nd year students (left) and 2nd/4th year students (right). The second year student snippet rankings have been sorted and the other year rankings for the snippets mapped to the corresponding position.

Figure 2. Aggregated ranking of snippets by subjects in years 1 and 2 (red and black) and years 2 and 4 (black and blue). Snippets have been sorted by year 2 ranking.

The above plot seems to show that at the aggregated year level there is much greater agreement between the 2nd/4th years than any other year pairing and measuring the correlation between each of the years using <Kendall’s tau>:

| cs1.tau | cs2.tau | cs3.tau |
|---|---|---|
| 0.6627602 | 0.6337914 | 0.8199636 |

confirms the greater agreement between this aggregate year pair.
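Kendall’s tau compares every pair of items and counts how often two rankings agree on their relative order. The Python sketch below computes the tau-b variant, which adjusts for ties; whether the analysis used exactly this variant is an assumption, and the function name is hypothetical.

```python
import math
from itertools import combinations

def kendall_tau_b(x, y):
    """Kendall's tau-b between two equal-length sequences of ranks or scores."""
    concordant = discordant = ties_x = ties_y = 0
    for (x1, y1), (x2, y2) in combinations(zip(x, y), 2):
        dx, dy = x1 - x2, y1 - y2
        if dx == 0 and dy == 0:
            continue                 # tied in both sequences: counted in neither total
        if dx == 0:
            ties_x += 1              # tied only in x
        elif dy == 0:
            ties_y += 1              # tied only in y
        elif dx * dy > 0:
            concordant += 1          # both sequences order the pair the same way
        else:
            discordant += 1
    untied = concordant + discordant
    # The denominator is zero when every pair is tied in one of the sequences.
    return (concordant - discordant) / math.sqrt((untied + ties_x) * (untied + ties_y))

print(kendall_tau_b([1, 2, 3, 4], [1, 3, 2, 4]))
```

In the example one of the six pairs is ordered differently, giving (5 − 1)/6.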

Individual subject correlation to year aggregate ranking

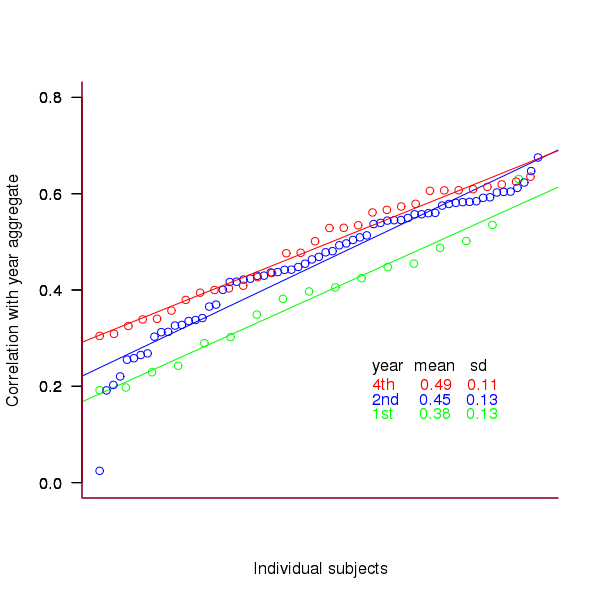

To what extent do subject ratings correlate with their corresponding year aggregate? The following plot gives the correlation, using Kendall’s tau, between each subject and their corresponding year aggregate ranking.

Figure 3. Correlation, using Kendall’s tau, between each subject and their corresponding year aggregate ranking.

The least squares fit shows that the variation in correlation across subjects in any year is very similar (removal of outliers in year 2 would make the lines almost parallel); the mean again shows a correlation that increases with year.

Discussion

The extent to which this study’s calculated values of rater agreement and correlation are considered worthy of further attention depends on the use to which the results will be put.

- From the perspective of trained raters the subject agreement in this study is very low and the ratings have no further use.

- From the research perspective the results show that the concept of readability in the computer science student population has some non-zero substance to it that might be worth further study.

- From an overall perspective this study provides empirical evidence for a general lack of consensus on what constitutes readability.

It is not surprising that there is little agreement between student subjects on their readability rating, they are unlikely to have had much experience reading code and have not had any training in rating code for readability.

Professional developers will have spent years working with code and this experience is likely to have resulted in the creation of stable opinions on code readability. While developers usually work with code that is much longer than the few lines contained in the snippets used by Buse and Weimer, this experiment format is easy to administer and supports a fine level of control, i.e., allows a small set of source attributes of interest to be presented while excluding those not of interest. Repeating this study using such people as subjects would show whether this experience results in convergence to general agreement on the readability rating of code.

Summary of findings

The agreement between students’ readability ratings, for short snippets of code, improves as the students progress through course years 1 to 4 of a computer science degree.

While there is very good aggregated group agreement on the relative ranking of the readability of code snippets there is very little agreement between pairs of individuals.

- Two students chosen at random from within a year will have a low Spearman rank correlation coefficient for their rating of code snippet readability.

- Taken as a yearly aggregate there is a high degree of agreement between years two and four and less, but still good, agreement between year 1 and other years.

- There is a broad range of correlations, from poor to good, between year aggregates and student subjects in the corresponding year.
