
How much productive work does a developer do?

Measuring developer productivity is a nightmare issue that I do my best to avoid.

Study after study has found that workers organise their work to suit themselves. Why should software developers be any different?

Studies of worker performance invariably find that the rate of work is higher when workers are being watched by researchers/managers; this behavior is known as the Hawthorne effect. These studies invariably involve some form of production line work involving repetitive activities. Time is a performance metric that is easy to measure for repetitive activities, and directly relatable to management interests.

A study by Bernstein found that production line workers slowed down when observed by management. On the production line studied, it was not possible to get the work done in the allotted time using the management prescribed techniques, so workers found more efficient techniques that were used when management were not watching.

I have worked on projects where senior management decreed that development was to be done according to some latest project management technique. Developers quickly found that the decreed technique was preventing work being completed on time, so ignored it while keeping up a facade to keep management happy (who appeared to be well aware of what was going on). Other developers have told me of similar experiences.

Studies of software developer performance often implicitly assume that whatever the workers (i.e., developers) say must be so; no thought is given to the possibility that the workers are promoting work processes that suit their interests rather than management's.

Just like workers in other industries, software developers can be lazy, uninterested in doing a good job, unprofessional, slackers, etc.

Hard-working, diligent developers can be just as likely as the slackers to organise work to suit themselves. A good example of this is adding product features that the developer wants to add, rather than features that the customer wants to use, or working on features/performance that exceed the original requirements (known as gold plating in other industries).

Developers will lobby for projects to use the latest language/package/GUI/tools in their work. While issues around customer/employer cost/benefit might be cited as a consideration, evidence, in the form of a cost/benefit analysis, is not usually given.

Like most people, developers want others to have a good opinion of them. As writers of code, developers can attach a lot of weight to how its quality will be perceived by other developers. One consequence of this is a willingness to regularly spend time polishing good-enough code. An economic cost/benefit analysis of refactoring is rarely a consideration.

The first step of finding out if developers are doing productive work is finding out what they are doing, or even better, planning in some detail what they should be doing.

Developers are not alone in disliking having their activities constrained by detailed plans. Detailed plans imply some form of estimates, and people really hate making estimates.

My view of the rationale for estimating in story points (i.e., monopoly money) is that they relieve the major developer pushback on estimating, while allowing management to continue to create short-term (e.g., two weeks) plans. The assumption made is that the existence of detailed plans reduces worker wiggle-room for engaging in self-interest work.

Focus of activities planned for 2023

In 2023, my approach to evidence-based software engineering pivots away from past years, which were about maximizing the amount of software engineering data gathered.

I plan to spend a lot more time attempting to join dots (i.e., finding useful patterns in the available data), and I also plan to spend time collecting my own data (rather than other peoples’ data).

I will continue to keep asking people for data, and I'm sure that new data will become available (and be the subject of blog posts). The amount of previously unseen data obtained by continuing to read pre-2020 papers is likely to be very small, and not worth targeting. Post-2020 papers will be the focus of my search for new data (mostly conference proceedings and arXiv's software engineering recent submissions).

It would be great if there was an active community of evidence-based developers. The problem is that the people with the necessary skills are busily employed building real systems. I’m hopeful that people with the appropriate background and skills will come out of the woodwork.

Ideally, I would be running experiments with developer subjects; this is the only reliable way to verify theories of software engineering. While it’s possible to run small scale experiments with developer volunteers, running a workplace scale experiment will be expensive (several million pounds/dollars). I don’t move in the circles frequented by the very wealthy individuals who might fund such an experiment. So this is a back-burner project.

if-statements continue to be of great interest to me; they represent decisions that relate to requirements and tests that need to be written. I used to spend a lot of time measuring source code, mostly C: how the same variable is tested in nested conditions, the use of else arms, and the structuring of conditions within a function. The availability of semgrep will, hopefully, enable me to measure various aspects of if-statement usage across different languages.

I hope that my readers continue to keep their eyes open for interesting software engineering data, and will let me know when they find any.

Impact of team size on planning, when sitting around a table

A recent blog post by Allan Kelly caught my attention; on Monday Allan sent me some comments on the draft of my book and I got to ask for a copy of his data (you don’t need your own software engineering data before sending me comments).

During an Agile training course he gives, Allan runs an exercise based on an extended version of the XP game. The basic points are: people form into teams, a task is announced, teams have to estimate how long it will take them to complete the task and then to plan the task implementation. Allan recorded information on team size, time spent estimating and time spent planning (no information on the tasks, which were straightforward, e.g., fold a paper airplane).

In a recent post I gave a brief analysis of the impact of team size on productivity. What does this XP game data have to say about the impact of team size on performance?

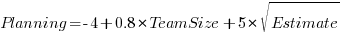

We don’t have task information, but we do have two timing measurements for each team. With a bit of suck-it-and-see analysis, I found that the following equation explained 50% of the variance (code+data):

where the variables are the number of people on a team, the time in minutes the team spent estimating, and the time in minutes the team spent planning the task implementation.

There was some flexibility in the numbers, depending on the method used to build the regression model.

The introduction of each new team member incurs a fixed overhead. Given that everybody is sitting together around a table, this is not surprising. Or perhaps the problem was so simple that nobody felt the need to give a personal response to everything said by everybody else. Or perhaps the exercise was run just before lunch and people were hungry.

I am not aware of any connection between time spent estimating and time spent planning, but then I know almost nothing about this kind of XP game exercise. That square-root looks interesting (an exponent of 0.4 or 0.6 was a slightly less good fit). Thoughts and experiences anybody?
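The fitted equation itself is missing from this copy of the post. The sketch below therefore assumes a form suggested by the surrounding text:

- planning time is linear in team size (a fixed overhead per member);
- planning time depends on a power of estimating time, with a square root as the default.

It fits that form by ordinary least squares (normal equations, pure Python). It reports the fraction of variance explained (R²) so that exponents such as 0.4, 0.5 and 0.6 can be compared. The function names and the model form are assumptions; this is not the analysis used for the post.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back-substitution
        s = m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = s / m[r][r]
    return x


def fit_planning_model(team_size, estimate_min, planning_min, power=0.5):
    """Assumed model: planning = b0 + b1*team_size + b2*estimate**power.

    Returns (coefficients, r_squared).
    """
    rows = [[1.0, float(t), e ** power] for t, e in zip(team_size, estimate_min)]
    k = len(rows[0])
    # Normal equations: (X^T X) beta = X^T y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, planning_min)) for i in range(k)]
    beta = solve_linear(xtx, xty)
    fitted = [sum(b * v for b, v in zip(beta, r)) for r in rows]
    mean_y = sum(planning_min) / len(planning_min)
    ss_res = sum((y - f) ** 2 for y, f in zip(planning_min, fitted))
    ss_tot = sum((y - mean_y) ** 2 for y in planning_min)
    return beta, 1.0 - ss_res / ss_tot


def compare_powers(team_size, estimate_min, planning_min, powers=(0.4, 0.5, 0.6)):
    """Map each candidate exponent to the R^2 of the corresponding fit."""
    return {p: fit_planning_model(team_size, estimate_min, planning_min, p)[1]
            for p in powers}
```

With the workshop measurements loaded into three equal-length lists, `compare_powers` returns one R² per exponent. The exponent with the largest value is the best fit among those tried. Least squares is only one way of building the model; as noted above, other methods give somewhat different numbers.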

Update: I forgot to mention that including the date of the workshop in the above model increases the variance explained to 90%. The date here is a proxy for the task being solved. A model that uses just the date explains 75% of the variance.
