
Language usage in Google’s ngram viewer

I thought I would join the fun that people are having with Google’s new ngram viewer. The raw data (only a subset for bigrams and longer ngrams) was also enticing, but at 35+ gigabytes for the compressed 1/2/3-grams of English-all I decided to forgo the longer n-grams.

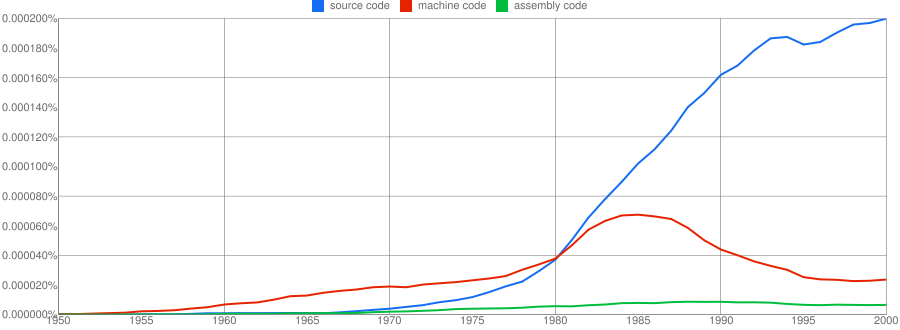

We all know that in the dim and distant past most programmers wrote in machine code, but it was not until 1980 that “source code” appeared more frequently in books than “machine code”.

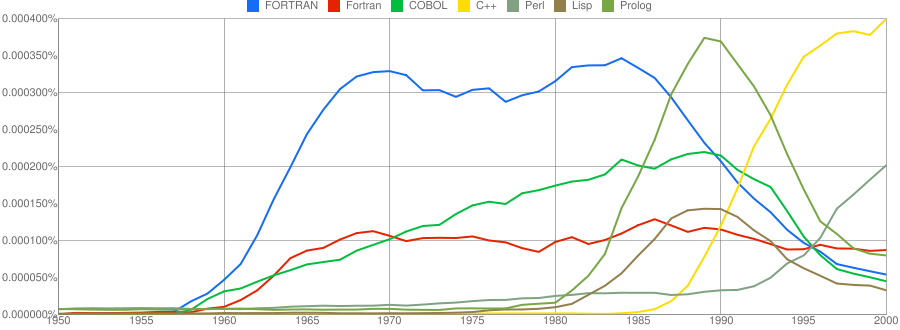

Computer language popularity is a perennial question. Fortran and Cobol address very different markets and I would have expected their usage to follow similar patterns, with “COBOL” having the obvious usage pattern for them both to follow. Instead, both “FORTRAN” and “Fortran” peaked within 10 years, with one staying there for another 20 years before declining and the other still going strong in 2000 (and still ahead of “PHP” and “Python” in 2000; neither shown to keep the clutter down). I am surprised to see “Prolog” usage being so much greater than “Lisp” and I would have expected “Lisp” to have a stronger presence in the 1970s.

I think the C++ crowd will be surprised to see that in 2000 usage was not much greater than what “FORTRAN” had enjoyed for 20 years.

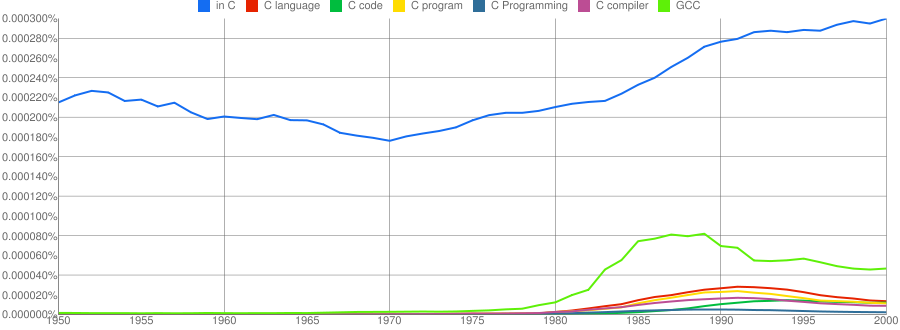

“C”, as in language, usage is obviously difficult to reliably measure. I have tried the obvious bigrams. Looking at some of the book matches for the phrase “in C” shows that the OCR process has sometimes inserted spaces that probably did not exist in the original, the effect being to split words and create incorrect bigrams. The phrase “in C” would also appear in books on music.

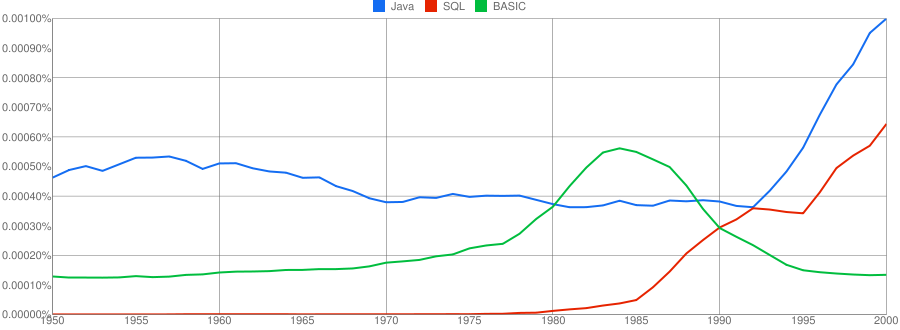

I have put the three words “Java”/“SQL”/“BASIC” in a separate plot because their usage swamps that of the other languages. Java obviously has multiple non-computer related uses and subtracting the estimated background usage suggests a language usage similar to that of “SQL”. There is too much noise for the usage of “Basic” to tell us much.

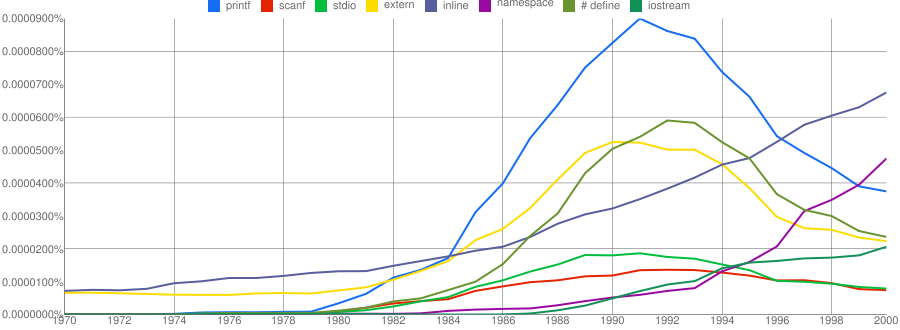

One way of comparing C/C++ language usage is to look at source code usage where they are likely to differ. I/O, in the form of printf/scanf and stdio/iostream, is one obvious choice, and while the expected C usage starts to decline in the 1990s the C++ usage just shows a steady growth (perhaps the <</>> usage, which does not appear in the Google viewer, has a dramatic growth during this time period).

Surprisingly #define also follows a similar pattern of decline. Even allowing for the rabid anti-macro rhetoric of the C++ in-crowd I would not have expected such a rapid decline. Perhaps this is some artifact of the book selection process used by Google; but then "namespace" shows a healthy growth around this time period.

The growth of "inline" over such a long period of time is a mystery. Perhaps some of this usage does not relate to a keyword appearing within source code examples but to text along the lines of "put this inline to make it faster".

What usage should we expect for the last decade? A greater usage of "PHP" and "Python" is an obvious call to make, along with the continuing growth of SQL; I think "UML" will also feature prominently. Will "C++" show a decline in favor of "Java" and what about "C#"? We will have to wait and see.

Oracle/Google ‘Java’ patents lawsuit

At the technical level the Oracle ‘Java’ lawsuit against Google is not really about Java at all. The patents cited in the lawsuit (5,966,702, 6,061,520, 6,125,447, 6,192,476, RE38,104, 6,910,205 and 7,426,720) involve a variety of techniques that might be used in the implementation of a virtual machine supporting JIT compilation for software written in any language; Oracle does not get into the specifics of which parts of Android make use of these patented techniques (Google has not released the Dalvik source code). With regard to the claimed copyright infringement, I have no idea what Oracle claim has been copied; as I understand it Android uses Apache’s Harmony code for its Dalvik VM runtime library, but there is a possibility that it might have used some of Oracle’s gpl’ed code.

No doubt Google’s lawyers will be asking for specific details and once these are provided they have three options: 1) find prior art that invalidates the patent, 2) recode the implementation so it does not infringe the patent or 3) negotiate a license with Oracle.

My only experience in searching for prior art was as an adviser to the Monitoring Trustee appointed by the European Commission in the EU/Microsoft competition court case; Microsoft’s existing patents were handled by a patent lawyer and I looked at some of the non-patented innovations being claimed by Microsoft.

The obvious tool to use in a search for prior art is Google and this worked well for me provided the information was created after the mid to late 1990s. The cut-off date for historical searches seemed to be around 1993 (this all happened before Google Books was released). Other sources of information included old books (I had not moved in 15 years and my book collection had not been culled) and computer manuals, and one adviser found what he was looking for in some old computer magazines bought on eBay.

Some claimed innovations appeared so blindingly obvious that it was difficult to believe that anybody would bother mentioning them in print. Oracle’s patent 6,910,205 “Interpreting functions utilizing a hybrid of virtual and native machine instructions” (actually a continuation of 6,513,156, filed five years earlier in 1997) describes an obvious method of handling the interface between interpreted and JIT compiled code.

My only implementation experience in this area does predate the Oracle patent (I wrote a native code generator for the UCSD Pascal p-code machine in the mid-80s) but the translation occurred before program execution and so is not appropriate prior art. Virtual machines fell out of favor in the late 80s and when they were revived by Java 10 years later I was no longer working on compilers.

Fans of Lisp are always claiming that everything was first done by the Lisp community and there were certainly Lisp compilers around in the 1980s, but I’m not sure if any of them worked during program execution.

Of course Groklaw have started following this case. Kodak has shown that it is possible to win in court over Java related patents. The Sun (now Oracle)/Microsoft Java lawsuit in the late 1990s was a contract dispute and not about patents/copyright, but still about money/control.

I’m not aware of any previous language implementation patent lawsuits that have gone to court. It will be interesting to see how much success Google have in finding prior art and whether it is possible to implement a JVM that does not make use of any of the techniques specified in the Oracle patents (I suspect that they have a few more that they have not yet cited).

What language was an executable originally written in?

Apple have recently added an unusual requirement to the iPhone developer agreement “Applications must be originally written in Objective-C, C, C++, or JavaScript …”. As has been pointed out elsewhere the real purpose is to stop third parties from acquiring any control over application development on Apple’s products; the banning of other languages is presumably regarded as acceptable collateral damage.

Is it possible to tell by analyzing an executable what language it was originally written in?

There are two ways in which executables contain source language ‘signatures’. Detecting these signatures requires knowledge of specific compiler behavior, i.e., a database of information about the behavior of compilers capable of creating the executables is needed.

Runtime library. Most compilers make use of a language specific runtime library, rather than generating inline code for some kinds of functionality. For instance, setjmp/longjmp in C and vtables in C++.

The presence of a known C runtime library does not guarantee that the application was originally written in C; it could have been written in Java and converted to C source.

The absence of a known C runtime library could mean that the source was compiled by a C compiler using a runtime system unknown to the analyzer.

The presence of a known Java, for instance, runtime library would suggest that the original source contained some Java. This kind of analysis would obviously require that the runtime library database not restrict itself to the ‘C’ languages.
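
The runtime library check described above can be sketched as a lookup of symbol names against a signature database. This is a minimal sketch, not a real analyzer: the `RUNTIME_SIGNATURES` table and the `guess_languages` function are hypothetical names, the handful of prefixes listed is only illustrative, and the sketch assumes the symbol names have already been extracted from the executable by some other tool.

```python
# Hypothetical signature database: symbol-name prefixes mapped to the
# language runtime they hint at. Illustrative only, far from complete.
RUNTIME_SIGNATURES = {
    "__cxa_": "C++",            # C++ ABI support routines, e.g. __cxa_throw
    "_gfortran_": "Fortran",    # libgfortran entry points
    "objc_msgSend": "Objective-C",
    "__gnat_": "Ada",           # GNAT runtime routines
}


def guess_languages(symbols):
    """Return the set of languages whose runtime symbols appear in `symbols`."""
    found = set()
    for name in symbols:
        for prefix, language in RUNTIME_SIGNATURES.items():
            if name.startswith(prefix):
                found.add(language)
    return found


if __name__ == "__main__":
    # Symbol names are assumed to have been extracted beforehand.
    print(guess_languages(["printf", "__cxa_throw", "_gfortran_st_write"]))
```

An empty result says nothing about the source language; as noted above, it may only mean that the runtime system is unknown to the database.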

Compiler behavior patterns. There is usually more than one way in which a source language construct can be translated to machine code and a compiler has to pick one of them. The perfect optimizing compiler would always make the optimal choice, but real compilers follow a fixed pattern of code generation for at least some language constructs (e.g., initialization of registers on function entry).

The presence of known code patterns in an executable is evidence that a particular compiler has been used; how much depends on the likelihood it could have been generated by other means and how many other patterns suggest the same compiler. In the case of the GNU Compiler Collection the source language might also be Fortran, Java or Ada; I don’t know enough about the behavior of GCC to provide an informed estimate of whether it is possible to recognize the source language from the translated form of constructs shared by several languages, but I suspect not.

The fact that an executable can be decompiled to C is not a guarantee that it was originally written in C.

Some languages support source language constructs whose corresponding machine code is unlikely to ever be generated by source from another language. The Fortran computed goto allows constructs to be written that have no equivalent in the other languages supported by GCC (none of them allow statement labels appearing in a multi-way jump to appear before the jump test):

```fortran
10    I=I+1
20    J=J+1
      goto (10, 20, 30, 40) J
30    I=I+3
40    I=I*2
```

The presence of a compiled form of this kind of construct in the executable would be very suggestive of Fortran source.
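
To make the control flow of the computed goto concrete, here is a small Python simulation of the fragment above. It is only a sketch: the function name `run_computed_goto` and the starting values are my own choices, and it assumes the usual Fortran rule that an out-of-range index falls through to the statement following the goto.

```python
def run_computed_goto(i=0, j=0):
    """Simulate the Fortran fragment, starting execution at label 10."""
    targets = (10, 20, 30, 40)
    label = 10
    while True:
        if label == 10:
            i += 1
            label = 20
        elif label == 20:
            j += 1
            # Computed goto: J selects a label (1-based); an out-of-range
            # value falls through to the next statement, which is label 30.
            label = targets[j - 1] if 1 <= j <= len(targets) else 30
        elif label == 30:
            i += 3
            label = 40
        else:  # label 40, the last statement of the fragment
            i *= 2
            return i, j


if __name__ == "__main__":
    print(run_computed_goto())
```

Two of the four jump targets sit before the jump itself, so the multi-way branch doubles as a loop; this is the shape that the other GCC languages cannot express directly.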

Apple are famous for their paranoia and control freakery. It will be very interesting to see what level of compliance checking they decide to perform on executables submitted to the App Store.

On another note: What does “originally written” mean? For instance, many of the mathematical functions (e.g., sine, log, gamma, etc) contained in R were originally written in Fortran and translated to C for use in R; this C source is what is now maintained. Does this historical implementation decision mean that R cannot be legally ported to the iPhone?

Register vs. stack based VMs

Traditionally the virtual machine architecture of choice has been the stack machine; benefits include simplicity of VM implementation, ease of writing a compiler back-end (most VMs are originally designed to host a single language) and code density (i.e., executables for stack architectures are invariably smaller than executables for register architectures).

For a stack architecture to be an effective solution two conditions need to be met:

- The generated code has to ensure that the top of stack is kept in sync with where the next instruction expects it to be. For instance, on its return a function cannot leave stuff lying around on the stack like it can leave values in registers (whose contents can simply be overwritten).

- Instruction execution needs to be generally free of state, so an add-two-integers instruction should not have to consult some state variable to find out the size of integers being added. When the values of such state variables have to be saved and restored around function calls they effectively become VM registers.

Cobol is one language where it makes more sense to use a register based VM. I wrote one and designed two machine code generators for the MicroFocus Cobol VM and always find it difficult to explain to people what a very different kind of beast it is compared to the VMs usually encountered.

Parrot, the VM designed as the target for compiled Perl, is register based. A choice driven, I suspect, by the difficulty of ensuring a consistent top-of-stack and perhaps the dynamic typing of the language.

On register based cpus with 64k of storage the code density benefits of a stack based VM are usually sufficient to cancel out the storage overhead of the VM interpreter and support a more feature rich application (provided speed of execution is not crucial).

If storage capacity is not a significant issue and a VM has to be used, what are the runtime performance differences between a register and stack based VM? Answering this question requires compiling and executing the same set of applications for the two kinds of VM. Something that until 2001 nobody had done, or at least not published the results.

A comparison of the Java (stack based) VM with a register VM (The Case for Virtual Register Machines) found that while the stack based code was more compact, fewer instructions needed to be executed on the register based VM.

Most VM instructions are very simple and take relatively little time to execute. When hosted on a pipelined processor the main execution time overhead of a VM is the instruction dispatch (Optimizing Indirect Branch Prediction Accuracy in Virtual Machine Interpreters) and reducing the number of VM instructions executed, even if they are larger and more complicated, can produce a worthwhile performance improvement.
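
The trade-off between instruction count and instruction size can be illustrated with two toy interpreters evaluating `a = b + c`. This is a sketch under stated assumptions: both instruction sets are invented for the example (they are not those of the JVM, Dalvik or any other real VM), and the dispatch counter only counts instructions, it does not measure time.

```python
def run_stack(code, env):
    """Interpret toy stack code; return (env, instructions dispatched)."""
    stack, dispatched = [], 0
    for op, arg in code:
        dispatched += 1
        if op == "push":
            stack.append(env[arg])
        elif op == "add":
            right, left = stack.pop(), stack.pop()
            stack.append(left + right)
        elif op == "store":
            env[arg] = stack.pop()
        else:
            raise ValueError(f"unknown stack opcode {op!r}")
    return env, dispatched


def run_register(code, env):
    """Interpret toy three-address code; return (env, instructions dispatched)."""
    dispatched = 0
    for op, dst, src1, src2 in code:
        dispatched += 1
        if op == "add":
            env[dst] = env[src1] + env[src2]
        else:
            raise ValueError(f"unknown register opcode {op!r}")
    return env, dispatched


# a = b + c in each (invented) instruction set
STACK_CODE = [("push", "b"), ("push", "c"), ("add", None), ("store", "a")]
REGISTER_CODE = [("add", "a", "b", "c")]

if __name__ == "__main__":
    print(run_stack(STACK_CODE, {"b": 2, "c": 3}))
    print(run_register(REGISTER_CODE, {"b": 2, "c": 3}))
```

The stack version dispatches four small instructions where the register version dispatches one larger one, which is the effect the measurements cited above rely on.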

Google has chosen a register based VM for its Android platform. While licensing issues may have been a consideration there are a number of technical advantages to this decision:

- A register VM is likely to have an intrinsic performance advantage over a stack VM when hosted on a pipelined processor.

- Byte code verification is likely to be faster on a register VM (i.e., faster startup times) because stack height integrity checks will be greatly simplified.

- A register VM will be more forgiving of incorrect code (in the VM, generated by the compiler, code corrupted during program transmission or storage attacked by malware) than a stack VM.
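
The stack height integrity check mentioned in the second point can be sketched for straight-line code as follows. This is a minimal sketch, assuming an invented opcode table (`STACK_EFFECT`) and a hypothetical function name; a real verifier also has to follow branches and confirm that every path reaching an instruction arrives with the same stack height, which is where most of the cost lies.

```python
# Hypothetical stack effects: (values popped, values pushed) per opcode.
STACK_EFFECT = {
    "push": (0, 1),
    "add": (2, 1),
    "store": (1, 0),
    "pop": (1, 0),
}


def check_stack_heights(opcodes, expected_final=0):
    """Return None if the straight-line code is consistent, else a message."""
    height = 0
    for index, op in enumerate(opcodes):
        popped, pushed = STACK_EFFECT[op]
        if height < popped:
            return f"underflow at instruction {index} ({op})"
        height += pushed - popped
    if height != expected_final:
        return f"final height {height}, expected {expected_final}"
    return None


if __name__ == "__main__":
    print(check_stack_heights(["push", "push", "add", "store"]))
    print(check_stack_heights(["push", "add"]))
```

Register code has no stack height to track for expression evaluation, so this class of check largely disappears.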

Assuming compilers are clever enough (part 1)

Developers often assume the compiler they use will do all sorts of fancy stuff for them. Is this because they are lazy and happy to push responsibility for parts of the code they write on to the compiler, or do they actually believe that their compiler does all the clever stuff they assume?

An example of unmet assumptions about compiler performance is the use of const in C/C++, final in Java or readonly in other languages. These are often viewed as a checking mechanism, i.e., the developer wants the compiler to check that no attempt is made to, accidentally, change the value of some variable, perhaps via code added during maintenance.

The surprising thing about variables in source code is that approximately 50% of them don’t change once they have been assigned a value (A Theory of Type Qualifiers for C measurements and Automatic Inference of Stationary Fields for Java).

Developers don’t use const/final qualifiers nearly as often as they could. Most modern compilers can deduce if a locally defined variable is only assigned a value once and make use of this fact during optimization. It takes a lot more resources to deduce this information for non-local variables; developers want their compiler to be fast and so implementors don’t want them waiting around while whole program analysis is performed.
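
The local analysis is cheap because it only needs to look at one function at a time. Here is a minimal sketch of the idea, using Python's `ast` module on Python source purely as a stand-in for what a C or Java compiler does on its own internal representation; the names `_StoreCounter` and `single_assignment_locals` are hypothetical, parameters are ignored, and any assignment inside a loop is conservatively treated as happening more than once.

```python
import ast


class _StoreCounter(ast.NodeVisitor):
    """Count assignments to each name, weighting those inside loops."""

    def __init__(self):
        self.counts = {}
        self.loop_depth = 0

    def visit_Name(self, node):
        if isinstance(node.ctx, ast.Store):
            # A store inside a loop may execute many times: count it twice.
            weight = 2 if self.loop_depth else 1
            self.counts[node.id] = self.counts.get(node.id, 0) + weight

    def _visit_loop(self, node):
        self.loop_depth += 1
        self.generic_visit(node)
        self.loop_depth -= 1

    visit_For = visit_While = _visit_loop


def single_assignment_locals(source):
    """Map each function name to the sorted locals assigned exactly once."""
    result = {}
    for func in ast.walk(ast.parse(source)):
        if isinstance(func, ast.FunctionDef):
            counter = _StoreCounter()
            counter.generic_visit(func)  # visit the function's children
            result[func.name] = sorted(
                name for name, count in counter.counts.items() if count == 1
            )
    return result


EXAMPLE = (
    "def area(width, height):\n"
    "    scale = 2\n"
    "    total = 0\n"
    "    for step in range(3):\n"
    "        total += step\n"
    "    return scale * width * height + total\n"
)

if __name__ == "__main__":
    print(single_assignment_locals(EXAMPLE))
```

In the example only `scale` qualifies as a candidate for a const/final style qualifier; doing the same for non-local variables would require looking at every function that might assign to them.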

Why don’t developers make more use of const/final qualifiers? Is this usage, or lack of, an indicator that developers don’t have an accurate grasp of variable usage, or that they don’t see the benefit of using these qualifiers, or perhaps they pass responsibility on to the compiler (program size seems to grow sufficiently fast that whole program optimization often consumes more memory than is likely to be available; and when are motherboards going to break out of the 4G limit)?

Using local context to disambiguate source

Developers can often do a remarkably good job of figuring out what a snippet of code does without seeing (i.e., knowing anything about) most of the declarations of the identifiers involved. In a previous post I discussed how frequency of occurrence information could be used to help parse C without using a symbol table. Other information that could be used is the context in which particular identifiers occur. For instance, in:

```c
f(x);
y = (f)z;
```

while the code f(x); is probably a function call, the use of f as the type in a cast means that f(x) is actually a definition of an object x having type f.
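
The reasoning in this example can be sketched as a two-step heuristic: first collect the names that appear in cast position, then use that set to classify statements of the form `name(ident);`. This is a rough sketch only, with hypothetical function names and a crude tokenizer; it has no knowledge of C keywords, so a cast following `return`, for instance, is missed.

```python
import re

_TOKEN = re.compile(r"[A-Za-z_]\w*|\d+|\S")
_IDENT = re.compile(r"[A-Za-z_]\w*\Z")


def names_used_as_cast_types(source):
    """Return names appearing as `(name)` directly before an identifier."""
    tokens = _TOKEN.findall(source)
    types = set()
    for i in range(len(tokens) - 3):
        before = tokens[i - 1] if i > 0 else ";"
        if (tokens[i] == "(" and _IDENT.match(tokens[i + 1])
                and tokens[i + 2] == ")" and _IDENT.match(tokens[i + 3])
                # `if (x) y` or `f(x) y`: the parenthesis follows a name,
                # so it is treated as a condition or a call, not a cast.
                and not _IDENT.match(before) and before not in (")", "]")):
            types.add(tokens[i + 1])
    return types


def classify_paren_statement(source, name):
    """Guess what the statement `name(ident);` means within `source`."""
    if name in names_used_as_cast_types(source):
        return "declaration"
    return "function call"


if __name__ == "__main__":
    print(classify_paren_statement("f(x); y = (f)z;", "f"))
    print(classify_paren_statement("f(x); y = g(z);", "f"))
```

A fuller version would weigh this evidence against the frequency of occurrence information rather than treat a single cast as decisive.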

A project investigating the analysis of partial Java programs uses this context information as its sole means of disambiguating Java source (while they do build a symbol table they do not analyze the source of any packages that might be imported). Compared to C Java parsers have it easy, but Java’s richer type system means that semantic analysis can be much more complicated.

On a set of benchmarks the researchers obtained a very reasonable 91.2% accuracy in deducing the type of identifiers.

There are other kinds of information that developers probably use to disambiguate source: the operation that the code is intended to perform and the identifier names. Figuring out the ‘high level’ operation that code performs is a very difficult problem, but the names of Java identifiers have been used to predict object lifetime and appear to be used to help deduce operator precedence. Parsing source by just looking at the identifiers (i.e., treating all punctuators and operators as whitespace) has been on my list of interesting projects to do for some time, but projects that are likely to provide a more immediate interesting result keep getting in the way.
