
Cost/performance analysis of 1944-1967 computers: Knight’s data

Changes In Computer Performance and Evolving Computer Performance 1963-1967, by Kenneth Knight, are the references to cite when discussing the performance of early computers. I suspect that very few people have read the two papers they are citing (citing without reading is a surprisingly common practice). Both papers were published in Datamation, a computer magazine whose technical content could rival that of the ACM journals in the 1960s, but which later became more of a trade magazine. Until the articles appeared on bitsavers.org they were only really available through national or major regional libraries.

Both papers contain lots of interesting performance and cost data on computers going back to the 1940s. However, I was not interested enough to type in all that data. This week I found high quality OCRed copies of the papers on the Internet Archive; my effort was reduced to fixing typos, which felt like less work.

So let’s try to reproduce Knight’s analysis of the data (code and data). Working in the mid-1960s I imagine Knight did everything manually, with the help of mechanical calculators. I have the advantage of fancy software, a very fast computer and techniques that were invented after Knight did his analysis (e.g., generalized linear models).

Each paper contains its own dataset: the first contains performance+cost data on 225 computers available between 1944 and 1963, while the second contains this information on 63 computers available between 1963 and 1967.

The dataset lists the computer name, the date it was introduced, number of operations per second and the number of seconds that can be rented for a dollar (most computer time used to be rented, then 25 years later personal computers came along and people got to own one, now 25 years after that Cloud is causing a switch back to rental per second).

How are operations measured? The MIPS unit of measurement did not start to be generally used until the 1980s. Knight used 30 or so system characteristics, such as time to perform various arithmetic operations and I/O time, plus characteristics of scientific and commercial applications to calculate a value considered to be a representative scientific or commercial operation.

There is no mention of how seconds-per-dollar values were obtained. Did Knight ask customers or vendors? In a rental market I imagine vendor pricing could be very flexible.

In the 1970s people started talking about Moore’s law, but in the 1960s there was Grosch’s law: Computer performance increases as the square of the cost, i.e., faster computers were cheaper to rent, for a given number of operations. Knight set out to empirically check Grosch’s law, i.e., he was looking for a quadratic fit.

Fitting a regression model to the 1950-1961 data, Knight obtained an exponent of 2.18, while I obtained 2.38 for commercial operations (using a slightly more sophisticated model, because I could); time on faster computers was cheaper than Grosch claimed. For scientific operations Knight obtained 1.92, while I obtained 3.56; despite trying all sorts of jiggery-pokery I could not get a lower value. Unless Knight used very different values to the ones published in the ‘scientific’ columns, one of us has made a big mistake (please let me know if my code is wrong).
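For anyone wanting to check the exponents, the fit boils down to a straight line on log-log axes. The following is a minimal Python sketch, not the code used to obtain the numbers above: it assumes a plain power law, performance = k*cost^b, fitted by ordinary least squares. The function name `fit_power_law` and the data points are made up for illustration; they are not values from Knight’s tables.

```python
import math

def fit_power_law(costs, performances):
    """Least-squares fit of log(performance) = log(k) + b*log(cost).

    Returns (k, b), where b is the exponent Grosch's law claims to be 2.
    """
    xs = [math.log(c) for c in costs]
    ys = [math.log(p) for p in performances]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx                      # slope on log-log axes = exponent
    k = math.exp(mean_y - b * mean_x)  # intercept, transformed back
    return k, b

# Synthetic, made-up points that follow performance = 3 * cost**2 exactly;
# these are NOT values from Knight's data.
synthetic_costs = [0.5, 1.0, 2.0, 4.0, 8.0]
synthetic_perf = [3.0 * c ** 2 for c in synthetic_costs]

k, exponent = fit_power_law(synthetic_costs, synthetic_perf)
print(f"k = {k:.3f}, exponent = {exponent:.3f}")
```

With real data the cost would be dollars per second (the reciprocal of the seconds-per-dollar column), and a more sophisticated model, e.g., one that includes the year of introduction, would give somewhat different exponents.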

Fitting a regression model to the 1963-1967 data, I get exponents (around 2.85 and 2.94) that are roughly in agreement with Knight (2.5 and 3.1). Grosch’s law has broken down by 1963 (if it ever held for scientific operations).

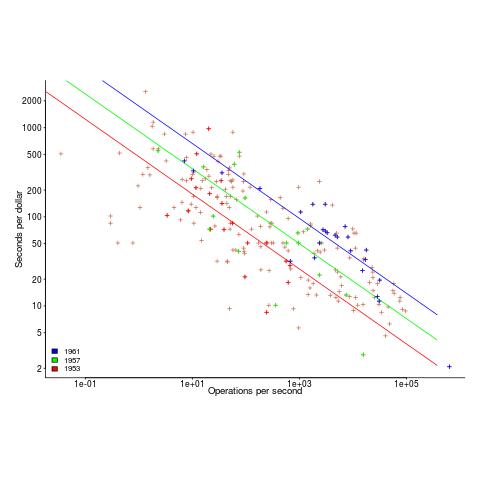

The plot shows operations per second against operations per dollar for the 1953-1961 data, with fitted lines for some specific years. It shows that while customers get fewer seconds per dollar on faster computers, the number of operations performed in those seconds is raised to the power of two+.

What other information can be extracted from the data? The 1953-1961 data shows seconds per dollar increased, over the whole performance range, by a factor of 1.15 per year, i.e., 15%, for both scientific and commercial; the 1963-1967 year on year increase jumps around a lot.
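To get a feel for what a factor of 1.15 per year means, here is a small Python sketch of the compounding arithmetic. It assumes the rate is constant over the whole 1953-1961 period, which is a simplification; the function names are mine.

```python
import math

def compound_growth(factor_per_year, years):
    """Total multiplicative change after compounding for the given years."""
    return factor_per_year ** years

def doubling_time(factor_per_year):
    """Years needed for the quantity to double at a constant yearly factor."""
    return math.log(2) / math.log(factor_per_year)

years = 1961 - 1953                    # eight yearly steps
total = compound_growth(1.15, years)   # roughly a threefold increase
double = doubling_time(1.15)           # a little under five years

print(f"seconds per dollar multiplied by {total:.2f} over {years} years")
print(f"doubling time: {double:.1f} years")
```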

Using identifier prefixes results in more developer errors

Human speech communication has to be processed in real time using a CPU with a very low clock rate (i.e., the human brain, whose neurons fire at rates between 10 and 100 Hz). Biological evolution has mitigated the clock rate problem by producing a brain with parallel processing capabilities, and cultural evolution has chipped in by organizing the information content of languages to take account of the brain’s strengths and weaknesses. Words provide a good example of the way information content can be structured to be handled by a very slow processor/memory system, e.g., 85% of English words start with a strong syllable (for more details search for initial in this detailed analysis of human word processing).

Given that the start of a word plays an important role as an information retrieval key, we would expect the code reading performance of software developers to be affected by whether the identifiers they see all start with the same letter sequence or all start with different letter sequences. For instance, developers would be expected to make fewer errors or work quicker when reading the visually contiguous sequence consoleStr, startStr, memoryStr and lineStr, compared to say strConsole, strStart, strMemory and strLine.
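The difference between the two naming styles can be made concrete by counting how many leading characters a set of identifiers has in common. This is a minimal Python sketch (the helper names are mine, and shared leading characters are only a crude proxy for what readers actually do); it uses the standard library’s `os.path.commonprefix`, which works character by character on any list of strings.

```python
import os

def shared_prefix_len(identifiers):
    """Number of leading characters common to every identifier."""
    return len(os.path.commonprefix(list(identifiers)))

def shared_suffix_len(identifiers):
    """Number of trailing characters common to every identifier."""
    reversed_ids = [ident[::-1] for ident in identifiers]
    return len(os.path.commonprefix(reversed_ids))

suffix_style = ["consoleStr", "startStr", "memoryStr", "lineStr"]
prefix_style = ["strConsole", "strStart", "strMemory", "strLine"]

for name, ids in (("suffix style", suffix_style), ("prefix style", prefix_style)):
    print(name, "shared prefix:", shared_prefix_len(ids),
          "shared suffix:", shared_suffix_len(ids))
```

In the suffix style the first character already distinguishes every identifier, while in the prefix style a reader has to get past three shared characters before reaching any distinguishing information.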

An experiment I ran at the 2011 ACCU conference provided the first empirical evidence of the letter prefix effect that I am aware of. Subjects were asked to remember a list of four assignment statements, each having the form id=constant;, perform an unrelated task for a short period of time and then recall information about the previously seen constants (e.g., their value and which variable they were assigned to).

During recall subjects saw a list of five identifiers and one of the questions asked was which identifier was not in the previously seen list? When the list of identifiers started with different letters (e.g., cat, mat, hat, pat and bat) the error rate was 2.6% and when the identifiers all started with the same letter (e.g., pin, pat, pod, peg, and pen) the error rate was 5.9% (the standard deviation was 4.5% and 6.8% respectively, but ANOVA p-value was 0.038). Having identifiers share the same initial letter appears to double the error rate.

This looks like great news; empirical evidence of software developer behavior following the predictions of a model of human speech/reading processing. A similar experiment was run in 2006; this asked subjects to remember a list of three assignment statements and they had to select the ‘not seen’ identifier from a list of four possibilities. An analysis of the results did not find any statistically significant difference in performance for the same/different first letter manipulation.

The 2011/2006 experiments throw up lots of questions, including: does sharing a prefix only make a difference to performance when there are four or more identifiers, how does the error rate change as the number of identifiers increases, how does the error rate change as the number of letters in the identifier changes, would the effect be seen for a list of three identifiers if there was a longer period between seeing the information and having to recall it, would the effect be greater if the shared prefix contained more than one letter?

Don’t expect answers to appear quickly. Experimenting using people as subjects is a slow, labour intensive process and software developers don’t always answer the question that you think they are answering. If anybody is interested in replicating the 2011 experiment the tools needed to generate the question sheets are available for download.

For many years I have strongly recommended that developers don’t prefix a set of identifiers sharing some attribute with a common letter sequence (it’s great to finally have some experimental backup, however small). If it is considered important that an attribute be visible in an identifier’s spelling, put it at the end of the identifier.

See you all at the ACCU conference tomorrow and don’t forget to bring a pen/pencil. I have only printed 40 experiment booklets, first come first served.

Memory capacity and commercial compiler development

When I started out in the compiler business in the 80s many commercial compilers were originally written by one person. A very good person who dedicated himself (I have never heard of a woman doing this) to the job (i.e., minimal social life) could produce a commercially viable product for a non-huge language (e.g., Fortran, Pascal, C, etc and not C++, Ada, etc) in 12-18 months. Companies who decide to develop a compiler in-house tend to use a team of people and take longer because that is how they work, and they don’t want to depend on one person and anyway such a person might not be available to them.

Commercially viable compiler development stayed within the realm of an individual effort until sometime in the early 90s. What put the single individual out of business was the growth in computer memory capacity into the hundreds of megabytes and beyond. Compilers have to be able to run within the limits of developer machines; you won’t sell many compilers that require 100M of memory if most of your customers don’t have machines with 100M+ of memory.

Code optimizers eat memory and this prevented many optimizations that had been known about for years appearing in commercial products. Once the machines used for software development commonly contained 100M+ of memory compiler vendors could start to incorporate these optimizations into their products.

Improvements in processor speed also helped. But developers are usually willing to let the compiler take a long time to optimize the code going into a final build, provided development compiles run at a reasonable speed.

The increase in memory capacity created the opportunity for compilers to improve and when some did improve they made it harder for others to compete. Suddenly an individual had to spend that 12-18 months, plus many more months implementing fancy optimizations; developing a commercially viable compiler outgrew the realm of individual effort.

Some people claim that the open source model was the primary driver in killing off commercial C compiler development. I disagree. My experience of licensing compiler source was that companies preferred a commercial model they were familiar with and reacted strongly against the idea of having to make available any changes they made to the code of an open source program. GCC (and recently LLVM) succeeded because many talented people contributed fancy optimizations and these could be incorporated because developer machines contained lots of memory. If storage had not dramatically increased GCC would probably not be the market leader it is today.

Using evolution to reduce competition

The Microsoft purchase of Skype got me thinking back to my time as an advisor to the Monitoring Trustee appointed by the European Commission in the EU/Microsoft competition court case. The Commission wanted to introduce competition into the Windows Work Group server market and it hoped that by requiring Microsoft to license all of the necessary communication protocols companies would produce products that were plug-compatible with Microsoft products. The major flaw in this plan turned out to be economics; we estimated it would cost around £100 million to implement the protocols, and making a worthwhile profit on this investment looked decidedly problematic.

Microsoft’s approach to publishing protocol specifications went through three stages: 1) doing everything they could not to do it, 2) following the judgment handed down by the court, 3) actively documenting additional protocols and making all the documents publicly available. Yes, as the documentation process progressed Microsoft started to see the benefits of having English prose documentation (previously the documentation was the source code) but I suspect the switch from (2) to (3) was made possible by the economic analysis that implied there would not be any competition in the server market.

Skype have not made their client/server protocols public, will Microsoft do so? I suspect not because there is no benefit for them to do so. Also I’m sure that Microsoft will want to steer clear of antitrust authorities and will not be making Skype an integral part of Windows’ internal functionality.

What progress has been made in reverse engineering the Skype protocols? There is a community of people trying to figure them out but they have not made the progress that enabled Andrew Tridgell to quickly get something useful up and running that could then evolve into a full blown implementation of a Microsoft protocol.

What lessons can Skype product managers learn from the Microsoft experience of having to make their proprietary protocols available to third parties? I don’t think Microsoft intentionally did any of the following:

- Don’t write any English prose documentation; ensure that the source code is the only specification of the protocols. This will make it easier for point 3) to occur,

- proprietary protocols are your friend, even designing ‘better’ alternatives to non-proprietary protocols,

- don’t put too much of a brake on evolution, i.e., allow developers to do what they always want to do which is to make quick fixes to the code and tweak it here and there resulting in a tangle that cannot be simplified. This will significantly drive up third-party costs as they will not be able to create a product handling a useful subset (i.e., they will have to implement everything) and the tangle makes it harder for them to be sure that what they have done is correct.

What might be the short term costs of following this strategy? Very good developers are used to learning by reading code (lack of documentation is a fact of life for many of them). Experience has shown that allowing developers to make quick fixes and tweak code often results in difficult to maintain code (ok, so a small group of developers have to be paid above the market rate to ensure access to their code memory). If developers really do dig themselves into a very large hole it is always possible to completely redesign the protocols and provide a very major upgrade (Skype can always reinvent its own protocols, an option not available to third parties which have to follow slavishly behind; this option has always been open to Microsoft with its protocols, i.e., the courts did not place any restrictions on protocol changes).

Where did the £100 million figure come from? The problem of estimating development cost was approached from various angles. The one I used was to estimate the number of requirements at 50,000 (there are 38,158 MUSTs in the first public release of the documents) of which 1,651 occur in the SMB specification for which there is a 450KLOC implementation (i.e., samba source in 2006), giving an estimate of (50000/1651)*450K -> 13.6 MLOC in the final implementation. At £10 per line we get a bit more than £100 million.
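The arithmetic behind this estimate is easy to reproduce. The Python sketch below uses only the figures quoted above; the variable names are mine, and the scaling assumes that lines of code grow in proportion to the number of requirements, which is the assumption built into the estimate.

```python
# Figures quoted in the text above.
total_requirements = 50_000   # estimated requirements across all protocols
smb_requirements = 1_651      # MUSTs occurring in the SMB specification
smb_loc = 450_000             # lines in the 2006 samba implementation
cost_per_line = 10            # pounds per line of code

# Scale the SMB implementation size by the ratio of requirement counts.
estimated_loc = total_requirements / smb_requirements * smb_loc
estimated_cost = estimated_loc * cost_per_line

print(f"estimated size: {estimated_loc / 1e6:.1f} MLOC")
print(f"estimated cost: £{estimated_cost / 1e6:.0f} million")
```

Using the 38,158 MUSTs actually counted, rather than the rounded-up 50,000, would give a proportionally smaller figure.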

Christmas books for 2009

I thought it would be useful to list the books that gripped me one way or another this year (and maybe last year, since I don’t usually track such things closely); perhaps they will give you some ideas to add to your Christmas present wish list (please make your own suggestions in the Comments). Most of the books were published a few years ago; I maintain piles of books ordered by when I plan to read them and books migrate between piles until eventually read. Looking at the list I don’t seem to have read many good books this year, perhaps I am spending too much time reading blogs.

These books contain plenty of facts backed up by numbers and an analytic approach and are ordered by physical size.

The New Science of Strong Materials by J. E. Gordon. Ideal for train journeys since it is a small book that can be read in small chunks and is not too taxing. Offers lots of insight into those properties of various materials that are needed to build things (‘new’ here means postwar).

Europe at War 1939-1945 by Norman Davies. A fascinating analysis of the war from a numbers perspective. It is hard to escape the conclusion that in the grand scheme of things us plucky Brits made a rather small contribution, although subsequent Hollywood output has suggested otherwise. Also a contender for a train book.

Japanese English language and culture contact by James Stanlaw. If you are into Japanese culture you will love this, otherwise avoid.

Evolutionary Dynamics by Martin A. Nowak. For the more mathematical folk and plenty of thought power needed. Some very powerful general results from simple processes.

Analytic Combinatorics by Philippe Flajolet and Robert Sedgewick. Probably the toughest mathematical book I have kept at (yet to get close to the end) in a few years. If number sequences fascinate you then give it a try (a pdf is available).

Probability and Computing by Michael Mitzenmacher and Eli Upfal. For the more mathematical folk and plenty of thought power needed. Don’t let the density of Theorems put you off, the approach is broad brush. Plenty of interesting results with applications to solving problems using algorithms containing a randomizing component.

Network Algorithmics by George Varghese. A real hackers book. Not so much a book about algorithms used to solve networking problems but a book about making engineering trade-offs and using every ounce of computing functionality to solve problems having severe resource and real-time constraints.

Virtual Machines by James E. Smith and Ravi Nair. Everything you ever wanted to know about virtual machines and more.

Biological Psychology by James W. Kalat. This might be a coffee table book for scientists. Great illustrations, concise explanations, the nuts and bolts of how our bodies run at the protein/DNA level.

Will language choice converge to a few?

Will the number of commonly used programming languages converge to a few that remain commonly used for ever, will there be many relatively common languages in use, or will the (relatively) commonly used languages change over time?

There are plenty of advantages to having one programming language that everybody uses for ever. English+local dialects seems to be heading towards becoming the World’s one native language, but the programming language world seems to be moving in the direction of diversification and perhaps even experiencing changing popularity of those in common use.

What are the forces that drive programming language usage?

Existing code. If a company wants to maintain and update its software products it needs to hire people to use the language they are written in. This is a force that maintains the status quo.

Existing programmer skills. When given the task of writing new software where language usage is not specified, developers are likely to pick a language they already know. In the case of group development the choice is made by group leaders. This is a force that maintains the status quo.

Fashion. Every field has fashions and programming language usage is no exception. Using a particular language can be seen as sexy, leading-edge, innovative, the next big-thing, etc. Given the opportunity some developers will choose to learn and write code in this language.

Desire to learn a new language. Some developers like to learn new things and this includes programming languages. Given the opportunity such developers will sometimes choose to learn and write code in a language they find interesting.

The cost of creating and implementing a new language continues to be within the reach of one individual who is willing to invest the considerable effort required. Hundreds, if not thousands of new languages have been created every year almost since computers were first invented. The only change here over the last 40 years is probably an increase in the number of new languages.

What has changed in the last 15 years is ease of transmission (e.g., a ubiquitous computing platform and the Internet) and the growth of the fashion industry (e.g., book publishers).

Computing is bathed in newness. New products, new chips, new gadgets, new software, new features, new and improved, the latest. What self-respecting developer would want to be caught dead using a language invented before they were born?

Publishers need a continuous stream of new subjects that will drive customers to buy books. What better subject than a hot new programming language?

At the moment we seem to be living through a period of programming language usage divergence. Will this evolutionary trend continue or are we currently in the Cambrian explosion period of software engineering evolution?

What are the forces acting against the use of new languages? At the moment the only significant forces acting against the use of new languages are existing source and existing developer expertise. There are weaker forces, for instance, the worry that in the years to come it will be difficult to find developers to maintain existing software written in what has become an obscure language, but most software has a short lifetime and in many application domains this is not an issue. Whether the fashion for newness will eventually diminish enough to significantly slow the take-up of new languages remains to be seen.
