Archive

Study of developers for the cost of a phase I clinical drug trial

For many years now, I have been telling people that software researchers need to be more ambitious and apply for multi-million pound/dollar grants to run experiments in software engineering. After all, NASA spends a billion or so sending a probe to take some snaps of a planet and astronomers lobby for $100million funding for a new telescope.

What kind of experimental study might be run for a few million pounds (e.g., the cost of a Phase I clinical drug trial)?

Let’s say that each experiment involves a team of professional developers implementing a software system; call this a Project. We want the Project to be long enough to be realistic, say a week.

Different people exhibit different performance characteristics, and the experimental technique used to handle this is to have multiple teams independently implement the same software system. How many teams are needed? Fifteen ought to be enough, but more is better.

Different software systems contain different components that make implementation easier/harder for those involved. To remove single system bias, a variety of software systems need to be used as Projects. Fifteen distinct Projects would be great, but perhaps we can get away with five.

How many developers are on a team? Agile task estimation data shows that most teams are small, i.e., mostly single person, with two- and three-person teams making up almost all the rest.

If we have five teams of one person, five of two people, and five of three people, then there are 15 teams and 30 people.

How many people will be needed over all Projects?

15 teams (30 people) each implementing one Project

5 Projects, which will require 5*30=150 people (5*15=75 teams)

How many person days are likely to be needed?

If a 3-person team takes a week (5 days), a 2-person team will take perhaps 7-8 days. A 1-person team might take 9-10 days.

The 15 teams will consume 5*3*5+5*2*7+5*1*9=190 person days

The 5 Projects will consume 5*190=950 person days

How much is this likely to cost?

The current average daily rate for a contractor in the UK is around £500, giving an expected cost of 950*500=£475,000 to hire the experimental subjects. Venue hire is around £40K (we want members of each team to be co-located).
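The arithmetic above can be checked with a short script. This is a minimal sketch, not part of the original analysis: the constant and function names are illustrative, and it uses the lower end of the elapsed-day estimates (5, 7 and 9 days), as the person-day calculation does.

```python
# Figures taken from the text; all names here are illustrative.
TEAMS_PER_SIZE = 5                        # five teams of each size
DAYS_BY_TEAM_SIZE = {3: 5, 2: 7, 1: 9}    # team size -> elapsed days for one Project
PROJECTS = 5
DAILY_RATE_GBP = 500
VENUE_GBP = 40_000


def people_per_project() -> int:
    """Number of subjects needed for 15 teams to implement one Project."""
    return sum(TEAMS_PER_SIZE * size for size in DAYS_BY_TEAM_SIZE)


def person_days_per_project() -> int:
    """Person days consumed by the 15 teams implementing one Project."""
    return sum(TEAMS_PER_SIZE * size * days
               for size, days in DAYS_BY_TEAM_SIZE.items())


def subject_cost_gbp(projects: int = PROJECTS) -> int:
    """Cost of hiring subjects when every Project uses a fresh set of teams."""
    return projects * person_days_per_project() * DAILY_RATE_GBP


print(people_per_project(), "people per Project")
print(person_days_per_project(), "person days per Project")
print(subject_cost_gbp(), "GBP for subjects,",
      subject_cost_gbp() + VENUE_GBP, "GBP including venue")
```

Changing `DAYS_BY_TEAM_SIZE` to the upper estimates (5, 8 and 10 days) shows how sensitive the total is to the assumed team productivity.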

The above analysis involves subjects implementing one Project. If, say, each subject implements two, three or four Projects, one after the other, the cost is around £2million, i.e., the cost of a Phase I clinical drug trial.

What might we learn from having subjects implement multiple Projects?

Team performance depends on the knowledge and skill of its members, and their ability to work together. Data from these experiments would be the first of their kind, and would provide realistic guidance on performance factors such as: impact of team size; impact of practice; impact of prior experience working together; impact of existing Project experience. The multiple implementations of the same Project created provide a foundation for measuring expected reliability and theories of N-version programming.

A team of 1 developer will take longer to implement a Project than a team of 2, who will take longer than a team of 3.

If 20 working days is taken as the ballpark period over which a group of subjects are hired (i.e., a month), there are six team size sequences that one subject could work (A to F below); where individual elapsed time is close to 20 days (team size 1 is 10 days elapsed, team size 2 is 7.5 days, team size 3 is 5 days).

| Team size | A | B | C | D | E | F |
|---|---|---|---|---|---|---|
| 1 | twice | once | once | | | |
| 2 | | once | | thrice | once | |
| 3 | | | twice | | twice | four |

The cost of hiring subjects+venue+equipment+support for such a study is likely to be at least £1,900,000.

If the cost of beta testing, venue hire and research assistants (needed during experimental runs) is included, the cost is close to £2.75 million.

Might it be cheaper and simpler to hire, say, 20-30 staff from a medium-sized development company? I chose a medium-sized company because we would be able to exert some influence over developer selection and keeping the same developers involved. The profit from 20-30 people for a month is not enough to create much influence within a large company, and a small company would not want to dedicate a large percentage of its staff for a solid month.

Beta testing is needed to validate both the specifications for each Project and that it is possible to schedule individuals to work in a sequence of teams over a month (individual variations in performance create a scheduling nightmare).

Growth in FLOPs used to train ML models

AI (a.k.a. machine learning) is a compute intensive activity, with the performance of trained models being dependent on the quantity of compute used to train the model.

Given the ongoing history of continually increasing compute power, what is the maximum compute power that might be available to train ML models in the coming years?

How might the compute resources used to train an ML model be measured?

One obvious answer is to specify the computers used and the number of days they were occupied training the model. The problem with this approach is that the differences between the computers used can be substantial. How is compute power measured in other domains?

Supercomputers are ranked using FLOPS (floating-point operations per second), or GigaFLOPS or PetaFLOPS ($10^{15}$ FLOPS). The Top500 list gives values for $R_{max}$ (based on benchmark performance, i.e., LINPACK) and $R_{peak}$ (what the hardware is theoretically capable of, which is sometimes more than twice $R_{max}$).

A ballpark approach to measuring the FLOPs consumed by an application is to estimate the FLOPS consumed by the computers involved and multiply by the number of seconds each computer was involved in training. The huge assumption made with this calculation is that the application actually consumes all the FLOPS that the hardware is capable of supplying. In some cases this appears to be the metric used to estimate the compute resources used to train an ML model. Some published papers just list a FLOPs value, while others list the number of GPUs used (e.g., 2,128).

A few papers attempt a more refined approach. For instance, the paper describing the GPT-3 models derives its FLOPs values from quantities such as the number of parameters in each model and number of training tokens used. Presumably, the research group built a calibration model that provided the information needed to estimate FLOPs in this way.

How does one get to be able to use PetaFLOPS of compute to train a model (training the GPT-3 175B model consumed 3,640 PetaFLOP days, or around a few days on a top 8 supercomputer)?
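The two ways of estimating training compute described above can be sketched in a few lines. This is a hedged illustration rather than the method used by any particular paper: the function names are made up, the device figures in the usage example are hypothetical, and the "6 FLOPs per parameter per token" rule of thumb (and the 300 billion training tokens) are assumptions brought in from outside this post.

```python
SECONDS_PER_DAY = 24 * 60 * 60
PETA = 1e15


def ballpark_training_flops(peak_flops_per_device: float, devices: int,
                            seconds: float, utilization: float = 1.0) -> float:
    """FLOPS x seconds; utilization=1.0 is the 'huge assumption' that the
    application consumes everything the hardware can supply."""
    return peak_flops_per_device * devices * seconds * utilization


def petaflop_days_to_flops(petaflop_days: float) -> float:
    """Convert PetaFLOP days (a rate sustained for a day) to total FLOPs."""
    return petaflop_days * PETA * SECONDS_PER_DAY


def flops_from_params_and_tokens(params: float, tokens: float) -> float:
    """Rule-of-thumb estimate (assumption): about 6 FLOPs per parameter
    per training token."""
    return 6 * params * tokens


# The GPT-3 175B figure quoted in the text.
gpt3_flops = petaflop_days_to_flops(3_640)

# Parameter/token based estimate; the token count is an assumption.
gpt3_estimate = flops_from_params_and_tokens(175e9, 300e9)

# Hypothetical cluster: 1,000 devices, each with a peak of 1e14 FLOPS, for 10 days.
cluster_flops = ballpark_training_flops(1e14, 1_000, 10 * SECONDS_PER_DAY)

print(f"{gpt3_flops:.3e} {gpt3_estimate:.3e} {cluster_flops:.3e}")
```

The two GPT-3 numbers agree to within one percent, which is consistent with the published value being derived from parameter and token counts rather than from measured hardware usage.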

Pay what it costs. Money buys cloud compute or bespoke supercomputers (which are more cost-effective for large scale tasks, if you have around £100million to spend plus £10million or so for the annual electricity bill). While the amount paid to train a model might have lots of practical value (e.g., can I afford to train such a model), researchers might not be keen to let everybody know how much they spent. For instance, if a research team have a deal with a major cloud provider to soak up any unused capacity, those involved probably have no interest in calculating compute cost.

How has the compute power used to train ML models increased over time? A recent paper includes data on the training of 493 models, of which 129 include estimated FLOPs, and 106 contain date and model parameter data. The data comes from published papers, and there are many thousands of papers that train ML models. The authors used various notability criteria to select papers, and my take on the selection is that it represents the high-end of compute resources used over time (which is what I’m interested in). While they did a great job of extracting data, there is no real analysis (apart from fitting equations).

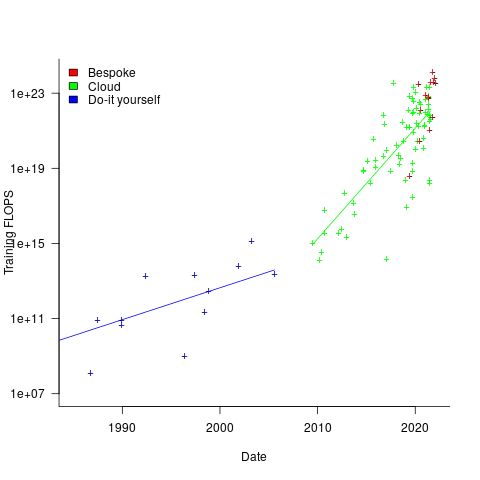

The plot below shows the FLOPs training budget used/claimed/estimated for ML models described in papers published on given dates; lines are fitted regression models, and the colors are explained below (code+data):

My interpretation of the data is based on the economics of accessing compute resources. I see three periods of development:

- do-it-yourself (18 data points): During this period most model builders only had access to a university computer, desktop machines, or a compute cluster they had self-built,

- cloud (74 data points): Huge on demand compute resources are now just a credit card away. Researchers no longer have to wait for congested university computers to become available, or build their own systems.

AWS launched in 2006, and the above plot shows a distinct increase in compute resources around 2008.

- bespoke (14 data points): if the ML training budget is large enough, it becomes cost-effective to build a bespoke system, e.g., a supercomputer. As well as being more cost-effective, a bespoke system can also be specifically designed to handle the characteristics of the kinds of applications run.

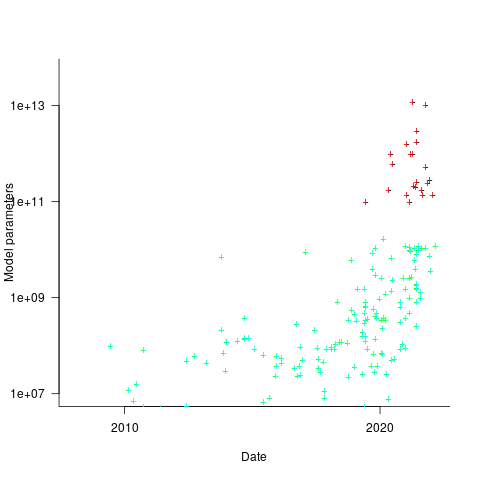

How might models trained using a bespoke system be distinguished from those trained using cloud compute? The plot below shows the number of parameters in each trained model, over time, and there is a distinct gap in the parameter counts, which I assume is the result of bespoke systems having the memory capacity to handle more parameters (code+data):

The rise in FLOPs growth rate during the Cloud period comes from several sources: 1) the exponential decline in the prices charged by providers delivers researchers exponentially increasing compute for the same price, 2) researchers obtaining larger grants to work on what is considered to be an important topic, 3) researchers doing deals with providers to make use of excess capacity.

The rate of growth of Cloud usage is capped by the cost of building a bespoke system. The future growth of Cloud training FLOPs will be constrained by the rate at which the price charged for a FLOP decreases (grants are unlikely to continually increase substantially).

The rate of growth of the Top500 list is probably a good indicator of the rate of growth of bespoke system performance (and this does appear to be slowing down). Perhaps specialist ML training chips will provide performance that exceeds that of the GPU chips currently being used.

The maximum compute that can be used by an application is set by the reliability of the hardware and the percentage of resources used to recover from hard errors that occur during a calculation. Supercomputer users have been facing the possibility of hitting the wall of maximum compute for over a decade. ML training is still a minnow in the supercomputer world, where calculations run for months, rather than a few days.

Cost-effectiveness decision for fixing a known coding mistake

If a mistake is spotted in the source code of a shipping software system, is it more cost-effective to fix the mistake, or to wait for a customer to report a fault whose root cause turns out to be that particular coding mistake?

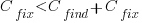

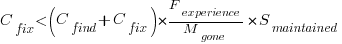

The naive answer is don’t wait for a customer fault report, based on the following simplistic argument: $M_{fix} < M_{find}+M_{fix}$.

where: $M_{fix}$ is the cost of fixing the mistake in the code (including testing etc), and $M_{find}$ is the cost of finding the mistake in the code based on a customer fault report (i.e., the sum on the right is the total cost of fixing a fault reported by a customer).

The mistake may have been spotted in the code for ‘free’, e.g., by a developer reading the code for another reason, or flagged by a static analysis tool.

This answer is naive because it fails to take into account the possibility that the code containing the mistake is deleted/modified before any customers experience a fault caused by the mistake; let $M_{gone}$ be the likelihood that the coding mistake ceases to exist in the next unit of time.

The more often the software is used, the more likely a fault experience based on the coding mistake occurs; let $F_{experience}$ be the likelihood that a fault is reported in the next time unit.

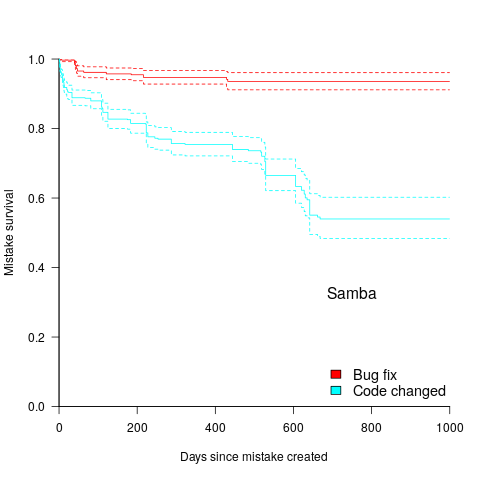

A more realistic analysis takes into account both the likelihood of the coding mistake disappearing and a corresponding fault being reported, modifying the relationship to:

Software systems are eventually retired from service; the likelihood that the software is maintained during the next unit of time, $S_{maintained}$, is slightly less than one.

Giving the relationship:

which simplifies to:

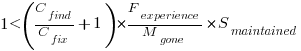

What is the likely range of values for the ratio of find cost to fix cost?

I have no find/fix cost data, although detailed total time is available, i.e., find+fix time (with time probably being a good proxy for cost). My personal experience of find often taking a lot longer than fix probably suffers from survival of memorable cases; I can think of cases where the opposite was true.

The two likelihoods (a fault being reported, and the coding mistake ceasing to exist) are likely to change as a system evolves, e.g., high code turnover during early releases that slows as the system matures. The likelihood of a fault being reported should decrease over time, but increase with a large influx of new users.

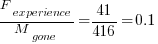

A study by Penta, Cerulo and Aversano investigated the lifetime of coding mistakes (detected by several tools), tracking them over three years from creation to possible removal (either fixed because of a fault report, or simply a change to the code).

Of the 2,388 coding mistakes detected in code developed over 3-years, 41 were removed as reported faults and 416 disappeared through changes to the code:

The plot below shows the survival curve for memory related coding mistakes detected in Samba, based on reported faults (red) and all other changes to the code (blue/green, code+data):

Coding mistakes are obviously being removed much more rapidly due to changes to the source, compared to customer fault reports.
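The relative removal rates can be read directly off the counts quoted above. A minimal sketch (the variable names are illustrative, and the counts are treated as totals over the study period, ignoring when each removal happened):

```python
# Counts quoted from the Penta, Cerulo and Aversano study.
DETECTED = 2_388
REMOVED_VIA_FAULT_REPORT = 41
REMOVED_VIA_OTHER_CHANGES = 416

fault_report_share = REMOVED_VIA_FAULT_REPORT / DETECTED
other_change_share = REMOVED_VIA_OTHER_CHANGES / DETECTED
still_present = DETECTED - REMOVED_VIA_FAULT_REPORT - REMOVED_VIA_OTHER_CHANGES

# How many mistakes disappear through ordinary code changes for each one
# removed because of a reported fault.
changes_per_fault_report = REMOVED_VIA_OTHER_CHANGES / REMOVED_VIA_FAULT_REPORT

print(f"removed via fault reports: {fault_report_share:.1%}")
print(f"removed via other changes: {other_change_share:.1%}")
print(f"still present:             {still_present}")
print(f"other changes per fault report: {changes_per_fault_report:.1f}")
```

Roughly ten mistakes vanished through unrelated code changes for every one removed in response to a fault report, and about four-fifths of the detected mistakes were still present at the end of the period.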

For it to be cost-effective to fix coding mistakes in Samba, flagged by the tools used in this study (the likelihood of the software being maintained is essentially one), requires:

Meeting this requirement does not look that implausible to me, but obviously data is needed.

Moore’s law was a socially constructed project

Moore’s law was a socially constructed project that depended on the coordinated actions of many independent companies and groups of individuals to last for as long as it did.

All products evolve, but what was it about Moore’s law that enabled microelectronics to evolve so much faster and for longer than most other products?

Moore’s observation, made in 1965 based on four data points, was that the number of components contained in a fabricated silicon device doubles every year. The paper didn’t make this claim in words, but a line fitted to four yearly data points (starting in 1962) suggested this behavior continuing into the mid-1970s. The introduction of IBM’s Personal Computer, in 1981 containing Intel’s 8088 processor, led to interested parties coming together to create a hugely profitable ecosystem that depended on the continuance of Moore’s law.

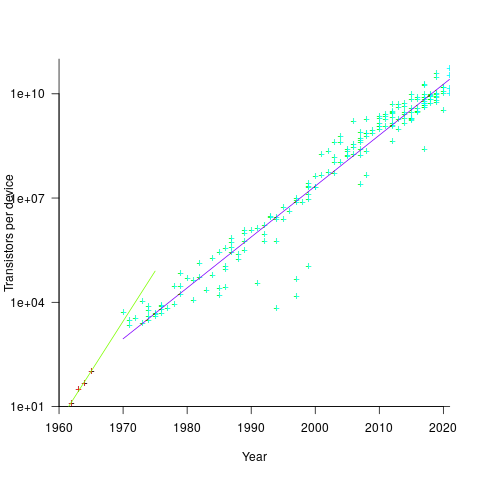

The plot below shows Moore’s four points (red) and fitted regression model (green line). In practice, since 1970, fitting a regression model (purple line) to the number of transistors in various microprocessors (blue/green, data from Wikipedia), finds that the number of transistors doubled every two years (code+data):

In the early days, designing a device was mostly a manual operation; that is, the circuit design and logic design down to the transistor level were hand-drawn. This meant that creating a device containing twice as many transistors required twice as many engineers. At some point the doubling process either becomes uneconomic or it takes forever to get anything done because of the coordination effort.

The problem of needing an exponentially-growing number of engineers was solved by creating electronic design automation tools (EDA), starting in the 1980s, with successive generations of tools handling ever higher levels of abstraction, and human designers focusing on the upper levels.

The use of EDA provides a benefit to manufacturers (who can design differentiated products) and to customers (e.g., products containing more functionality).

If EDA had not solved the problem of exponential growth in engineers, Moore’s law would have maxed-out in the early 1980s, with around 150K transistors per device. However, this would not have stopped the ongoing shrinking of transistors; two economic factors independently incentivize the creation of ever smaller transistors.

When wafer fabrication technology improvements make it possible to double the number of transistors on a silicon wafer, then around twice as many devices can be produced (assuming unchanged number of transistors per device, and other technical details). The wafer fabrication cost is greater (second row in table below), but a lot less than twice as much, so the manufacturing cost per device is much lower (third row in table).

The doubling of transistors primarily provides a manufacturer benefit.

The following table gives estimates for various chip foundry economic factors, in dollars (taken from the report: AI Chips: What They Are and Why They Matter). Node, expressed in nanometers, used to directly correspond to the length of a particular feature created during the fabrication process; these days it does not correspond to the size of any specific feature and is essentially just a name applied to a particular generation of chips.

| Node (nm) | 90 | 65 | 40 | 28 | 20 | 16/12 | 10 | 7 | 5 |
|---|---|---|---|---|---|---|---|---|---|
| Foundry sale price per wafer | 1,650 | 1,937 | 2,274 | 2,891 | 3,677 | 3,984 | 5,992 | 9,346 | 16,988 |
| Foundry sale price per chip | 2,433 | 1,428 | 713 | 453 | 399 | 331 | 274 | 233 | 238 |
| Mass production year | 2004 | 2006 | 2009 | 2011 | 2014 | 2015 | 2017 | 2018 | 2020 |
| Quarter | Q4 | Q4 | Q1 | Q4 | Q3 | Q3 | Q2 | Q3 | Q1 |
| Capital investment per wafer processed per year | 4,649 | 5,456 | 6,404 | 8,144 | 10,356 | 11,220 | 13,169 | 14,267 | 16,746 |
| Capital consumed per wafer processed in 2020 | 411 | 483 | 567 | 721 | 917 | 993 | 1,494 | 2,330 | 4,235 |
| Other costs and markup per wafer | 1,293 | 1,454 | 1,707 | 2,171 | 2,760 | 2,990 | 4,498 | 7,016 | 12,753 |

The second economic factor incentivizing the creation of smaller transistors is Dennard scaling, a rarely heard technical term named after the first author of a 1974 paper showing that transistor power consumption scaled with area (for very small transistors). Halving the area occupied by a transistor halves the power consumed, at the same frequency.

The maximum clock-frequency of a microprocessor is limited by the amount of heat it can dissipate; the heat produced is proportional to the power consumed, which is approximately proportional to the clock-frequency. Instead of a device having smaller transistors consume less power, they could consume the same power at double the frequency.
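The trade-off described in the last two paragraphs can be written as a simple proportionality. This is a sketch in my own notation ($P$ for power consumed, $A$ for transistor area, $f$ for clock frequency), combining the two approximate relationships stated above; it is not a formula taken from Dennard's paper.

```latex
% Power scales with area (Dennard scaling) and, approximately, with frequency.
P \propto A \cdot f

% Halving the area at the same frequency halves the power:
P_{\text{small}} \propto \frac{A}{2} \cdot f = \frac{P}{2}

% Alternatively, spend the saving on speed: same power at double the frequency.
P_{\text{fast}} \propto \frac{A}{2} \cdot 2f = P
```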

Dennard scaling primarily provides a customer benefit.

Figuring out how to further shrink the size of transistors requires an investment in research, followed by designing/(building or purchasing) new equipment. Why would a company that had invested in researching and building its current manufacturing capability be willing to invest in making it obsolete?

The fear of losing market share is a commercial imperative experienced by all leading companies. In the microprocessor market, the first company to halve the size of a transistor would be able to produce twice as many microprocessors (at a lower cost) running twice as fast as the existing products. They could (and did) charge more for the latest, faster product, even though it cost them less than the previous version to manufacture.

Building cheaper, faster products is a means to an end; that end is receiving a decent return on the investment made. How large is the market for new microprocessors and how large an investment is required to build the next generation of products?

Rock’s law says that the cost of a chip fabrication plant doubles every four years (the per wafer price in the table above is increasing at a slower rate). Gambling hundreds of millions of dollars, later billions of dollars, on a next generation fabrication plant has always been a high risk/high reward investment.
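As a rough check on the comparison with Rock's law, the doubling time implied by the foundry sale price per wafer can be estimated from the first and last columns of the table. A minimal sketch, assuming smooth exponential growth between the two end points (the function name is illustrative):

```python
import math


def doubling_time_years(start_value: float, end_value: float, years: float) -> float:
    """Doubling time implied by exponential growth from start_value to end_value."""
    return years * math.log(2) / math.log(end_value / start_value)


# Foundry sale price per wafer, from the table:
# 90nm entered mass production in 2004 Q4, 5nm in 2020 Q1.
years_elapsed = (2020 + 0 / 4) - (2004 + 3 / 4)
wafer_price_doubling = doubling_time_years(1_650, 16_988, years_elapsed)

print(f"wafer price doubles roughly every {wafer_price_doubling:.1f} years")
```

Under these assumptions the wafer price doubles about every four and a half years, a little slower than the four years of Rock's law.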

The sales of microprocessors are dependent on the sale of computers that contain them, and people buy computers to enable them to use software. Microprocessor manufacturers thus have to both convince computer manufacturers to use their chip (without breaking antitrust laws) and convince software companies to create products that run on a particular processor.

The introduction of the IBM PC kick-started the personal computer market, with Wintel (the partnership between Microsoft and Intel) dominating software developer and end-user mindshare of the PC compatible market (in no small part due to the billions these two companies spent on advertising).

An effective technique for increasing the volume of microprocessors sold is to shorten the usable lifetime of the computer potential customers currently own. Customers buy computers to run software, and when new versions of software can only effectively be used in a computer containing more memory or on a new microprocessor which supports functionality not supported by earlier processors, then a new computer is needed. By obsoleting older products soon after newer products become available, companies are able to evolve an existing customer base to one where the new product is looked upon as the norm. Customers are force marched into the future.

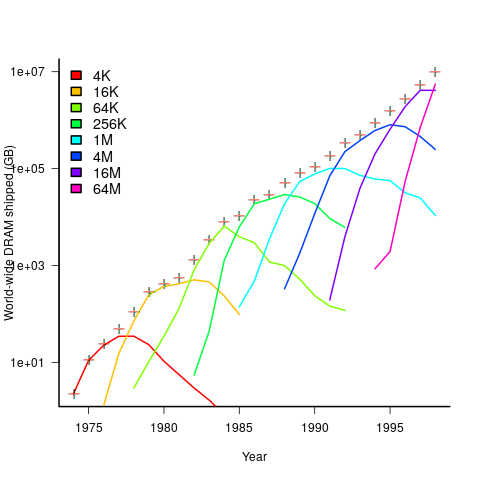

The plot below shows sales volume, in gigabytes, of various sized DRAM chips over time. The simple story of exponential growth in sales volume (plus signs) hides the more complicated story of the rise and fall of succeeding generations of memory chips (code+data):

The Red Queens had a simple task: keep buying the latest products. The activities of the companies supplying the specialist equipment needed to build a chip fabrication plant have to be coordinated, a role filled by the International Technology Roadmap for Semiconductors (ITRS). The annual ITRS reports contain detailed specifications of the expected performance of the subsystems involved in the fabrication process.

Moore’s law is now dead, in that transistor doubling now takes longer than two years. Would transistor doubling time have taken longer than two years, or slowed down earlier, if:

- the ecosystem had not been dominated by two symbiotic companies, or did network effects make it inevitable that there would be two symbiotic companies,

- the Internet had happened at a different time,

- software applications had quickly reached a good enough state,

- cloud computing had gone mainstream much earlier.

Many coding mistakes are not immediately detectable

Earlier this week I was reading a paper discussing one aspect of the legal fallout around the UK Post-Office’s Horizon IT system, and was surprised to read the view that the Law Commission’s Evidence in Criminal Proceedings: Hearsay and Related Topics was citing on the subject of computer evidence (page 204): “most computer error is either immediately detectable or results from error in the data entered into the machine”.

What? Do I need to waste any time explaining why this is nonsense? It’s obvious nonsense to anybody working in software development, but this view is being expressed in law related documents; what do lawyers know about software development?

Sometimes fallacies become accepted as fact, and a lot of effort is required to expunge them from cultural folklore. Regular readers of this blog will have seen some of my posts on long-standing fallacies in software engineering. It’s worth collecting together some primary evidence that most software mistakes are not immediately detectable.

A paper by Professor Tapper of Oxford University is cited as the source (yes, Oxford, home of mathematical orgasms in software engineering). Tapper’s job title is Reader in Law, and on page 248 he does say: “This seems quite extraordinarily lax, given that most computer error is either immediately detectable or results from error in the data entered into the machine.” So this is not a case of his words being misinterpreted or taken out of context.

Detecting many computer errors is resource intensive, in elapsed time, manpower and compute time. The following general summary provides some of the evidence for this assertion.

Two events need to occur for a fault experience to occur when running software:

- a mistake has been made when writing the source code. Mistakes include: a misunderstanding of what the behavior should be, using an algorithm that does not have the desired behavior, or a typo,

- the program processes input values that interact with a coding mistake in a way that produces a fault experience.

That people can make different mistakes is general knowledge. It is my experience that people underestimate the variability of the range of values that are presented as inputs to a program.

A study by Nagel and Skrivan shows how variability of input values results in faults being experienced at different times, and that different people make different coding mistakes. The study had three experienced developers independently implement the same specification. Each of these three implementations was then tested, multiple times. The iteration sequence was: 1) run program until fault experienced, 2) fix fault, 3) if fewer than five faults experienced, goto step (1). This process was repeated 50 times, always starting with the original (uncorrected) implementation; the replications varied this, along with the number of inputs used.

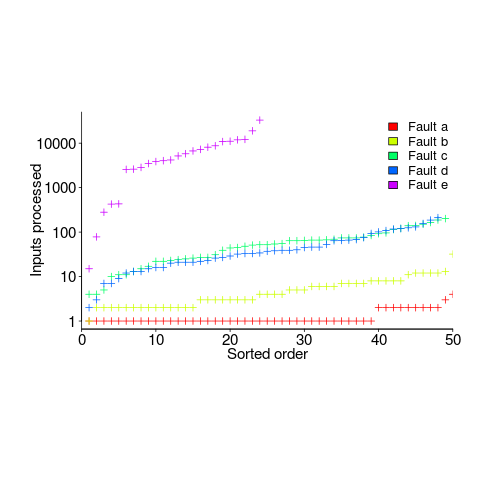

How many input values needed to be processed, on average, before a particular fault is experienced? The plot below (code+data) shows the numbers of inputs processed, by one of the implementations, before individual faults were experienced, over 50 runs (sorted by number of inputs needed before the fault was experienced):

The plot illustrates that some coding mistakes are more likely to produce a fault experience than others (because they are more likely to interact with input values in a way that generates a fault experience), and it also shows how the number of input values processed before a particular fault is experienced varies between coding mistakes.
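The general shape of such results can be illustrated with a toy simulation. This is not the Nagel and Skrivan procedure or data: it assumes each input independently triggers a given coding mistake with a fixed probability, and the three probabilities below are hypothetical values chosen only to show the spread.

```python
import random


def inputs_until_fault(p_fault: float, rng: random.Random) -> int:
    """Number of inputs processed up to and including the one that
    produces a fault experience (p_fault must be greater than zero)."""
    count = 1
    while rng.random() >= p_fault:
        count += 1
    return count


def simulate_runs(p_fault: float, runs: int = 50, seed: int = 1) -> list[int]:
    """Repeat the experiment `runs` times, returning sorted input counts."""
    rng = random.Random(seed)
    return sorted(inputs_until_fault(p_fault, rng) for _ in range(runs))


# Hypothetical per-input fault probabilities for three coding mistakes.
mistakes = {"mistake_1": 0.05, "mistake_2": 0.01, "mistake_3": 0.002}
results = {name: simulate_runs(p) for name, p in mistakes.items()}

for name, counts in results.items():
    print(name, "min", counts[0], "median", counts[len(counts) // 2], "max", counts[-1])
```

Even with a fixed probability per input, the number of inputs needed varies widely between runs, and a mistake that rarely interacts with the input values can remain unobserved for a long time.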

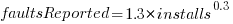

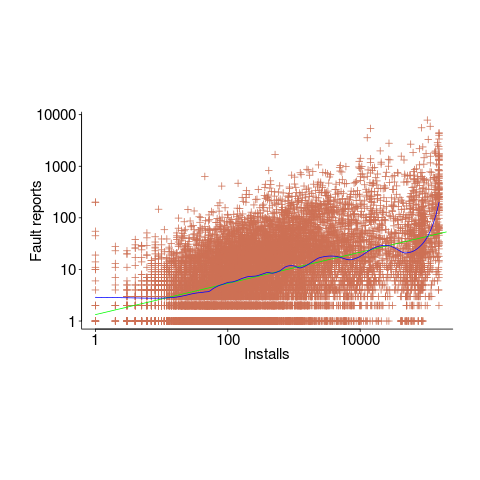

Real-world evidence of the impact of user input on reported faults is provided by the Ultimate Debian Database, which provides information on the number of reported faults and the number of installs for 14,565 packages. The plot below shows how the number of reported faults increases with the number of times a package has been installed; one interpretation is that with more installs there is a wider variety of input values (increasing the likelihood of a fault experience), another is that with more installs there is a larger pool of people available to report a fault experience. The green line is a fitted power law, and the blue line is a fitted loess model.

The source containing a mistake may be executed without a fault being experienced; reasons for this include:

- the input values don’t result in the incorrect code behaving differently from the correct code. For instance, given the made-up incorrect code `if (x < 8)` (i.e., `8` was typed rather than `7`), the comparison only produces behavior that differs from the correct code when `x` has the value `7`,

- the input values result in the incorrect code behaving differently than the correct code, but the subsequent path through the code produces the intended external behavior.
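The made-up comparison example can be checked mechanically. A minimal sketch, assuming integer inputs; the function names and the range of values tested are illustrative:

```python
def intended(x: int) -> bool:
    """The comparison the developer meant to write."""
    return x < 7


def as_written(x: int) -> bool:
    """The comparison containing the typo (8 rather than 7)."""
    return x < 8


# Inputs for which the mistaken code behaves differently from the intended code.
differing_inputs = [x for x in range(-100, 101) if intended(x) != as_written(x)]

print(differing_inputs)  # prints [7]
```

Of the 201 values tried, only one exposes the mistake, so a test suite (or a customer) that never supplies `7` will never see a difference in behavior.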

Some of the studies that have investigated the program behavior after a mistake has deliberately been introduced include:

- checking the later behavior of a program after modifying the value of a variable in various parts of the source; the results found that some parts of a program were more susceptible to behavioral modification (i.e., runtime behavior changed) than others (i.e., runtime behavior did not change),

- checking whether a program compiles and if its runtime behavior is unchanged after random changes to its source code (in this study, short programs written in 10 different languages were used),

- 80% of radiation induced bit-flips have been found to have no externally detectable effect on program behavior.

What are the economic costs and benefits of finding and fixing coding mistakes before shipping vs. waiting to fix just those faults reported by customers?

Checking that a software system exhibits the intended behavior takes time and money, and the organization involved may not be receiving any benefit from its investment until the system starts being used.

In some applications the cost of a fault experience is very high (e.g., lowering the landing gear on a commercial aircraft), and it is cost-effective to make a large investment in gaining a high degree of confidence that the software behaves as expected.

In a changing commercial world software systems can become out of date, or superseded by new products. Given the lifetime of a typical system, it is often cost-effective to ship a system expected to contain many coding mistakes, provided the mistakes are unlikely to be executed by typical customer input in a way that produces a fault experience.

Beta testing provides selected customers with an early version of a new release. The benefit to the software vendor is targeted information about remaining coding mistakes that need to be fixed to reduce customer fault experiences, and the benefit to the customer is checking compatibility of their existing work practices with the new release (also, some people enjoy being able to brag about being a beta tester).

- One study found that source containing a coding mistake was less likely to be changed due to fixing the mistake than changed for other reasons (that had the effect of causing the mistake to disappear),

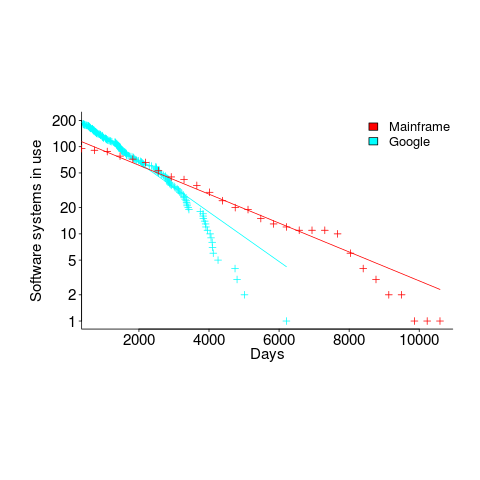

- Software systems don't live forever; systems are replaced or cease being used. The plot below shows the lifetime of 202 Google applications (half-life 2.9 years) and 95 Japanese mainframe applications from the 1990s (half-life 5 years; code+data).

Not only are most coding mistakes not immediately detectable, there may be sound economic reasons for not investing in detecting many of them.

Quality control in a zero cost of replication business

When a new manufacturing material becomes available, its use is often integrated with existing techniques, e.g., using scientific management techniques for software production.

Customers want reliable products, and companies that sell unreliable products don’t make money (and may even lose lots of money).

Quality assurance of manufactured products is a huge subject, and lots of techniques have been developed.

Needless to say, quality assurance techniques applied to the production of hardware are often touted (and sometimes applied) as the solution for improving the quality of software products (whatever quality is currently being defined as).

There is a fundamental difference between the production of hardware and software:

- Hardware is designed, a prototype made and this prototype refined until it is ready to go into production. Hardware production involves duplicating an existing product. The purpose of quality control for hardware production is ensuring that the created copies are close enough to identical to the original that they can be profitably sold. Industrial design has to take into account the practicalities of mass production, e.g., can this device be made at a low enough cost.

- Software involves the same design, prototype, refinement steps, in some form or another. However, the final product can be perfectly replicated at almost zero cost, e.g., downloadable file(s), burn a DVD, etc.

Software production is a once-off process, and applying techniques designed to ensure the consistency of a repetitive process doesn’t sound like a good idea. Software production is not at all like mass production (the build process comes closest to this form of production).

Sometimes people claim that software development does involve repetition, in that a tiny percentage of the possible source code constructs are used most of the time. The same is also true of human communications, in that a few words are used most of the time. Does the frequent use of a small number of words make speaking/writing a repetitive process in the way that manufacturing identical widgets is repetitive?

The virtually zero cost of replication (and distribution, via the internet, for many companies) does more than remove a major phase of the traditional manufacturing process. Zero cost of replication has a huge impact on the economics of quality control (assuming high quality is considered to be equivalent to high reliability, as measured by number of faults experienced by customers). In many markets it is commercially viable to ship software products that are believed to contain many mistakes, because the cost of fixing them is so very low; unlike the cost of hardware, which is non-trivial and involves shipping costs (if only for a replacement).
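The argument can be made concrete with a toy cost comparison. The sketch below is purely illustrative: the function `post_release_cost` and every number in it are hypothetical, chosen only to show how a per-unit replacement cost changes the bill for fixing faults after release.

```python
def post_release_cost(
    faults_experienced: int,
    engineering_cost_per_fix: float,
    units_in_field: int,
    per_unit_update_cost: float,
) -> float:
    """Total cost of fixing faults that customers experience after release.

    Each fix costs some engineering time, plus the cost of getting the
    fix to every unit already in the field.
    """
    cost_per_fix = engineering_cost_per_fix + units_in_field * per_unit_update_cost
    return faults_experienced * cost_per_fix


# Hypothetical figures, for illustration only.
FAULTS = 10           # faults customers actually experience
ENGINEERING = 2_000   # cost of diagnosing and fixing one fault
UNITS = 10_000        # copies/devices in customers' hands

# Software: an update is a download, so the per-unit cost is treated as zero.
software = post_release_cost(FAULTS, ENGINEERING, UNITS, per_unit_update_cost=0.0)

# Hardware: each unit needs a shipped replacement (hypothetical 15 per unit).
hardware = post_release_cost(FAULTS, ENGINEERING, UNITS, per_unit_update_cost=15.0)

if __name__ == "__main__":
    print(f"software: {software:,.0f}")
    print(f"hardware: {hardware:,.0f}")
```

With these made-up numbers the hardware vendor's bill is dominated by the per-unit term, while the software vendor pays only for engineering time. That difference is what makes shipping with known mistakes commercially viable for software.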

Zero defects is not an economically viable mantra for many software companies. When companies employ people to build the same set of items, day in day out, there is economic sense in having them meet together (e.g., quality circles) to discuss saving the company money, by reducing production defects.

Many software products have a short lifespan, source code has a brief and lonely existence, and many development projects are never shipped to paying customers.

In software development companies it makes economic sense for quality circles to discuss the minimum number of known problems they need to fix, before shipping a product.

Altruistic innovation and the study of software economics

Recently, I have been reading rather a lot of papers that are ostensibly about the economics of markets where applications, licensed under an open source license, are readily available. I say ostensibly, because the authors have some very odd ideas about the activities of those involved in the production of open source.

Perhaps I am overly cynical, but I don’t think altruism is the primary motivation for developers writing open source. Yes, there is an altruistic component, but I would list enjoyment as the primary driver; developers enjoy solving problems that involve the production of software. On the commercial side, companies are involved with open source because of naked self-interest, e.g., commoditizing software that complements their products.

It may surprise you to learn that academic papers, written by economists, tend to be knee-deep in differential equations. As a physics/electronics undergraduate I got to spend lots of time studying various differential equations (each relating to some aspect of the workings of the Universe). Since graduating, I have rarely encountered them; that is, until I started reading economics papers (or at least trying to).

Using differential equations to model problems in economics sounds like a good idea, after all they have been used to do a really good job of modeling how the universe works. But the universe is governed by a few simple principles (or at least the bit we have access to is), and there is lots of experimental data about its behavior. Economic issues don’t appear to be governed by a few simple principles, and there is relatively little experimental data available.

Writing down a differential equation is easy; figuring out an analytic solution can be extremely difficult. The Navier-Stokes equations were written down 200 years ago, and we are still awaiting a general solution (solutions for a variety of special cases are known).

To keep their differential equations solvable, economists make lots of simplifying assumptions. Having obtained a solution to their equations, there is little or no evidence to compare it against. I cannot speak for economics in general, but those working on the economics of software are completely disconnected from reality.

What factors, other than altruism, do academic economists think are of major importance in open source? No, not constantly reinventing the wheel-barrow, but constantly innovating. Of course, everybody likes to think they are doing something new, but in practice it has probably been done before. Innovation is part of the business zeitgeist and academic economists are claiming to see it everywhere (and it does exist in their differential equations).

The economics of Linux vs. Microsoft Windows is a common comparison, i.e., open vs. closed source; I have not seen any mention of other open source operating systems. How might an economic analysis of different open source operating systems be framed? How about: “An economic analysis of the relative enjoyment derived from writing an operating system, Linux vs BSD”? Or the joy of writing an editor, which must be lots of fun, given how many text editors are available.

I have added the topics altruism and innovation to my list of indicators of poor quality, used to judge whether it’s worth spending more than 10 seconds reading a paper.

Economics chapter added to “Empirical software engineering using R”

The Economics chapter of my Empirical software engineering book has been added to the draft pdf (download here).

This is a slim chapter, it might grow a bit, but I suspect not by a huge amount. Reasons include lots of interesting data being confidential and me not having spent a lot of time on this topic over the years (so my stash of accumulated data is tiny). Also, a significant chunk of the economics data I have is used to discuss issues in the Ecosystems and Projects chapters, perhaps some of this material will migrate back once these chapters are finalized.

You might argue that Economics is more important than Human cognitive characteristics and should have appeared before it (in chapter order). I would argue that hedonism by those involved in producing software is the important factor that pushes (financial) economics into second place (still waiting for data to argue my case in print).

Some of the cognitive characteristics data I have been waiting for arrived, and has been added to this chapter (some still to be added).

As always, if you know of any interesting software engineering data, please tell me.

I am after a front cover. A woodcut of alchemists concocting a potion appeals, perhaps with various software references discreetly included, or astronomy related (the obvious candidate has already been used). The related modern stuff I have seen does not appeal. Suggestions welcome.

Ecosystems next.

Is the ISO C++ standard’s committee past its sell by date?

The purpose of having a standard is economic. The classic (British) example is screw threads: having a standard set of screw threads means that products from different manufacturers are interchangeable, and competition drives down prices. The US puts more emphasis on standards being an enabler of people interchangeability, i.e., train people once and they can use the acquired skills in multiple companies.

In the early days of computing we had umpteen compilers for Cobol, Fortran and then Pascal and then C and then C++. There was a lot of benefit to be had from getting the vendors signed up to support a single standard for their language (of course they still added bells and whistles to ‘enhance’ their offerings). Language standards meetings were full of vendors, with a few end users (mostly from large corporations and government).

Fast forward to today and the ranks of compiler vendors have thinned significantly. Microfocus dominates Cobol, Fortran is dominated by a few number cruncher oriented companies, Pascal die hards cling on in surprising places, C vendors are still in double figures (down by an order of magnitude from their heyday) and C++ vendors will soon be accurately countable by Trolls (1, 2, 3, many).

What purpose does an ISO language standard serve in a world with only a few compilers? These days the standard is actually set by the huge volume of existing code that has to be handled by any vendor hoping to be adopted by developers.

The ISO C++ committee has become the playground of bored consultants looking for a creative outlet that work is not providing. Is there any red-blooded developer who would not love spending a week, two or three times a year, holed up in a hotel with 100+ similarly minded people poring over newly invented language features?

Does the world need all these new features in C++? Fortunately for the committee there are training companies who like nothing better than being able to offer ‘latest features of C++’ courses to all those developers who have been on previous ‘latest features of C++’ courses. Then there is the media, who just love writing about new stuff, there is even an ‘official’ C++ Standard news outlet.

In the good old days compiler vendors loved updates to the language standard because it gave them an opportunity to sell upgrades to customers; things are a bit different in the open source compiler market. What is the incentive for an open source compiler vendor to support features added by an ISO committee? In the past there has been a community expectation that it will happen, but is the groundswell of opinion enough to warrant spending resources on supporting new language features? Perhaps the GCC and LLVM folk will get together and mutually agree not to waste resources being the first mover.

Would developers at large notice if the C++ committee didn’t do anything for the next 10 years?

The JavaScript (ECMAScript) standard also has a membership that includes many end users. In this case I suspect companies are sending people to make sure that new language features don’t impact large code bases and existing investment in ways of doing things.

Update: I’m not saying that the C++ language and libraries should stop evolving, but questioning the need to have an ISO Standards committee in a world of Open Source and a small number of compilers (a number that is only likely to shrink).
