What is known about software effort estimation in 2024

It’s three years since my 2021 post summarizing what I knew about estimating software tasks. While no major new public datasets have appeared (there have been smaller finds), I have talked to lots of developers/managers about the findings from the 2019/2021 data avalanche, and some data dots have been connected.

A common response from managers, when I outline the patterns found, is some variation of: “That sounds about right.” While it’s great to have this confirmation, it’s disappointing to be telling people what they already know, even if I can put numbers to the patterns.

Some of the developer behavior patterns look, to me, to be actionable, e.g., send developers on a course to unbias their estimates. In practice, managers are worried about upsetting developers or destabilising teams. It’s easy for an unhappy developer to find another job (the speakers at the meetups I attend often end by saying: “and we’re hiring”).

This post summarizes a talk I gave recently on what is known about software estimating; a video will eventually appear on the British Computer Society’s Software Practice Advancement group’s YouTube channel, and the slides are on GitHub.

What I call the historical estimation models contain source code, measured in lines, as a substantial component, e.g., COCOMO, which overfits a minuscule dataset. The problem with this approach is that estimates of the LOC needed to implement some functionality are very inaccurate, and different developers use different amounts of LOC to implement the same functionality.

Most academic research in software effort estimation continues to be based on minuscule datasets; it’s essentially fake research. Who is doing good research in software estimating? One person: Magne Jørgensen.

Almost all the short internal task estimate/actual datasets contain all the following patterns:

- use of round-numbers (known as heaping in some fields). The ratios of the most frequently used round numbers, when estimating time, are close to the ratios of the Fibonacci sequence,

- short tasks tend to be under-estimated and long tasks over-estimated. Surprisingly, the following equation is a good fit for many time-based datasets:

,

- individuals tend to either consistently over-estimate or consistently under-estimate (this appears to be connected with the individual’s risk profile),

- around 30% of estimates are accurate, 68% are within a factor of two, and 95% are within a factor of four (this pattern appears in one function point dataset, one story point dataset, and many time datasets),

- developer estimation accuracy does not change with practice. Possible reasons for this include: variability in the world prevents more accurate estimates, developers choose to spend their learning resources on other topics (such as learning more about the application domain).
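
The pattern of short tasks being under-estimated and long tasks over-estimated is what a power law with an exponent below one would produce. The following is a minimal sketch, assuming a relationship of the form Actual = K·Estimate^b; the function names, the constants K = 1.5 and b = 0.8, and the task durations are all invented for illustration and do not come from any of the datasets discussed here. It fits the exponent by least squares on the logged values, and computes the fraction of estimates within a given factor of the actual.

```python
import math

def fit_power_law(estimates, actuals):
    """Fit log(actual) = log(K) + b*log(estimate) by least squares.

    Returns (K, b). Hypothetical helper, written for illustration only.
    """
    xs = [math.log(e) for e in estimates]
    ys = [math.log(a) for a in actuals]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    K = math.exp(mean_y - b * mean_x)
    return K, b

def fraction_within_factor(estimates, actuals, factor):
    """Fraction of tasks whose actual is within `factor` of the estimate,
    counting both over- and under-estimates."""
    hits = sum(1 for e, a in zip(estimates, actuals)
               if max(a / e, e / a) <= factor)
    return hits / len(estimates)

# Made-up task data in hours (NOT real measurements): actuals are generated
# from an assumed power law with K = 1.5 and b = 0.8.
estimates = [0.5, 1, 2, 4, 8, 16]
actuals = [1.5 * e ** 0.8 for e in estimates]

K, b = fit_power_law(estimates, actuals)

# With b < 1 and K > 1, Actual exceeds Estimate below the crossover point
# (under-estimation) and falls below it above the crossover (over-estimation).
crossover = K ** (1 / (1 - b))

print(f"K = {K:.2f}, b = {b:.2f}, crossover at {crossover:.1f} hours")
print("within factor of 2:", fraction_within_factor(estimates, actuals, 2))
```

On real estimate/actual pairs the fitted values would differ, and the scatter around the fitted line is what drives the within-a-factor-of-two and within-a-factor-of-four percentages.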

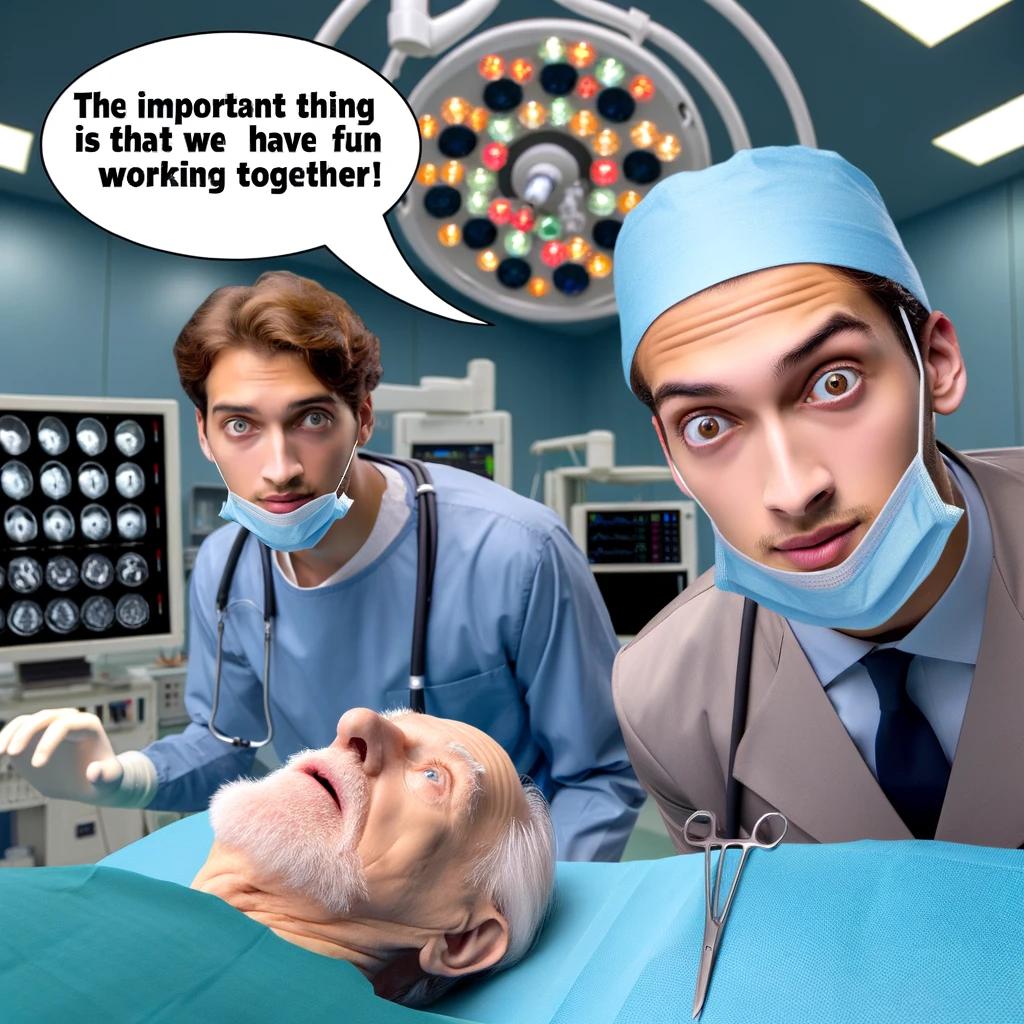

I have a new ChatGPT generated image for my slide covering the #Noestimates movement:

“short tasks tend to be under-estimated and long tasks over-estimate”

Which runs against Vierordt’s law, to quote from Wikipedia:

“short” intervals of time tend to be overestimated, and “long” intervals of time tend to be underestimated

https://en.wikipedia.org/wiki/Vierordt%27s_law

Where do you put the break point between short and long?

Elsewhere I’ve read that Vierordt puts it at under 2 minutes for short and over 10 for long (3-9 is ambiguous). I’m guessing the estimates you are looking at are substantially longer than minutes.

@Allan Kelly

“short” in Vierordt’s law terms is 10 seconds or so, and applies to a past perceived experience, not a future experience. There is a cottage industry of psychology researchers playing subjects videos/sounds lasting some number of seconds, and then asking them questions.

Vierordt’s law may actually be an artifact of his experimental protocol. The paper “150 years of research on Vierordt’s law – Fechner’s fault?” says it’s an artifact, and that looks correct to me, but I have read papers finding a variety of “short” time effects. I have not invested the time to work things through.

The two points in the first paragraph imply that if a short-term effect is real, it does not apply to estimating future tasks lasting hours.