Archive

The changing shape of code in the next decade

I think there are two forces that will have a major impact on the shape of code in the next decade:

- Asian developers. China and India each have a population that is more than twice as large as Europe and the US combined, and software development has been kick-started in these countries by a significant amount of IT outsourcing. I have one comparative data point for software developers who might be of the hacker ilk. A discussion of my C book on a Chinese blog resulted in a download volume that was 50% of the size of the one that occurred when the book appeared as a news item on Slashdot.

- Scripting languages. Software is written to solve a problem and there are only so many packaged applications (COTS or bespoke) that can profitably be supported. Scripting languages are generally designed to operate within one application domain, e.g., Bash, numerical analysis languages such as R and graphical plotting languages such as gnuplot.

While markup languages are very widely used they tend to be read and written by programs not people.

Having to read code containing non-alphabetic characters is always a shock the first time. Simply having to compare two sequences of symbols for equality is hard work. My first experience of having to do this in real time was checking train station names once I had traveled outside central Tokyo and the names were no longer also given in Romaji.

    其中,ul分别是bootmap_size(bit map的size),start_pfn(开始的页框) max_low_pfn(被内核直接映射的最后一个页框的页框号) ;

Developers based in China and India have many different cultural conventions compared to the West (and each other) and I suspect that these will affect the code they write (my favorite potential effect involves treating time vertically rather than horizontally). Many coding conventions used by a given programming language community exist because of the habits adopted by early users of that language, these being passed on to subsequent users. How many Chinese and Indian developers are being taught to use these conventions? Are the influential teachers spreading different conventions? I don’t have a problem with different conventions being adopted, other than that having different communities using different conventions increases the cost for one community to adopt another community’s source.

Programs written in a scripting language tend to be much shorter (often being contained within a single file) and make use of much more application knowledge than programs written in general purpose languages. Their data flow tends to be relatively simple (e.g., some values are read/calculated and passed to a function that has some external effect), while the relative complexity of the control flow seems to depend on the language (I only have a few data points for both assertions).

Because of their specialized nature most scripting languages will not have enough users to support any kind of third party support tool market, e.g., testing tools. Does this mean that programs written in a scripting language will contain proportionally more faults? Perhaps their small size means that only a small number of execution paths are possible and these are quickly exercised by everyday usage (I don’t know of any research on this topic).

Information content of expressions

Software developers read source code to obtain information. How might the information content of source code be quantified?

Both of the following functions assign the same value to x and if that is the only information a reader of that code is interested in, then the information content of both assignment statements could be said to be the same.

    int foo(void)
    {
    x = 5;
    ...
    }

    int bar(void)
    {
    x = 2 + 3;
    ...
    }

A reader seeking deeper understanding of the above code would ask why the value 5 is built from two values in bar. One reason might be that the author of the function wanted to explicitly call out background information about how the value 5 was derived (this is often done using symbolic names, but the use of literals themselves is sometimes encountered). Perhaps the author of foo did not see the need to expose this information or perhaps the shared value is purely coincidental.

If the two representations denote the same quantity doesn’t the second have a greater information content for a reader seeking deeper understanding?

In the following example:

    ... x + y & z ...
    ...
    ... num_red + num_white & lower_bits ...

an experienced developer with a knowledge of English is likely to interpret the expression as adding the number of occurrences of two quantities and using bit-wise AND to extract the lower bits. For some readers the second expression has a higher information content. Would use of the names number_of_red further increase the information content?

In the following example the first expression has not added any information that was not already present in the first expression above (except perhaps that the author was not certain of the precedence or perhaps did not expect subsequent readers to be certain).

    ... ( x + y ) & z ...
    ...
    ... x + ( y & z ) ...

The second expression uses parentheses to achieve an operand/operator binding that is different from the default. Has this changed the information content of the expression?
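The two bindings are easy to check mechanically. The following is a minimal sketch in Python rather than C; Python happens to give binary + a higher precedence than bit-wise &, as C does, and the operand values are arbitrary choices made purely for illustration.

```python
# Arbitrary illustrative values, chosen so that the two bindings differ.
x, y, z = 4, 1, 1

default_binding = x + y & z   # no parentheses: + binds tighter than &
add_first = (x + y) & z       # redundant parentheses, same binding as the default
and_first = x + (y & z)       # parentheses that override the default binding

print(default_binding, add_first, and_first)
```

For these values the unparenthesized expression agrees with the first parenthesized form and disagrees with the second.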

There is experimental evidence that developers extract information from the names of variables to help them make decisions about operator precedence. To me the name all_32_bits_one suggests a sequence of bits and I would expect such a representation to be associated with the bit-wise AND operator, not binary plus. With no knowledge of the relative precedence of the two operators in the following expression the name of the middle operand would cause me to misinterpret the code. Does this change the information content of the expression? Does knowledge of the experimental evidence and the correct operator precedence change the information content (i.e., there is a potential fault in the code because the author may have assumed the incorrect precedence)?

    ... num_red + all_32_bits_one & sign_bit ...

There is experimental evidence that people use the amount of whitespace appearing between operands and their operators to visually highlight operator precedence.

The relative quantities of whitespace used in the following two expressions appear to tell very different stories. Do the two expressions have a different information content?

    ... x + y&z ...
    ...
    ... x+y & z ...

The idea of measuring the information content of source code is very enticing. However, an accurate measure requires knowledge of the kind of information a reader is trying to obtain and of information that already exists in their brain.

Another question is the ease with which information can be extracted from code. This is something that might be labeled as readability, except that readability has connotations of there being an abundant supply of information to extract.

Software maintenance via genetic programming

Genetic algorithms have been used to find solutions to a wide variety of problems, including compiler optimizations. It was only a matter of time before somebody applied these techniques to fixing faults in source code.

When I first skimmed the paper “A Genetic Programming Approach to Automated Software Repair” I was surprised at how successful the genetic algorithm was, using as it did such a relatively small amount of cpu resources. A more careful reading of the paper located one very useful technique for reducing the size of the search space; the automated software repair system started by profiling the code to find out which parts of it were executed by the test cases and only considered statements that were executed by these tests for mutation operations (they give a much higher weighting to statements only executed by the failing test case than to statements executed by the other tests; I am a bit surprised that this weighting difference is worthwhile). I hate to think of the amount of time I have wasted trying to fix a bug by looking at code that was not executed by the test case I was running.
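The coverage-based weighting can be sketched as follows. This is an illustration of the idea only, not the paper's implementation: the function name `mutation_weights` and the two weight values are hypothetical placeholders, and statements are represented by made-up integer ids.

```python
def mutation_weights(failing_cov, passing_cov, only_failing=1.0, shared=0.1):
    """Map each executed statement id to a mutation weight.

    failing_cov: ids of statements executed by the failing test case.
    passing_cov: ids of statements executed by the passing test cases.
    The two weight values are placeholders, not figures from the paper.
    Statements executed by no test case are left out, so they are never
    candidates for mutation.
    """
    weights = {}
    for stmt in failing_cov | passing_cov:
        if stmt in failing_cov and stmt not in passing_cov:
            weights[stmt] = only_failing   # only the failing test reaches it
        else:
            weights[stmt] = shared         # also reached by passing tests
    return weights


# Statement 7 is executed only by the failing test; statement 2 is never executed.
weights = mutation_weights(failing_cov={3, 4, 7}, passing_cov={1, 3, 4})
print(weights)
```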

I learned more about this very interesting system from one of the authors when he gave the keynote at a workshop organized by people associated with a source code analysis group I was a member of.

The search space was further constrained by only performing mutations at the statement level (i.e., expressions and declarations were not touched) and restricting the set of candidate statements for insertion into the code to those statements already contained within the code, such as if (x != NULL) (i.e., new statements were not randomly created and existing statements were not modified in any way). Measurements of existing code show that most uses of a construct are covered by a few simple cases and that most statements are constructed from a small number of commonly used constructs, so it is no surprise that restricting the candidate insertion set to existing code works so well. Of course no fault fix that depends on using a statement not contained within the source will ever be found.
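A statement-level mutation that only reuses existing statements might look like the sketch below. The choice of delete/insert/swap operations, the function name `mutate` and the three-statement program are my assumptions for illustration; the point is that nothing new is ever synthesized.

```python
import random


def mutate(statements, rng):
    """Return a mutated copy of a non-empty list of statement strings.

    Only whole statements are touched, and an inserted statement is always
    a copy of one already present in the program.
    """
    mutant = list(statements)
    operation = rng.choice(["delete", "insert", "swap"])
    position = rng.randrange(len(mutant))
    if operation == "delete":
        del mutant[position]
    elif operation == "insert":
        mutant.insert(position, rng.choice(statements))
    else:
        other = rng.randrange(len(mutant))
        mutant[position], mutant[other] = mutant[other], mutant[position]
    return mutant


# Hypothetical program, one string per statement.
program = ["p = lookup(key);", "if (p != NULL) use(p);", "count++;"]
mutant = mutate(program, random.Random(1))
print(mutant)
```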

There is ongoing work looking at genetic modifications at the expression level. This work shares a problem with GA driven test coverage algorithms: how to find ‘magic numbers’ (in the case of test coverage the magic numbers are those that will cause a controlling expression to be true or false). Literals in source code, like those on the web, tend to follow a power’ish law but the fit to Benford’s law is not good.
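For reference, Benford’s law predicts that a leading digit d occurs with probability log10(1 + 1/d). A rough sketch of how the leading digits of a set of integer literals could be compared against that prediction follows; the function names and the sample literals are invented for the example and are not measurements.

```python
import math
from collections import Counter


def benford_expected(digit):
    """Probability that the leading digit is `digit` under Benford's law."""
    return math.log10(1 + 1 / digit)


def leading_digit_frequencies(literals):
    """Observed leading-digit frequencies of non-zero integer literals."""
    digits = [int(str(abs(n))[0]) for n in literals if n != 0]
    if not digits:
        return {}
    counts = Counter(digits)
    return {d: counts.get(d, 0) / len(digits) for d in range(1, 10)}


# Invented sample, only to show the calling convention.
observed = leading_digit_frequencies([1, 10, 2, 0, 255])
for d in range(1, 10):
    print(d, round(benford_expected(d), 3), observed[d])
```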

Once mutated source has been generated that correctly processes the previously failing test case, and continues to pass the other test cases, the code is passed to the final phase of the automated software repair system. Many mutations have no effect on program behavior (the DNA term intron is sometimes applied to them) and the final phase removes any of the added statements that have no effect on test suite output (Westley Weimer said that a reduction from 50 statements to 10 statements is common).

Might the ideas behind this very interesting research system end up being used in ‘live’ software? I think so. There are systems that operate 24/7 where faults cost money. One can imagine a fault being encountered late at night, a genetic based system fixing the fault which then updates the live system, the human developers being informed and deciding what to do later. It does not take much imagination to see the cost advantages driving expensive human input out of the loop in some cases.

An on-going research topic is the extent to which a good quality test suite is needed to ensure that mutated fault fixes don’t introduce new faults. Human written software is known to often be remarkably tolerant to the presence of faults. Perhaps ensuring that software has this characteristic is something that should be investigated.

Compiler writing: The career path to World domination

Compiler writing is not usually thought of as a career path that leads to becoming Ruler of the World. Perhaps this is because compiler writing is a relatively new profession and we compiler writers are still toiling in obscurity awaiting the new dawn.

What might be a workable plan for a compiler writer to become Ruler of the World? One possibility is to write a compiler for the language in which most of the World’s critical software is written (i.e., C) and for that compiler to become the one that the vendors of this critical software all use (i.e., gcc). This compiler needs to do more than just compile the source code it is fed; it also needs to generate code that creates a backdoor in important programs (e.g., the login program).

But, you say, this cannot happen with gcc because its source is available for everybody to read (and spot any backdoor generator). In his 1984 Turing acceptance lecture Ken Thompson showed how a compiler could contain a backdoor that was not visible in its source. The idea is for the compiler writer to modify a compiler to detect when it is being used to compile itself and to insert the backdoor generating code into its own executable. This modified compiler is then used to compile itself and the resulting executable made the default compiler; the backdoor modifications are then removed from the compiler source, they are no longer needed because the previously compiled compiler will spot when it is being used to compile its own source and generate the code necessary to propagate the backdoor code into the executable it creates.

How would the world counter the appearance of such a modified gcc? Obviously critical programs would need to be recompiled by a version of gcc that did not contain the backdoor. Today there are several companies and many amateur groups that distribute their own Linux distributions which they build from source. It should be relatively easy to obtain a usable executable of gcc from 10 years ago; remember what is needed is a version capable of compiling the latest gcc sources.

The ideal time to create a backdoor’ed version of gcc is while its development is under the control of one person, so early in the development history that all versions available anywhere are very likely to be derived from it. How can we prove that the original author of gcc did not do just this?

It could be argued that the very substantial changes to the gcc sources (most of the source has probably been rewritten several times) mean that the coding patterns searched for by the executable to detect that it is compiling itself have long gone and at some point the backdoor failed to propagate itself to the next executable.

Compilers other than gcc might also include backdoors that propagate themselves. However, the method of propagation is likely to be different. Compiling the gcc sources with a non-gcc compiler creates an executable that should exhibit the same behavior as a gcc-compiled executable. Differences in the behavior of these independently built executables are a cause for concern (one difference might be caused by differences in the conversion of floating-point literals, a recent PhD thesis provides more detail).

The problem with compiling the gcc sources is that they make use of language extensions that few, if any, other compilers support. I know IBM modified one of their C compilers to support those gcc extensions needed to compile the Linux kernel, but I don’t know if this compiler is capable of compiling the gcc sources. The LLVM project intends to support many gcc extensions but I don’t know if they aim to be able to compile the gcc sources.

Another option is to compare the assembler generated when gcc compiles itself against the corresponding source code. A very expensive task for source code measured in hundreds of thousands of lines. Adding the necessary language extension support to another compiler would probably be cheaper and also create a tool that could be used to check future releases of gcc.

Using Coccinelle to match if sequences

I have been using Coccinelle to obtain measurements of various properties of C if and switch statements. It is rare to find a tool that does exactly what is desired but it is often possible to combine various tools to achieve the desired result.

I am interested in measuring sequences of if-else-if statements and one of the things I wanted to know was how many sequences of a given length occurred. Writing a pattern for each possible sequence was the obvious solution, but what is the longest sequence I should search for? A better solution is to use a pattern that matches short sequences and writes out the position (line/column number) where they occur in the code, as in the following Coccinelle pattern:

    @ if_else_if_else @
    expression E_1, E_2;
    statement S_1, S_2, S_3;
    position p_1, p_2;
    @@

    if@p_1 (E_1)
       S_1
    else if@p_2 (E_2)
       S_2
    else
       S_3

    @ script:python @
    expr_1 << if_else_if_else.E_1;
    expr_2 << if_else_if_else.E_2;
    loc_1 << if_else_if_else.p_1;
    loc_2 << if_else_if_else.p_2;
    @@
    print "--- ifelseifelse"
    print loc_1[0].line, " ", loc_1[0].column, " ", expr_1
    print loc_2[0].line, " ", loc_2[0].column, " ", expr_2

noting that in a sequence of source such as:

    if (x == 1)
       stmt_1;
    else if (x == 2)
       stmt_2;
    else if (x == 3)
       stmt_3;

the tokens if (x == 2) will be matched twice, the first time setting the position metavariable p_2 and the second time setting p_1. An awk script was written to read the Coccinelle output and merge together adjacent pairs of matches that were part of a longer if-else-if sequence.
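The merging step is simple enough to sketch. The original used awk; what follows is a Python approximation of the idea, not the script actually used. It assumes each match has already been parsed into a pair of (line, column) positions and that matches arrive in source order; the positions in the example are invented.

```python
def merge_matches(pairs):
    """Merge matched pairs that share a position into longer sequences.

    pairs: (first_position, second_position) tuples in source order, where
    the second position of one match equals the first position of the next
    match when both belong to the same if-else-if sequence.
    """
    sequences = []
    for first, second in pairs:
        if sequences and sequences[-1][-1] == first:
            sequences[-1].append(second)       # continues the previous sequence
        else:
            sequences.append([first, second])  # starts a new sequence
    return sequences


# Invented positions: two overlapping matches followed by an unrelated one.
pairs = [((10, 1), (12, 6)), ((12, 6), (14, 6)), ((40, 3), (43, 8))]
sequences = merge_matches(pairs)
print([len(seq) for seq in sequences])
```

The length of each merged list is the number of if-statements in the sequence, which is the quantity being counted.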

The first pattern did not concern itself with the form of the controlling expression, it simply wrote it out. A second set of patterns was used to match those forms of controlling expression I was interested in, but first I had to convert the output into syntactically correct C so that it could be processed by Coccinelle. Again awk came to the rescue, converting the output:

    --- ifelseifelse
    186 2 op == FFEBLD_opSUBRREF
    191 7 op == FFEBLD_opFUNCREF
    --- ifelseifelse
    1094 3 anynum && commit
    1111 8 ( c [ colon + 1 ] == '*' ) && commit

into a separate function for each matched sequence:

    void f_1(void)
    {
    // --- ifelseifelse
    /* 186 2 */ op == FFEBLD_opSUBRREF ;
    /* 191 7 */ op == FFEBLD_opFUNCREF ;
    }

    void f_2(void)
    {
    // --- ifelseifelse
    /* 1094 3 */ anynum && commit ;
    /* 1111 8 */ ( c [ colon + 1 ] == '*' ) && commit ;
    }
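The conversion above was done with awk. A rough Python equivalent is sketched below as an assumption about how such a conversion could work, not a transcription of the original script. It expects well-formed input in which every record starts with a `---` line, and the name `to_c_functions` is made up.

```python
def to_c_functions(lines):
    """Wrap each '--- ...' record of pattern output in its own C function."""
    output = []
    count = 0
    for line in lines:
        line = line.strip()
        if not line:
            continue
        if line.startswith("---"):
            if count:
                output.append("}")           # close the previous function
            count += 1
            output.append("void f_%d(void)" % count)
            output.append("{")
            output.append("// " + line)
        else:
            # First two fields are line and column, the rest is the expression.
            line_no, column, expr = line.split(None, 2)
            output.append("/* %s %s */ %s ;" % (line_no, column, expr))
    if count:
        output.append("}")
    return "\n".join(output)


sample = [
    "--- ifelseifelse",
    "186 2 op == FFEBLD_opSUBRREF",
    "191 7 op == FFEBLD_opFUNCREF",
    "--- ifelseifelse",
    "1094 3 anynum && commit",
    "1111 8 ( c [ colon + 1 ] == '*' ) && commit",
]
c_source = to_c_functions(sample)
print(c_source)
```

Keeping the original line and column inside a comment means the positions survive the second Coccinelle pass and can still be used for post-processing.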

The Coccinelle pattern:

    @ if_eq_1 @
    expression E_1;
    constant C_1, C_2;
    position p_1, p_2;
    @@

    E_1 == C_1@p_1 ;
    E_1 == C_2@p_2 ;

    @ script:python @
    expr_1 << if_eq_1.E_1;
    const_1 << if_eq_1.C_1;
    const_2 << if_eq_1.C_2;
    loc_1 << if_eq_1.p_1;
    loc_2 << if_eq_1.p_2;
    @@
    print loc_1[0].line, " ", loc_1[0].column, " 3 ", expr_1, " == ", const_1
    print loc_2[0].line, " ", loc_2[0].column, " 2 ", expr_1, " == ", const_2

matches a sequence of two statements which consist of an expression being compared for equality against a constant, with the expression being identical in both statements. Again positions were written out for post-processing, i.e., joining together matched sequences.

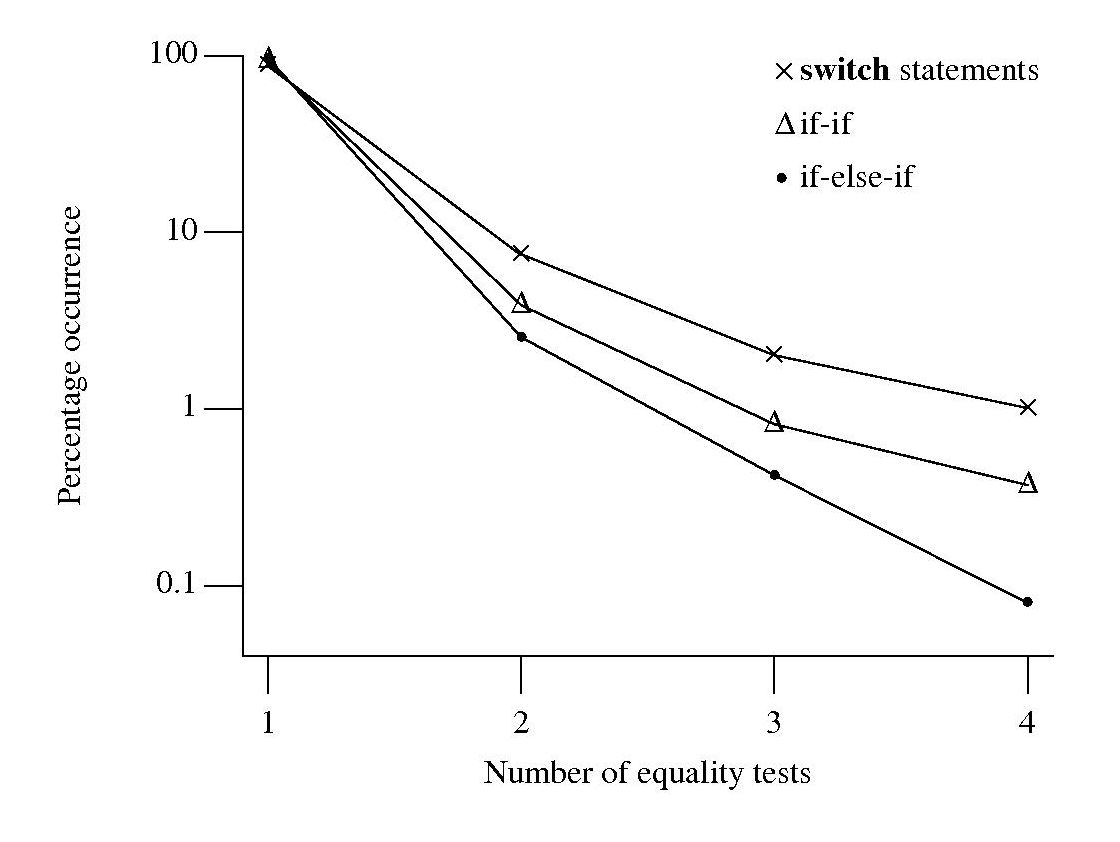

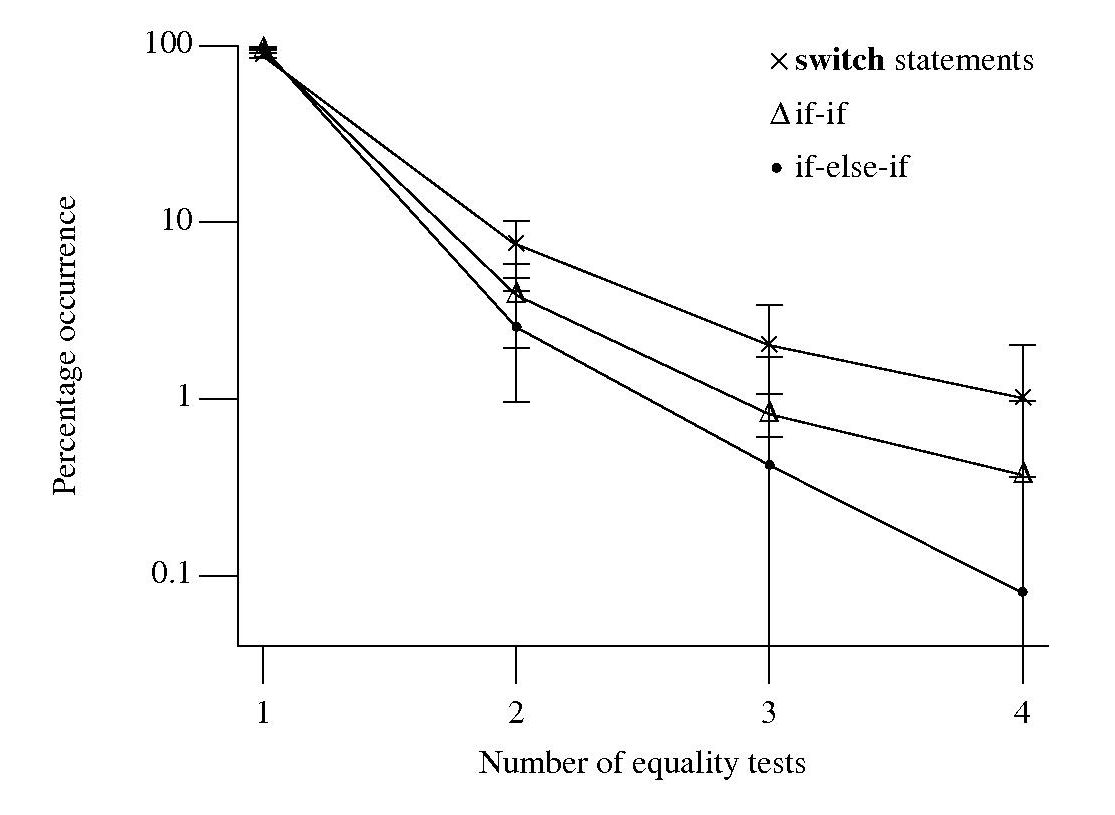

I was interested in any sequence of if-else-if that could be converted to an equivalent switch-statement. An equality test against a constant is just one form of controlling expression that meets this requirement; another is the between operation. Separate patterns could be written and run over the generated C source containing the extracted controlling expressions.

Breaking down the measuring process into smaller steps reduced the amount of time needed to get a final result (with Coccinelle 0.1.19 the first pattern takes around 70 minutes, thanks to Julia Lawall’s work to speed things up, an overhead that only has to occur once) and allows the same controlling expression patterns to be run against the output of both the if-else-if and if-if patterns.

At the end of this process I ended up with a list of information (line numbers in source code and form of controlling expression) on if-statement sequences that could be rewritten as a switch-statement.

To if-else-if or if-if, that is the question

I am currently measuring if-statements, occurring in visible source, that might be mapped to an equivalent switch-statement. The most obvious usage to look for is a sequence of if-else-if statements that all involve the same expression being tested against an integer constant, as in

    if (x == 1)
       stmt_1;
    else if (x == 2)
       stmt_2;
    else if (x == 3)
       stmt_3;

Another possible sequence is:

    if (x == 1)
       stmt_1;
    if (x == 2)
       stmt_2;
    if (x == 3)
       stmt_3;

provided all but the last conditionally executed arms do not change the value of the common control variable (e.g., x).
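The proviso matters because the two forms diverge as soon as an arm assigns to the control variable. Here is a small sketch, written in Python with if/elif standing in for C’s if-else-if; the function names and the assignment inside the first arm are contrived for the illustration.

```python
def if_else_if_form(x):
    """if-else-if: at most one arm executes."""
    executed = []
    if x == 1:
        executed.append("arm 1")
        x = 2                      # the arm modifies the control variable
    elif x == 2:
        executed.append("arm 2")
    return executed


def if_if_form(x):
    """if-if: every condition is evaluated, using the current value of x."""
    executed = []
    if x == 1:
        executed.append("arm 1")
        x = 2                      # the same modification
    if x == 2:
        executed.append("arm 2")   # now also executes when x started as 1
    return executed


print(if_else_if_form(1), if_if_form(1))
```

When no arm modifies x the two functions return the same result for every input, which is the case where either form maps to a switch-statement.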

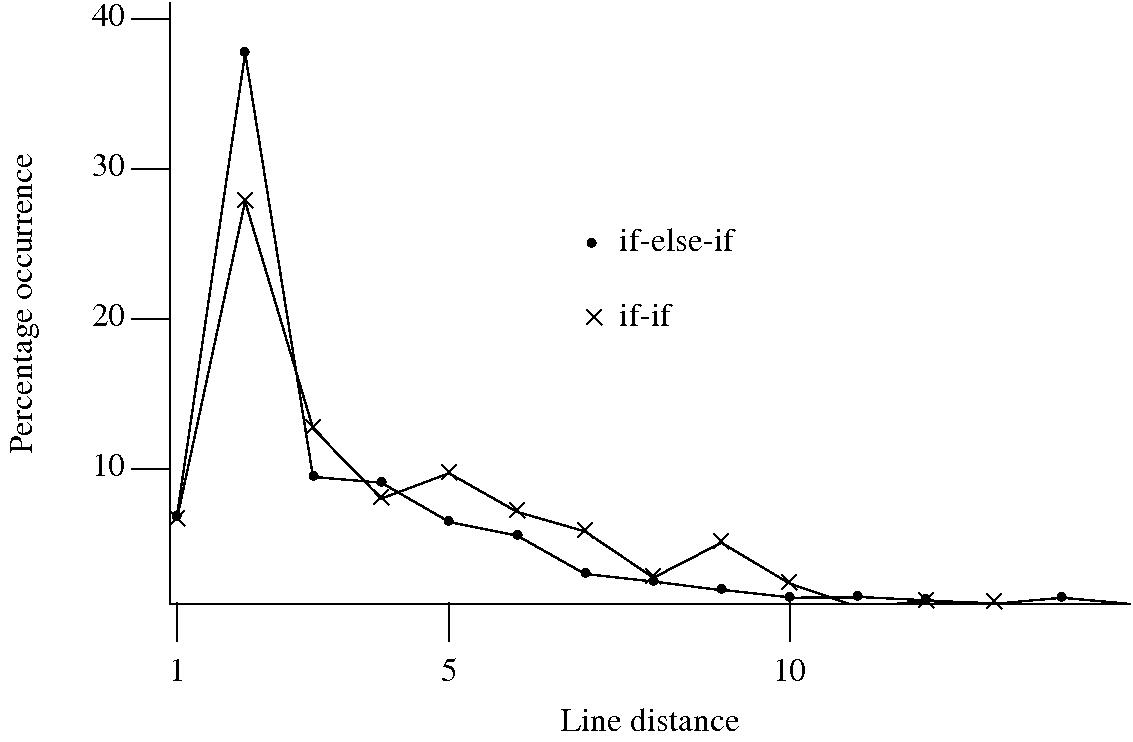

I started to wonder about what would cause a developer to choose one of these forms over the other. Perhaps the if-if form would be used when it was obvious that the common conditional variable was not modified in the conditionally executed arm. This would imply that there would be more statements in the arms of if-else-if sequences than if-if sequences. The following plot of percentage occurrence (over all detected if-else-if/if-if forms) of line number difference between pairs of associated if-statements (e.g., when the controlling expression occurs on line

Just over a quarter of the arms contain a single statement (or to be exact the code is contained on a single line); this suggests that when using the if-else-if form most developers put the else and if on the same line. At the next distance along the percentage of if-else-if forms is twice as great as the if-if, probably because of else and if appearing on separate lines (as in the introductory example) in one case and less frequently a comment/blank line in the other. Next along, why the big increase in if-if forms? A comment + blank line, or perhaps no comment or blank line but the use of curly brackets (this is too off the track of where I am supposed to be going to investigate).

This morning I realized why the original plot did not look right: one of the data sets was way off adding up to 100%. An updated version has been uploaded.

It turns out that a single statement (or at least a single line) is more common in the if-else-if form, the opposite of what I had expected. At slightly larger distances there are still differences that can be attributed to else and if appearing on separate lines, curly brackets and a comment/blank line, but the effect is not as large as seen in the original, less accurate, plot.

I have a feeling that I ought to say something about the if-else-if form being preferred to the if-if form. One of the forms will have its behavior changed if the common control variable is modified in one of its arms. But is this an intended or unintended behavior? What is the typical characteristic usage of a common control variable, e.g., do they tend to be accessed but not modified in a given function definition? At the moment I see no obvious cost or benefit strongly favoring one usage over the other, so I will remain silent on the issue.

Finding the ‘minimum’ faulty program

A few weeks ago I received an inquiry about running a course/workshop on compiler writing. This does not happen very often and it reminded me that many years ago the ACCU asked if I would run a mentored group on compiler writing, but I was busy writing a book at the time. The inquiry got me thinking it would be fun to run a compiler writing mentored group over a 6-9 month period and I emailed the general ACCU reflector asking if anybody was interested in joining such a group (any reader wanting to join the group has to be a member of the ACCU).

Over the weekend I had a brainwave for a project, automatic compiler test generation coupled with a program source code minimizer (I need a better name for this bit). Automatic test generation sounds great in theory but in practice whittling down the source code of those programs that result in a fault being exhibited, to create a usable sized test case that is practical for debugging purposes can be a major effort. What is needed is a tool to automatically do the whittling, i.e., a test case minimizer.

A simple algorithm for whittling down the source of a large test program is to continually throw away that half/third/quarter of the code that is not needed for the fault to manifest itself. A compiler project that took as input source code, removed half/third/quarter of the code and generated output that could be compiled and executed is realistic. The input/reduce/output process could be repeated until the generated source was considered to have reached some minimum. Ok, this will soak up some cpu time, but computers are cheap and people are expensive.
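The throw-away-a-chunk loop can be sketched in a few lines. The version below is a simplification under stated assumptions: the program is treated as a list of independent lines, the hypothetical `still_fails` callback stands in for "compile, run and check that the fault still shows up", and no attempt is made to keep the remaining code syntactically valid (which is exactly the part that needs a compiler front end).

```python
def minimize(lines, still_fails):
    """Shrink `lines` while `still_fails(lines)` keeps returning True.

    Tries removing chunks of half the program, then a quarter, and so on
    down to single lines, keeping every removal that preserves the fault.
    """
    chunk = len(lines) // 2 or 1
    while chunk >= 1:
        start = 0
        while start < len(lines):
            candidate = lines[:start] + lines[start + chunk:]
            if still_fails(candidate):
                lines = candidate      # chunk was not needed, drop it
            else:
                start += chunk         # chunk is needed, move past it
        chunk //= 2
    return lines


# Toy stand-in for a compiler fault that needs both 'c' and 'g' to be present.
program = list("abcdefgh")
reduced = minimize(program, lambda ls: "c" in ls and "g" in ls)
print(reduced)
```

Each call to `still_fails` costs a compile and a run, which is where the cpu time goes.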

Where does the test source code come from? Easy, it is generated from the same yacc grammar that the compiler, written by the mentored group member, uses to parse its input. Fortunately such a generation tool is available and ready to use.

The beauty is using the same grammar to generate tests and parse input. This means there is no need to worry about which language subset to use initially and support for additional language syntax can be added incrementally.
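To give a flavor of grammar-driven generation, here is a toy sketch. It does not use the generation tool mentioned above; the grammar is a made-up fragment covering the kind of initial subset a group might start with, and the convention that the last production of every rule is non-recursive is my own device for forcing termination at a depth limit.

```python
import random

# Hypothetical grammar fragment; the last production of each rule
# is chosen once the depth limit is reached, so it must terminate.
GRAMMAR = {
    "stmt": [["if", "(", "expr", ")", "stmt"], ["id", "=", "expr", ";"]],
    "expr": [["expr", "binop", "expr"], ["id"], ["num"]],
    "binop": [["+"], ["-"], ["&"]],
    "id": [["x"], ["y"]],
    "num": [["0"], ["1"], ["42"]],
}


def generate(symbol, rng, depth=0, max_depth=6):
    """Randomly expand `symbol` into a list of terminal tokens."""
    if symbol not in GRAMMAR:
        return [symbol]                        # terminal token
    productions = GRAMMAR[symbol]
    if depth >= max_depth:
        production = productions[-1]           # force termination
    else:
        production = rng.choice(productions)
    tokens = []
    for part in production:
        tokens.extend(generate(part, rng, depth + 1, max_depth))
    return tokens


tokens = generate("stmt", random.Random(0))
print(" ".join(tokens))
```

Adding a language construct means adding a production to the table, which is the incremental growth described above.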

Experience shows that automatically generated test programs quickly uncover faults in production compilers, even when working with language subsets. Compiler implementors are loath to spend time cutting down a large program to find the statement/expression where the fault lies; this project will produce a tool that does the job for them.

So to recap, the mentored group is going to write one or more automatic source code generators that will be used to stress test compilers written by other people (e.g., gcc and Microsoft). Group members will also write their own compiler that reads in this automatically generated source code, throws some of it away and writes out syntactically/semantically correct source code. Various scripts will be written to glue this all together.

Group members can pick the language they want to work with. The initial subset could just include support for integer types, if-statements and binary operators.

If you had trouble making any sense of all this, don’t join the group.

Using third party measurement data

Until today, to the best of my knowledge, all of the source code analysis papers I have read were written by researchers who had control of the code analysis tools they used and had some form of localised access to the source. By control of the code analysis tools I mean that the researchers specified the tool options and had the ability to check the behavior of the tool, in many cases the source of the tool was available to them and often even written by them, and the localised access may have involved downloading lots of code from the web.

I have just been reading about a broad brush analysis of comment usage based on data provided by a commercial code repository that offers API access to some basic code metrics.

At first I was very frustrated by the lack of depth to the analysis provided in the paper, but then I realised that the authors’ intent was to investigate a few broad ideas about comment usage in a large number of projects (around 10,000). The authors complained in their blog about some of the referees’ comments and having to submit a shorter paper. I can see where the referees are coming from, the papers are lacking in depth of analysis, but they do contain some interesting results.

I was very interested in Figure 2, which plots the comment density of the lines in a source code commit. I would expect the ratio to be higher for small commits because a developer probably has a relatively fixed amount to say about updates involving a smallish number of lines (which probably fixes a problem). Larger commits are probably updated functionality and so would have a comment density similar to the ‘average’.

The problem with relying on third parties to supply the data is that obtaining the answers to follow up questions invariably involves lots of work, e.g., creating an environment to perform the measurements needed for the follow up questions. However the third party approach can significantly reduce the amount of work needed to get to a point where the interestingness of the results can be gauged.

Does the Climategate code produce reliable output?

The source of several rather important commercial programs has been made public recently, or to be more exact programs whose output is important (i.e., the Sequoia voting system and code and data from the Climate Research Unit at University of East Anglia, the so-called ‘Climategate’ leak). While many technical commentators have expressed amazement at how amateurish the programming appears to be, apparently written with little knowledge of good software engineering practices or knowledge of the programming language being used, those who work on commercial projects know that low levels of software engineering/programming competence are the norm.

The emails included in the Climategate leak provide another vivid example, if one were needed, of why scientific data should be made publicly available; scientists are human and are sometimes willing to hide data that does not fit their pet theory or even fails to validate their theory at all.

The Climategate source has only recently become available and existing technical commentary has been derived from embarrassing comments and the usual complaint about not using the right programming language (Fortran is actually a good choice of language for this problem, it is widely used by climatology researchers and a non-professional programmer probably makes best use of their time by using the one language they know tolerably well rather than attempting to use a new language that nobody else in the research group knows).

An important quality indicator of the leaked software was what was not there, test cases (at least I could not find any). How do we know that a program’s output is correct? One way to gain some confidence in a program’s correctness is to process data for which the correct output is known. This blindness to the importance of program level correctness testing is something that I often encounter in people who are subject area experts rather than professional programmers; they believe that if the output has the form they are expecting it must be correct and will sometimes add ‘faults’ to ‘fix’ output that deviates from what they are expecting.

A quick visual scan through the source showed a tale of two worlds, one of single letter identifier names and liberal use of goto, and the other of what looks like meaningful names, structured code and a non-trivial number of comments. The individuals who have contributed to the code base obviously have very different levels of coding ability. Not having written any Fortran in anger for over 15 years my ability to estimate the impact of more subtle coding practices has atrophied.

What kind of faults might a code review look for in these programs? Common coding errors such as using uninitialized variables and incorrect argument passing are obvious choices and there are tools available to check for these kinds of error. A much more insidious kind of error, which requires people with the mathematical expertise to spot, is created by the approximate nature of floating-point arithmetic.
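A tiny illustration of why floating-point results can depend on how a calculation is written, using Python doubles rather than anything taken from the leaked Fortran; the values are contrived to make the effect obvious.

```python
import math

# Decimal fractions are generally not exactly representable in binary.
inexact = (0.1 + 0.2 != 0.3)

# Left-to-right accumulation loses the 1.0, which is too small to register
# next to 1e16; math.fsum tracks the lost low-order bits.
values = [1e16, 1.0, -1e16]
running_total = 0.0
for value in values:
    running_total += value
careful_total = math.fsum(values)

print(inexact, running_total, careful_total)
```

Spotting where this kind of cancellation matters in a real numerical program is the part that needs the mathematical expertise.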

The source is not huge, but not small either, consisting of around 64,000 lines of Fortran and 16,000 lines of IDL (a language designed for interactive data analysis which to my untrained eye looks a lot like MATLAB). There was no obvious support for building the source included within the leaked files (e.g., no makefiles) and my attempt to manually compile using the GNU Fortran compiler failed miserably. So I cannot say anything reliable about the compiler output warnings.

To me the complete lack of test cases implies that the Climategate code does not produce reliable output. Comments in the code such as

    ***** APPLIES A VERY ARTIFICIAL CORRECTION FOR DECLINE*********

suggest that the authors were willing to patch the code to produce output that matched their expectations; this is the mentality of somebody for whom code correctness is not an important issue and if they don’t believe their code is correct then I don’t either.

Source code in itself is rarely that important, although it might have been expensive to create. The really important information in the leaked files is the climate data. Now that this is available others can apply their analysis skills to provide an interpretation of what, if anything statistically reliable, it is telling us.