
LLMs and doing software engineering research

This week I attended the 65th COW workshop; the theme was Automated Program Repair and Genetic Improvement.

I first learned about using genetic programming to automatically fix reported faults at the 1st COW workshop in 2009. Claire Le Goues, a PhD student at that workshop, now a professor, returned to talk about the latest program repair work of her research group.

COW speakers are usually very upbeat, but uncertainty about the future was the general feeling I got from speakers at this workshop. The cause of this uncertainty was the topic of some talks and conversations: LLMs. Adding an LLM into the program repair process can produce a dramatic performance improvement.

Isn’t a dramatic performance improvement and a new technique great news for everyone? The performance improvement increases the likelihood of industrial adoption, and a new technique creates many opportunities for new research.

Despite claiming otherwise, most academics have zero interest in industrial adoption of their work, and some actively disdain practical uses of their work.

Major new techniques are great for PhD students; they provide an opportunity to kick-start a career by being in at the start of a new research area.

A major new technique can obsolete an established researcher’s expensively acquired area of expertise (expensive in personal time and effort). The expertise that enables a researcher to make state-of-the-art contributions to an active research area is a valuable asset; it can be used to attract funding, students and peer esteem. When a new technique dramatically improves the state-of-the-art, there is a sharp drop in the value of what is now yesterday’s know-how.

A major new technique removes some existing barriers to entering a field, and creates its own new ones. The result is that new people start working in a field, and some existing experts stop working in it.

At the workshop, I saw this process starting in automated program repair, and I imagine it’s also starting in many other research fields. It will probably take 3–5 years for the dust to start to settle; existing funded projects have to complete, and academia does not move that quickly.

A recent review of the use of LLMs in software engineering research found 229 papers; the table below shows the number of papers per year:

| Papers | Year |
|--------|------|
| 7 | 2020 |
| 11 | 2021 |
| 51 | 2022 |
| 160 | 2023 (to end of July) |

Assuming, say, 10K software engineering papers per year, LLM-related papers should be around 3% of the total this year, likely a double-figure percentage next year, and possibly over 50% the year after.
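A minimal sketch of the arithmetic behind these percentages. It assumes that papers appear at a constant rate through 2023 (so the July count can be scaled to a full year), and that the 2022 to 2023 growth factor repeats in later years; neither assumption comes from the review, and the names used are illustrative.

```python
# Paper counts from the review; the 2023 figure only covers January to July.
PAPERS_2022 = 51
PAPERS_2023_TO_JULY = 160
TOTAL_PER_YEAR = 10_000   # assumed number of software engineering papers per year

# Assumption: a constant publication rate, so scale 7 months up to 12.
papers_2023 = PAPERS_2023_TO_JULY * 12 / 7

# Assumption: the 2022 -> 2023 growth factor carries over to later years.
growth = papers_2023 / PAPERS_2022

def projected_share(year):
    """Projected percentage of papers that are LLM related in the given year."""
    papers = papers_2023 * growth ** (year - 2023)
    return 100 * papers / TOTAL_PER_YEAR

for year in (2023, 2024, 2025):
    print(f"{year}: {projected_share(year):.1f}%")
```

Under these assumptions, the projected share is just under 3% for 2023, in double figures for 2024, and above 50% for 2025.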

Is research in software engineering en route to becoming another subfield of prompt engineering research?

Computer: Plot the data

Last Saturday I attended my first 24-hour hackathon in over five years (as far as I know, also the first 24-hour hackathon in London since COVID): the GenAI Hackathon.

I had a great idea for the tool to build. Readers will be familiar with the scene in sci-fi films where somebody says “Computer: Plot the data”, and a plot appears on the appropriate screen. I planned to implement this plot-the-data app using LLMs.

The easy option is to use speech to text, using something like OpenAI’s Whisper, as a front-end to a conventional plotting program. The hard option is to also use an LLM to generate the code needed to create the plot; I planned to do it the hard way.

My plan was to structure the internal functionality using langchain tools and agents. langchain can generate Python and execute this code.

I decided to get the plotting working first, and then add support for speech input. With six lines of Python I created a program that works every now and again; here is the code (which assumes that the environment variable OPENAI_API_KEY has been set to a valid OpenAI API key; the function create_csv_agent is provided by langchain):

    from langchain.agents import create_csv_agent
    from langchain.llms import OpenAI
    import pandas

    agent = create_csv_agent(OpenAI(temperature=0.0, verbose=True),
                             "aug-oct_day_items.csv", verbose=True)
    agent.run("Plot the Aug column against Oct column.")

Sometimes this program figures out that it needs to call matplotlib to display the data, sometimes its output is a set of instructions for how this plot functionality could be implemented, sometimes multiple plots appear (with lines connecting points, and/or a scatter plot).

Like me, readers who have scratched the surface of LLMs will have read that setting the argument temperature=0.0 ensures that the output is always the same. In theory this is true, but in practice the implementation of LLMs contains some intrinsic non-determinism.
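To make the role of temperature concrete, here is a minimal sketch of temperature-scaled sampling over a handful of made-up token scores. It is a textbook softmax, not a description of how OpenAI implements its models. The last line illustrates one commonly suggested source of run-to-run variation, floating-point addition depending on evaluation order; treating that as the cause is an assumption, not something established above.

```python
import math

def token_probabilities(logits, temperature):
    """Softmax over logits scaled by 1/temperature; temperature 0 is greedy."""
    if temperature == 0.0:
        # Greedy decoding: all probability goes to the highest scoring token.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    top = max(scaled)                      # subtract the maximum for stability
    weights = [math.exp(x - top) for x in scaled]
    total = sum(weights)
    return [w / total for w in weights]

logits = [2.0, 1.0, 0.5]   # hypothetical scores for three candidate tokens
print(token_probabilities(logits, 1.0))   # probability spread over all tokens
print(token_probabilities(logits, 0.0))   # all probability on the first token

# Floating-point addition is not associative, so summation order matters.
print((0.1 + 0.2) + 0.3 == 0.1 + (0.2 + 0.3))
```

If two candidate tokens have nearly equal scores, a tiny numerical difference of this kind is enough to change which one is the maximum.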

The behavior can be made more consistent by giving explicit instructions (just like dealing with humans). I prefixed the user input with instructions to use matplotlib, to use column names as the axis labels, and to generate a scatter plot; finally, a request to display the plot is appended.

In the following code, the first call to plot_data specifies the ‘two month columns’, and the appropriate columns are selected from the csv file.

    from langchain.agents import create_csv_agent
    from langchain.llms import OpenAI
    import pandas

    def plot_data(file_str, usr_str):
        agent = create_csv_agent(OpenAI(temperature=0.0,
                                        model_name="text-davinci-003",
                                        verbose=True),
                                 file_str, verbose=True)

        plot_txt="Use matplotlib to plot data and" +\
                 " use the column names for axis labels." +\
                 " I want you to create a scatter " +\
                 usr_str + " Display the plot."
        agent.run(plot_txt)

    plot_data("aug-oct_day_items.csv", "plot using the two month columns.")
    plot_data("task-est-act.csv", "plot using the estimates and actuals.")
    plot_data("task-est-act.csv", "plot the estimates and actuals using a logarithmic scale.")

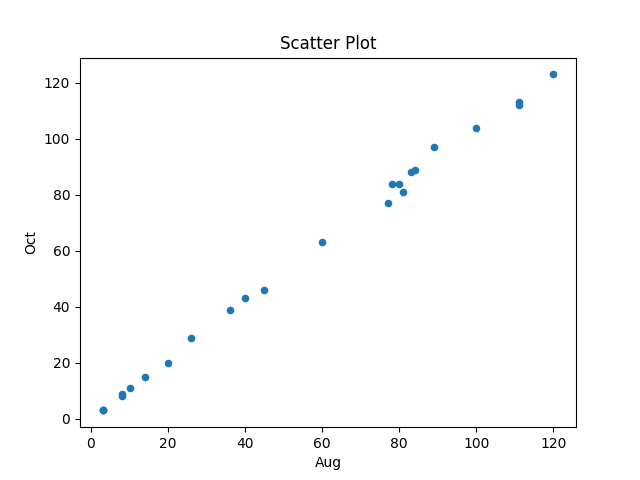

The first call to plot_data worked as expected, producing the following plot (code+data):

The second call failed with an ‘internal’ error. The generated Python has incorrect indentation:

    IndentationError: unexpected indent (<unknown>, line 2)
    I need to make sure I have the correct indentation.

While the langchain agent states what it needs to do to correct the error, it repeats the same mistake several times before giving up.
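This kind of error can be reproduced outside the agent. The following is a minimal sketch, not part of langchain, that checks whether a piece of generated Python parses before any attempt is made to run it; the helper names and the sample strings are hypothetical. Removing a common leading indent with textwrap.dedent repairs uniformly indented code, but not a single stray indent.

```python
import ast
import textwrap

def check_generated_code(src):
    """Return None if src parses as Python, otherwise a short error message."""
    try:
        ast.parse(src)
    except SyntaxError as err:   # IndentationError is a subclass of SyntaxError
        return f"{type(err).__name__}: {err.msg} (line {err.lineno})"
    return None

def strip_common_indent(src):
    """Remove whitespace that is common to the start of every line."""
    return textwrap.dedent(src)

# Hypothetical examples of generated code with indentation problems.
stray_indent = "import math\n  print(math.pi)\n"
uniform_indent = "    import math\n    print(math.pi)\n"

print(check_generated_code(stray_indent))
print(check_generated_code(uniform_indent))
print(check_generated_code(strip_common_indent(uniform_indent)))  # None
```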

Like a well-trained developer, I set about trying different options (e.g., changing the language model) and searching various question/answer sites. No luck.

Finally, I broke with software developer behavior and added the line “Use the same indentation for each python statement.” to the prompt. Prompt engineering behavior is to explicitly tell the LLM what to do, not to fiddle with configuration options.
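A sketch of how the prompt might be assembled once the extra instruction is included. Where the new sentence sits within the prompt is an assumption (here it follows the axis label instruction), and build_prompt is a hypothetical helper, not a langchain function.

```python
# Instructions placed before the user's request.
PREFIX = ("Use matplotlib to plot data and"
          " use the column names for axis labels."
          # Assumed position for the extra instruction.
          " Use the same indentation for each python statement."
          " I want you to create a scatter ")

# Request appended after the user's request.
SUFFIX = " Display the plot."

def build_prompt(usr_str):
    """Wrap the user's request in the explicit instructions."""
    return PREFIX + usr_str + SUFFIX

print(build_prompt("plot using the estimates and actuals."))
```

Keeping the instructions in one place makes it easy to try different wordings, which is what prompt engineering mostly involves.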

So now the second call to plot_data works, and the third call sometimes does odd things.

At the hack I failed to convince anybody else to work on this project with me. So I joined another project and helped out (they were very competent and did not really need my help), while fiddling with the Plot-the-data idea.

The code+test data is in the Plot-the-data GitHub repo. Pull requests welcome.

Is the code reuse problem now solved?

Writing a program to solve a problem involves breaking the problem down into subcomponents that have a known coding solution, and connecting the input/output of these subcomponents into sequences that produce the desired behavior.

When computers first became available, developers had to write every subcomponent. It was soon noticed that new programs contained some functionality that was identical to functionality present in previously written programs, and software libraries were created to reduce development cost/time through reuse of existing code. Developers have been sharing code since the very start of computing.

To be commercially viable, computer manufacturers discovered that they not only had to provide vendor specific libraries, they also had to support general purpose functionality, e.g., sorting and maths libraries.

The Internet significantly reduced the cost of finding and distributing software, enabling an explosion in the quality and quantity of publicly available source code. It became possible to write major subcomponents by gluing together third-party libraries and packages (subject to licensing issues).

Diversity of the ecosystems in which libraries/packages have to function means that developers working in different environments have to apply different glue. Computing diversity increases costs.

A lot of effort was invested in trying to increase software reuse in a very diverse world.

In the 1990s there was a dramatic reduction in diversity, caused by a dramatic reduction in the number of distinct cpus, operating systems and compilers. However, commercial and personal interests continue to drive the creation of new cpus, operating systems, languages and frameworks.

The reduction in diversity has made it cheaper to make libraries/packages more widely available, and reduced the variety of glue coding patterns. However, while glue code contains many common usage patterns, they tend not to be sufficiently substantial or distinct enough for a cost-effective reuse solution to be readily apparent.

The information available on developer question/answer sites, such as Stack Overflow, provides one form of reuse sharing for glue code.

The huge amounts of source code containing shared usage patterns are great training input for large language models, and widespread developer interest in these patterns means that the responses from these trained models are of immediate practical use for many developers.

LLMs appear to be the long sought cost-effective solution to the technical problem of code reuse; it’s too early to say what impact licensing issues will have on widespread adoption.

One consequence of widespread LLM usage is a slowing of the adoption of new packages, because LLMs will not know anything about them. LLMs are also the death knell for fashionable new languages, which is a very good thing.

Modular Reasoning, Knowledge and Language systems

The spectrum of models of the human mind runs from it being a general purpose computer to it being a collection of integrated specialist modules (each performing one function, e.g., speech or language). The Modularity of mind hypothesis offers a halfway house.

ChatGPT sits at the general purpose computer end of the spectrum; there is a single ‘processor’ that accepts a particular kind of input and produces a particular kind of output.

While predict-the-next-token systems like ChatGPT have proven to be good at analysing and constructing sentences, they are often unable to carry out the actions described by these sentences; for instance, they are capable of describing mathematical operations that they are incapable of performing (unless the answer happens to be in their training).

A Modular Reasoning, Knowledge and Language system (MRKL; the suggested pronunciation is miracle) is, as the name suggests, a system built from specialist modules. In this approach, a large language model (LLM), such as ChatGPT, is the language processing module.

In a MRKL system, the input is processed (by an LLM) to figure out which specialist modules have to be queried to obtain the information needed to answer the question, the appropriate text (generated by an LLM) is fed as input to the corresponding modules, and the module outputs are collected and fed to an LLM to generate an answer to the question.

A user question may involve querying multiple modules in some sequence. For instance, the question “What is the average age of the last five British Prime ministers?” might involve querying Google/Alexa answers to obtain a list of previous Prime ministers, followed by extracting individual ages from Wikipedia, followed by querying a maths module to obtain the average of the five ages obtained.
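The flow described above can be sketched as a toy pipeline. Everything here is a stand-in: the plan function plays the role of the LLM that decides which modules to call, the modules return made-up placeholder values rather than real names or ages, and none of the function names belong to an actual library.

```python
from statistics import mean

# Placeholder data; a real system would query a search engine and Wikipedia.
PLACEHOLDER_AGES = {"PM-1": 40, "PM-2": 50, "PM-3": 60, "PM-4": 45, "PM-5": 55}

def list_module(question):
    """Stand-in for a search module returning the last five Prime ministers."""
    return list(PLACEHOLDER_AGES)

def age_module(name):
    """Stand-in for a lookup module returning one person's age."""
    return PLACEHOLDER_AGES[name]

def maths_module(values):
    """Arithmetic is done by ordinary code, not by the language model."""
    return mean(values)

def plan(question):
    """Stand-in for the LLM that selects the modules and their order."""
    return ["list", "age", "average"]

def answer(question):
    data = question
    for step in plan(question):
        if step == "list":
            data = list_module(data)
        elif step == "age":
            data = [age_module(name) for name in data]
        elif step == "average":
            data = maths_module(data)
    # In a MRKL system an LLM would turn the collected output into prose.
    return f"The average age is {data}."

print(answer("What is the average age of the last five British Prime ministers?"))
```

The hard part, which this sketch sidesteps by hard-coding plan, is getting an LLM to reliably produce the sequence of module calls.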

The extent to which an application using an LLM might be said to be a MRKL system is a matter of degree. The following shell script is unlikely to qualify:

    curl https://api.openai.com/v1/completions \
      -H 'Content-Type: application/json' \
      -H "Authorization: Bearer $OPENAI_API_KEY" \
      -d '{
        "model": "text-davinci-003",
        "prompt": "Say I found The Shape of Code to be an interesting blog",
        "temperature": 0
      }'

The OpenAI API focuses on how to drive their various language models, along with lots of examples. There is no API offering a higher level abstraction or functionality.

An API designed for building MRKL systems, and one that is starting to gain traction, is langchain: a collection of Python packages, with JavaScript libraries playing catchup.

langchain module categories include: LLM interaction (e.g., specifying which LLM to use, API keys, and changing default values), document loaders (e.g., readers for pdf, HTML, Gitbook, and Microsoft Word), Agents (these use an LLM to process the input text to find out what actions need to be performed, and to create the input that the selected modules need to perform them), Memory (stores information from previous interactions; other modules can be stateless), and Chat (handles the mechanics of holding a conversation).

What does langchain offer that is making it attractive to a growing number of developers?

- Making use of an LLM within an application will involve some subset of the functionality provided by langchain. The advantage of using langchain is that it provides a framework, MRKL, along with an existing (sometimes skeleton) implementation.

- First-mover advantage for an open source implementation has enabled langchain to attract a growing number of active contributors; it also helps that the core developers have been making regular updates (almost daily), and half-decent documentation is available.

Given the current volume of discussion around LLMs, why has there been so little written about MRKL systems?

Building a MRKL system requires coding ability, and developers are a small percentage of those contributing to the discussion avalanche.

Building a MRKL system takes a lot of time and work. Being able to break down a question into subcomponents that can be answered by the available modules, and sequencing them appropriately, is a non-trivial problem.
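Once a question has been broken down, the sequencing half of the problem can be handled by ordinary dependency ordering. A minimal sketch using the standard library's graphlib (Python 3.9 or later); the step names are hypothetical, and the decomposition itself, which is the hard part, is assumed to have already been done.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps whose output it needs.
depends_on = {
    "list_prime_ministers": set(),
    "look_up_ages": {"list_prime_ministers"},
    "compute_average": {"look_up_ages"},
    "write_answer": {"compute_average"},
}

# static_order() yields every step after all of the steps it depends on.
call_order = list(TopologicalSorter(depends_on).static_order())
print(call_order)
```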

Once Apps solving real-world problems start becoming widely used, and the novelty of generic chat systems wears off, the discussion will switch to more grounded issues.
