
Analyzing mainframe data in light of the IBM antitrust cases

Issues surrounding the decades of decreasing cost/increasing performance of microcomputers, powered by Intel’s x86/Pentium processors, have been extensively analysed. There has been a lot of analysis of pre-Intel computers, in particular mainframe computers. Is there any major difference?

Mainframes certainly got a lot faster throughout the 1960s and 1970s, and the Computer and Automation’s monthly census shows substantial decreases in rental prices (the OCR error rate is not yet low enough for me to be willing to spend time extracting 300 pages of tabular data).

During the 1960s and 1970s the computer market was dominated by IBM, whose market share was over 70% (its nearest rivals, Sperry Rand, Honeywell, Control Data, General Electric, RCA, Burroughs, and NCR, were known as the seven dwarfs).

While some papers analyzing the mainframe market do mention that there was an antitrust case against IBM, most don’t mention it. There are some interesting papers on the evolution of families of IBM products, but how should this analysis be interpreted in light of IBM’s dominant market position?

For me, the answer to how to approach the interpretation of IBM mainframe cost/performance/sales data is provided by the book The U.S. Computer Industry: A Study of Market Power by Gerald Brock.

Brock compares the expected performance of a dominant company in a hypothetical computer industry, where anticompetitive practices do not occur, with IBM’s performance in the real world. There were a variety of mismatches (multiple antitrust actions have found IBM guilty of abusing its dominant market power).

Any abuse of market power by IBM does not impact the analysis of computer related issues about what happened in the 1950s/1960s/1970s, but the possibility of this behavior introduces uncertainty into any analysis of why things happened.

Intel also had its share of antitrust cases, which will be of interest to people analysing the x86/Pentium compatible processor market.

Moore’s law was a socially constructed project

Moore’s law was a socially constructed project that depended on the coordinated actions of many independent companies and groups of individuals to last for as long as it did.

All products evolve, but what was it about Moore’s law that enabled microelectronics to evolve so much faster and for longer than most other products?

Moore’s observation, made in 1965 based on four data points, was that the number of components contained in a fabricated silicon device doubles every year. The paper didn’t make this claim in words, but a line fitted to four yearly data points (starting in 1962) suggested this behavior continuing into the mid-1970s. The introduction of IBM’s Personal Computer, in 1981 containing Intel’s 8088 processor, led to interested parties coming together to create a hugely profitable ecosystem that depended on the continuance of Moore’s law.

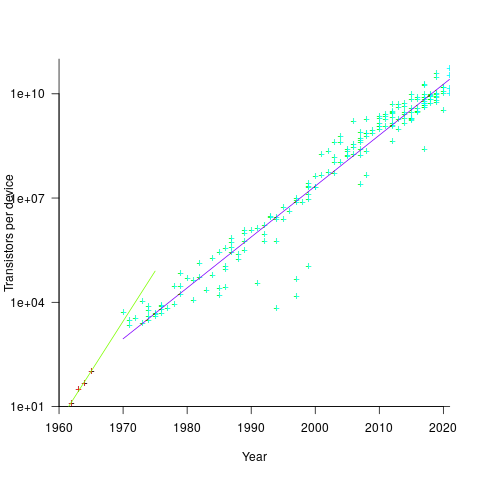

The plot below shows Moore’s four points (red) and fitted regression model (green line). In practice, since 1970, fitting a regression model (purple line) to the number of transistors in various microprocessors (blue/green, data from Wikipedia), finds that the number of transistors doubled every two years (code+data):
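The doubling time can be read off the slope of such a fit. As a sketch (the symbols $N$, $N_0$, $t$, $t_0$, $T$, $a$ and $b$ are my notation, not taken from Moore's paper or the plot's code), exponential growth with doubling time $T$ becomes a straight line on a log scale:

```latex
N(t) = N_0 \, 2^{(t - t_0)/T}
\quad\Longrightarrow\quad
\log_2 N(t) = a + b\,t,
\qquad b = \frac{1}{T},
\quad a = \log_2 N_0 - \frac{t_0}{T}
```

A fitted slope of $b = 1$ per year corresponds to doubling every year (the line through Moore's four points), while $b = 0.5$ per year corresponds to doubling every two years.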

In the early days, designing a device was mostly a manual operation; that is, the circuit design and logic design down to the transistor level were hand-drawn. This meant that creating a device containing twice as many transistors required twice as many engineers. At some point the doubling process either becomes uneconomic or it takes forever to get anything done because of the coordination effort.

The problem of needing an exponentially-growing number of engineers was solved by creating electronic design automation (EDA) tools, starting in the 1980s, with successive generations of tools handling ever higher levels of abstraction, and human designers focusing on the upper levels.

The use of EDA provides a benefit to manufacturers (who can design differentiated products) and to customers (e.g., products containing more functionality).

If EDA had not solved the problem of exponential growth in engineers, Moore’s law would have maxed-out in the early 1980s, with around 150K transistors per device. However, this would not have stopped the ongoing shrinking of transistors; two economic factors independently incentivize the creation of ever smaller transistors.

When wafer fabrication technology improvements make it possible to double the number of transistors on a silicon wafer, then around twice as many devices can be produced (assuming unchanged number of transistors per device, and other technical details). The wafer fabrication cost is greater (second row in table below), but a lot less than twice as much, so the manufacturing cost per device is much lower (third row in table).

The doubling of transistors primarily provides a manufacturer benefit.

The following table gives estimates for various chip foundry economic factors, in dollars (taken from the report: AI Chips: What They Are and Why They Matter). Node, expressed in nanometers, used to directly correspond to the length of a particular feature created during the fabrication process; these days it does not correspond to the size of any specific feature and is essentially just a name applied to a particular generation of chips.

| Node (nm) | 90 | 65 | 40 | 28 | 20 | 16/12 | 10 | 7 | 5 |
|---|---|---|---|---|---|---|---|---|---|
| Foundry sale price per wafer | 1,650 | 1,937 | 2,274 | 2,891 | 3,677 | 3,984 | 5,992 | 9,346 | 16,988 |
| Foundry sale price per chip | 2,433 | 1,428 | 713 | 453 | 399 | 331 | 274 | 233 | 238 |
| Mass production year | 2004 | 2006 | 2009 | 2011 | 2014 | 2015 | 2017 | 2018 | 2020 |
| Quarter | Q4 | Q4 | Q1 | Q4 | Q3 | Q3 | Q2 | Q3 | Q1 |
| Capital investment per wafer processed per year | 4,649 | 5,456 | 6,404 | 8,144 | 10,356 | 11,220 | 13,169 | 14,267 | 16,746 |
| Capital consumed per wafer processed in 2020 | 411 | 483 | 567 | 721 | 917 | 993 | 1,494 | 2,330 | 4,235 |
| Other costs and markup per wafer | 1,293 | 1,454 | 1,707 | 2,171 | 2,760 | 2,990 | 4,498 | 7,016 | 12,753 |
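The opposite movement of the wafer and chip prices can be checked directly from the table. The following is a minimal Python sketch that uses only the figures above; the helper name `ratio_between` is mine, chosen for illustration.

```python
# Figures copied from the table above (US dollars); node labels are in nm.
nodes = ["90", "65", "40", "28", "20", "16/12", "10", "7", "5"]
wafer_price = [1650, 1937, 2274, 2891, 3677, 3984, 5992, 9346, 16988]
chip_price = [2433, 1428, 713, 453, 399, 331, 274, 233, 238]


def ratio_between(values, first=0, last=-1):
    """Ratio of the value at index `last` to the value at index `first`."""
    return values[last] / values[first]


# 5 nm price divided by 90 nm price, for wafers and for chips.
wafer_growth = ratio_between(wafer_price)
chip_shrink = ratio_between(chip_price)

for node, wafer, chip in zip(nodes, wafer_price, chip_price):
    print(f"{node:>5} nm  wafer ${wafer:>6,}  chip ${chip:>5,}")
print(f"wafer price ratio, 5 nm vs 90 nm: {wafer_growth:.1f}")
print(f"chip price ratio, 5 nm vs 90 nm: {chip_shrink:.2f}")
```

Between the 90 nm and 5 nm columns the wafer price rises roughly tenfold while the chip price falls to roughly a tenth, although the table's chip price ticks up slightly from 7 nm to 5 nm.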

The second economic factor incentivizing the creation of smaller transistors is Dennard scaling, a rarely heard technical term named after the first author of a 1974 paper showing that transistor power consumption scaled with area (for very small transistors). Halving the area occupied by a transistor halves the power consumed, at the same frequency.

The maximum clock-frequency of a microprocessor is limited by the amount of heat it can dissipate; the heat produced is proportional to the power consumed, which is approximately proportional to the clock-frequency. Instead of a device having smaller transistors consume less power, they could consume the same power at double the frequency.
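The two statements above can be combined into one simplified relation. As a sketch (the symbols $P$, $A$, $f$ and the constant $k$ are my notation, and the model deliberately ignores everything except transistor area and clock frequency):

```latex
P = k\,A\,f
\qquad\Longrightarrow\qquad
k\,\frac{A}{2}\,f = \frac{P}{2},
\qquad
k\,\frac{A}{2}\,(2f) = P
```

The first result is the lower-power option at an unchanged clock frequency; the second is the same power budget spent on double the clock frequency.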

Dennard scaling primarily provides a customer benefit.

Figuring out how to further shrink the size of transistors requires an investment in research, followed by designing/(building or purchasing) new equipment. Why would a company that had invested in researching and building its current manufacturing capability be willing to invest in making it obsolete?

The fear of losing market share is a commercial imperative experienced by all leading companies. In the microprocessor market, the first company to halve the size of a transistor would be able to produce twice as many microprocessors (at a lower cost) running twice as fast as the existing products. They could (and did) charge more for the latest, faster product, even though it cost them less than the previous version to manufacture.

Building cheaper, faster products is a means to an end; that end is receiving a decent return on the investment made. How large is the market for new microprocessors and how large an investment is required to build the next generation of products?

Rock’s law says that the cost of a chip fabrication plant doubles every four years (the per wafer price in the table above is increasing at a slower rate). Gambling hundreds of millions of dollars, later billions of dollars, on a next generation fabrication plant has always been a high risk/high reward investment.
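The comparison with the per wafer price can be made concrete by fitting a doubling time to the table's wafer prices. This is a minimal sketch, assuming an ordinary least-squares fit of log2(price) against mass production year, with the quarter ignored; the function name `doubling_time` is mine. The wafer sale price is not the cost of a fabrication plant, so this only illustrates the comparison made in parentheses above.

```python
import math

# Mass production year and foundry sale price per wafer (US dollars),
# copied from the table above; the quarter is ignored for simplicity.
year = [2004, 2006, 2009, 2011, 2014, 2015, 2017, 2018, 2020]
wafer_price = [1650, 1937, 2274, 2891, 3677, 3984, 5992, 9346, 16988]


def doubling_time(xs, ys):
    """Least-squares fit of log2(y) = a + b*x; returns 1/b, the doubling time."""
    logs = [math.log2(y) for y in ys]
    mean_x = sum(xs) / len(xs)
    mean_log = sum(logs) / len(logs)
    sxy = sum((x - mean_x) * (lg - mean_log) for x, lg in zip(xs, logs))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    # The slope is b = sxy / sxx, so the doubling time 1/b is sxx / sxy.
    return sxx / sxy


wafer_doubling_years = doubling_time(year, wafer_price)
print(f"wafer price doubling time: {wafer_doubling_years:.1f} years")
```

On these nine data points the fitted doubling time comes out at about five years, longer than the four years of Rock's law.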

The sales of microprocessors are dependent on the sale of computers that contain them, and people buy computers to enable them to use software. Microprocessor manufacturers thus have to both convince computer manufacturers to use their chip (without breaking antitrust laws) and convince software companies to create products that run on a particular processor.

The introduction of the IBM PC kick-started the personal computer market, with Wintel (the partnership between Microsoft and Intel) dominating software developer and end-user mindshare of the PC compatible market (in no small part due to the billions these two companies spent on advertising).

An effective technique for increasing the volume of microprocessors sold is to shorten the usable lifetime of the computer potential customers currently own. Customers buy computers to run software, and when new versions of software can only effectively be used in a computer containing more memory or on a new microprocessor which supports functionality not supported by earlier processors, then a new computer is needed. By obsoleting older products soon after newer products become available, companies are able to evolve an existing customer base to one where the new product is looked upon as the norm. Customers are force marched into the future.

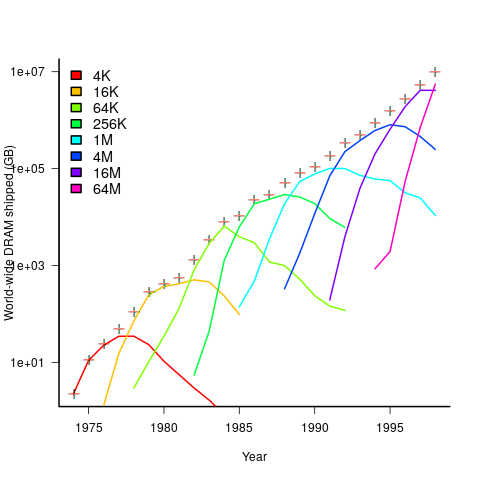

The plot below shows sales volume, in gigabytes, of various sized DRAM chips over time. The simple story of exponential growth in sales volume (plus signs) hides the more complicated story of the rise and fall of succeeding generations of memory chips (code+data):

The Red Queens had a simple task: keep buying the latest products. The activities of the companies supplying the specialist equipment needed to build a chip fabrication plant have to be coordinated, a role filled by the International Technology Roadmap for Semiconductors (ITRS). The annual ITRS reports contain detailed specifications of the expected performance of the subsystems involved in the fabrication process.

Moore’s law is now dead, in that transistor doubling now takes longer than two years. Would transistor doubling time have taken longer than two years, or slowed down earlier, if:

- the ecosystem had not been dominated by two symbiotic companies (or did network effects make it inevitable that there would be two symbiotic companies),

- the Internet had happened at a different time,

- software applications had quickly reached a good enough state,

- cloud computing had gone mainstream much earlier?

Licensing to decide the result of gcc vs llvm?

I was not surprised to hear today that Nvidia are halting development of their in-house C/C++ compiler and switching to one of the Open Source compilers. It is a lot cheaper to have one or two people looking after a company’s interests in a compiler developed by somebody else than having an in-house development group. It will be interesting to see how much longer Intel continues to fund their in-house compiler.

Nvidia chose llvm and gave a variety of technical reasons why this was the best choice over gcc.

One advantage (from Nvidia’s point of view) not mentioned is that llvm is licensed under a BSD style agreement. This means Nvidia don’t have to release the source code of any modifications or additions they make (they said these will be kept closed source); gcc is licensed under the GNU General Public License which requires source to be released. Arch rivals AMD (well, the ATI bit of AMD that does graphics hardware) also promote llvm, and I’m sure Nvidia does not want to help them in any way.

The licensing difference between gcc and llvm has the potential to make a big difference to the finances of both development teams.

My understanding of gcc funding is that most of it comes from back-end work (i.e., a company pays to have gcc work or do a better job on some [I imagine their] processor). Given a choice, would these companies rather release the source they paid to have written/modified or keep it closed? Some probably don’t care and hope that by making the source available others will help find and fix problems (i.e., there is a benefit to making it available). On the other hand, companies introducing processors with fancy new features will want to minimise any technology that competitors can get for free.

In the years to come, it is possible that gcc will lose a significant amount of this back-end income to llvm because of licensing.

PhD projects are the life-blood of new compiler optimization techniques and for many years source code from them has often ended up as the experimental version of a new optimization phase of gcc. Many students are firm believers in making source freely available and shy away from being involved in non-GPL projects. Will this deter them from using llvm in their research (there may be a growing trend favoring llvm over gcc in research, or the llvm people may be better than the gcc folk at marketing {not hard})?

If llvm does not get the new fancy optimizations for ‘free’ they are going to have to spend money doing the implementing themselves or have their performance slowly fall behind that of gcc. Will this cost be more or less than the additional income from closed source customers?

We are unlikely to know the impact that licensing has on the fortunes of both compilers until the end of this decade. Perhaps designing and building a new processor will not be economically worthwhile in 10 years, perhaps all the worthwhile optimizations will be done. We will have to wait and see.

Update 4 Jan 2012: Video (235M) of talk on status of effort to make llvm the default compiler in FreeBSD at LLVM 2011 Developer’s meeting.

Why does Intel sell compilers?

Intel is a commercial company and the obvious answer to the question of why it sells compilers is: to make money. Does a company that makes billions of dollars in profits really have any interest in making a few million, I’m guessing, from selling compilers? Of course not, Intel’s interest in compilers is as a means of helping them sell more hardware.

How can a compiler be used to increase computer hardware sales? One possibility is improved performance and another is customer perception of improved performance. My company’s first product was a code optimizer and I was always surprised by the number of customers who bought the product without ever performing any before/after timing benchmarks; I learned that engineers are seduced by the image of performance and only a few are ever forced to get involved in measuring it (having been backed into a corner because what they currently have is not good enough).

Intel are not the only company selling x86 chips; AMD and VIA have their own Intel compatible x86 chips. Intel compatible? Doesn’t that mean that programs compiled using the Intel compiler will execute just as quickly on the equivalent chip sold by competitors? Technically the answer is no, but the performance differences are likely to be small in most cases. However, I’m sure there are many developers who have been seduced by Intel’s marketing machine into believing that they need to purchase x86 chips from Intel to make sure they receive this ‘worthwhile’ benefit.

Where do manufacturer performance differences, for the same sequence of instructions, come from? They are caused by the often substantial internal architectural differences between the processors sold by different manufacturers. Intel and its competitors are also continually introducing new processor architectures, so processors from the same company will have different performance characteristics. It is possible for an optimizer to make use of information on different processor characteristics to tune the machine code generated for a particular high-level language construct, with the developer selecting the desired optimization target processor via compiler switches.

Optimizing code for one particular processor architecture is a specialist market. But let’s not forget all those customers who will be seduced by the image of performance and ignore details such as their programs being executed on a wide variety of architectures.

The quality of a compiler’s runtime library can have a significant impact on a program’s performance. The x86 instruction set has grown over time and large performance gains can be had by a library function that adapts to the instructions available on the processor it is currently executing on. The CPUID instruction provides all of the necessary information.

As well as providing information on the kind of processor and its architectural features the CPUID instruction can return information about the claimed manufacturer of the chip (some manufacturers provide a mechanism that allows users to change the character sequence returned by this instruction).

The behavior of some of the functions in Intel’s runtime library depends on the character sequence returned by the CPUID instruction, producing better performance for the sequence “GenuineIntel”. The US Federal Trade Commission have filed a complaint alleging that this is anti-competitive (more details) and requested that this manufacturer dependency be removed.
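The difference between adapting to the instructions a processor supports and adapting to its claimed manufacturer can be sketched in a few lines. This is an illustration only, not Intel's library code: the `CpuInfo` class stands in for what CPUID reports, and the routine names, feature names and selection rules are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CpuInfo:
    """Hypothetical stand-in for the information CPUID reports; not a real API."""
    vendor: str                        # e.g. "GenuineIntel" or "AuthenticAMD"
    features: frozenset = frozenset()  # e.g. {"sse2", "avx2"}


def pick_by_feature(cpu):
    """Choose the fastest routine the processor reports it can execute."""
    if "avx2" in cpu.features:
        return "copy_avx2"
    if "sse2" in cpu.features:
        return "copy_sse2"
    return "copy_generic"


def pick_by_vendor(cpu):
    """Only use the fast routines when the vendor string matches."""
    if cpu.vendor == "GenuineIntel":
        return pick_by_feature(cpu)
    return "copy_generic"


# Two processors reporting identical features but different vendor strings.
intel = CpuInfo("GenuineIntel", frozenset({"sse2", "avx2"}))
other = CpuInfo("AuthenticAMD", frozenset({"sse2", "avx2"}))

print(pick_by_feature(intel), pick_by_feature(other))
print(pick_by_vendor(intel), pick_by_vendor(other))
```

With feature-based selection both processors get the same routine; with vendor-based selection the second processor falls back to the generic routine even though it reports the same features.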

I think that removing this manufacturer dependency will have little impact on sales. Any Intel compiler user who is not targeting Intel chips and who has a real interest in performance can patch the runtime library; the supercomputer crowd will want to talk to the kind of sophisticated processor/compiler engineers that Intel makes available; and for everybody else it is about the perception of performance. In fact, Intel ought to agree to a ‘manufacturer free’ runtime library pronto, before too many developers have their delusions shattered.
