Archive

The first compiler was implemented in itself

I have been reading about the world’s first actual compiler (i.e., not a paper exercise), described in Corrado Böhm’s PhD thesis (French version from 1954, an English translation by Peter Sestoft). The thesis, submitted in 1951 to the Federal Technical University in Zurich, takes some untangling; when you are inventing a new field, ideas tend to be expressed using existing concepts and terminology, e.g., computer peripherals are called organs and registers are denoted by the symbol  .

Böhm had worked with Konrad Zuse and must have known about his language, Plankalkül. The language also has an APL feel to it (but without the vector operations).

Böhm’s language does not have a name; his thesis is really about translating mathematical expressions to machine code. The expressions are organised by what we today call basic blocks (Böhm calls them groups). The compiler for the unnamed language (along with a loader) is written in itself; a Java implementation is being worked on.

Böhm’s work is discussed in Donald Knuth’s “The Early Development of Programming Languages”, but there is nothing like reading the actual work (if only in translation) to get a feel for it.

Update (3 days later): Correspondence with Donald Knuth.

Update (3 days later): A January 1949 memo from Haskell Curry (he of Curry fame and more recently of Haskell association) also uses the term organ. Might we claim, based on two observations on different continents, that it was in general use?

Evidence for 28 possible compilers in 1957

In two earlier posts I discussed the early compilers for languages that are still widely used today and a report from 1963 showing how nothing has changed in programming languages.

The Handbook of Automation, Computation, and Control Volume 2, published in 1959, contains some interesting information. In particular Table 23 (below) is a list of “Automatic Coding Systems” (containing over 110 systems from 1957, of which 54 have a cross in the compiler column):

Columns: Computer, System Name or Acronym, Developed by, Code, M.L., Assem., Inter., Comp., Oper. Date, Indexing, Fl-Pt, Symb., Algeb.

IBM 704 AFAC Allison G.M. C X Sep 57 M2 M 2 X

CAGE General Electric X X Nov 55 M2 M 2

FORC Redstone Arsenal X Jun 57 M2 M 2 X

FORTRAN IBM R X Jan 57 M2 M 2 X

NYAP IBM X Jan 56 M2 M 2

PACT IA Pact Group X Jan 57 M2 M 1

REG-SYMBOLIC Los Alamos X Nov 55 M2 M 1

SAP United Aircraft R X Apr 56 M2 M 2

NYDPP Servo Bur. Corp. X Sep 57 M2 M 2

KOMPILER3 UCRL Livermore X Mar 58 M2 M 2 X

IBM 701 ACOM Allison G.M. C X Dec 54 S1 S 0

BACAIC Boeing Seattle A X X Jul 55 S 1 X

BAP UC Berkeley X X May 57 2

DOUGLAS Douglas SM X May 53 S 1

DUAL Los Alamos X X Mar 53 S 1

607 Los Alamos X Sep 53 1

FLOP Lockheed Calif. X X X Mar 53 S 1

JCS 13 Rand Corp. X Dec 53 1

KOMPILER 2 UCRL Livermore X Oct 55 S2 1 X

NAA ASSEMBLY N. Am. Aviation X

PACT I Pact Group R X Jun 55 S2 1

QUEASY NOTS Inyokern X Jan 55 S

QUICK Douglas ES X Jun 53 S 0

SHACO Los Alamos X Apr 53 S 1

SO 2 IBM X Apr 53 1

SPEEDCODING IBM R X X Apr 53 S1 S 1

IBM 705-1, 2 ACOM Allison G.M. C X Apr 57 S1 0

AUTOCODER IBM R X X X Dec 56 S 2

ELI Equitable Life C X May 57 S1 0

FAIR Eastman Kodak X Jan 57 S 0

PRINT I IBM R X X X Oct 56 S2 S 2

SYMB. ASSEM. IBM X Jan 56 S 1

SOHIO Std. Oil of Ohio X X X May 56 S1 S 1

FORTRAN IBM-Guide A X Nov 58 S2 S 2 X

IT Std. Oil of Ohio C X S2 S 1 X

AFAC Allison G.M. C X S2 S 2 X

IBM 705-3 FORTRAN IBM-Guide A X Dec 58 M2 M 2 X

AUTOCODER IBM A X X Sep 58 S 2

IBM 702 AUTOCODER IBM X X X Apr 55 S 1

ASSEMBLY IBM X Jun 54 1

SCRIPT G. E. Hanford R X X X X Jul 55 S1 S 1

IBM 709 FORTRAN IBM A X Jan 59 M2 M 2 X

SCAT IBM-Share R X X Nov 58 M2 M 2

IBM 650 ADES II Naval Ord. Lab X Feb 56 S2 S 1 X

BACAIC Boeing Seattle C X X X Aug 56 S 1 X

BALITAC M.I.T. X X X Jan 56 S1 2

BELL L1 Bell Tel. Labs X X Aug 55 S1 S 0

BELL L2,L3 Bell Tel. Labs X X Sep 55 S1 S 0

DRUCO I IBM X Sep 54 S 0

EASE II Allison G.M. X X Sep 56 S2 S 2

ELI Equitable Life C X May 57 S1 0

ESCAPE Curtiss-Wright X X X Jan 57 S1 S 2

FLAIR Lockheed MSD, Ga. X X Feb 55 S1 S 0

FOR TRANSIT IBM-Carnegie Tech. A X Oct 57 S2 S 2 X

IT Carnegie Tech. C X Feb 57 S2 S 1 X

MITILAC M.I.T. X X Jul 55 S1 S 2

OMNICODE G. E. Hanford X X Dec 56 S1 S 2

RELATIVE Allison G.M. X Aug 55 S1 S 1

SIR IBM X May 56 S 2

SOAP I IBM X Nov 55 2

SOAP II IBM R X Nov 56 M M 2

SPEED CODING Redstone Arsenal X X Sep 55 S1 S 0

SPUR Boeing Wichita X X X Aug 56 M S 1

FORTRAN (650T) IBM A X Jan 59 M2 M 2

Sperry Rand 1103A COMPILER I Boeing Seattle X X May 57 S 1 X

FAP Lockheed MSD X X Oct 56 S1 S 0

MISHAP Lockheed MSD X Oct 56 M1 S 1

RAWOOP-SNAP Ramo-Wooldridge X X Jun 57 M1 M 1

TRANS-USE Holloman A.F.B. X Nov 56 M1 S 2

USE Ramo-Wooldridge R X X Feb 57 M1 M 2

IT Carn. Tech.-R-W C X Dec 57 S2 S 1 X

UNICODE R Rand St. Paul R X Jan 59 S2 M 2 X

Sperry Rand 1103 CHIP Wright A.D.C. X X Feb 56 S1 S 0

FLIP/SPUR Convair San Diego X X Jun 55 S1 S 0

RAWOOP Ramo-Wooldridge R X Mar 55 S1 1

SNAP Ramo-Wooldridge R X X Aug 55 S1 S 1

Sperry Rand Univac I and II A0 Remington Rand X X X May 52 S1 S 1

A1 Remington Rand X X X Jan 53 S1 S 1

A2 Remington Rand X X X Aug 53 S1 S 1

A3,ARITHMATIC Remington Rand C X X X Apr 56 S1 S 1

AT3,MATHMATIC Remington Rand C X X Jun 56 S1 S 2 X

B0,FLOWMATIC Remington Rand A X X X Dec 56 S2 S 2

BIOR Remington Rand X X X Apr 55 1

GP Remington Rand R X X X Jan 57 S2 S 1

MJS (UNIVAC I) UCRL Livermore X X Jun 56 1

NYU,OMNIFAX New York Univ. X Feb 54 S 1

RELCODE Remington Rand X X Apr 56 1

SHORT CODE Remington Rand X X Feb 51 S 1

X-I Remington Rand C X X Jan 56 1

IT Case Institute C X S2 S 1 X

MATRIX MATH Franklin Inst. X Jan 58

Sperry Rand File Compo ABC R Rand St. Paul Jun 58

Sperry Rand Larc K5 UCRL Livermore X X M2 M 2 X

SAIL UCRL Livermore X M2 M 2

Burroughs Datatron 201, 205 DATACODEI Burroughs X Aug 57 MS1 S 1

DUMBO Babcock and Wilcox X X

IT Purdue Univ. A X Jul 57 S2 S 1 X

SAC Electrodata X X Aug 56 M 1

UGLIAC United Gas Corp. X Dec 56 S 0

Dow Chemical X

STAR Electrodata X

Burroughs UDEC III UDECIN-I Burroughs X 57 M/S S 1

UDECOM-3 Burroughs X 57 M S 1

M.I.T. Whirlwind ALGEBRAIC M.I.T. R X S2 S 1 X

COMPREHENSIVE M.I.T. X X X Nov 52 S1 S 1

SUMMER SESSION M.I.T. X Jun 53 S1 S 1

Midac EASIAC Univ. of Michigan X X Aug 54 S1 S

MAGIC Univ. of Michigan X X X Jan 54 S1 S

Datamatic ABC I Datamatic Corp. X

Ferranti TRANSCODE Univ. of Toronto R X X X Aug 54 M1 S

Illiac DEC INPUT Univ. of Illinois R X Sep 52 S1 S

Johnniac EASY FOX Rand Corp. R X Oct 55 S

Norc NORC COMPILER Naval Ord. Lab X X Aug 55 M2 M

Seac BASE 00 Natl. Bur. Stds. X X

UNIV. CODE Moore School X Apr 55

Chart Symbols used:

Code: R = Recommended for this computer, sometimes only for heavy usage. C = Common language for more than one computer. A = System is both recommended and has common language.

Indexing: M = Actual index registers or B boxes in machine hardware. S = Index registers simulated in synthetic language of system. 1 = Limited form of indexing, either stepped unidirectionally or by one word only, or having certain registers applicable to only certain variables, or not compound (by combination of contents of registers). 2 = General form, any variable may be indexed by any one or combination of registers which may be freely incremented or decremented by any amount.

Floating point: M = Inherent in machine hardware. S = Simulated in language.

Symbolism: 0 = None. 1 = Limited, either regional, relative or exactly computable. 2 = Fully descriptive English word or symbol combination which is descriptive of the variable or the assigned storage.

Algebraic: A single continuous algebraic formula statement may be made. Processor has mechanisms for applying associative and commutative laws to form operative program.

M.L. = Machine language. Assem. = Assemblers. Inter. = Interpreters. Comp. = Compilers.

Are the compilers really compilers as we know them today, or is this terminology that has not yet settled down? The computer terminology chapter refers readers interested in Assembler, Compiler and Interpreter to the entry for Routine:

“Routine. A set of instructions arranged in proper sequence to cause a computer to perform a desired operation or series of operations, such as the solution of a mathematical problem.

…

Compiler (compiling routine), an executive routine which, before the desired computation is started, translates a program expressed in pseudo-code into machine code (or into another pseudo-code for further translation by an interpreter).

…

Assemble, to integrate the subroutines (supplied, selected, or generated) into the main routine, i.e., to adapt, to specialize to the task at hand by means of preset parameters; to orient, to change relative and symbolic addresses to absolute form; to incorporate, to place in storage.

…

Interpreter (interpretive routine), an executive routine which, as the computation progresses, translates a stored program expressed in some machine-like pseudo-code into machine code and performs the indicated operations, by means of subroutines, as they are translated. …”

The definition of “Assemble” sounds more like a link-loader than an assembler.

When the coding system has a cross in both the assembler and compiler column, I suspect we are dealing with what would be called an assembler today. There are 28 crosses in the Compiler column that do not have a corresponding entry in the assembler column; does this mean there were 28 compilers in existence in 1957? I can imagine many of the languages being very simple (the fashionability of creating programming languages was already being called out in 1963), so producing a compiler for them would be feasible.
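The counting rule used above (a cross under Compiler with no cross under Assembler) can be sketched as a filter. This is only an illustration: the `CodingSystem` type, the `compiler_only` function and the `SYSTEM-A` to `SYSTEM-D` rows are made-up placeholders, not entries transcribed from Table 23.

```python
from typing import NamedTuple


class CodingSystem(NamedTuple):
    """One table row, reduced to the two columns the rule looks at."""
    name: str        # placeholder name, not a real Table 23 entry
    assembler: bool  # True if the Assem. column has a cross
    compiler: bool   # True if the Comp. column has a cross


def compiler_only(systems):
    """Return the systems crossed as compilers but not as assemblers."""
    return [s for s in systems if s.compiler and not s.assembler]


# Hypothetical rows covering the four possible combinations.
SAMPLE = [
    CodingSystem("SYSTEM-A", assembler=False, compiler=True),
    CodingSystem("SYSTEM-B", assembler=True, compiler=True),
    CodingSystem("SYSTEM-C", assembler=True, compiler=False),
    CodingSystem("SYSTEM-D", assembler=False, compiler=False),
]

if __name__ == "__main__":
    for system in compiler_only(SAMPLE):
        print(system.name)
```

Applying the same filter to a careful transcription of the table, with one flag per column, is what would give the count of 28.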

The citation given for Table 23 contains a few typos. I think the correct reference is: Bemer, Robert W. “The Status of Automatic Programming for Scientific Problems.” Proceedings of the Fourth Annual Computer Applications Symposium, 107-117. Armour Research Foundation, Illinois Institute of Technology, Oct. 24-25, 1957.

Programming Languages: nothing changes

While rummaging around today I came across: Programming Languages and Standardization in Command and Control by J. P. Haverty and R. L. Patrick.

Much of this report could have been written in 2013, but it was actually written fifty years earlier; the date is given away by “… the effort to develop programming languages to increase programmer productivity is barely eight years old.”

Much of the sound and fury over programming languages is the result of zealous proponents touting them as the solution to the “programming problem.”

I don’t think any major new sources of sound and fury have come to the fore.

… the designing of programming languages became fashionable.

Has it ever gone out of fashion?

Now the proliferation of languages increased rapidly as almost every user who developed a minor variant on one of the early languages rushed into publication, with the resultant sharp increase in acronyms. In addition, some languages were designed practically in vacuo. They did not grow out of the needs of a particular user, but were designed as someone’s “best guess” as to what the user needed (in some cases they appeared to be designed for the sake of designing).

My post on this subject was written 49 years later.

…a computer user, who has invested a million dollars in programming, is shocked to find himself almost trapped to stay with the same computer or transistorized computer of the same logical design as his old one because his problem has been written in the language of that computer, then patched and repatched, while his personnel has changed in such a way that nobody on his staff can say precisely what the data processing job is that his machine is now doing with sufficient clarity to make it easy to rewrite the application in the language of another machine.

Vendor lock-in has always been good for business.

Perhaps the most flagrantly overstated claim made for POLs is that they result in better understanding of the programming operation by higher-level management.

I don’t think I have ever heard this claim made. But then my programming experience started a bit later than this report.

… many applications do not require the services of an expert programmer.

Ssshh! Such talk is bad for business.

The cost of producing a modern compiler, checking it out, documenting it, and seeing it through initial field use, easily exceeds $500,000.

For ‘big-company’ written compilers this number has not changed much over the years. Of course man+dog written compilers are a lot cheaper and new companies looking to aggressively enter the market can spend an order of magnitude more.

In a young rapidly growing field major advances come so quickly or are so obvious that instituting a measurement program is probably a waste of time.

True.

At some point, however, as a field matures, the costs of a major advance become significant.

Hopefully this point in time has now arrived.

Language design is still as much an art as it is a science. The evaluation of programming languages is therefore much akin to art criticism–and as questionable.

Calling such a vanity driven activity an ‘art’ only helps glorify it.

Programming languages represent an attack on the “programming problem,” but only on a portion of it–and not a very substantial portion.

In fact, it is probably very insubstantial.

Much of the “programming problem” centers on the lack of well-trained experienced people–a lack not overcome by the use of a POL.

Nothing changes.

The following table is for those of you complaining about how long it takes to compile code these days. I once had to compile some Coral 66 on an Elliott 903; the compiler resided in 5(?) boxes of paper tape, one box per compiler pass. Compilation involved: reading the paper tape containing the first pass into the computer, running this program and then reading the paper tape containing the program source, then reading the second paper tape containing the next compiler pass (there was not enough memory to store all compiler passes at once), which processed the in-memory output of the first pass; this process was repeated for each successive pass, producing a paper tape output of the compiled code. I suspect that compiling on the machines listed gave the programmers involved plenty of exercise and practice splicing snapped paper tape.

| Computer | Cobol statements | Machine instructions | Compile time (mins) |
|---|---|---|---|
| UNIVAC II | 630 | 1,950 | 240 |
| RCA 501 | 410 | 2,132 | 72 |
| GE 225 | 328 | 4,300 | 16 |
| IBM 1410 | 174 | 968 | 46 |
| NCR 304 | 453 | 804 | 40 |
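To put the table's figures on a common footing, here is a small sketch that works out Cobol statements compiled per minute and machine instructions generated per statement. The numbers are copied from the table; the names (`COMPILE_DATA`, `statements_per_minute`, `expansion_ratio`, `summarize`) are my own and purely illustrative.

```python
# Figures copied from the compile-time table above.
# Each entry: (computer, Cobol statements, machine instructions, minutes)
COMPILE_DATA = [
    ("UNIVAC II", 630, 1950, 240),
    ("RCA 501", 410, 2132, 72),
    ("GE 225", 328, 4300, 16),
    ("IBM 1410", 174, 968, 46),
    ("NCR 304", 453, 804, 40),
]


def statements_per_minute(statements, minutes):
    """Compilation throughput in source statements per minute."""
    return statements / minutes


def expansion_ratio(statements, instructions):
    """Machine instructions generated per source statement."""
    return instructions / statements


def summarize(rows):
    """Return (computer, statements/min, instructions/statement) tuples."""
    return [
        (name, statements_per_minute(stmts, mins), expansion_ratio(stmts, instrs))
        for name, stmts, instrs, mins in rows
    ]


if __name__ == "__main__":
    for name, rate, ratio in summarize(COMPILE_DATA):
        print(f"{name:10} {rate:6.2f} statements/min "
              f"{ratio:6.2f} instructions/statement")
```

On these figures the GE 225 managed 20.5 statements per minute, while the UNIVAC II got through fewer than three.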

Array bound checking in C: the early products

Tools to support array bound checking in C have been around for almost as long as the language itself.

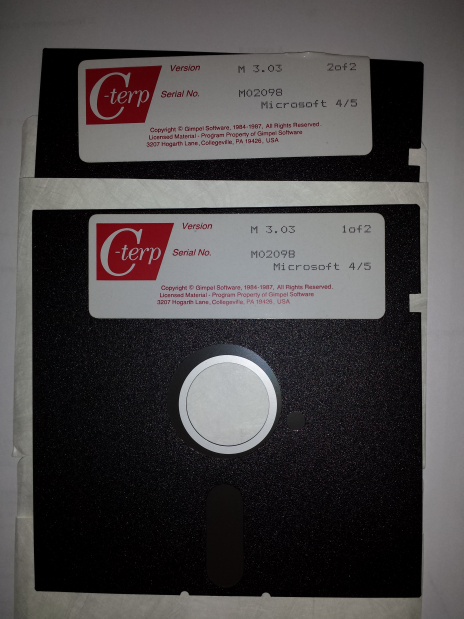

I recently came across product disks for C-terp; at 360k per 5 1/4 inch floppy, that is a C compiler, library and interpreter shipped on 720k of storage (the 3.5 inch floppies with 720k and then 1.44M came along later; Microsoft 4/5 is the version of MS-DOS supported). This was Gimpel Software’s first product in 1984.

The Model Implementation C checker work was done in the late 1980s and validated in 1991.

Purify from Pure Software was a well-known (at the time) checking tool in the Unix world, first available in 1991. There were a few other vendors producing tools on the back of Purify’s success.

Richard Jones (no relation) added bounds checking to gcc for his PhD. This work was done in 1995.

As a fan of bounds checking (finding bugs early saves lots of time) I was always on the lookout for C checking tools. I would be interested to hear about any bounds checking tools that predated Gimpel’s C-terp; as far as I know it was the first such commercially available tool.
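For readers who have not used such a tool, the essence of a run-time bounds check is a test of every index against the extent of the object before the access happens. The sketch below models that idea in Python rather than C; `CheckedArray` and `BoundsError` are invented names, and nothing here is meant to describe how C-terp, Purify or the gcc work was actually implemented.

```python
class BoundsError(Exception):
    """Raised when an access falls outside the array's storage."""


class CheckedArray:
    """Fixed-size array that validates every index before use."""

    def __init__(self, size, fill=0):
        self._cells = [fill] * size

    def _check(self, index):
        # Python lists accept negative indices, so the lower bound
        # has to be tested explicitly to mimic a C-style array.
        if not 0 <= index < len(self._cells):
            raise BoundsError(
                f"index {index} is outside 0..{len(self._cells) - 1}")

    def load(self, index):
        self._check(index)
        return self._cells[index]

    def store(self, index, value):
        self._check(index)
        self._cells[index] = value


if __name__ == "__main__":
    a = CheckedArray(10)
    a.store(9, 42)      # last valid element
    try:
        a.store(10, 1)  # one past the end: reported, not silently written
    except BoundsError as err:
        print("caught:", err)
```

The point of checking at the access, rather than waiting for a crash, is that the fault is reported where it happens instead of wherever the corrupted memory is next used.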

Early compiler history

Who wrote the early compilers, when did they do it, and what were the languages compiled?

Answering these questions requires defining what we mean by ‘compiler’ and deciding what counts as a language.

Donald Knuth does a masterful job of covering the early development of programming languages. People were writing programs in high level languages well before the 1950s, and Konrad Zuse is the forgotten pioneer from the 1940s (it did not help that Germany had just lost a war and people were not inclined to give Germans much public credit).

What is the distinction between a compiler and an assembler? Some early ‘high-level’ languages look distinctly low-level from our much later perspective; some assemblers support fancy subroutine and macro facilities that give them a high-level feel.

Glennie’s Autocode is from 1952 and depending on where the compiler/assembler line is drawn might be considered a contender for the first compiler. The team led by Grace Hopper produced A-0, a fancy link-loader, in 1952; they called it a compiler, but today we would not consider it to be one.

Talking of Grace Hopper, from a biography of her technical contributions she sounds like a person who could be technical but chose to be management.

Fortran and Cobol hog the limelight of early compiler history.

Work on the first Fortran compiler started in the summer of 1954 and was completed 2.5 years later, say the beginning of 1957. A very solid claim to being the first compiler (assuming Autocode and A-0 are not considered to be compilers).

Compilers were created for Algol 58 in 1958 (a long dead language implemented in Germany with the war still fresh in people’s minds; not a recipe for wide publicity) and 1960 (perhaps the first one-man-band implemented compiler; written by Knuth, an American, but still a long dead language). The 1958 implementation sounds like it has a good claim to being the second compiler after the Fortran compiler.

In December 1960 there were at least two Cobol compilers in existence (one of which was created by a team containing Grace Hopper in a management capacity). I think the glory attached to early Cobol compiler work is the result of having a female lead involved in the development team of one of these compilers. Why isn’t the other Cobol compiler ever mentioned? Why is the Algol 58 compiler implementation that occurred before the Cobol compiler never mentioned?

What were the early books on compiler writing?

“Algol 60 Implementation” by Russell and Randell (from 1964) still appears surprisingly modern.

Principles of Compiler Design, the “Dragon book”, came out in 1977 and was about the only easily obtainable compiler book for many years. The first half dealt with the theory of parser generators (the audience was CS undergraduates and parser generation used to be a very trendy topic with umpteen PhD theses written about it); the book morphed into Compilers: Principles, Techniques, and Tools. Old-timers will reminisce about how their copy of the book has a green dragon on the front, rather than the red dragon on later, trendier (with less parser theory and more code generation) editions.

Happy 30th birthday to GCC

Thirty years ago today Richard Stallman announced the availability of a beta version of gcc on the mod.compilers newsgroup.

Everybody and his dog was writing C compilers in the late 1980s and early 1990s (a C compiler validation suite vendor once told me they had sold over 150 copies; a compiler vendor has to be serious to fork out around $10,000 for a validation suite). Did gcc become the dominant open source compiler because one compiler would inevitably become dominant, or was there some collection of factors that gave gcc a significant advantage?

I think gcc’s market dominance was driven by two environmental factors, with some help from a technical compiler implementation decision.

The technical implementation decision was the use of RTL as the optimization+code generation strategy. Jack Davidson’s 1981 PhD thesis (and much later the LCC book) describes the gory details. The code generators for nearly every other C compiler were closely tied to the machine being targeted (because the implementers were focused on getting a job done, not producing a portable compiler system). Had they been so inclined Davidson and Christopher Fraser could have been the authors of the dominant C compiler.

The first environment factor was the creation of a support ecosystem around gcc. The glue that nourished this ecosystem was the money made writing code generators for the never ending supply of new cpus that companies were creating (that needed a C compiler). In the beginning Cygnus Solutions were the face of gcc+tools; Michael Tiemann, a bright affable young guy, once told me that he could not figure out why companies were throwing money at them and that perhaps it was because he was so tall. Richard Stallman was not the easiest person to get along with and was probably somebody you would try to avoid meeting (I don’t know if he has mellowed). If Cygnus (who had created 175 host/target combinations by 1999) had gone with a different compiler, gcc would be as well-known today as Hurd.

Yes, people writing Masters and PhD theses were using gcc as the scaffolding for their fancy new optimization techniques (e.g., here, here and here), but this work essentially played the role of an R&D group trying to figure out where effort ought to be invested writing production code.

Sun’s decision to unbundle the development environment (i.e., stop shipping a C compiler with every system) caused some developers to switch to another compiler, some choosing gcc.

The second environment factor was the huge leap in available memory on developer machines in the 1990s. Compiler vendors cannot ship compilers that do fancy optimization if developers don’t have computers with enough memory to hold the optimization information (many, many megabytes). Until developer machines contained lots of memory, a one-man band could build a compiler producing code that was essentially as good as everybody else’s. An open source market leader could not emerge until the man+dog compilers could be clearly seen to be inferior.

During the 1990s the amount of memory likely to be available in developers’ computers grew dramatically, allowing gcc to support more and more optimizations (donated by a myriad of people targeting some aspect of code generation that they found interesting). Code generation improved dramatically and man+dog compilers became obviously second/third rate.

Would things be different today if Linus Torvalds had not selected gcc? If Linus had chosen a compiler licensed under a more liberal license than copyleft, things might have turned out very differently. LLVM started life in 2003 and one of my predictions for 2009 was its demise in the next few years; I failed to see the importance of licensing to Apple (who essentially funded its development).

Eventually, success.

With success came new existential threats, in particular death by a thousand forks.

A serious fork occurred in 1997. Stallman was clogging up the works; fortunately he saw the writing on the wall and in 1999 stepped aside.

Money is what holds together the major development teams supporting gcc and llvm. What happens when the supply of customers wanting support for new back-ends dries up, what happens when major companies stop funding development? Do we start seeing adverts during compilation? Chris Lattner, the driving force behind llvm, recently moved to Tesla; will it turn out that his continuing management was as integral to the continuing success of llvm as getting rid of Stallman was to the continuing success of gcc?

Will a single mainline version of gcc still be the dominant compiler in another 30 years’ time?

Time will tell.

Does using formal methods mean anything?

What counts as use of formal methods in software development?

Mathematics is involved, but then mathematics is involved in almost every aspect of software.

Formal methods are founded on the lie that doing things in mathematics means the results must be correct. There are plenty of mistakes in published mathematical proofs, as any practicing mathematician will tell you. The stuff that gets taught at school and university has been thoroughly checked and stood the test of time; the new stuff could be as bug ridden as software.

In the 1970s and 1980s formal methods was all about use of notation and formalisms. Writing algorithms, specifications, requirements, etc. in what looked like mathematical notation was called formal methods. The hope was that one day a tool would be available to check that what had been written did indeed have the characteristics being claimed, e.g., consistency, completeness, fault free (whatever that meant).

While everybody talked about automatic checking tools, what people spent their time doing was inventing new notations and formalisms. You were not a respected formal methods researcher unless you had several published papers, and preferably a book, describing your own formalism.

The market leader was VDM, mainly due to the work/promotion by Dansk Datamatik Center. I was a fan of Denotational semantics. There are even ISO standards for a couple of formal specification languages.

Fast forward to the last 10 years. What counts as done using formal methods today?

These days researchers who claim to be “doing formal methods” seem to be writing code (which is an improvement over writing symbols on paper; it helps that today’s computers are orders of magnitude more powerful). The code written involves proof assistants such as Coq and Isabelle and programming languages such as OCaml and Haskell.

Can anybody writing code in OCaml or Haskell claim to be doing formal methods, or does a proof assistant of some kind have to be involved in the process?

If a program’s source code is translated into a form that can be handled by a proof assistant, can the issue of correctness of the translation be ignored? There is one research group who thinks it is ok to “trust” the translation process.

If one component of a program (say, parts of a compiler’s code generator) has been analyzed using a proof assistant, is it ok to claim that the entire program (perhaps the syntax and semantics processing that happens before code generation) has been formally verified? There is one research group who think such claims can be made about the entire program.

If I write a specification in Visual Basic, map this specification into C and involve formal methods at some point(s) in the process, then is it ok for me to claim that the correctness of the C implementation has been formally verified? There seem to be enough precedents for this claim to be viable.

In this day and age, is the use of formal methods anything more than a sign of intellectual dishonesty? Or is it just that today’s researchers are lazy, unwilling to put the effort into making sure that claims of correctness are proved start to finish?

‘to program’ is 70 this month

‘To program’ was first used to describe writing programs in August 1946.

The evidence for this is contained in First draft of a report on the EDVAC by John von Neumann and material from the Moore School lectures. Lecture 34, held on 7th August, uses “program” in its modern sense.

My copy of the Shorter Oxford English Dictionary, from 1976, does not list the computer usage at all! Perhaps, only being 30 years old in 1976, the computer usage was only considered important enough to include in the 20 volume version of the dictionary and had to wait a few more decades to be included in the shorter 2 volume set. Can a reader with access to the 20 volume set from 1976 confirm that it does include a computer usage for program?

Program, programme, 1633. [orig., in spelling program, – Gr.-L. programma … reintroduced from Fr. programme, and now more usu. so spelt.] … 1. A public notice … 2. A descriptive notice,… a course of study, etc.; a prospectus, syllabus; now esp. a list of the items or ‘numbers’ of a concert…

It would be another two years before a stored program computer was available ‘to program’ computers in a way that mimics how things are done today.

Grier ties it all together in a convincing argument in his paper: “The ENIAC, the verb “to program” and the emergence of digital computers” (cannot find a copy outside a paywall).

Stephen Wolfram does a great job of untangling Ada Lovelace’s computer work. I think it is true to say that Lovelace is the first person to think like a programmer, while Charles Babbage was the first person to think like a computer hardware engineer.

If you encounter somebody claiming to have been programming for more than 70 years, they are probably embellishing their cv (in the late 90s I used to bump into people claiming to have been using Java for 10 years, i.e., somewhat longer than the language had existed).

Update: Oxford dictionaries used to come with an Addenda (thanks to Stephen Cox for reminding me in the comments; my volume II even says “Marl-Z and Addenda” on the spine).

Program, programme. 2. c. Computers. A fully explicit series of instructions which when fed into a computer will automatically direct its operation in carrying out a specific task 1946. Also as v. trans., to supply (a computer) with a p.; to cause (a computer) to do something by this means; also, to express as or in a p. Hence Programming vbl. sb., the operation of programming a computer; also, the writing or preparation of programs. Programmer, a person who does this.

The fall of Rome and the ascendancy of ego and bluster

The idea that empirical software engineering started 10 years ago, driven by the availability of Open Source that could be measured, turns out to be a rather blinkered view of history.

A few months ago I was searching for a report and tried the Defense Technical Information Center (DTIC), which I had last tried many years ago. The search quickly located the report, plus lots of other stuff, and over the next few weeks I downloaded a few hundred interesting looking reports. These are pdfs that often don’t show up in Google searches and sometimes not on DTIC searches unless the right combination of words is used (many of the pdfs have been created by converting microfiche copies of the original paper, with some, often very good, OCR thrown into the mix).

It turns out that during the 1970s at the Rome Air Development Center (RADC was the primary research lab of the US Air Force) something of a golden age for empirical software engineering research occurred (compared to the following 25 years).

The ingredients necessary for great research came together at Rome during this decade: the US Department of Defense were spending huge amounts of money creating lots of software systems; the management at RADC understood the importance of measurement and analysis, and had the money to hire good consultants to do it.

Why did the volume of quality reports coming out of RADC decline in the 1980s (it closed in 1991)? I have no idea, perhaps the managers responsible for the great work moved on or priorities changed.

Ego and bluster is how software engineering research operated after the decline of Rome (I’m sure plenty of it occurred before and during Rome). A researcher or independent consultant had an idea about how they thought things worked (perhaps bolstered by a personal experience, not lots of data) and if their ego was big enough to think this idea was a good model of reality and they invested enough time blustering their way through workshop presentations and publishing papers, then the idea could become part of mainstream thinking in academia; no empirical evidence needed.

The start of the ego and bluster period could be said to be 1981, the year in which Software Engineering Economics by Barry Boehm was published, and its end (or at least the start of its decline) 2008, the year of publication of the 3rd edition of Applied Software Measurement by Capers Jones. Both books dress up tiny amounts of empirical data as ‘proof’ of the ideas being promoted.

Without measurement data researchers have to resort to bluster to hide the flimsy foundations of the claims being made, those with the biggest egos taking center stage. Commercial companies are loath to let outsiders measure what they are doing and very few measure what they are doing themselves (so even confidential data is rare). Most researchers moved onto other topics once they realised how little data was available or could be made available to them.

Around 2005, or so, the volume of papers using new empirical data started to grow and a trickle has now turned into a flood. Of course making use of empirical data does not prevent research papers being a complete waste of time and ego and bluster is still widely practiced (and not limited to software engineering).

While a variety of individuals and research groups around the world (sadly only individuals in the UK) are actively working on extracting and analysing open source systems, practical progress has been very slow. Researchers are still coming to grips with the basic characteristics of the data they are seeing.

The current legacy, in software engineering, of long standing beliefs (built on tiny datasets) is a big problem. Lots of researchers have not seen through the bluster and are spending lots of time and effort trying to accommodate the results they are obtaining with what are mistakenly taken to be ‘established’ theories. One example is “Program Evolution – Processes of Software Change” by Lehman and Belady, from the late 1970s. Researchers need to stop looking at Lehman’s ‘laws’ through rose tinted glasses; a modern paper making Lehman’s claims based on such a small set of measurements would be laughed at.

Most of those now working with empirical data are students working towards a postgraduate degree, some of whom have gone on to get full-time research jobs. Unfortunately there are still many researchers applying the habits they acquired during the ego and bluster period; fit some old data using the latest fashionable technique, publish and quickly move on to the next fashionable technique. As Max Planck observed, science advances one funeral at a time.

What name should be given to this new period of software engineering research? We will probably have to wait many more years before things become clear.

Software Reliability is one report from the Rome period that is well worth checking out.

NASA sponsored research was hit and mostly miss. One very interesting sequence of experiments is documented in: Software reliability: Repetitive run experimentation and modeling and An experiment in software reliability.
