Archive

A comparison of C++ and Rust compiler performance

Large code bases take a long time to compile and build. How much impact does the choice of programming language have on compiler/build times?

The small number of industrial strength compilers available for the widely used languages makes the discussion as much about distinct implementations as distinct languages. And then there is the issue of different versions of the same compiler having different performance characteristics; for instance, the performance of Microsoft’s C++ Visual Studio compiler depends on the release of the compiler and the version of the standard specified.

Implementation details can have a significant impact on compile time. Compile time may be dominated by lexical analysis, while support for lots of fancy optimization shifts the time costs to code generation, and poorly chosen algorithms can result in symbol table lookup being a time sink (especially for large programs). For short programs, compile time may be dominated by start-up costs. These days, developers rarely have to worry about small memory size causing occurring when compiling a large source file.

A recent blog post compared the compile/build time performance of Rust and C++ on Linux and Apple’s OS X, and strager kindly made the data available.

The most obvious problem with attempting to compare the performance of compilers for different languages is that the amount of code contained in programs implementing the same functionality will differ (this is also true when the programs are written in the same language).

strager’s solution was to take a C++ program containing 9.3k LOC, plus 7.3K LOC of tests, and convert everything to Rust (9.5K LOC, plus 7.6K LOC of tests). In total, the benchmark consisted of compiling/building three C++ source files and three equivalent Rust source files.

The performance impact of number of lines of source code was measured by taking the largest file and copy-pasting it 8, 16, and 32 times, to create three every larger versions.

The OS X benchmarks did not include multiple file sizes, and are not included in the following analysis.

A variety of toolchain options were included in different runs, and for Rust various command line options; most distinct benchmarks were run 10 times each. On Linux, there were a total of 360 C++ runs, and for Rust 1,066 runs (code+data).

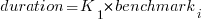

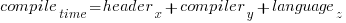

The model  , where

, where  is a fitted regression constant, and

is a fitted regression constant, and  is the fitted regression coefficient for the

is the fitted regression coefficient for the  ‘th benchmark, explains just over 50% of the variance. The language is implicit in the benchmark information.

‘th benchmark, explains just over 50% of the variance. The language is implicit in the benchmark information.

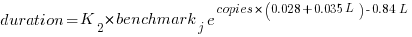

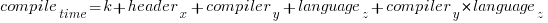

The model  , where

, where  is the number of copies,

is the number of copies,  is 0 for C++ and 1 for Rust, explains 92% of the variance, i.e., it is a very good fit.

is 0 for C++ and 1 for Rust, explains 92% of the variance, i.e., it is a very good fit.

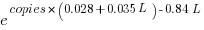

The expression  is a language and source code size multiplication factor. The numeric values are:

is a language and source code size multiplication factor. The numeric values are:

1 8 16 32 C++ 1.03 1.25 1.57 2.45 Rust 0.98 1.52 2.52 6.90 |

showing that while Rust compilation is faster than C++, for ‘shorter’ files, it becomes much relatively slower as the quantity of source increases.

The size factor for Rust is growing quadratically, while it is much closer to linear for C++.

What are the threats to the validity of this benchmark comparison?

The most obvious is the small number of files benchmarked. I don’t have any ideas for creating a larger sample.

How representative is the benchmark of typical projects? An explicit goal in benchmark selection was to minimise external dependencies (to reduce the effort likely to be needed to translate to Rust). Typically, projects contain many dependencies, with compilers sometimes spending more time processing dependency information than compiling the actual top-level source, e.g., header files in C++.

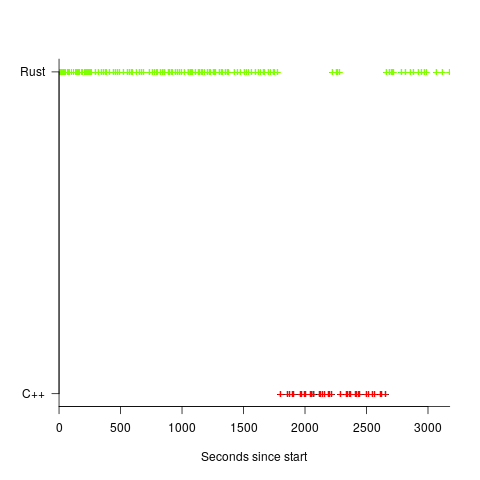

A less obvious threat is the fact that the benchmarks for each language were run in contiguous time intervals, i.e., all Rust, then all C++, then all Rust (see plot below; code+data):

It is possible that one or more background processes were running while one set of language benchmarks was being run, which would skew the results. One solution is to intermix the runs for each language (switching off all background tasks is much harder).

I was surprised by how well the regression model fitted the data; the fit is rarely this good. Perhaps a larger set of benchmarks would increase the variance.

2021 in the programming language standards’ world

Last Tuesday I was on a Webex call (the British Standards Institute’s use of Webex for conference calls predates COVID 19) for a meeting of IST/5, the committee responsible for programming language standards in the UK.

There have been two developments whose effect, I think, will be to hasten the decline of the relevance of ISO standards in the programming language world (to the point that they are ignored by compiler vendors).

- People have been talking about switching to online meetings for years, and every now and again someone has dialed-in to the conference call phone system provided by conference organizers. COVID has made online meetings the norm (language working groups have replaced face-to-face meetings with online meetings). People are looking forward to having face-to-face meetings again, but there is talk of online attendance playing a much larger role in the future.

The cost of attending a meeting in person is the perennial reason given for people not playing an active role in language standards (and I imagine other standards). Online attendance significantly reduces the cost, and an increase in the number of people ‘attending’ meetings is to be expected if committees agree to significant online attendance.

While many people think that making it possible for more people to be involved, by reducing the cost, is a good idea, I think it is a bad idea. The rationale for the creation of standards is economic; customer costs are reduced by reducing

diversityincompatibilities across the same kind of product., e.g., all standard conforming compilers are consistent in their handling of the same construct (undefined behavior may be consistently different). When attending meetings is costly, those with a significant economic interest tend to form the bulk of those attending meetings. Every now and again somebody turns up for a drive-by-shooting, i.e., they turn up for a day to present a paper on their pet issue and are never seen again.Lowering the barrier to entry (i.e., cost) is going to increase the number of drive-by shootings. The cost of this spray of pet-issue papers falls on the regular attendees, who will have to spend time dealing with enthusiastic, single issue, newbies,

- The International Organization for Standardization (ISO is the abbreviation of the French title) has embraced the use of inclusive terminology. The ISO directives specifying the Principles and rules for the structure and drafting of ISO and IEC documents, have been updated by the addition of a new clause: 8.6 Inclusive terminology, which says:

“Whenever possible, inclusive terminology shall be used to describe technical capabilities and relationships. Insensitive, archaic and non-inclusive terms shall be avoided. For the purposes of this principle, “inclusive terminology” means terminology perceived or likely to be perceived as welcoming by everyone, regardless of their sex, gender, race, colour, religion, etc.

New documents shall be developed using inclusive terminology. As feasible, existing and legacy documents shall be updated to identify and replace non-inclusive terms with alternatives that are more descriptive and tailored to the technical capability or relationship.”

The US Standards body, has released the document INCITS inclusive terminology guidelines. Section 5 covers identifying negative terms, and Section 6 deals with “Migration from terms with negative connotations”. Annex A provides examples of terms with negative connotations, preceded by text in bright red “CONTENT WARNING: The following list contains material that may be harmful or

traumatizing to some audiences.”“Error” sounds like a very negative word to me, but it’s not in the annex. One of the words listed in the annex is “dummy”. One member pointed out that ‘dummy’ appears 794 times in the current Fortran standard, (586 times in ‘dummy argument’).

Replacing words with negative connotations leads to frustration and distorted perceptions of what is being communicated.

I think there will be zero real world impact from the use of inclusive terminology in ISO standards, for the simple reason that terminology in ISO standards usually has zero real world impact (based on my experience of the use of terminology in ISO language standards). But the use of inclusive terminology does provide a new opportunity for virtue signalling by members of standards’ committees.

While use of inclusive terminology in ISO standards is unlikely to have any real world impact, the need to deal with suggested changes of terminology, and new terminology, will consume committee time. Most committee members tend to a rather pragmatic, but it only takes one or two people to keep a discussion going and going.

Over time, compiler vendors are going to become disenchanted with the increased workload, and the endless discussions relating to pet-issues and inclusive terminology. Given that there are so few industrial strength compilers for any language, the world no longer needs formally agreed language standards; the behavior that implementations have to support is controlled by the huge volume of existing code. Eventually, compiler vendors will sever the cord to the ISO standards process, and outside the SC22 bubble nobody will notice.

Where are the industrial strength R compilers?

Why don’t compiler projects for the R language make it into production use? The few that have been written have remained individual experimental products, e.g., RLLVMCompile.

Most popular languages attract many compiler implementations. I’m not saying that any of these implementations have more than a handful of users, that they implement the full language (a full implementation is not common), or that they fulfil any need other than their implementers desire to build something.

A commonly heard reason for the lack of production R compilers is that it is not worth the time and effort, because most of an R program’s time is spent in the library code which is written in a compiled language (e.g., C or Fortran). The fact that it is probably not worth the time and effort has not stopped people writing compilers for other languages, but then I think that the kind of people who use R tend not to be the kind of people who want to spend their time writing compilers. On the whole, they are the kind of people who are into statistics and data analysis.

Is it true that that most R programs spend most of their time executing library code? It’s certainly true for me. But I have noticed that a lot of the library functions executed by my code are written in R. Also, if somebody uses R for all their programming needs (it might be the only language they know), then their code might not be heavily library dependent.

I was surprised to read about Tierney’s byte code compiler, because his implementation is how I thought the R-core’s existing implementation worked (it does now). The internals of R is based on 1980s textbook functional techniques, and like many book implementations of the day, performance is dependent on the escape hatch of compiled code. R’s implementers wisely spent their time addressing user concerns, which revolved around statistics and visual presentation, i.e., not internal implementation technicalities.

Building an R compiler is easy, the much harder and time-consuming part is the runtime system.

Threaded code is a quick and simple approach to compiler implementation. R source gets mapped to a sequence of C function calls, with these functions proving a wrapper to library functions implementing the appropriate basic functionality, e.g., add two vectors. This approach has been the subject of at least one Master’s thesis. Thesis implementations rarely reach production use because those involved significantly underestimate the work that remains to be done, which is usually a lot more than the original implementation.

A simple threaded code approach provides a base for subsequent optimization, with the base having a similar performance to an interpreter. Optimizing requires figuring out details of the operations performed and replacing generic function calls with ones designed to be fast for specific cases, or even better replacing calls with inline code, e.g., adding short vectors of integers. There is a lot of existing work for scripting languages and a few PhD thesis researching R (e.g., Wang). The key technique is static analysis of R source.

Jan Vitek is running what appears to be the most active R compiler research group, at the moment e.g., the Ř project. Research can be good for uncovering language usage and trying out different techniques, but it is not intended to produce industry strength code. Lots of the fancy optimizations in early versions of the gcc C compiler started life as a PhD thesis, with the respective individual sometimes going on to spend a few years creating a production quality version for the released compiler.

The essential ingredient for building a production compiler is persistence. There are an awful lot of details that need to be sorted out (this is why research project code does not directly translate to production code, they ignore ‘minor’ details in order to concentrate on the ‘interesting’ research problem). Is there a small group of people currently beavering away on a production quality compiler for R? If there is, I can understand being discrete, on long-term projects it can be very annoying to have people regularly asking when the software is going to be released.

To have a life, once released, a production compiler needs to attract users, who are often loyal to their current compiler (because they know that their code works for this compiler); there needs to be a substantial benefit to entice people to switch. The benefit of compiling R to machine code, rather than interpreting, is performance. What performance improvement is needed to attract a viable community of users (there is always a tiny subset of users who will pay lots for even small performance improvements)?

My R code is rarely cpu bound, so I am not in the target audience, no matter what the speed-up. I don’t have any insight in the performance problems experienced by the R community, and have no idea whether a factor of two, five, ten or more would be enough.

Compiler validation used to be a big thing

Compiler validation used to be a big thing; a NIST quarterly validated products list could run to nearly 150 pages, and approaching 1,000 products (not all were compilers).

Why did compiler validation stop being a thing?

Running a compiler validation service (NIST was also involved with POSIX, graphics, and computer security protocols validation) costs money. If there are enough people willing to pay (NIST charged for the validation service), the service pays for itself.

The 1990s was a period of consolidation, lots of computer manufacturers went out of business and Micro Focus grew to dominate the Cobol compiler business. The number of companies willing to pay for validation fell below the number needed to maintain the service; the service was terminated in 1998.

The source code of the Cobol, Fortran and SQL + others tests that vendors had to pass (to appear for 12 months in the validated products list) is still available; the C validation suite costs money. But passing these tests, then paying NIST’s fee for somebody to turn up and watch the compiler pass the tests, no longer gets your product’s name in lights (or on the validated products list).

At the time, those involved lamented the demise of compiler validation. However, compiler validation was only needed because many vendors failed to implement parts of the language standard, or implemented them differently. In many ways, reducing the number of vendors is a more effective means of ensuring consistent compiler behavior. Compiler monoculture may spell doom for those in the compiler business (and language standards), but is desirable from the developers’ perspective.

How do we know whether today’s compilers implement the requirements contained in the corresponding ISO language standard? You could argue that this is a pointless question, i.e., gcc and llvm are the language standard; but let’s pretend this is not the case.

Fuzzing is good for testing code generation. Checking language semantics still requires expert human effort, and lots of it. People have to extract the requirements contained in the language specification, and write code that checks whether the required behavior is implemented. As far as I know, there are only commercial groups doing this, i.e., nothing in the open source world; pointers welcome.

2019 in the programming language standards’ world

Last Tuesday I was at the British Standards Institute for a meeting of IST/5, the committee responsible for programming language standards in the UK.

There has been progress on a few issues discussed last year, and one interesting point came up.

It is starting to look as if there might be another iteration of the Cobol Standard. A handful of people, in various countries, have started to nibble around the edges of various new (in the Cobol sense) features. No, the INCITS Cobol committee (the people who used to do all the heavy lifting) has not been reformed; the work now appears to be driven by people who cannot let go of their involvement in Cobol standards.

ISO/IEC 23360-1:2006, the ISO version of the Linux Base Standard, has been updated and we were asked for a UK position on the document being published. Abstain seemed to be the only sensible option.

Our WG20 representative reported that the ongoing debate over pile of poo emoji has crossed the chasm (he did not exactly phrase it like that). Vendors want to have the freedom to specify code-points for use with their own emoji, e.g., pineapple emoji. The heady days, of a few short years ago, when an encoding for all the world’s character symbols seemed possible, have become a distant memory (the number of unhandled logographs on ancient pots and clay tablets was declining rapidly). Who could have predicted that the dream of a complete encoding of the symbols used by all the world’s languages would be dashed by pile of poo emoji?

The interesting news is from WG9. The document intended to become the Ada20 standard was due to enter the voting process in June, i.e., the committee considered it done. At the end of April the main Ada compiler vendor asked for the schedule to be slipped by a year or two, to enable them to get some implementation experience with the new features; oops. I have been predicting that in the future language ‘standards’ will be decided by the main compiler vendors, and the future is finally starting to arrive. What is the incentive for the GNAT compiler people to pay any attention to proposals written by a bunch of non-customers (ok, some of them might work for customers)? One answer is that Ada users tend to be large bureaucratic organizations (e.g., the DOD), who like to follow standards, and might fund GNAT to implement the new document (perhaps this delay by GNAT is all about funding, or lack thereof).

Right on cue, C++ users have started to notice that C++20’s added support for a system header with the name version, which conflicts with much existing practice of using a file called version to contain versioning information; a problem if the header search path used the compiler includes a project’s top-level directory (which is where the versioning file version often sits). So the WG21 committee decides on what it thinks is a good idea, implementors implement it, and users complain; implementors now have a good reason to not follow a requirement in the standard, to keep users happy. Will WG21 be apologetic, or get all high and mighty; we will have to wait and see.

C2X and undefined behavior

The ISO C Standard is currently being revised by WG14, to create C2X.

There is a rather nebulous clustering of people who want to stop compilers using undefined behaviors to generate what these people (and probably most other developers) consider to be very surprising code. For instance, always printing p is truep is false, when executing the code: bool p; if ( p ) printf("p is true"); if ( !p ) printf("p is false"); (possible because p is uninitialized, and accessing an uninitialized value is undefined behavior).

This sounds like a good thing; nobody wants compilers generating surprising code.

All the proposals I have seen, so far, involve doing away with constructs that can produce undefined behavior. Again, this sounds like a good thing; nobody likes undefined behaviors.

The problem is, there is a reason for labeling certain constructs as producing undefined behavior; the behavior is who-knows-what.

Now the C Standard could specify the who-knows-what behavior; for instance, it could specify that the result of dividing by zero is 42. Standard’s conforming compilers would then have to generate code to check whether the denominator was zero, and return 42 for this case (until Intel, ARM and other processor vendors ‘updated’ the behavior of their divide instructions). Way-back-when a design decision was made, the behavior of divide by zero is undefined, not 42 or any other value; this was a design decision, code efficiency and compactness was considered to be more important.

I have not seen anybody arguing that the behavior of divide by zero should be specified. But I have seen people arguing that once C’s integer representation is specified as being twos-compliment (currently it can also be ones-compliment or signed-magnitude), then arithmetic overflow becomes defined. Wrong.

Twos-compliment is a specification of a representation, not a specification of behavior. What is the behavior when the result of adding two integers cannot be represented? The result might be to wrap (the behavior expected by many developers), to saturate at the maximum value (frequently needed in image and signal processing), to raise a signal (overflow is not usually supposed to happen), or something else.

WG14 could define the behavior, for when the result of an arithmetic operation is not representable in the number of bits available. Standard’s conforming compilers targeting processors whose arithmetic instructions did not behave as required would have to generate code, for any operation that could overflow, to do what was necessary. The embedded market are heavy users of C; in this market memory is limited, and processor performance is never fast enough, the overhead of supporting a defined behavior could just be too high (a more attractive solution is to code review, to make sure the undefined behavior cannot occur).

Is there another way of addressing the issue of compiler writers’ use/misuse of undefined behavior? Yes, offer them money. Compiler writing is a business, at least at the level at which gcc and llvm operate. If people really are keen to influence the code generated by gcc and llvm, money is the solution. Wot, no money? Then stop complaining.

Modeling visual studio C++ compile times

Last week I spotted an interesting article on the compile-time performance of C++ compilers running under Microsoft Windows. The author had obviously put a lot of work into gathering the data, and had taken care to have multiple runs to reduce the impact of random effects (128 runs to be exact); but, as if often the case, the analysis of the data was lackluster. I posted a comment asking for the data, and a link was posted the next day 🙂

The compilers benchmarked were: Visual Studio 2015, Visual Studio 2017 and clang 7.0.1; the compilers were configured to target: C++20, C++17, C++14, C++11, C++03, or C++98. The source code used was 100 system headers.

If we are interested in understanding the contribution of each component to overall compile-time, the obvious fist regression model to build is:

where:  are the different headers,

are the different headers,  the different compilers and

the different compilers and  the different target languages. There might be some interaction between variables, so something more complicated was tried first; the final fitted model was (code+data):

the different target languages. There might be some interaction between variables, so something more complicated was tried first; the final fitted model was (code+data):

where  is a constant (the

is a constant (the Intercept in R’s summary output). The following is a list of normalised numbers to plug into the equation (clang is the default compiler and C++03 the default language, and so do not appear in the list, the : symbol represents the multiplication; only a few of the 100 headers are listed, details are available):

Estimate Std. Error t value Pr(>|t|) (Intercept) headerany 1.000000000 0.051100398 headerarray headerassert.h 0.522336397 -0.654056185 ... headerwctype.h headerwindows.h -0.648095154 1.304270250 compilerVS15 compilerVS17 -0.185795534 -0.114590143 languagec++11 languagec++14 0.032930014 0.156363433 languagec++17 languagec++20 0.192301727 0.184274629 languagec++98 compilerVS15:languagec++11 0.001149643 -0.058735591 compilerVS17:languagec++11 compilerVS15:languagec++14 -0.038582437 -0.183708714 compilerVS17:languagec++14 compilerVS15:languagec++17 -0.164031495 NA compilerVS17:languagec++17 compilerVS15:languagec++20 -0.181591418 NA compilerVS17:languagec++20 compilerVS15:languagec++98 -0.193587045 0.062414667 compilerVS17:languagec++98 0.014558295 |

As an example, the (normalised) time to compile wchar.h using VS15 with languagec++11 is:

1-0.514807638-0.183862162+0.033951731-0.059720131

Each component adds/substracts to/from the normalised mean.

Building this model didn’t take long. While waiting for the kettle to boil, I suddenly realised that an additive model was probably inappropriate for this problem; oops. Surely the contribution of each component was multiplicative, i.e., components have a percentage impact to performance.

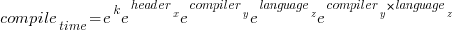

A quick change to the form of the fitted model:

Taking the exponential of both side, the fitted equation becomes:

The numbers, after taking the exponent, are:

(Intercept) headerany 9.724619e+08 1.051756e+00 ... headerwctype.h headerwindows.h 3.138361e-01 2.288970e+00 compilerVS15 compilerVS17 7.286951e-01 7.772886e-01 languagec++11 languagec++14 1.011743e+00 1.049049e+00 languagec++17 languagec++20 1.067557e+00 1.056677e+00 languagec++98 compilerVS15:languagec++11 1.003249e+00 9.735327e-01 compilerVS17:languagec++11 compilerVS15:languagec++14 9.880285e-01 9.351416e-01 compilerVS17:languagec++14 compilerVS15:languagec++17 9.501834e-01 NA compilerVS17:languagec++17 compilerVS15:languagec++20 9.480678e-01 NA compilerVS17:languagec++20 compilerVS15:languagec++98 9.402461e-01 1.058305e+00 compilerVS17:languagec++98 1.001267e+00 |

Taking the same example as above: wchar.h using VS15 with c++11. The compile-time (in cpu clock cycles) is:

9.724619e+08*3.138361e-01*7.286951e-01*1.011743e+00*9.735327e-01

Now each component causes a percentage change in the (mean) base value.

Both of these model explain over 90% of the variance in the data, but this is hardly surprising given they include so much detail.

In reality compile-time is driven by some combination of additive and multiplicative factors. Building a combined additive and multiplicative model is going to be like wrestling an octopus, and is left as an exercise for the reader 🙂

Given a choice between these two models, I think the multiplicative model is probably closest to reality.

The 520’th post

This is the 520’th post on this blog, which will be 10-years old tomorrow. Regular readers may have noticed an increase in the rate of posting over the last few months; at the start of this month I needed to write 10 posts to hit my one-post a week target (which has depleted the list of things I keep meaning to write about).

What has happened in the last 10-years?

- I no longer visit libraries, which are becoming coffee shops+wifi hot-spots where people who have librarian in their job title, hot desk; books, they are around here somewhere. I used to regularly visit libraries, particularly while working on my C book. No libraries have so far needed to be visited, for the writing of my evidence-based software engineering book,

- many old manuals, reports, books and magazines became freely available for download, via sites like the Internet Archive, Bitsavers and the Defense Technical Information Center; for second hand books there is AbeBooks. Site like Research Gate, Semantic Scholar and Google Scholar are fantastic sources for more recent work; for new books there is Amazon,

- Github became the place to make source code+stuff available,

- researchers in software engineering started to become interested in evidence-based research. In the UK the CREST Open Workshops were a fantastic series of events; I went to about a third of them, and there were often a couple of gold nuggets per event (a change of funding means running future events will require a lot more work),

- smart phones became the last, next, major software consumer ecosystem (capturing a large percentage of the world’s population means there is no room left for something bigger), and the cloud started on its path to being 99% of the commercial software ecosystem,

- Python joined the short-list to become the world’s primary programming language (assuming that people still run programs outside of the browser). The decline of PERL became very obvious, and work on adding new features to Cobol stopped (work on adding features to Fortran is still going strong),

- known faults are now being automatically fixed by modifying the source code (using genetic programming). This has yet to move out of research, but we all know where it’s going,

- whole program optimization of systems containing millions of lines of code became a viable option for commercial developers (a topic of late night discussion for compiler writers in the 1980s, and perhaps earlier decades, when having more than 64K of memory was treated as nirvana),

- after 20-years of being the only major open source compiler tool-chain, gcc got some serious competition. I originally predicted that llvm would disappear, failing to recognize that Apple were supporting it for licensing reasons,

- the death throes of Moore’s law went from subtle to, isn’t it dead yet?

I probably missed several major events hiding in plain sight, either because I am too close to them or blinkered.

What did not happen in the last 10 years?

- No major new languages. These require major new hardware ecosystems; in the smartphone market Android used Java and iOS made use of existing languages. There were the usual selection of fashion/vanity driven wannabes, e.g., Julia, Rust, and Go. The R language started to get noticed, but it has been around since 1995, and Python looks set to eventually kill it off,

- no accident killing 100+ people has been attributed to faults in software. Until this happens, software engineering has a dead bodies problem,

- the creation of new software did not slow down from its break-neck speed,

- in the first few years of this blog I used to make yearly predictions, which did not happen (most of the time).

Now I can relax for 9.5 years, before scurrying to complete 1,040 posts, i.e., the rate of posting will now resume its previous, more sedate, pace.

The first commercially available (claimed) verified compiler

Yesterday, I read a paper containing a new claim by some of those involved with CompCert (yes, they of soap powder advertising fame): “CompCert is the first commercially available optimizing compiler that is formally verified, using machine assisted mathematical proofs, to be exempt from miscompilation”.

First commercially available; really? Surely there are earlier claims of verified compilers being commercial availability. Note, I’m saying claims; bits of the CompCert compiler have involved mathematical proofs (i.e., code generation), so I’m considering earlier claims having at least the level of intellectual honesty used in some CompCert papers (a very low bar).

What does commercially available mean? The CompCert system is open source (but is not free software), so I guess it’s commercially available via free downloading licensing from AbsInt (the paper does not define the term).

Computational Logic, Inc is the name that springs to mind, when thinking of commercial and formal verification. They were active from 1983 to 1997, and published some very interesting technical reports about their work (sadly there are gaps in the archive). One project was A Mechanically Verified Code Generator (in 1989) and their Gypsy system (a Pascal-like language+IDE) provided an environment for doing proofs of programs (I cannot find any reports online). Piton was a high-level assembler and there was a mechanically verified implementation (in 1988).

There is the Danish work on the formal specification of the code generators for their Ada compiler (while there was a formal specification of the Ada semantics in VDM, code generators tend to be much simpler beasts, i.e., a lot less work is needed in formal verification). The paper I have is: “Retargeting and rehosting the DDC Ada compiler system: A case study – the Honeywell DPS 6” by Clemmensen, from 1986 (cannot find an online copy). This Ada compiler was used by various hardware manufacturers, so it was definitely commercially available for (lots of) money.

Are then there any earlier verified compilers with a commercial connection? There is A PRACTICAL FORMAL SEMANTIC DEFINITION AND VERIFICATION SYSTEM FOR TYPED LISP, from 1976, which has “… has proved a number of interesting, non-trivial theorems including the total correctness of an algorithm which sorts by successive merging, the total correctness of the McCarthy-Painter compiler for expressions, …” (which sounds like a code generator, or part of one, to me).

Francis Morris’s thesis, from 1972, proves the correctness of compilers for three languages (each language contained a single feature) and discusses how these features may be combined into a more “realistic” language. No mention of commercial availability, but I cannot see the demand being that great.

The definition of PL/1 was written in VDM, a formal language. PL/1 is a huge language and there were lots of subsets. Were there any claims of formal verification of a subset compiler for PL/1? I have had little contact with the PL/1 world, so am not in a good position to know. Anybody?

Over to you dear reader. Are there any earlier claims of verified compilers and commercial availability?

Instructions that cpus don’t need to support

What instructions can computers do without (an earlier post covered instructions they should support)?

The R in RISC was supposed to stand for Reduced, but in practice almost all the instructions you would expect were supported. What was missing were the really complicated instructions that machines of the time (last 1980s), like the VAX, supported (analysis of instruction set usage showed that these complicated instructions were rarely used; from the compiler perspective the combination sequence of operations supported by these instructions rarely occurred in code).

One instruction that was often missing from the early RISC processors was integer multiply. Compilers were expected to generate a series of instructions that had the same effect. Some of the omitted ‘basic’ instructions got added to later versions of the processors that survived commercially (e.g., SPARC).

The status register is still a common omission from RISC designs (at least for the integer operations). Where is the data showing that in the grand scheme of things (i.e., processor performance running real programs), status registers slow things down? I know that hardware designers don’t like them because they introduce bottlenecks. I don’t recall ever having seen an analysis of instruction set usage targeted at the impact of status registers on generated code. Pointers welcome.

These days, nobody seems to analyze instruction set usage like they did in times past. Perhaps Intel’s marketing and the demise of almost every cpu vendor has dampened enthusiasm for researching new cpu designs. These days most new cpu designs seem to be fashion driven, rather than data driven.

Do computers need registers? An issue that once attracted lots of research was the optimal number of registers for a processor. The minimum number of registers (or temporary storage locations) needed to evaluate an expression was known by 1970. There were various studies of the impact, on code generation, of increasing/decreasing the number of registers available to the compiler. But these studies were done using 1990s era compilers and modern compilers do many more optimizations; whole program optimization ought to be able to make use of many more registers than are probably available on today’s processors (at least I think so, until somebody does a study that shows otherwise). There is a register-less processor that is supposed to be taking the world by storm, sometime soon.

Do computers need to support the IEEE floating-point representation? Logarithmic number systems are starting to be used in various devices, but accuracy remains an issue for some applications.

Recent Comments