Archive

Build an ISO Standard and the world will beat a path to your door

An email I received today, announcing the release of version 1.0 of the GNU Modula-2 compiler, reminded me of some plans I had to write something about a proposal to add some new definitions to the next version of the ISO C Standard.

In the 80s I was heavily involved in the Pascal community and some of the leading members of this community thought that the successor language designed by Niklaus Wirth, Modula-2, ought to be the next big language. Unfortunately for them this view was not widely shared and after much soul searching it was decided that the lack of an ISO standard for the language was responsible for holding back widespread adoption. A Modula-2 ISO Standard was produced and, as they say, the rest is history.

The C proposal involves dividing the existing definition of undefined behavior into two subcategories: bounded undefined behavior and critical undefined behavior. The intent is to provide guidance to people involved with software assurance. My long-standing involvement with C means that I find the technical discussions interesting; I have to snap myself out of getting too involved in them with the observation that, should the proposals be included in the revised C Standard, they will probably have the same impact as the publication of the ISO Standard had on Modula-2 usage.

The only way for changes to an existing language standard to have any impact on developer usage is for them to require changes to existing compiler behavior or to specify additional runtime library functionality (e.g., Extensions to the C Library Part I: Bounds-checking interfaces).

What language was an executable originally written in?

Apple have recently added an unusual requirement to the iPhone developer agreement: “Applications must be originally written in Objective-C, C, C++, or JavaScript …”. As has been pointed out elsewhere, the real purpose is to stop third parties from acquiring any control over application development on Apple’s products; the banning of other languages is presumably regarded as acceptable collateral damage.

Is it possible to tell by analyzing an executable what language it was originally written in?

There are two ways in which executables contain source language ‘signatures’. Detecting these signatures requires knowledge of specific compiler behavior, i.e., a database of information about the behavior of compilers capable of creating the executables is needed.

Runtime library. Most compilers make use of a language specific runtime library, rather than generating inline code for some kinds of functionality. For instance, setjmp/longjmp in C and vtables in C++.

The presence of a known C runtime library does not guarantee that the application was originally written in C; it could have been written in Java and converted to C source.

The absence of a known C runtime library could mean that the source was compiled by a C compiler using a runtime system unknown to the analyzer.

The presence of, for instance, a known Java runtime library would suggest that the original source contained some Java. This kind of analysis would obviously require that the runtime library database not restrict itself to the ‘C’ languages.
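A minimal sketch of the runtime library check described above: look up each symbol an executable imports in a table of known runtime symbol prefixes. The table here is a tiny illustrative sample (the struct, the table and the function name `language_hint` are hypothetical, not taken from any existing tool), and platform-specific name decoration such as a leading underscore is ignored.

```c
#include <stddef.h>
#include <string.h>

/* Illustrative signature table: a few well-known runtime symbol prefixes
 * and the language whose runtime usually provides them.  A real analyzer
 * would need a much larger, per-compiler database. */
struct signature {
    const char *prefix;    /* leading characters of an imported symbol */
    const char *language;  /* language suggested by that symbol */
};

static const struct signature known_signatures[] = {
    { "__cxa_",     "C++" },          /* C++ ABI support, e.g. __cxa_throw */
    { "__gxx_",     "C++" },          /* e.g. __gxx_personality_v0 */
    { "objc_",      "Objective-C" },  /* e.g. objc_msgSend */
    { "_gfortran_", "Fortran" },      /* GNU Fortran runtime library */
    { "__gnat_",    "Ada" },          /* GNAT runtime library */
};

/* Return the language suggested by one symbol name, or NULL when the
 * symbol matches nothing in the table. */
const char *language_hint(const char *symbol)
{
    size_t n = sizeof known_signatures / sizeof known_signatures[0];

    for (size_t i = 0; i < n; i++) {
        size_t len = strlen(known_signatures[i].prefix);
        if (strncmp(symbol, known_signatures[i].prefix, len) == 0)
            return known_signatures[i].language;
    }
    return NULL;
}
```

A hit is only a hint: as noted above, a C runtime symbol says nothing about whether the C was itself generated from some other language, and a miss may simply mean the table is incomplete.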

Compiler behavior patterns. There is usually more than one way in which a source language construct can be translated to machine code and a compiler has to pick one of them. The perfect optimizing compiler would always make the optimal choice, but real compilers follow a fixed pattern of code generation for at least some language constructs (e.g., initialization of registers on function entry).

The presence of known code patterns in an executable is evidence that a particular compiler has been used; how much depends on the likelihood it could have been generated by other means and how many other patterns suggest the same compiler. In the case of the GNU Compiler Collection the source language might also be Fortran, Java or Ada. I don’t know enough about the behavior of GCC to provide an informed estimate of whether it is possible to recognize the source language from the translated form of constructs shared by several languages, but I suspect not.

The fact that an executable can be decompiled to C is not a guarantee that it was originally written in C.

Some languages support source language constructs whose corresponding machine code is unlikely to ever be generated by source from another language. The Fortran computed goto allows constructs to be written that have no equivalent in the other languages supported by GCC (none of them allow statement labels appearing in a multi-way jump to appear before the jump test):

    10    I=I+1
    20    J=J+1
          goto (10, 20, 30, 40) J
    30    I=I+3
    40    I=I*2

The presence of a compiled form of this kind of construct in the executable would be very suggestive of Fortran source.

Apple are famous for their paranoia and control freakery. It will be very interesting to see what level of compliance checking they decide to perform on executables submitted to the App Store.

On another note: What does “originally written” mean? For instance, many of the mathematical functions (e.g., sine, log, gamma, etc) contained in R were originally written in Fortran and translated to C for use in R; this C source is what is now maintained. Does this historical implementation decision mean that R cannot be legally ported to the iPhone?

Dimensional analysis of source code

The idea of restricting the operations that can be performed on a variable based on attributes appearing in its declaration is actually hundreds of years old and is more widely known as dimensional analysis. Readers are probably familiar with the concept of type checking where, for instance, a value having a floating-point type is not allowed to be added to a value having a pointer type. Unfortunately, many of those computer languages that support the functionality I am talking about (e.g., Ada) also refer to it as type checking and differentiate it from the more common usage by calling it strong typing. The concept would be much easier for people to understand if a different term were used, e.g., unit checking or even dimension checking.

Dimensional analysis, as used in engineering and the physical sciences, relies on the fact that quantities are often expressed in terms of a small number of basic attributes, e.g., mass, length and time; velocity is calculated by dividing a length by a time, $L/T$, and area is calculated by multiplying two lengths, $L \times L$. Adding a length quantity to a velocity has no physical meaning and suggests that something is wrong with the calculation, while dividing velocity by time, $L/T^2$, can be interpreted as acceleration. Dividing two quantities that have the same units results in what is known as a dimensionless number.

Dimensional analysis can be used to check a calculation involving physical quantities for internal consistency and as a method for trying to deduce the combinations of quantities that an unknown equation might contain based on the physical units the result is known to be represented in.

The frink language has units of measure checking built into it.

How might dimensional analysis be used to check source code for internal consistency? Consider the following code:

    x = a / b;
    c = a;
    y = c / b;
    if (x + y ...
    ...
    z = x + b;

c is assigned a’s value and is therefore assumed to have the same units of measurement. The value assigned to y is calculated by dividing c by b, and the train of reasoning leading to the assumption that it has the same units of measurement as x is easy to follow. Based on this analysis, there is nothing suspicious about adding x and y, but adding x and b looks wrong (it would be perfectly ok if all of the variables in this code were dimensionless).
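The reasoning above can be sketched in C by representing a dimension as a vector of exponents over the base quantities. This is only an illustration: the type and function names are hypothetical, and it assumes the units of a and b are known, whereas the tools discussed here try to infer consistency without that information.

```c
#include <stdbool.h>

/* A dimension is a vector of exponents over the base quantities:
 * velocity is length^1 * time^-1, acceleration is length^1 * time^-2. */
struct dimension {
    int mass;
    int length;
    int time;
};

/* Multiplying two quantities adds their exponents. */
struct dimension dim_mul(struct dimension p, struct dimension q)
{
    struct dimension r = { p.mass + q.mass, p.length + q.length, p.time + q.time };
    return r;
}

/* Dividing two quantities subtracts their exponents. */
struct dimension dim_div(struct dimension p, struct dimension q)
{
    struct dimension r = { p.mass - q.mass, p.length - q.length, p.time - q.time };
    return r;
}

/* Addition, subtraction and comparison only make sense between
 * quantities whose exponents all agree. */
bool dim_same(struct dimension p, struct dimension q)
{
    return p.mass == q.mass && p.length == q.length && p.time == q.time;
}
```

If a is a length, `{0, 1, 0}`, and b is a time, `{0, 0, 1}`, then x and y both come out as `dim_div(a, b)`, so `dim_same(x, y)` holds and `x + y` is accepted, while `dim_same(x, b)` fails and `x + b` would be flagged.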

A number of tools have been written to check source code expressions for internal consistency, e.g., Fortran (Automated computation and consistency checking of physical dimensions and units in scientific programs), C++ (Applied Template Metaprogramming in SI units) and C (Annotation-less Unit Type Inference for C), but so far only one PhD.

Providing a mechanism for developers to add unit information to variable declarations would enable compilers to perform consistency checks and reduce the likelihood of false positives being reported (because dimensionless values can generally be combined in any way). It is too late in the day for such a major feature to be added to the next revision of the C++ standard; the C standard is also being revised, but the committee is currently being very conservative and insists that any proposed new constructs already be implemented in at least one compiler.

Assuming compilers are clever enough (part 1)

Developers often assume the compiler they use will do all sorts of fancy stuff for them. Is this because they are lazy and happy to push responsibility for parts of the code they write on to the compiler, or do they actually believe that their compiler does all the clever stuff they assume?

An example of unmet assumptions about compiler performance is the use of const in C/C++, final in Java or readonly in other languages. These are often viewed as a checking mechanism, i.e., the developer wants the compiler to check that no attempt is made to, accidentally, change the value of some variable, perhaps via code added during maintenance.

The surprising thing about variables in source code is that approximately 50% of them don’t change once they have been assigned a value (A Theory of Type Qualifiers for C measurements and Automatic Inference of Stationary Fields for Java).

Developers don’t use const/final qualifiers nearly as often as they could. Most modern compilers can deduce if a locally defined variable is only assigned a value once and make use of this fact during optimization. It takes a lot more resources to deduce this information for non-local variables; developers want their compiler to be fast and so implementors don’t want them waiting around while whole program analysis is performed.
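As a small illustration of const used as a checking mechanism, here is a hypothetical function (the name `sum_values` is not from any library) in which the qualifiers ask the compiler to reject accidental writes. The two commented-out lines show the kind of maintenance slip that would be caught at compile time.

```c
#include <stddef.h>

/* Sum an array that the function promises not to modify. */
long sum_values(const int *values, size_t count)
{
    const size_t limit = count;  /* assigned once; later writes are rejected */
    long total = 0;              /* genuinely changes, so not const */

    for (size_t i = 0; i < limit; i++)
        total += values[i];

    /* values[0] = 0;   rejected: write through pointer to const */
    /* limit = 0;       rejected: assignment to a const object   */
    return total;
}
```

A compiler can usually work out for itself that `limit` never changes, since it is local; the qualifier on the `values` parameter carries information that is much more expensive to deduce, because it involves every caller.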

Why don’t developers make more use of const/final qualifiers? Is this usage, or lack of it, an indicator that developers don’t have an accurate grasp of variable usage, that they don’t see the benefit of using these qualifiers, or perhaps that they pass responsibility on to the compiler? (Program size seems to grow sufficiently fast that whole program optimization often consumes more memory than is likely to be available; and when are motherboards going to break out of the 4G limit?)

A fault in the C Standard or existing compilers?

Software is not the only entity that can contain faults. The requirements listed in a specification are usually considered to be correct, almost by definition. Of course the users of software implementing a specification may be unhappy with the behavior specified and wish that some alternative behavior occurred. A cut and dried fault occurs when two requirements conflict with each other.

The C Standard can be read as a specification for how C compilers should behave. Despite over 80 man years of effort and the continued scrutiny of developers over 20 years, faults continue to be uncovered. The latest potential fault (it is possible that the fault actually occurs in many existing compilers rather than the C Standard) was brought to my attention by Al Viro, one of the Sparse developers.

The issue involved the following code (which I believe the standard considers to be strictly conforming, but all the compilers I have tried disagree):

    int (*f(int x))[sizeof x];  // A prototype declaration

    int (*g(int y))[sizeof y]   // A function definition
    {
    return 0;
    }

These function declarations are unusual in that their return type is a pointer to an array of integers, a type rarely encountered in this context (the original question involved a return type of pointer to function returning … and was more complicated).

The specific issue was the scope of the parameter (i.e., x and y): is the declaration still in scope at the point that the second occurrence of the identifier is encountered?

As a principle I think that the behavior, whatever it turns out to be, should be the same in both cases (neither the C Standard nor its rationale states such a principle).

Taking the function prototype case first:

The scope of the parameter x “… terminates at the end of the function declarator.” (sentence 409).

and does function prototype scope include the return type (the syntax calls the particular construct a declarator and there are at least two of them, one nested inside the other, in a function prototype declaration)?

Sentence 1592 says Yes, but sentence 279 and 1845 say No.

None of these references are normative references (standardese for definitive).

Moving on to the function definition case:

Where does the scope of the parameter y begin (sentence 418)?

“… scope that begins just after the completion of its declarator.”

and where does the scope end (sentence 408)?

“… which terminates at the end of the associated block.”

and what happens between the beginning and ending of the scope (sentence 412)?

“Within the inner scope, the identifier designates the entity declared in the inner scope;”

This looks very straightforward: there are no ‘gaps’ in the scope of the parameter definition appearing in a function definition. Consistency with the corresponding function prototype case requires that function declarator be interpreted to include the return type.

There is a related discussion involving a previous Defect Report 345 submitted a while ago.

The problem is that many existing compilers do not treat parameter scope in this way. They operate as-if there was a ‘gap’ in the parameter scope of a function definition (probably because the code implementing this functionality is shared with that implementing function prototypes, which have been interpreted to not include the return type).

What happens next? Probably lots of discussion on the C Standard email reflector. Possible outcomes include somebody finding wording that requires a ‘gap’ in the scope of parameters in function definitions, it being agreed that such a gap ought to be specified by the standard (because this is how existing code behaves because this is how compilers operate), or it being decided that the standard is correct as is and any compiler that behaves differently needs to be fixed.

The probability of encountering a given variable

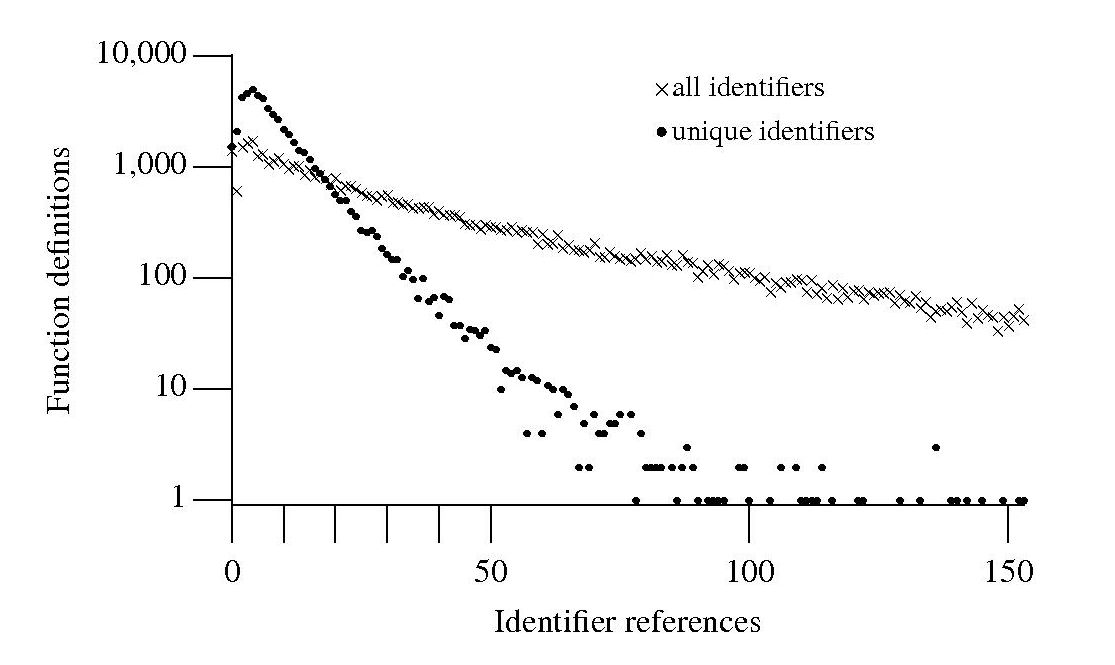

If I am reading through the body of a function, what is the probability of a particular variable being the next one I encounter? A good approximation can be calculated as follows: Count the number of occurrences of all variables in the function definition up to the current point and work out the percentage occurrence for each of them, the probability of a particular variable being seen next is approximately equal to its previously seen percentage. The following graph is the evidence I give for this approximation.

The graph shows a count of the number of C function definitions containing identifiers that are referenced a given number of times, e.g., if the identifier x is referenced five times in one function definition and ten times in another the function definition counts for five and ten are both incremented by one. That one axis is logarithmic and the bullets and crosses form almost straight lines hints that a Zipf-like distribution is involved.
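The approximation described at the start of this post (occurrences of one variable so far divided by occurrences of all variables so far) is simple enough to sketch directly. The function name and the representation of the function body as an array of already-seen identifier names are assumptions made for this illustration.

```c
#include <stddef.h>
#include <string.h>

/* Estimate the probability that `name` is the next variable encountered,
 * using only the variables already seen in the function body:
 * occurrences of `name` so far divided by occurrences of all variables.
 * Returns 0.0 when nothing has been seen yet. */
double next_variable_estimate(const char *const seen[], size_t seen_count,
                              const char *name)
{
    size_t matches = 0;

    if (seen_count == 0)
        return 0.0;
    for (size_t i = 0; i < seen_count; i++)
        if (strcmp(seen[i], name) == 0)
            matches++;
    return (double)matches / (double)seen_count;
}
```

After reading `i`, `total`, `i`, `values`, the estimate for `i` is 2/4 and for `total` is 1/4. A variable that has not yet appeared gets an estimate of zero, which is where this sketch is too simple: some probability has to be reserved for a new variable appearing.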

There are many processes that will generate a Zipf distribution, but the one that interests me here is the process where the probability of the next occurrence of an event occurring is proportional to the probability of it having previously occurred (this includes some probability of a new event occurring; follow the link to Simon’s 1955 paper).

One can think of the value (i.e., information) held in a variable as having a given importance and it is to be expected that more important information is more likely to be operated on than less important information. This model appeals to me. Another process that will generate this distribution is that of Monkeys typing away on keyboards and while I think source code contains lots of random elements I don’t think it is that random.

The important concept here is operated on. In x := x + 1; variable x is incremented and the language used requires (or allows) that the identifier x occur twice. In C this operation would only require one occurrence of x when expressed using the common idiom x++;. The number of occurrences of a variable needed to perform an operation on it, in a given language, will influence the shape of the graph based on an occurrence count.

One graph does not provide conclusive evidence, but other measurements also produce straightish lines. The fact that the first few entries do not form part of an upward trend is not a problem; these variables are only accessed a few times and so might be expected to have a large deviation.

More sophisticated measurements are needed to count operations on a variable, as opposed to occurrences of it. For instance, few languages (any?) contain an indirection assignment operator (e.g., writing x ->= next; instead of x = x -> next;) and this would need to be adjusted for in a more sophisticated counting algorithm. It will also be necessary to separate out the effects of global variables, function calls and the multiple components involved in a member selection, etc.

Update: A more detailed analysis is now available.

Semantic pattern matching (Coccinelle)

I have just discovered Coccinelle, a tool that claims to fill a remarkably narrow niche (providing semantic patch functionality; I have no idea how the name is pronounced) but appears to have a lot of other uses. The functionality required of a semantic patch is the ability to write source code patterns and a set of transformation rules that convert the input source into the desired output. What is so interesting about Coccinelle is its pattern matching ability and the ability to output what appears to be unpreprocessed source (it has to be told the usual compile time stuff about include directory paths and macros defined via the command line; it would be unfair of me to complain that it needs to build a symbol table).

Creating a pattern requires defining identifiers to have various properties (e.g., an expression in the following example) followed by various snippets of code that specify the pattern to match. In the following, <... ...> represents a bracketed (in the C compound statement sense) don’t care sequence of code, and the lines starting with +/- have the usual patch meaning (i.e., add/delete line). The tool builds an abstract syntax tree, so urb is treated as a complete expression that needs to be mapped over to the added line.

    @@
    expression lock, flags;
    expression urb;
    @@

    spin_lock_irqsave(lock, flags);
    <...
    - usb_submit_urb(urb)
    + usb_submit_urb(urb, GFP_ATOMIC)
    ...>
    spin_unlock_irqrestore(lock, flags);

Coccinelle comes with a bunch of predefined equivalence relations (they are called isomorphisms) so that constructs such as if (x), if (x != NULL) and if (NULL != x) are known to be equivalent, which reduces the combinatorial explosion that often occurs when writing patterns that can handle real-world code.
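To make the point concrete, here are the three spellings of a null test mentioned above written as plain C functions (the function names are made up for this illustration). A pattern written against one form would miss the other two without an equivalence rule, even though all three behave identically.

```c
#include <stddef.h>

/* Three spellings of the same test; each returns 1 when x is non-null. */
int test_implicit(const int *x) { if (x)         return 1; return 0; }
int test_ne_null(const int *x)  { if (x != NULL) return 1; return 0; }
int test_null_ne(const int *x)  { if (NULL != x) return 1; return 0; }
```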

It is written in OCaml (I guess there had to be some fly in the ointment) and so presumably borrows a lot from CIL; perhaps in this case a version number of 0.1.3 is not as bad as it might sound.

My main interest is in counting occurrences of various kinds of patterns in source code. A short-term hack is to map the sought-for pattern to some unique character sequence and pipe the output through grep and wc. There does not seem to be any option to output a count of the matched patterns … yet 🙂

What I changed my mind about in 2008

A few years ago The Edge asked people to write about what important issue(s) they had recently changed their mind about. This is an interesting question and something people ought to ask themselves every now and again. So what did I change my mind about in 2008?

1. Formal verification of nontrivial C programs is a very long way off. A whole host of interesting projects (e.g., Caduceus, CompCert and Frama-C) going on in France have finally convinced me that things are a lot closer than I once thought. This does not mean that I think developers/managers will be willing to use them, only that they exist.

2. Automatically extracting useful information from source code identifier names is still a long way off. Yes, I am a great believer in the significance of information contained in identifier names. Perhaps because I have studied the issues in detail I know too much about the problems and have been put off attacking them. A number of researchers (e.g., Emily Hill, David Shepherd, Adrian Marcus, Lin Tan and a previously blogged about project) have simply gone ahead and managed to extract (with varying amounts of human intervention) surprising amounts of useful information from identifier names.

3. Theoretical analysis of non-trivial floating-point oriented programs is still a long way off. Daumas and Lester used the Doob-Kolmogorov Inequality (I had to look it up) to deduce the probability that the rounding error in some number of floating-point operations, within a program, will exceed some bound. They also integrated the ideas into NASA’s PVS system.

You can probably spot the pattern here: I thought something would not happen for years and somebody went off and did it (or at least made an impressive first step along the road). Perhaps 2008 was not a good year for really major changes of mind, or perhaps an earlier in the year change of mind has so ingrained itself in my mind that I can no longer recall thinking otherwise.

Parsing without a symbol table

When processing C/C++ source for the first time through a compiler or static analysis tool there are invariably errors caused by missing header files (often because the search path has not been set) or incorrectly defined, or not defined, macro names. One solution to this configuration problem is to be able to process source without handling preprocessing directives (e.g., skipping them, such as not reading the contents of header files or working out which arm of a conditional directive is applicable). Developers can do it, why not machines?

A few years ago GLR support was added to Bison, enabling it to process ambiguous grammars, and I decided to create a C parser that simply skipped all preprocessing directives. I knew that at least one reasonably common usage would generate a syntax error:

    func_call(a,
    #if SOME_FLAG
              b_1);
    #else
              b_2);
    #endif

c);

and wanted to minimize its consequences (i.e., cascading syntax errors to the end of the file). The solution chosen was to parse the source a single statement or declaration at a time, so any syntax error would be localized to a single statement or declaration.
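A rough sketch of the "skip every preprocessing directive" step, to show why the usage above turns into a syntax error. The function name is hypothetical, and this is not the code used in my parser; it ignores comments and string literals and simply drops lines.

```c
#include <string.h>

/* Copy `src` to `dst`, dropping every line whose first non-blank character
 * is '#'.  A directive that ends in a backslash also drops the line that
 * continues it.  `dst` must have room for strlen(src) + 1 characters. */
void skip_directives(const char *src, char *dst)
{
    int continued = 0;  /* previous dropped line ended in a backslash */

    while (*src != '\0') {
        const char *line = src;
        const char *end = src;
        const char *first = src;
        int drop;

        while (*end != '\0' && *end != '\n')
            end++;
        while (first < end && (*first == ' ' || *first == '\t'))
            first++;

        drop = continued || (first < end && *first == '#');
        continued = drop && end > line && end[-1] == '\\';

        if (*end == '\n')
            end++;                      /* keep the newline with its line */
        if (!drop) {
            size_t len = (size_t)(end - line);
            memcpy(dst, line, len);
            dst += len;
        }
        src = end;
    }
    *dst = '\0';
}
```

Applied to the conditional call above, what remains is `func_call(a,` followed by `b_1);` and then `b_2);`, i.e., both arms of the conditional are kept. The first statement parses, the stray `b_2);` does not, and parsing one statement at a time keeps the damage to that one fragment.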

Systems for parsing ambiguous grammars work on the basis that while the input may be locally ambiguous, once enough tokens have been seen the number of possible parses will be reduced to one. In C (and even more so in C++) there are some situations where it is impossible to resolve which of several possible parses applies without declaration information on one or more of the identifiers involved (a traditional parser would maintain a symbol table where this information could be obtained when needed). For instance, x * y; could be a declaration of the identifier y to have type pointer to x or an expression statement that multiplies x and y. My parser did not have a symbol table and even if it did the lack of header file processing meant that its contents would only contain a partial set of the declared identifiers. The ambiguity resolution strategy I adopted was to pick the most likely case, which in the example is the declaration parse.
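Both readings of x * y; are legal C, which is easy to demonstrate by putting the same token sequence in two functions that differ only in what x has been declared to be. The function names are invented for this example, and a compiler may warn that the second occurrence is a statement with no effect.

```c
/* Here x names a type, so "x * y;" declares y as a pointer to int. */
int as_declaration(void)
{
    typedef int x;
    int target = 7;
    x * y;          /* declaration: y has type int * */
    y = &target;
    return *y;
}

/* Here x names an object, so "x * y;" is an expression statement
 * whose value is computed and then discarded. */
int as_expression(void)
{
    int x = 6, y = 7;
    x * y;          /* expression: multiply, result unused */
    return x * y;
}
```

Without the typedef or the object declaration in view, which is the situation when header files are not read, nothing in the tokens themselves says which function a given x * y; belongs in.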

Other constructs where the common case (chosen by me and I have yet to get around to actually verifying via measurement) was used to resolve an ambiguity deadlock included:

    f(p);           // Very common,
                    // confidently picked function call as the common case
    (m)*p;          // Not rare,
                    // confidently picked multiplication as the common case
    (s) - t;        // Quite rare,
                    // picked binary operator as the common case
    (r) + (s) - t;  // Very rare,
                    // an iteration on the case above

At the moment I am using the parser to measure language usage, so less than 100% correctness can be tolerated. Some of the constructs that cause a syntax error to be generated every few hundred statement/declarations include:

    offsetof(struct tag, field_name) // Declarators cannot be
                                     // function arguments

    int f(p, q)
    int p;    // Tries to reduce this as a declaration without handling
    char q;   // it as part of an old style function definition
    {

    MACRO(+); // Preprocessing expands to something meaningful

Some of these can be handled by extensions to the grammar, while others could be handled by an error recovery mechanism that recognized likely macro usage and inserted something appropriate (e.g., a dummy expression in the MACRO(x) case).
