Archive

Ruby becoming an ISO Standard

The Ruby language is going through the process of becoming an ISO Standard (it has been assigned the document number ISO/IEC 30170).

There are two ways of creating an ISO Standard: a document that has been accepted by another standards body can be fast tracked to be accepted as-is by ISO, or a committee can be set up to write the document. In the case of Ruby a standard was created under the auspices of JISC (Japanese Industrial Standards Committee) and this has now been submitted to ISO for fast tracking.

The fast track process involves balloting the 18 P-members of SC22 (the ISO committee responsible for programming languages), asking for a YES/NO/ABSTAIN vote on the submitted document becoming an ISO Standard. NO votes have to be accompanied by a list of things that need to be addressed for the vote to change to YES.

In most cases the fast tracking of a document goes through unnoticed (Microsoft’s Office Open XML being a recent high profile exception). The more conscientious P-members attempt to locate national experts who can provide worthwhile advice on the country’s response, while the others usually vote YES out of politeness.

Once an ISO Standard is published future revisions are supposed to be created using the ISO process (i.e., a committee attended by interested parties from P-member countries proposes changes, discusses and when necessary votes on them). When the C# ECMA Standard was fast tracked through ISO in 2005 Microsoft did not start working with an ISO committee but fast tracked a revised C# ECMA Standard through ISO; the UK spotted this behavior and flagged it. We will have to wait and see where work on any future revisions takes place.

Why would any group want to make the effort to create an ISO Standard? The Ruby language designers' reasons appear to be “improve the compatibility between different Ruby implementations” (experience shows that compatibility is driven by customer demand, not ISO Standards) and government procurement in Japan (skip to last comment).

Prior to the formal standards work the Rubyspec project aimed to create an executable specification. As far as I can see this is akin to some of the tools I wrote about a few months ago.

IST/5, the committee at British Standards responsible for language standards, is looking for UK people (people in other countries have to contact their national standards body) interested in getting involved with the Ruby ISO Standard work. I am a member of IST/5 and if you email me I will pass your contact details along to the chairman.

Quality of data analysis: two recent papers

Software engineering research has suffered, and continues to suffer, from very low quality data analysis. The underlying problem is that practitioners are happy to go along with the status quo, not bothering to learn basic statistics or criticize data analysis in papers they are asked to review. Two recent papers I have read stand out as being at opposite ends of the spectrum.

In their paper A replicated survey of IT software project failures Khaled El Emam and A. Günes Koru don’t just list the mean values for the responses they get; they also give the 95% confidence bounds on those values. At a superficial level this has the effect of making their results look much less interesting. For instance, a quick glance at Table 3 “Reasons for project cancellation” suggests there is a significant difference between “Lack of necessary technical skills” at 22% and “Over schedule” at 17%, but a look at the 95% confidence bounds, (6%–48%) and (4%–41%) respectively, shows that almost nothing can be said about the relative contribution of these two reasons (why publish these numbers? Because nothing else has been published and somebody has to start somewhere). The authors understand the consequences of using a small sample size and have the integrity to list the confidence bounds rather than leave the reader to draw completely unjustified conclusions. I wish everybody was as careful and upfront about their analysis as these authors.
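To see why a small sample produces such wide bounds, here is a minimal sketch that computes a 95% Wilson score interval for a proportion. The sample sizes are hypothetical and the Wilson method is my choice for illustration (the paper's sample sizes and interval method are not quoted above), so the numbers will not reproduce Table 3; the point is only how the interval narrows as the sample grows.

```python
import math

def wilson_interval(successes, n, z=1.96):
    """Return (low, high) Wilson score bounds for a proportion (z=1.96 gives ~95%)."""
    p = successes / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return centre - half, centre + half

# Hypothetical sample sizes, each with roughly 22% of respondents giving a reason.
for n in (18, 180, 1800):
    successes = round(0.22 * n)
    low, high = wilson_interval(successes, n)
    print(f"n={n:5d}  observed={successes / n:.2f}  95% bounds=({low:.2f}, {high:.2f})")
```

With only a handful of responses the bounds span a large part of the 0–100% range, which is why two nearby percentages cannot be told apart.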

The paper Assessing Programming Language Impact on Development and Maintenance: A Study on C and C++ by Pamela Bhattacharya and Iulian Neamtiu takes some interesting ideas and measurements and completely mangles the statistical analysis (something the conference’s reviewers should have picked up on).

I encourage everybody to measure code and do statistical analysis. It looks like what happened here is that a PhD student got in over her head and made lots of mistakes, something that happens to us all when learning a new subject. The problem is that these mistakes made it through into a published paper and its conclusions are likely to be repeated (these conclusions may or may not be true and it may or may not be possible to reliably test them from the data gathered, but the analysis presented in the paper is faulty and so its conclusions cannot be trusted). I hope the authors will reanalyze their data using the appropriate techniques and publish an updated version of the paper.

Some of the hypotheses being tested include:

- C++ is replacing C as a main development language. The actual hypothesis tested is the more interesting question: “Is the percentage of C++ in projects that also contain substantial amounts of C growing at the expense of C?”

So the unit of measurement is the project and only four of these are included in the study; an extremely small sample size that must have an error bound of around 50% (no mention of error bounds in the paper). The analysis of the data claims to use linear regression but seems completely confused; let's not get bogged down in the details but move on to other more obvious mistakes.

- C++ code is of higher internal quality than C code. The data consists of various source code metrics; ignoring whether these are a meaningful measure of quality, let's look at how the numbers are analysed. I was somewhat surprised to read: “the distributions of complexity values … are skewed, thus arithmetic mean is not the right indicator of an ongoing trend. Therefore, …, we use the geometric mean …” While the arithmetic mean might not be a useful indicator (I have trouble seeing why not), use of the geometric mean is bizarre and completely wrong. Because of its multiplicative nature the geometric mean of a set of values having a fixed arithmetic mean decreases as its variance increases. For instance, the two sets of values (40, 60) and (20, 80) both have an arithmetic mean of 50, while their geometric means are 48.98979 (i.e., $\sqrt{40 \times 60}$) and 40 (i.e., $\sqrt{20 \times 80}$) respectively.

So if anything can be said about the bizarre idea of comparing the geometric mean of complexity metrics as they change over time, it is that increases/decreases are an indicator of decrease/increase in variance of the measurements.

- C++ code is less prone to bugs than C code. The statistical analysis here made a common novice mistake. The null hypothesis tested was: “C code has lower or equal defect density than C++ code.” and this was rejected. The incorrect conclusion drawn was that “C++ code is less prone to bugs than C code.” Statistically one does not follow from the other; the data could be inconclusive, and the researchers should have tested this question as the null hypothesis if this is the claim they wanted to make. There are also lots of question marks over other parts of the analysis, but this is the biggest blunder.
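The geometric mean point made in the list above is easy to check numerically. The following minimal sketch uses pairs of values that all have an arithmetic mean of 50; the pairs (50, 50) and (10, 90) are extra illustrative values, not taken from the paper.

```python
import math
import statistics

def geometric_mean(values):
    """Geometric mean computed as exp(mean(log(v)))."""
    return math.exp(sum(math.log(v) for v in values) / len(values))

# Every pair has arithmetic mean 50; only the spread differs.
for values in [(50, 50), (40, 60), (20, 80), (10, 90)]:
    print(values,
          "arithmetic:", statistics.mean(values),
          "geometric: %.5f" % geometric_mean(values),
          "variance:", statistics.pvariance(values))
```

As the variance grows the geometric mean falls, even though the arithmetic mean never moves.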

Fibonacci and JIT compilers

To maximize a compiler’s ability to generate high quality code many programming language standards do not specify the order in which the operands of a binary operator are evaluated. Most of the time the order of operand evaluation does not matter because all orders produce the same final result. For instance, in x + y the value of x may be obtained followed by obtaining the value of y and the two values added; alternatively the value of y may be obtained first followed by obtaining the value of x and the two values added. Optimizers make use of local context information (e.g., holding one of the values in a register so it is immediately available for use in the evaluation of multiple instances of x) to work out which order produces the highest quality code.

If one of the operands is a function call that modifies the value of the other operand the result may depend on the order of evaluation. For instance, in:

    int x;

    int set_x(void)
    {
    x=1;
    return 1;
    }

    int two_values(void)
    {
    x=0;
    return x + set_x();
    }

a left to right evaluation order of the return expression in two_values produces the value 1, while a right to left evaluation order produces the value 2. Every now and again developers accidentally write code that does something like this and are surprised to see program behavior change when they use different compiler options, a new version of the compiler or a different compiler (all things that can result in the order of evaluation changing for a given expression).
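The two outcomes can be modelled outside C. The sketch below is a Python model of two_values, not a translation a compiler would produce; the `order` argument is a hypothetical knob standing in for the compiler's choice of evaluation order.

```python
def two_values(order):
    """Model of the C function two_values for a given operand evaluation order."""
    state = {"x": 0}              # x=0;

    def set_x():
        state["x"] = 1
        return 1

    if order == "left_to_right":
        left = state["x"]         # read x first (still 0)
        right = set_x()
    else:                         # "right_to_left"
        right = set_x()           # call first, x becomes 1
        left = state["x"]
    return left + right

print(two_values("left_to_right"))   # 1
print(two_values("right_to_left"))   # 2
```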

It is generally assumed that two calls to two_values within the same program will always produce the same answer, i.e., the decision on which order of evaluation to use is made at compile time and never changes while a program is running. Neither the C++ nor the C Standard requires that the evaluation order be fixed prior to program execution, and use of a just-in-time compiler could result in the value returned by two_values alternating between 1 and 2. Some languages (e.g., Java) for which JIT compilers are expected to be used specify the exact order in which operands have to be evaluated.

One possible way of finding out whether a JIT compiler is being used for your C/C++ program is to test the values returned by two calls to two_values. A JIT compiler may, but is not required to, return different values on each call. Of course a decide-prior-to-execution C/C++ compiler does have the option of optimizing the following function to return true or false on the basis that the standard permits this behavior, but I would be very surprised to see this occur in practice:

    bool Schrödinger(void)
    {
    return two_values() == two_values();
    }

The runtime variability offered by JIT compilers makes it possible to write a program whose output can be any value between $1$ and $F_n$ (the $n$’th Fibonacci number, where $n$ is read from the input):

    #include <stdio.h>

    int x;

    int set_x(int ret_val)
    {
    x=1;
    return ret_val;
    }

    /* Returns 1 or 2, depending on whether x is read before or after the call. */
    int two_unspec(void)
    {
    x=0;
    return x + set_x(1);
    }

    /* Returns val or val-1, depending on the order of evaluation. */
    int add_zero(int val)
    {
    x=0;
    return val - x + set_x(0);
    }

    int fib(int fib_num)
    {
    if (fib_num > 3)
       return fib(fib_num-2) + add_zero(fib(fib_num-1));
    else
       if (fib_num == 3)
          return two_unspec();
       else
          return 1;
    }

    int main(void)
    {
    int n;

    scanf("%d", &n);
    printf("Fibonacci %d = %d\n", n, fib(n));
    }

The C-semantics tool will ‘execute’ this program and produce a list of the possible outputs (a PhD project under active development; the upper limit is currently around the 6th Fibonacci number which requires 11 hours and produces a 50G log file).

A decide-prior-to-execution compiler has a maximum choice of four possible outputs for a given input value and for a given executable the output produced by different input values will be perfectly correlated.
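The set of reachable outputs can be enumerated with a small model. This is a sketch of the program's value semantics only, written in Python under the assumption that two_unspec yields 1 or 2 and add_zero yields val or val-1; it is unrelated to how the C-semantics tool works. `possible_fib` lets every call choose its order freely (the JIT case), while `fixed_fib` fixes one choice per expression for the whole run (the decide-prior-to-execution case).

```python
from functools import lru_cache
from itertools import product

@lru_cache(maxsize=None)
def possible_fib(n):
    """Set of values fib(n) can return if every evaluation-order choice is free."""
    if n < 3:
        return frozenset({1})
    if n == 3:
        return frozenset({1, 2})           # two_unspec(): 0+1 or 1+1
    results = set()
    for a in possible_fib(n - 2):
        for b in possible_fib(n - 1):
            results.add(a + b)             # add_zero reads x before calling set_x(0)
            results.add(a + b - 1)         # add_zero calls set_x(0) before reading x
    return frozenset(results)

def fixed_fib(n, two_unspec_value, add_zero_offset):
    """fib(n) when each expression's evaluation order is fixed for the whole run."""
    if n < 3:
        return 1
    if n == 3:
        return two_unspec_value
    return (fixed_fib(n - 2, two_unspec_value, add_zero_offset)
            + fixed_fib(n - 1, two_unspec_value, add_zero_offset)
            + add_zero_offset)

n = 6
print("free choice on every call:", sorted(possible_fib(n)))
print("order fixed before execution:",
      sorted({fixed_fib(n, t, o) for t, o in product((1, 2), (0, -1))}))
```

For an input of 6 the first line lists every value from 1 to 8, while the second lists at most four values, one per combination of the two fixed choices.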

Fingerprinting the author of the ZeuS Botnet

The source code of the ZeuS Botnet is now available for download. I imagine there are a few organizations who would like to talk to the author(s) of this code.

All developers have coding habits, that is they usually have a particular way of writing each coding construct. Different developers have different sets of habits and sometimes individual developers have a way of writing some language construct that is rarely used by other developers. Are developer habits sufficiently unique that they can be used to identify individuals from their code? I don’t have enough data to answer that question. Reading through the C++ source of ZeuS I spotted a few unusual usage patterns (I don’t know enough about common usage patterns in PHP to say much about this source) which readers might like to look for in code they encounter, perhaps putting a name to the author of this code.

The source is written in C++ (32.5 KLOC of client source) and PHP (7.5 KLOC of server source) and is of high quality (the C++ code could do with more comments, say to the level given in the PHP code); many companies could increase the quality of their code by following the coding standard that this author seems to be following. The source is well laid out and there are plenty of meaningful variable names.

So what can we tell about the person(s) who wrote this code?

- There is one author; this is based on consistent usage patterns and nothing jumping out at me as being sufficiently different that it could be written by somebody else,

- The author is fluent in English; based on the fact that I did not spot any identifiers spelled using unusual word combinations that often occur when a developer has a poor grasp of English. Update 16-May: skier.su spotted four instances of the debug message “Request sended.” which suggests the author is not as fluent as I first thought.

- The usage that jumped out at me the most is:

    for(;; p++)if(*p == '\\' || *p == '/' || *p == 0) { ...

This is taking to an extreme the idea that if a ‘control header’ has a single statement associated with it, then they both appear on the same line; this usage commonly occurs with if-statements and this for/while-statement usage is very rare (this usage also occurs in the PHP code),

- The usage of `true`/`false` in conditionals is similar to that of newbie developers, for instance writing:

    return CWA(kernel32, RemoveDirectoryW)(path) == FALSE ? false : true;
    // and
    return CWA(shlwapi, PathCombineW)(dest, dir, p) == NULL ? false : true;
    // also
    return CWA(kernel32, DeleteFileW)(file) ? true : false;

in a function returning `bool` instead of:

    return CWA(kernel32, RemoveDirectoryW)(path);
    // and
    return CWA(shlwapi, PathCombineW)(dest, dir, p) != NULL;
    // and
    return CWA(kernel32, DeleteFileW)(file);

The author is not a newbie developer; perhaps sometime in the past they were badly bitten by a Microsoft C++ compiler bug, found that this usage worked around the problem and have used it ever since,

- The author vertically aligns the assignment operator in statement sequences but not in a sequence of definitions containing an initializer:

    // = not vertically aligned here
    DWORD itemMask = curItem->flags & ITEMF_IS_MASK;
    ITEM *cloneOfItem = curItem;
    // but is vertically aligned here:
    desiredAccess       |= GENERIC_WRITE;
    creationDisposition  = OPEN_ALWAYS;

Vertical alignment is not common and I would have said that alignment was more often seen in definitions than statements, the reverse of what is seen in this code,

- Non-terminating loops are created using `for(;;)` rather than the more commonly seen `while(TRUE)`,

- The author is happy to use `goto` to jump to the end of a function, not a rare habit but lots of developers have been taught that such usage is bad practice (I would say it depends, but that discussion belongs in another post),

- Unnecessary casts often appear on negative constants (unnecessary in the sense that the compiler is required to implicitly do the conversion). This could be another instance of a previous Microsoft compiler bug causing a developer to adopt a coding habit to work around the problem.

Could the source have been processed by a code formatter to remove fingerprint information? I think not. There are small inconsistencies in layout here and there that suggest human error; also automatic layout tends to have a ‘template’ look to it that this code does not have.

Update 16 May: One source file stands out as being the only one that does not make extensive use of camelCase and a quick search finds that it is derived from the ucl compression library.
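For readers who want to look for some of these habits in other code, a rough first pass can be made with regular expressions. The sketch below is an assumption-laden illustration: the pattern set and the `count_habits` helper are hypothetical, the sample text is made up, and regexes will miss or miscount cases that a real parser would handle.

```python
import re

# Hypothetical habit patterns; a serious study would use a parser, not regexes.
HABITS = {
    "for(;;) loop": re.compile(r"\bfor\s*\(\s*;\s*;\s*\)"),
    "while(TRUE) loop": re.compile(r"\bwhile\s*\(\s*(?:TRUE|true|1)\s*\)"),
    "redundant ?: bool": re.compile(r"\?\s*(?:true\s*:\s*false|false\s*:\s*true)\b"),
    "goto": re.compile(r"\bgoto\s+\w+\s*;"),
}

def count_habits(source):
    """Count occurrences of each habit pattern in a string of source code."""
    return {name: len(pat.findall(source)) for name, pat in HABITS.items()}

# Made-up sample text, not taken from the ZeuS source.
sample = """
for(;;) { if(done) goto END; }
return CWA(kernel32, DeleteFileW)(file) ? true : false;
while(TRUE) { break; }
END: return x == FALSE ? false : true;
"""
print(count_habits(sample))
```

Comparing such counts across code bases of known authorship would be needed before reading anything into them.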

Unused function parameters

I have started redoing the source code measurements that appear in my C book, this time using a lot more source, upgraded versions of existing tools, plus some new tools such as Coccinelle and R. The intent is to make the code and data available in a form that is easy for others to use (I am hoping that one or more people will measure the same constructs in other languages) and to make some attempt at teasing out relationships in the data (previously the data was simply plotted with little or no explanation).

While it might be possible to write a paper on every language construct measured, I don’t have the time to do the work. Instead, I will use this blog to make a note of the interesting things that crop up during the analysis of each construct I measure.

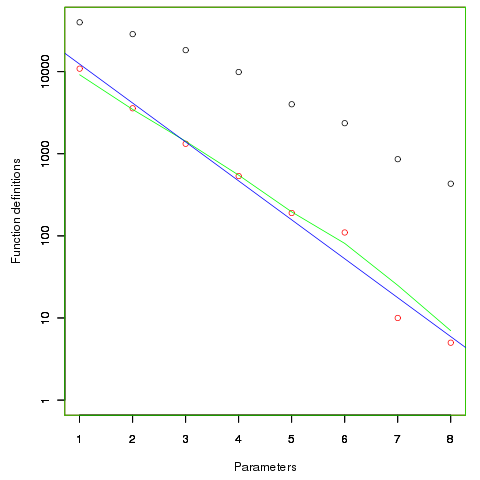

First up are unused function parameters (code and data), which at around 11% of all parameters are slightly more common than unused local variables (Figure 190.1). In the following plot black circles are the total number of functions having a given number of parameters, red circles a given number of unused parameters, the blue line a linear regression fit of the red circles, and the green line is derived from black circle values using a formula I concocted to fit the data and for which I have no theoretical justification.

The number of functions containing a given number of unused parameters drops by around a factor of three for each additional unused parameter. Why is this? The formula I came up with for estimating the number of functions containing […] unused parameters is: […], where […] is the total number of function definitions containing […] parameters.

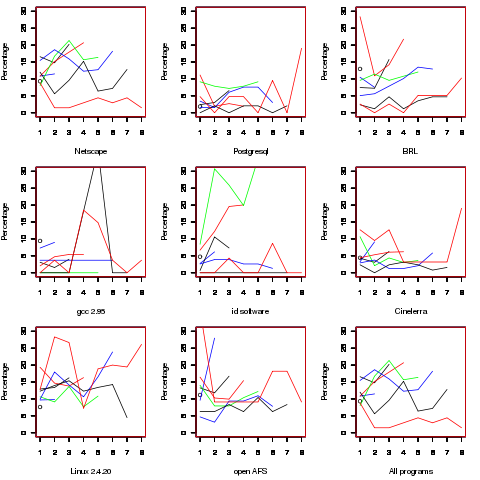

Which parameter, for those functions defined with more than one, is most likely to be unused? My thinking was that the first parameter might either hold the most basic information (and so rarely be unused) or hold information likely to be superseded when new parameters are added (and so commonly be unused); either way I considered later parameters as often being put there for later use (and therefore more likely to be unused). The following plot is for eight programs plus the sum of them all; for all functions defined with between one and eight parameters, the percentage of times the $n$’th parameter is unused is given. The source measured contained 104,493 function definitions containing more than one parameter, with 16,643 of these functions having one or more unused parameters; there were a total of 235,512 parameters, with 25,886 parameters being unused.

There is no obvious pattern to which parameters are likely to be unused, although a lot of the time the last parameter is more likely to be unused than the penultimate one.
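As a quick sanity check on the counts quoted above, the percentages they imply can be worked out directly. The variable names are mine; the four numbers are the ones given in the text.

```python
functions_multi_param = 104_493   # function definitions with more than one parameter
functions_with_unused = 16_643    # of those, functions with at least one unused parameter
total_params = 235_512
unused_params = 25_886

pct_unused_params = 100 * unused_params / total_params
pct_functions_affected = 100 * functions_with_unused / functions_multi_param

print(f"unused parameters: {pct_unused_params:.1f}%")                     # 11.0%
print(f"functions with an unused parameter: {pct_functions_affected:.1f}%")  # 15.9%
```

The first figure agrees with the “around 11% of all parameters” quoted earlier.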

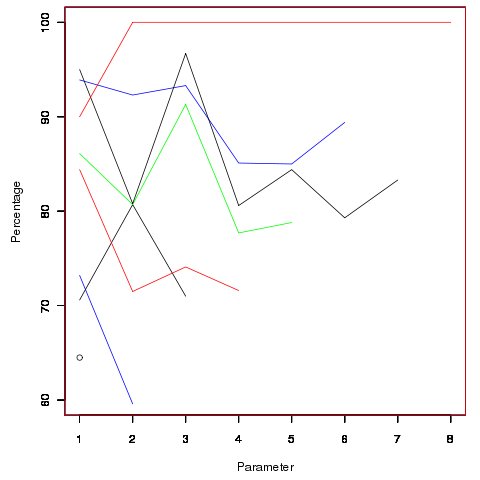

Looking through the raw data I noticed that there seemed to be some clustering of names of unused parameters; in particular, if the $n$’th parameter was unused in two adjacent ‘unused parameter’ functions they often had the same name. The following plot is for all measured source and gives the percentage of same name occurrences; the matching process analyses function definitions in the order they occur within a source file and, having found one unused parameter, its name is compared against the corresponding parameter in the next function definition with the same number of parameters and having an unused parameter at the same position.
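The matching process just described can be sketched as follows. The data layout (a list of parameter-name tuples paired with the set of unused positions, in source order) and the function name `same_name_rate` are assumptions made for illustration; the actual measurement scripts may be organised quite differently.

```python
def same_name_rate(functions):
    """functions: list of (param_names, unused_positions) in source-file order.

    Returns (matches, comparisons) for the pairing rule described in the text.
    """
    matches = comparisons = 0
    for idx, (names, unused) in enumerate(functions):
        for pos in unused:
            # Find the next function with the same number of parameters
            # that also has an unused parameter at this position.
            for later_names, later_unused in functions[idx + 1:]:
                if len(later_names) == len(names) and pos in later_unused:
                    comparisons += 1
                    matches += names[pos] == later_names[pos]
                    break
    return matches, comparisons

# Hypothetical file: three two-parameter functions and one three-parameter one.
funcs = [
    (("ctx", "flags"), {1}),
    (("a", "b", "c"), {2}),
    (("ctx", "flags"), {1}),
    (("buf", "len"), {1}),
]
print(same_name_rate(funcs))   # (1, 2)
```

Here two comparisons are made (first against third function, third against fourth) and one of them finds the same name.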

Before getting too excited about this pattern, we should ask if something similar exists for the used parameters. When I get around to redoing the general parameter measurements, I will look into this question. The number of occurrences for functions containing eight parameters is close to minimal.

The functions containing these same-name unused parameters appear to also have related function names. Is this a case of related functions being grouped together, often sharing parameter names, and when one of them has an unused parameter the corresponding parameter in the others is also unused?

Is there a correlation between number of parameters and number of statements? Are functions containing lots of statements less likely to have unused parameters? What effect does software evolution have (most of this kind of research measures executable code, not variable definitions)?

Estimating the quality of a compiler implemented in mathematics

How can you tell if a language implementation done using mathematical methods lives up to the claims being made about it, without doing lots of work? Answers to the following questions should give you a good idea of the quality of the implementation, from a language specification perspective, at least for C.

- How long did it take you to write it? I have yet to see any full implementation of a major language done in less than a man year; just understanding and handling the semantics, plus writing the test cases will take this long. I would expect an answer of at least several man years

- Which professional validation suites have you tested the implementation against? Many man years of work have gone into the Perennial and PlumHall C validation suites and correctly processing either of them is a non-trivial task. The gcc test suite is too light-weight to count. The C Model Implementation passed both

- How many faults have you found in the C Standard that have been accepted by WG14 (DRs for C90 and C99)? Everybody I know who has created a full implementation of a C front end based on the text of the C Standard has found faults in the existing wording. Creating a high quality formal definition requires great attention to detail and it is to be expected that some ambiguities/inconsistencies will be found in the Standard. C Model Implementation project discoveries include these and these.

- How many ‘rules’ does the implementation contain? For the C Model Implementation (originally written in Pascal and then translated to C) every if-statement it contained was cross referenced to either a requirement in the C90 standard or to an internal documentation reference; there were 1,327 references to the Environment and Language clauses (200 of which were in the preprocessor and 187 involved syntax). My C99 book lists 2,043 sentences in the equivalent clauses, consistent with a 70% increase in page count over C90. The page count for C1X is around 10% greater than C99. So for a formal definition of C99 or C1X we are looking at around 2,000 language specific ‘rules’ plus others associated with internal housekeeping functions.

- What percentage of the implementation is executed by test cases? How do you know code/mathematics works if it has not been tested? The front end of the C Model Implementation contains 6,900 basic blocks of which 87 are not executed by any test case (98.7% coverage); most of the unexecuted basic blocks require unusual error conditions to occur, e.g., disc full, and we eventually gave up trying to figure out whether a small number of them were dead code or just needed the right form of input (these days genetic programming could be used to help out and also to improve the quality of coverage to something like say MC/DC, but developing on a PC with a 16M hard disc does limit what can be done {the later arrival of a Sun 4 with 32M of RAM was mind blowing}).

Other suggested questions or numbers applicable to other languages most welcome. Some forms of language definition do not include a written specification, which makes any measurement of implementation conformance problematic.

Simple generator for compiler stress testing source

Since writing my C book I have been interested in the problem of generating source that has the syntactic and semantic statistical characteristics of human written code.

Generating code that obeys a language’s syntax is straightforward. Take a specification of the syntax (say in some yacc-like form) and ‘generate’ each of the terminals/nonterminals on the right-hand-side of the start symbol. Nonterminals will lead to rules having right-hand-sides that in turn need to be ‘generated’, a random selection being made when a nonterminal has more than one possible rhs rule. Output occurs when a terminal is ‘generated’.

For the code to mimic human written code it is necessary to bias the random selection process; a numeric value at the start of each rhs rule can be used to specify the percentage probability of that rule being chosen for the corresponding nonterminal.

The following example generates a subset of C expressions; nonterminals in lowercase, terminals in uppercase and implemented as a call to a function having that name:

    %grammar

    first_rule : def_ident " = " expr " ;\n" END_EXPR_STMT ;

    def_ident : MK_IDENT ;

    constant : MK_CONSTANT ;

    identifier : KNOWN_IDENT ;

    primary_expr :
               30 constant |
               60 identifier |
               10 " (" expr ") " ;

    multiplicative_expr :
               50 primary_expr |
               40 multiplicative_expr " * " primary_expr |
               10 multiplicative_expr " / " primary_expr ;

    additive_expr :
               50 multiplicative_expr |
               25 additive_expr " + " multiplicative_expr |
               25 additive_expr " - " multiplicative_expr ;

    expr : START_EXPR additive_expr FINISH_EXPR ;

A 250 line awk program (awk only because I use it often enough for simple text processing that it is second nature) translates this into two Python lists:

    productions = [ [0],
    [ 1, 1, 1, # first_rule
      0, 5, [2, 1001, 3, 1002, 1003, ],
    ],
    [ 2, 1, 1, # def_ident
      0, 1, [1004, ],
    ],
    [ 4, 1, 1, # constant
      0, 1, [1005, ],
    ],
    [ 5, 1, 1, # identifier
      0, 1, [1006, ],
    ],
    [ 6, 3, 0, # primary_expr
      30, 1, [4, ],
      60, 1, [5, ],
      10, 3, [1007, 3, 1008, ],
    ],
    [ 7, 3, 0, # multiplicative_expr
      50, 1, [6, ],
      40, 3, [7, 1009, 6, ],
      10, 3, [7, 1010, 6, ],
    ],
    [ 8, 3, 0, # additive_expr
      50, 1, [7, ],
      25, 3, [8, 1011, 7, ],
      25, 3, [8, 1012, 7, ],
    ],
    [ 3, 1, 1, # expr
      0, 3, [1013, 8, 1014, ],
    ],
    ]

    terminal = [ [0],
    [ STR_TERM, " = "],
    [ STR_TERM, " ;\n"],
    [ FUNC_TERM, END_EXPR_STMT],
    [ FUNC_TERM, MK_IDENT],
    [ FUNC_TERM, MK_CONSTANT],
    [ FUNC_TERM, KNOWN_IDENT],
    [ STR_TERM, " ("],
    [ STR_TERM, ") "],
    [ STR_TERM, " * "],
    [ STR_TERM, " / "],
    [ STR_TERM, " + "],
    [ STR_TERM, " - "],
    [ FUNC_TERM, START_EXPR],
    [ FUNC_TERM, FINISH_EXPR],
    ]

which can be executed by a simple interpreter:

    def exec_rule(some_rule) :
       rule_len=len(some_rule)
       cur_action=0
       while (cur_action < rule_len) :
          if (some_rule[cur_action] > term_start_base) :
             gen_terminal(some_rule[cur_action]-term_start_base)
          else :
             exec_rule(select_rule(productions[some_rule[cur_action]]))
          cur_action+=1

    productions.sort()
    start_code()
    ns=0
    while (ns < 2000) : # Loop generating lots of test cases
       exec_rule(select_rule(productions[1]))
       ns+=1
    end_code()
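The interpreter relies on a select_rule function that is not shown. A minimal sketch of what such a function might look like follows; the list layout is inferred from the productions above (nonterminal number, number of alternatives, a flag, then weight/length/rhs triples), and the use of `random.choices` is my assumption rather than the original implementation.

```python
import random

def select_rule(production, rng=random):
    """Pick one right-hand side from a production, honouring its percentage weights.

    Assumed layout, inferred from the lists shown in the text:
    [nonterminal, number_of_rhs, flag, weight, length, rhs, weight, length, rhs, ...]
    """
    num_rhs = production[1]
    weights = [production[3 + 3 * i] for i in range(num_rhs)]
    rhs_lists = [production[5 + 3 * i] for i in range(num_rhs)]
    if num_rhs == 1:              # single alternative; its weight is 0 in the lists
        return rhs_lists[0]
    return rng.choices(rhs_lists, weights=weights, k=1)[0]

primary_expr = [6, 3, 0,
                30, 1, [4],
                60, 1, [5],
                10, 3, [1007, 3, 1008]]

rng = random.Random(1)            # seeded so that runs are repeatable
counts = {}
for _ in range(10000):
    rhs = tuple(select_rule(primary_expr, rng))
    counts[rhs] = counts.get(rhs, 0) + 1
print(counts)
```

Over many selections the identifier alternative should be chosen about twice as often as the constant one and about six times as often as the parenthesized expression, mirroring the 60/30/10 weights.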

Naive syntax-directed generation results in a lot of code that violates one or more fundamental semantic constraints. For instance the assignment (1+1)=3 is syntactically valid in many languages, which invariably specify a semantic constraint on the lhs of an assignment operator being some kind of modifiable storage location. The simplest solution to this problem is to change the syntax to limit the kinds of constructs that can be generated on the lhs of an assignment.

The hardest semantic association to get right is the connection between variable declarations and references to those variables in expressions. One solution is to mimic how I think many developers write code, that is to generate the statements first and then generate the required definitions for the appropriate variables.

A whole host of minor semantic issues require the syntax generated code to be tweaked, e.g., division by zero occurs more often in untweaked generated code than human code. There are also statistical patterns within the semantics of human written code, e.g., frequency of use of local variables, that need to be addressed.

A few weeks ago the source of Csmith, a C source generator designed to stress the code generation phase of a compiler, was released. Over the years various people have written C compiler stress testers, most recently NPL implemented one in Java, but this is the first time that the source has been released. Imagine my disappointment on discovering that Csmith contained around 40 KLOC of code, only a bit smaller than a C compiler I had once helped write. I decided to see if my ‘human characteristics’ generator could be used to create a compiler code generator stress tester.

The idea behind compiler code generator stress testing is to generate a program containing some complicated sequence of code, compile and run it, comparing the value produced against the value that is supposed to be produced.

I modified the human characteristics generator to produce pairs of statements like the following:

    i = i_3 * i_6 & i_2 << i_7 ;
    chk_result(i, 3 * 6 & 2 << 7, __LINE__);

the second argument to chk_result is the value that i should contain (while generating the expression to assign to i the corresponding constant expression with the variables replaced by their known values is also created).

Having the compiler evaluate the constant expression simplifies the stress tester and provides another check that the compiler gets things right (or gets two different things wrong in the same way, in which case we probably don’t get to see any failure message). The first gcc bug I found concerned this constant expression (in fact this same compiler bug crops up with alarming regularity in the generated code).
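A minimal sketch of how such statement pairs might be produced is given below. It is not the grammar-driven generator described in this post: the `gen_pair` helper is hypothetical, it assumes each variable i_N holds the value N, and the operator set is deliberately restricted (no shifts, subtraction or division) so that the generated C cannot overflow or hit undefined behavior.

```python
import random

# Restricted operator set: with operands 1..9 the result stays small and non-negative.
OPERATORS = ["*", "+", "&", "|", "^"]

def gen_pair(rng, num_operands=4):
    """Return (assignment, check) C statements for one generated expression.

    Assumes, as in the example in the text, that variable i_N holds the value N.
    """
    var_expr, const_expr = [], []
    for k in range(num_operands):
        value = rng.randint(1, 9)
        if k:                              # operator between consecutive operands
            op = rng.choice(OPERATORS)
            var_expr.append(op)
            const_expr.append(op)
        var_expr.append("i_%d" % value)
        const_expr.append(str(value))
    assignment = "i = %s ;" % " ".join(var_expr)
    check = "chk_result(i, %s, __LINE__);" % " ".join(const_expr)
    return assignment, check

rng = random.Random(0)
for _ in range(3):
    assignment, check = gen_pair(rng)
    print(assignment)
    print(check)
```

The variable expression and the constant expression are built in lock step, so the second argument to chk_result is always the first expression with every variable replaced by its known value.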

As previously mentioned connecting variables in expressions to a corresponding definition is a lot of work. I simplified this problem by assuming that an integer variable i would be predefined in the surrounding support code and that this would be the only variable ever assigned to in the generated code.

There is some simple house-keeping that wraps everything within a program and provides the appropriate variable definitions.

The grammar used to generate full C expressions is 228 lines, the awk translator 252 lines and the Python interpreter 55 lines; just over 1% of Csmith in LOC and it is very easy to configure. However, an awful lot of functionality needs to be added before it starts to rival Csmith, not least of which is support for assignment to more than one integer variable!

A change of guard in the C standard’s world?

I have just gotten back from the latest ISO C meeting (known as WG14 in the language standard’s world) which finished a whole day ahead of schedule; always a good sign that things are under control. Many of the 18 people present in London were also present when the group last met in London four years ago and if memory serves this same subset of people were also attending meetings 20 years ago when I traveled around the world as UK head of delegation (these days my enthusiasm to attend does not extend to leaving the country).

The current convenor, John Benito, is stepping down after 15 years and I suspect that many other active members will be stepping back from involvement once the current work on revising C99 is published as the new C Standard (hopefully early next year meaning it will probably be known as C12).

From the very beginning the active UK participants in WG14 have held one important point of view that has consistently been at odds with a view held by the majority of US participants; we in the UK have believed that it should be possible to deduce the requirements contained in the C Standard without reference to any deliberations of WG14, while many US participants have actively argued against what they see as over specification. I think one of the problems with trying to change US minds has been that the opinion leaders have been involved for so long and know the issues so well they cannot see how anybody could possibly interpret wording in the standard in anything other than the ‘obvious’ way.

An example of the desire to not over specify is provided by a defect report I submitted 18 years ago, in particular question 19; what does:

    #define f(a) a*g
    #define g(a) f(a)
    f(2)(9)

expand to? There are two possibilities and WG14 came to the conclusion that both were valid macro expansions, making the behavior unspecified. However, when it came to a vote the consensus came down on the side of saying nothing about this case in the normative body of the standard, the only visible evidence for this behavior being a bulleted item added to the annex containing the list of unspecified behaviors.

A new member of WG14 (he has only been involved for a few years) spotted this bulleted item that had no corresponding text in the main body of the standard, tracked down the defect report that generated it and submitted a new defect report asking for wording to be added. At the meeting today the straw poll of those present was in favor of adding an appropriate example to C12 {I will link to the appropriate paper once it appears on the public WG14 site}. A minor victory on the road to a full and complete specification.

It will be interesting to see what impact a standing down of the old guard, after the publication of C12, has on C2X (the revision of C that is likely to be published around 10 years from now).

For those of you still scratching your heads, the two possibilities are:

    2*f(9)

or

    2*9*g
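
One way to see which of the two readings a particular compiler takes is to stringify the expansion and inspect the text. The following is a minimal sketch, not anything WG14 produced: the helper names (`STR`, `XSTR`, `same_ignoring_spaces`, `expansion_text`, `expansion_kind`, `report_expansion`) are made up for illustration, and the comparison ignores spaces on the assumption that an implementation may insert them between tokens.

```c
#include <assert.h>
#include <stdio.h>

/* Two-level stringification: XSTR macro-expands its argument first,
   then STR turns the resulting tokens into a string literal. */
#define STR(x)  #x
#define XSTR(x) STR(x)

/* The macros from the defect report. */
#define f(a) a*g
#define g(a) f(a)

/* Compare two strings, ignoring any spaces added during stringification. */
static int same_ignoring_spaces(const char *s, const char *t)
{
    for (;;) {
        while (*s == ' ') s++;
        while (*t == ' ') t++;
        if (*s != *t) return 0;
        if (*s == '\0') return 1;
        s++;
        t++;
    }
}

/* Text this preprocessor produced for the invocation in question. */
const char *expansion_text(void)
{
    return XSTR(f(2)(9));
}

/* 1: nested f left alone (2*f(9)); 2: nested f expanded (2*9*g); 0: neither. */
int expansion_kind(void)
{
    const char *text = expansion_text();
    if (same_ignoring_spaces(text, "2*f(9)")) return 1;
    if (same_ignoring_spaces(text, "2*9*g")) return 2;
    return 0;
}

/* Print the choice; the assert records that only two readings are valid. */
void report_expansion(void)
{
    int kind = expansion_kind();
    assert(kind == 1 || kind == 2);
    printf("the invocation expanded to %s\n", expansion_text());
}
```

Calling `report_expansion()` from `main` prints whichever form the compiler in use produces; since the behavior is unspecified, a different compiler (or a later version of the same one) is free to print the other.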

ISO Standards, the beauty and the beast

Standards is one area where a monopoly can provide a worthwhile benefit. After all the primary purpose of a standard for something is having just the one document for everybody to follow (having multiple standards because they are so useful is not a good idea). However, a common problem with monopolies is that they charge a very inflated price for their product.

Many years ago the International Organization for Standardization (ISO) settled on a pricing scheme for ISO Standards based on document page count. Most standards are very short and have a very small customer base, so there is commercial logic to having a high cost per page (especially since most are bought by large companies who need a copy if they do business in the corresponding application domain). Programming language standards do not fit this pattern, often being very long and potentially having a very large customer base.

With over 18,500 standards in their catalogue ISO might be forgiven for overlooking the dozen or so language standards, or perhaps they figured there is as much profit in charging a few hundred pounds on a few sales as charging less on more sales.

How does the move to electronic distribution affect prices? For a monopoly electronic distribution is an opportunity to make more profit, not to reduce prices. The recently published revision of the Fortran Standard is available for 338 Swiss francs (around £232) from ISO and £356 from BSI (at $351 the price from ANSI in the US is similar to ISO’s). Many years ago, at the dawn of the Internet, members of the US C Standard committee were able to convince ANSI to sell electronic copies at a reasonable rate ($30) and this practice has continued ever since (and now includes C++).

The market for the C and C++ Standards is sufficiently large that a commercial publisher (Wiley) was willing to take the risk of publishing them in book form (after some prodding and leg work by the likes of Francis Glassborow). It will be interesting to see if a publisher is willing to take a chance on a print run of the revised C Standard due out in a few years (I think the answer for the revised C++ Standard is more obvious).

Don’t Standards bodies care about computer languages? Unfortunately we are a thorn in their side and they would be happy to be rid of us (but their charters do not allow them to do this). Our standards take much longer to produce than other standards, they are large and sales are almost non-existent (at ISO/BSI prices). What is more, many of those involved in creating these standards actively subvert ISO/BSI sales by making draft documents, that are very close to the final copyrighted versions, freely available over the Internet.

In a sense ISO programming language standards exist because the organizational structure requires them to accept our work proposals and what we do does not have a large enough impact within the standards world for them to try and be rid of those tiresome people whose work is so far removed from what everybody else does.

Language usage in Google’s ngram viewer

I thought I would join the fun that people are having with Google’s new ngram viewer. The raw data (only a subset for bigrams and longer ngrams) was also enticing, but at 35+ gigabytes for the compressed 1/2/3-grams of English-all I decided to forgo the longer n-grams.

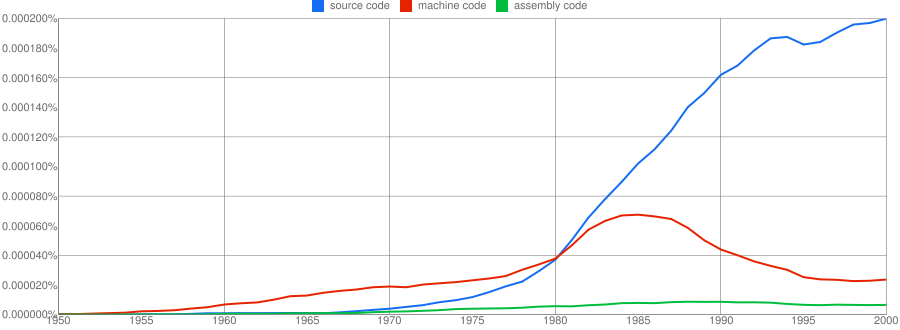

We all know that in the dim and distant past most programmers wrote in machine code, but it was not until 1980 that “source code” appeared more frequently in books than “machine code”.

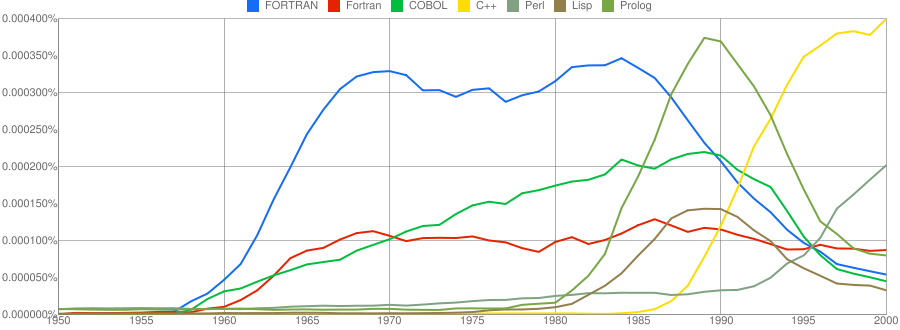

Computer language popularity is a perennial question. Fortran and Cobol address very different markets and I would have expected their usage to follow similar patterns, with “COBOL” having the obvious usage pattern for them both to follow. Instead, both “FORTRAN” and “Fortran” peaked within 10 years, with one staying there for another 20 years before declining and the other still going strong in 2000 (and still ahead of “PHP” and “Python” in 2000; neither shown to keep the clutter down). I am surprised to see “Prolog” usage being so much greater than “Lisp” and I would have expected “Lisp” to have a stronger presence in the 1970s.

I think the C++ crowd will be surprised to see that in 2000 usage was not much greater than what “FORTRAN” had enjoyed for 20 years.

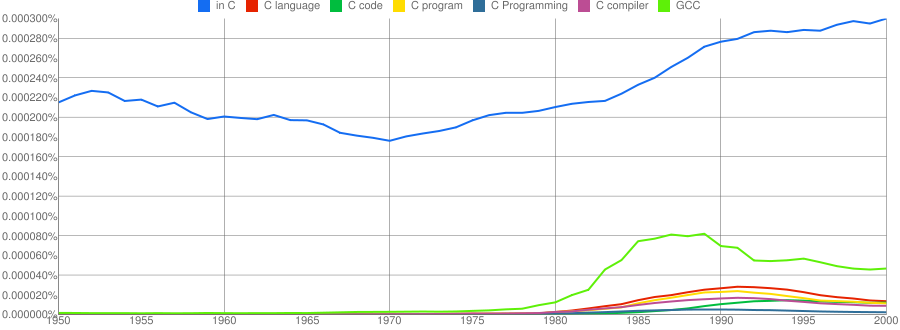

“C”, as in language, usage is obviously difficult to reliably measure. I have tried the obvious bigrams. Looking at some of the book matches for the phrase “in C” shows that the OCR process has sometimes inserted spaces that probably did not exist in the original, the effect being to split words and create incorrect bigrams. The phrase “in C” would also appear in books on music.

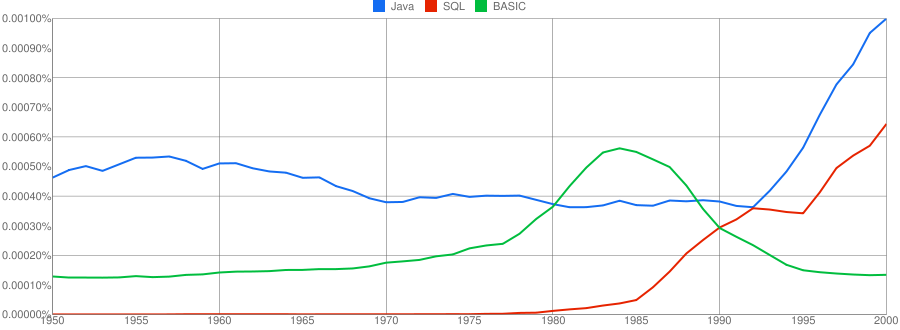

I have put the three words “Java”/“SQL”/“BASIC” in a separate plot because their usage swamps that of the other languages. Java obviously has multiple non-computer related uses and subtracting the estimated background usage suggests a language usage similar to that of “SQL”. There is too much noise for the usage of “Basic” to tell us much.

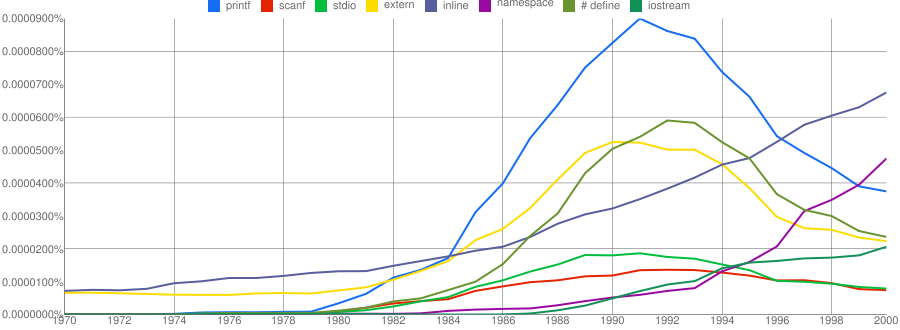

One way of comparing C/C++ language usage is to look at source code usage where they are likely to differ. I/O, in the form of printf/scanf and stdio/iostream, is one obvious choice and while the expected C usage starts to decline in the 1990s the C++ usage just shows a steady growth (perhaps the <</>> usage, which does not appear in the Google viewer, has a dramatic growth during this time period).

Surprisingly #define also follows a similar pattern of decline. Even allowing for the rabid anti-macro rhetoric of the C++ in-crowd I would not have expected such a rapid decline. Perhaps this is some artifact of the book selection process used by Google; but then "namespace" shows a healthy growth around this time period.

The growth of "inline" over such a long period of time is a mystery. Perhaps some of this usage does not relate to a keyword appearing within source code examples but to text along the lines of "put this inline to make it faster".

What usage should we expect for the last decade? A greater usage of "PHP" and "Python" is an obvious call to make, along with the continuing growth of SQL; I think "UML" will also feature prominently. Will "C++" show a decline in favor of "Java" and what about "C#"? We will have to wait and see.
