Verified compilers and soap powder advertising

There’s a new paper out claiming to be about a formally-verified C compiler, it even states a Theorem about its abilities! If this paper appeared as part of a Soap powder advert the Advertising Standards Authority would probably require clarification of the claims. What clarifications might appear in the small print tucked away at the bottom of the ad?

- C source code is not verified directly, it is first translated to the formal notations used by the verification system; the software that performs this translation is assumed to be correct.

- The CompCert system may successfully translate programs containing undefined behavior. Any proof statements made about such programs may not be valid.

- The support tools are assumed to be correct; primarily the Coq proof assistant, which is written in OCaml.

- The CompCert system makes decisions about implementation dependent behaviors and any proofs only apply in the context of these decisions.

- The CompCert system makes decisions about unspecified behaviors and any proofs only apply in the context of these decisions.

Some notes on the small print:

The C source translator used by CompCert rarely gets mentioned in any of the published papers; what was done to check its accuracy (I have previously discussed some options)? Presumably the developers who wrote it tried very hard to make sure they did a good job, just like the authors of f2c, a Fortran to C translator, did. Connecting f2c as a front-end of the CompCert system gives us a verified Fortran compiler! I think the f2c translator is much more likely to be correct than the CompCert C source translator, it has been used by a lot more people, processed a lot more source and maintained over a longer period.

When they encounter undefined behavior in source code production C compilers sometimes generate code that has very unexpected behavior. Using the CompCert system will not avoid unexpected behavior in these situations; CompCert simply washes its hands for this kind of code and says all bets are off.

Proving the support tools correct would simply move the assumption of correctness to a different set of tools. I am not aware of any major effort to test whether the Coq system behaves as intended, but have not read all the papers describing it (the list of reported faults is does not appear to be publicly available); bugs have been found in the OCaml implementation.

Like all compilers that generate code, CompCert has to make implementation dependent decisions and select one of the possible unspecified behaviors. The C-Semantics tool generates all unspecified behaviors, rather than just one.

Does native R usage exist?

Note to R users: Users of other languages enjoy spending lots of time discussing the minutiae of the language they use, something R users don’t appear to do; perhaps you spend your minutiae time on statistics which I don’t yet know well enough to spot when it occurs). There follows a minutiae post that may appear to be navel-gazing to you (interesting problem at the end though).

In various posts written about learning R I have said “I am trying to write R like a native”, which begs the question what does R written by a native look like? Assuming for a moment that ‘native R’ exists (I give some reasons why it might not below) how…

To help recognise native R it helps to start out by asking what it is not. Let’s start with an everyday analogy; if I listen to a French/German/American person speaking English I can usually tell what country they are from, they have patterns of usage that we in merry England very rarely, if ever, use; the same is true for programming languages. Back in the day when I spent several hours a day programming in various languages I could often tell when somebody showing me some Pascal code had previously spent many years writing Fortran or that although they were now using Fortran they had previously used Algol 60 for many years.

If expert developers can read R source and with high accuracy predict the language that its author previously spent many years using, then the source is not native R.

Having ruled out any code that is obviously (to a suitably knowledgeable person) not native R, is everything that is left native R? No, native language users share common characteristics; native speakers recognize these characteristics and feel at home. I’m not saying these characteristics are good, bad or indifferent any more than my southern English accent is better/worse than northern English or American accents; it is just the way people around here speak.

Having specified what I think is native R (I would apply the same rules to any language) it is time to ask whether it actually exists.

I’m sure there are people out there whose first language was R and who have spent a lot more time using R over, say, five years rather than any other language. Such people are unlikely to have picked up any noticeable coding accents from other languages and so can be treated as native.

Now we come to the common characteristics requirement, this is where I think an existence problem may exist.

How does one learn to use a language fluently? Taking non-R languages I am familiar with the essential ingredients seem to be:

- spending lots of time using the language, say a couple of hours a day for a few years

- talking to other, heavy, users of the language on a daily basis (often writing snippets of code when discussing problems they are working on),

- reading books and articles covering language usage.

I am not saying that these activities create good programmers, just that they result in language usage characteristics that are common across a large percentage of the population. Talking and reading provides the opportunity to learn specific techniques and writing lots of code provides the opportunity to make use these techniques second nature.

Are these activities possible for R?

- I would guess that most R programs are short, say under 150 lines. This is at least an order of magnitude shorter (if not two or three orders of magnitude) than program written in Java/C++/C/Fortran/etc. I know there are R users out there who have been spending a couple of hours a day using R over several years, but are they thinking about R coding or think about the statistics and what the data analysis really means. I suspect they are spending most of this R-usage thinking time on the statistics and data analysis,

- I can easily imagine groups of people using R and individuals having the opportunity to interact with other R users (do they talk about R and write snippets of code to describe their problem? I don’t work in an R work environment, so I don’t know the answer),

- Where are the R books and articles on language usage? They don’t exist, not in the sense of Sutter’s “Effective C++: 55 Specific Ways to Improve Your Programs and Designs” (there must be a several dozen of this kind of book for C++) Bloch’s “Java Puzzlers: Traps, Pitfalls, and Corner Cases” (probably only a handful for Java) and Koenig’s “C: Traps and Pitfalls” (again a couple of dozen for C). In places Crawley’s “The R Book” has the feel of this kind of book, but Matloff’s “The Art of R Programming” is really an introduction to R for people who already know another language (no discussion of art of R as such). R users write about statistics and data analysis, with the language being a useful tool.

I suspect that many people are actually writing R for short amounts of time to solve data analysis problems they spend a lot of time thinking about; they don’t discuss R the language much (so little opportunity to learn about the techniques that other people use) and they don’t write much code (so little opportunity to try out many new techniques).

Yes, there may be a few people who do spend a couple of hours a day thinking about R the language and also get to write lots of code, these people are more like high priests than your average user.

For the last two years I have been following a no for-loops policy in an attempt to make myself write R how the natives write it. I am beginning to suspect that this view of native R is really just me imposing beliefs from usage of other language that support whole vector/array operations, e.g., APL.

I encountered the following coding problem yesterday. Do you think the non-loop version should be how it is done in R or is the loop version more ‘natural’?

Given a vector of ordered items the problem is to count the length of each subsequence of identical items,

a,a,a,b,b,a,c,c,c,c,b,c,c |

output

a 3 b 2 a 1 c 4 b 1 c 2 |

Non-looping version (looping version is easy to figure out):

subseq_len=function(feature) { r_shift=c(feature[1], feature) l_shift=c(feature, ",,,") # pad with something that will not match # Where are the boundaries between subsequences? boundary=(l_shift != r_shift) sum_matches=cumsum(!boundary) # Difference of cumulative sum at boundaries, whose value will # be off by 1 and we need to handle 'virtual' start of list at 1. t=sum_matches[boundary] seq_len=1+c(t, 0)-c(1, t) # Remove spurious value return(cbind(feature[boundary[-1]], seq_len[-length(seq_len)])) } subseq_len(c("a", "a", "b", "b", "e", "c", "c", "c", "a", "c", "c")) |

Will incorrect answers be biased towards one arm of an if-statement?

Sometimes it is possible to deduce which arm of a nested if-statement will be executed by looking at the form of the conditional expression in the outer if-statement, as in:

if ((L < M) && (M < H)) if (L < H) ; // Execution always end up here else ; // dead code |

but not in:

if ((L > M) && (M < H)) if (L < H) ; // Could end up here else ; // or here |

I ran an experiment at the 2012 ACCU conference where subjects saw nested if-statements like those above and had to specify which arm of the nested if-statement would be executed.

Sometimes subjects gave an answer specifying one arm when in fact both arms are possible. Now dear reader, do you think these incorrect answers will specify the then arm 50% of the time and the else arm 50% or do you think that that incorrect answers will more often specify one particular arm?

Of course I should have thought about this before I started to analyse the data, but this question is unrelated to the subject of the experiment and has only just cropped up because of the unexpectedly high percentage of this kind of incorrect answer. I had an idea what the answer would be but did not stop and think about relative percentages, rushing off to write a few lines of code to print the actual totals, so now my mind is polluted by knowing the answer (well at least for one group of subjects in one experiment).

Why does this “one arm preference” issue matter? The Bayesians out there will insist that the expected distribution (the prior in Bayesian terminology) of incorrectly chosen arms be factored in to the calculation of the probability of getting the numbers seen in the results. The paper Belgian euro coins: 140 heads in 250 tosses – suspicious? gives a succinct summary of the possibilities.

So I have decided to appeal to my experienced readership, yes YOU!

For those questions where the actual execution cannot be predicted in advance, from knowledge of the relative values of variables appearing in the outer if-statement, when an incorrect answer is given should the analysis assume:

- a 50/50 split of incorrect answers between each arm, or

- subjects are more likely to pick one arm; please specify a percentage breakdown between arms.

No pressure, but the submission deadline is very late tomorrow.

The results from the whole experiment will get written up here in future posts.

Update (three days later): Nobody was willing to stick their head above the parapet 🙁

There were 69 correct answers and 16 incorrect answers to questions whose answer was “both arm”. Ten of those incorrect answers specified the ‘then’ arm and 6 the ‘else’; my gut feeling was that there should be even more ‘then’ answers. If there was no “first arm” there is an equal probability of a subject’s incorrect answer appearing in either arm; in this case the probability of a 10/6 split is 12% (so my gut feeling was just hunger pangs after all).

Will programming languages now have to follow ISO fast-track rules?

A while back I wrote about how updated versions of ECMAscript (i.e., the Standard for Javascript) had twice been fast tracked to replace an existing ISO Standard, however the ISO rules require that once a document becomes one of its standards all future work be done using the ISO process (i.e., you only supposed to get the one original fast track and then you have to get at least half a dozen countries to say they will actively participate in ongoing work). Thirteen years after I asked why it was being allowed to happen (as I recall I only raised the issue because I thought I had misunderstood the rules, not because I had a burning desire to enforce them) the issue has suddenly sprung to life (we are talking Standard’s world ‘sudden’ here), with a question being raised at the last SC22 meeting and a more detailed one being prepared by BSI for the next meeting (they occur once per year).

The Elephant in the room here is ISO/IEC 29500:2008, not a programming language but Microsoft’s Office Open XML; there was quite a bit of fuss when this was fast tracked.

If the ISO rules on one-time only use of the fast track process was limited to programming languages I imagine the bureaucrats in Geneva would probably never get to hear about it (SC22 would probably conclude that there was not enough interest in the various documents outside of the submitting country to form an active ISO working group; so leave well alone).

ISO sells over 19,000 standards and has better things to do than spend time on the goings on in an unfashionable part of the galaxy, unless, that is, it has the potential to generate lots of fuss that undermines credibility.

Will Microsoft try to fast track an updated version of ISO 29500? I don’t even know if they are updating it. The possibility that ISO 29500 might be updated and submitted for fast track will make it hard for SC22 to agree to any future fast-track updates to existing ISO Standards it is responsible for.

The following is a list of documents that have been fast tracked to become an ISO Standard:

ECMAScript: ECMA-262 (1st edn) = ISO 16262:1998 ECMA-262 (3rd edn) = ISO 16262:1999 ECMA-262 (5th edn) = ISO 16262:2011 C#: ECMA-334 (2nd edn) = ISO 23270:2003 ECMA-334 (4th edn) = ISO 23270:2006 CLI: ECMA-335 (2nd edn) = ISO 23271:2003 ECMA-335 (6th edn) = ISO 23271:2012 ECMA standards fast-tracked to ISO and not yet revised: ECMA-149 PCTE part 1 = ISO 13719-1:1998 ECMA-158 PCTE part 2 = ISO 13719-2:1998 ECMA-162 PCTE part 3 = ISO 13719-3:1998 ECMA-230 PCTE IDL binding = ISO 13719-4:1998 ECMA-367 EIFFEL = ISO 25436:2006 ECMA-372 C++/CLI -> DIS 26926; failed DIS ballot and project cancelled Replaced rather than revised under JTC1 rules: CHILL (from CCITT): ISO standards 9496:1989, 9496:1995, 9496:1998, 9496:2003 MUMPS/M (from Mass Gen Hospital/ANSI): ISO standards 11756:1992, 11756:1999 Non-ECMA documents fast-tracked through ISO and not yet revised: FORTH (from FORTH Inc): ISO 15145:1997 JEFF (from J consortium): ISO 20970:2002 Ruby (from Japanese Industrial Standards Committee): ISO/IEC 30170:2012

Only compiler vendor customers, not its users, count

The hardest thing about working on compilers is getting somebody to pay you to do it (its a close run race against having the cpu instructions chop and change under you during initial development, but that’s another story). The major shift of compiler vendor business model from proprietary to open source has significant implications for users of compilers. Note I said user not customer, only one of them pays money. Under the commercial model there was usually a very direct connection between compiler user and customer (even in large organizations users rather than the manager who makes the purchase decision are often regarded by vendors as the customer), while under the Open source model most users are not customers (paying money for a distribution does not make you a customer of the people maintaining the compiler who probably don’t receive any of the money you spent).

Like all good businesses compiler vendors don’t want to make their customers unhappy. There is one way guaranteed to make all customers so unhappy that they will remember the experience for years; ship a new compiler release that breaks their existing code (this usually happens because their is a previously undetected bug in the code or because use is being made of an implemented defined/undefined part of the language {the compiler gets to decide what to do when it encounters such code}). Not breaking existing customer code is priority ONE in any commercial compiler development group.

Proprietary vendors have so many customers its almost impossible for them to know in advance what changes will break existing code and the only option is to be ultra conservative about adding new code optimizations (new optimizations can so easily change how source containing undefined behavior is processed). Ultra conservative is the polite term, management paranoia would be more accurate. There is another advantage to vendors for not breaking their customers’ code, they are protected against competition by new market entrants; a new vendor with a shiny go faster compiler doing all the optimizations the existing vendor was not willing to do in case it broke existing code will quickly find out that the performance improvements they offer are rarely big enough to tempt potential customers to switch compilers. Really, the only time companies switch compiler is when they have to port to a new platform to make a sale or their existing vendor goes bust.

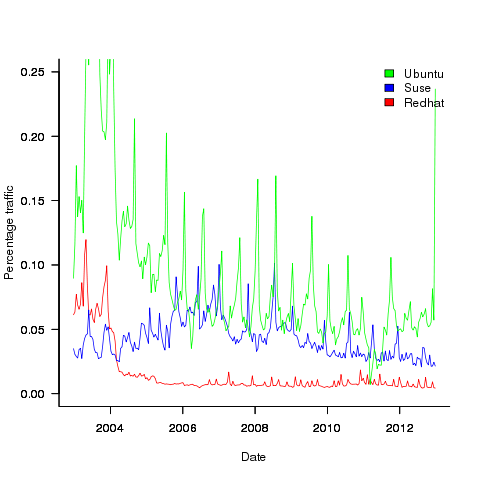

Open source vendors (e.g., those commercially involved in support/maintenance of gcc or llvm) have relatively few customers (e.g., big companies paying them lots of money for specific reasons) and as always these customers want existing code to continue to work. If the customer is paying for a code generator for a previously unsupported processor then there probably isn’t any existing code for that processor; it is a fact of life that porting source to a new processor always involves work. Some Linux distributors (e.g., Suse and Redhat) are customers in the sense that they pay the salary of developers who spend a lot of their time in compiler maintenance/upgrades and presumably work to try and ensure that the code in their respective Linux distributions does not get broken.

Compiler users who are not customers don’t count on the code breakage front (well, count for very very little, if an update broke lots of different developers’ code and enough fuss was made there might well be an update than unbroke the previous one).

What can a user do if code that used to work ok is broken when compiled with a later version of the compiler? The obvious answer is to continue using the older version that produces the desired behavior, fixing the code causing the problem is a better answer (but might involve a lot of work). There is no point in flaming the compiler developers, you are not contributing towards their upkeep; Open source does not give users the consideration that a customer enjoys.

US DoD software development data now available

I found a huge resource of software development data last weekend at the Defense Cost and Resource Center (DCARC). The Software Resource Data Report part of this resource contains information on around 2,000 major software development projects (any US DOD project over $20 million+) giving details of schedule, developer experience, money spent per year, lines of code, amount of code change, hours spent on at various stages of development and a whole lot more.

The catch? The raw data is only available to DoD analysts 🙁 I was a bit surprised that laws got passed mandating the collection of this kind of information and a lot less surprised that the DoD don’t want to make detailed development information for missile systems, radar installations, etc available to some interested parties; those of us who are not going to go out and build such systems are collateral damage.

What is the US government’s reason for requiring the collection and dissemination of this information? They want to reduce the huge amount of money currently being spent on the software development component of military systems (often a very large slice of the total project costs). Will having this data available reduce costs? It will certainly get project managers a lot more worried about project cost/time overruns if they know that lots of people outside the project are going to see their ‘failure’.

Hopefully there are Open data activists in the US who will push for a redacted form of the software data being made available to all interested parties, rather like that provided by the USA Spending site. In the meantime there are a few lucky DoD analysts who have gone from famine to feast and are probably having trouble figuring out where to start.

Update

The military like to rename things, and move stuff around. We now have: Cost Assessment Data Enterprise, with its software data.

Programs spent a lot of time repeating themselves

Inexperienced software developers are always surprised that programs used by lots of people can contain many apparently non-trivial faults and yet continue to operate satisfactorily; experienced developers become familiar with this state of affairs and tend to shrug their shoulders. I have previously written about how software is remarkably fault tolerant. I think this fault tolerance is telling us something important about the characteristics of software and while I have some ideas about what it might be I don’t yet have a good handle (or data) on what is going on to lay out my argument.

In this article I’m going to talk about another characteristic of program execution which I think is connected to program fault tolerance and is also very surprising.

Software differs from hardware in that for a given set of inputs a program will always produce the same output, it will not wear out like hardware and eventually do something different (to simplify things I’m ignoring the possible consequences of uninitialized variables and treating any timing dependencies as part of the input set). So for a fault to be observed different input is required (assuming one exists and none appeared for the first input set).

I used to assume that during a program’s execution the basic cpu operations (e.g., binary arithmetic and bitwise operations) processed a huge number of different combinations of input values (e.g., there are  combinations of input value for a 16-bit add operation) and was very surprised to find out this is not the case. For many programs around 80% of all executed instructions are repeat instructions, that is a given instruction, such as add, operates on the same combinations of input values that it has previously operated on (while executing the program) to generate an output value that is identical to the one previously generated from these input values. If we count the number of static instructions in the program (i.e., the number of assembly instructions in a listing of the disassembled executable program) then 20% of them account for 90% of the repeated instructions; so a small amount of code (i.e., 20%) is not only responsible for most dynamically executed instructions but around 72% (i.e., 80%*90%) of these instructions repeat previous computations. If a large percentage of a what goes on internally within a program is repetition is it any surprise that once it works for a reasonable set of inputs it will probably work on other inputs?

combinations of input value for a 16-bit add operation) and was very surprised to find out this is not the case. For many programs around 80% of all executed instructions are repeat instructions, that is a given instruction, such as add, operates on the same combinations of input values that it has previously operated on (while executing the program) to generate an output value that is identical to the one previously generated from these input values. If we count the number of static instructions in the program (i.e., the number of assembly instructions in a listing of the disassembled executable program) then 20% of them account for 90% of the repeated instructions; so a small amount of code (i.e., 20%) is not only responsible for most dynamically executed instructions but around 72% (i.e., 80%*90%) of these instructions repeat previous computations. If a large percentage of a what goes on internally within a program is repetition is it any surprise that once it works for a reasonable set of inputs it will probably work on other inputs?

Hang on you say, perhaps the percentage of repeat instructions is very high for a given set of external input values (e.g., a file to compress, compile or display as a jpeg) but there is a lot of variation in the set of repeat instructions between different external inputs. Measurements suggest this is not the case, with around 20% of dynamic instructions having input values that can be traced to external program input (12-30% come from globally initialized variables and the rest are generated internally).

There is a technical detail that reduces the repeat instruction percentages given about by a factor of two; researchers always like to give the most favorable numbers and for this discussion we need to make a distinction between local repetition which counts one instruction and its inputs/outputs at a particular point in the code and global repetition which counts all instructions of a given kind irrespective of where they occur in the code. A discussion of fault behavior needs to look at local repetition, not global repetition; there is a factor of two difference in the dynamic percentage and some reduction in the percentage of static instructions involved.

Sometimes the term redundant computation is used, as if the cpu should remember what happened last time it executed an instruction with a particular set of inputs and reuse the answer it got last time. Researchers have proposed caching the results of executing an instruction with a given set of input values and speeding things up or saving power by reusing previous results rather than recalculating them (a possible speedup of 13% on SPEC95 is claimed for a reuse buffer containing 4096 entries).

So a small percentage of the instructions in a program account for most of the execution time (a generally known characteristic) and around 30% of the executed instructions operate on input values they have processed before to produce output they have produced before (to the extent that a cache containing a few thousand entries is big enough to hold the a large percentage of the duplicates). If encountering a new fault requires different execution behavior to occur then having a large percentage of a program always doing the same thing (i.e., same input values same output value) will have a significant impact on the likelihood of encountering a fault. Part of the reason programs are fault tolerant is because external input values don’t have a big an impact on program behavior as we might have thought.

Researchers have also investigated repeats involving units larger than one instruction, such as sub-blocks (a sequence of instructions smaller than a basic block) and even complete functions or just the mathematical ones.

The raw data is obtained using cpu simulators to monitor programs as they are executed, logging the values read as input by an instruction and the value generated as output (in most cases the values are read from registers and written to a register). A single study might log billions of instructions from the SPEC benchmark.

Telepathic communication with a Supreme Being

Every year the Christmas cards I receive are a reminder of how seriously a surprisingly large percentage of software developers I know take their beliefs in a Supreme Being.

Surely anybody possessing the skills needed to do well in software engineering would have little trouble uncovering for themselves the significant inconsistencies in any system of beliefs intended to support the existence of a Supreme Being? The evidence from my Christmas card collection shows that some very bright would disagree with me.

This Christmas I have finally been able to come up with some plausible sounding hand waving that leaves me feeling as if I at least have a handle on this previously incomprehensible (to me) behavior.

The insight is to stop considering the Supreme Being question as a problem in logic (i.e., is there a model consistent with belief in a Supreme Being that is also consistent with the known laws of Physics) and start thinking of it was a problem in explaining structure. Love of structure is a key requirement for anybody wanting to get seriously involved with software development (a basic ability to ‘do logic’ is also required, but logic is just another tool and outside of introductory courses and TV shows is vastly overrated).

On the handful of occasions I have spoken to developers about religion (in general I try to avoid this subject, it is just too contentious) things have always boiled down to one of having core belief, a feeling that random is just not a good enough explanation for things being the way they are, while the existence of a Supreme Being slots rather well into their world view.

The human agency detection system has been proposed as one of the reasons for religion; see Scott Atran’s book “In Gods We Trust: The evolutionary landscape of religion” for a fascinating analysis of various cognitive, social and economic factors that create a landscape favoring the existence of some form of religion.

Of course anybody choosing to go with a Supreme Being model has to make significant adjustments to other components of their world view and some of these changes will generate internal inconsistencies. Any developer who has ever been involved in building a large system will have experienced the strange sensation of seeing a system they know to be internally inconsistent function in a fashion that appears perfectly acceptable to everybody involved; listening to users’ views of the system brings more revelations (how could anybody think that was how it worked?) Having had these experiences with insignificantly small systems (compared to the Universe) I can see why some developers might be willing to let slide inconsistencies generated by inserting a Supreme Being into their world view.

I think the reason I don’t have a Supreme Being in my world view is that I am too in love with the experimental method, show me some repeatable experiments and I would be willing to take a Supreme Being more seriously. Perhaps at the end of the day it does all boil down to personal taste.

At the personal level I can see why people are not keen to discuss their telepathic communication sessions (or pray to use one of the nontechnical terms) with their Supreme Being. Having to use a channel having a signal/noise ratio that low must be very frustrating.

%, would not have passed).

%, would not have passed).

The most worthwhile R coding guidelines I know

Since my post questioning whether native R usage exists (e.g., a common set of R coding patterns) several people have asked about coding/style guidelines for R. My approach to style/coding guidelines is economic, adhering to a guideline involves paying a cost now for some future benefit. Obviously to be worthwhile the benefit must be greater than the cost, there is also the issue of who pays the cost and who reaps the benefit (why would anybody pay the cost if somebody else reaps the benefit?). The following three topics are probably where the biggest benefits are to be had and only the third is specific to R (and given the state of my R knowledge may be wrong).

Comment your code. Investing 5-10 seconds per few lines of code now could save substantially more time at some future date. Effective commenting is a skill that has to be learned, start learning now. Think of commenting as sending a text message or tweet to the person you will be in 6 months time (i.e., the person who can hum the tune but has forgotten the details).

Consistently use variable names that mean something to you. This should be a sub 2-second decision that is probably going to save you no more than 5-10 seconds, but in many cases you reap the benefit soon after the investment, without having to wait many months. Names evoke associations in your mind, take advantage of this associative lookup to reduce the cognitive load of working with your code. Effective naming is a skill that has to be learned, start learning now. There are people who ignore the evidence that different people’s linguistic preferences and associations can be very different and insist that everybody adhere to one particular naming convention; ignore them.

Code organization and structure. Experience shows that there are ways of organizing and structuring +1,000 line programs that have a significant impact on the effort needed to actively work on the code, the more code there is the greater the impact. R programs tend to be short, say around 100 lines (I dare say much longer ones exist). Apart from recommending that code be broken up into separate functions, I cannot think of any organizational/structural issue that is worth recommending for 100 lines of code (if you don’t appreciate the advantage of using separate functions you need some hands on training, not words in a blog post).

Is that it, are there no other worthwhile recommendations? There might be, I just don’t have enough experience using R to know. Does anybody else have enough experience to know? I suspect not; where would they have gotten the information needed to do the cost/benefit analysis? Even in the rare case where a detailed analysis is made for a language the results are rather thin on the ground and somewhat inconclusive.

What is the reason behind those R style guides/coding guideline documents that have been written? The following are some possibilities:

and no, I don’t have any empirical data to backup my guidelines 🙁