A prisoner’s dilemma when agreeing to a management schedule

Two software developers, both looking for promotion/pay-rise by gaining favorable management reviews, are regularly given projects to complete by a date specified by management; the project schedules are sometimes unachievable, with probability  .

.

Let’s assume that both developers are simultaneously given a project, and the corresponding schedule. If the specified schedule is unachievable, High quality work can only be performed by asking for more time, otherwise performing Low quality work is the only way of meeting the schedule.

If either developer faces an unachievable deadline, they have to immediately decide whether to produce High or Low quality work. A High quality decision requires that they ask management for more time, and incur a penalty they perceive to be  (saying they cannot meet the specified schedule makes them feel less worthy of a promotion/pay-rise); a Low quality decision is perceived to be likely to incur a penalty of

(saying they cannot meet the specified schedule makes them feel less worthy of a promotion/pay-rise); a Low quality decision is perceived to be likely to incur a penalty of  (because of its possible downstream impact on project completion), if one developer chooses Low, and

(because of its possible downstream impact on project completion), if one developer chooses Low, and  , if both developers choose Low. It is assumed that:

, if both developers choose Low. It is assumed that:  .

.

This is a prisoner’s dilemma problem. The following mathematical results are taken from: The Effects of Time Pressure on Quality in Software Development: An Agency Model, by Robert D. Austin (cannot find a downloadable pdf).

There are two Nash equilibriums, for the decision made by the two developers: Low-Low and High-High (i.e., both perform Low quality work, or both perform High quality work). Low-High is not a stable equilibrium, in that on the next iteration the two developers may switch their decisions.

High-High is a pure strategy (i.e., always use it), when:

High-High is Pareto superior to Low-Low when:

How might management use this analysis to increase the likelihood that a High-High quality decision is made?

Evidence shows that 50% of developer estimates, of task effort, underestimate the actual effort; there is sufficient uncertainty in software development that the likelihood of consistently produce accurate estimates is low (i.e.,  is a very fuzzy quantity). Managers wanting to increase the likelihood of a High-High decision could be generous when setting deadlines (e.g., multiple developer estimates by 200%, when setting the deadline for delivery), but managers are often under pressure from customers, to specify aggressively short deadlines.

is a very fuzzy quantity). Managers wanting to increase the likelihood of a High-High decision could be generous when setting deadlines (e.g., multiple developer estimates by 200%, when setting the deadline for delivery), but managers are often under pressure from customers, to specify aggressively short deadlines.

The penalty for a developer admitting that they cannot deliver by the specified schedule,  , could be set very low (e.g., by management not taking this factor into account when deciding developer promotion/pay-rise). But this might encourage developers to always give this response. If all developers mutually agreed to cooperate, to always give this response, none of them would lose relative to the others; but there is an incentive for the more capable developers to defect, and the less capable developers to want to use this strategy.

, could be set very low (e.g., by management not taking this factor into account when deciding developer promotion/pay-rise). But this might encourage developers to always give this response. If all developers mutually agreed to cooperate, to always give this response, none of them would lose relative to the others; but there is an incentive for the more capable developers to defect, and the less capable developers to want to use this strategy.

Regular code reviews are a possible technique for motivating High-High, by increasing the likelihood of any lone Low decision being detected. A Low-Low decision may go unreported by those involved.

To summarise: an interesting analysis that appears to have no practical use, because reasonable estimates of the values of the variables involved are unavailable.

C Standard meeting, April-May 2019

I was at the ISO C language committee meeting, WG14, in London this week (apart from the few hours on Friday morning, which was scheduled to be only slightly longer than my commute to the meeting would have been).

It has been three years since the committee last met in London (the meeting was planned for Germany, but there was a hosting issue, and Germany are hosting next year), and around 20 people attended, plus 2-5 people dialing in. Some regular attendees were not in the room because of schedule conflicts; nine of those present were in London three years ago, and I had met three of those present (this week) at WG14 meetings prior to the last London meeting. I had thought that Fred Tydeman was the longest serving member in the room, but talking to Fred I found out that I was involved a few years earlier than him (our convenor is also a long-time member); Fred has attended more meeting than me, since I stopped being a regular attender 10 years ago. Tom Plum, who dialed in, has been a member from the beginning, and Larry Jones, who dialed in, predates me. There are still original committee members active on the WG14 mailing list.

Having so many relatively new meeting attendees is a good thing, in that they are likely to be keen and willing to do things; it’s also a bad thing for exactly the same reason (i.e., if it not really broken, don’t fix it).

The bulk of committee time was spent discussing the proposals contains in papers that have been submitted (listed in the agenda). The C Standard is currently being revised, WG14 are working to produce C2X. If a person wants the next version of the C Standard to support particular functionality, then they have to submit a paper specifying the desired functionality; for any proposal to have any chance of success, the interested parties need to turn up at multiple meetings, and argue for it.

There were three common patterns in the proposals discussed (none of these patterns are unique to the London meeting):

- change existing wording, based on the idea that the change will stop compilers generating code that the person making the proposal considers to be undesirable behavior. Some proposals fitting this pattern were for niche uses, with alternative solutions available. If developers don’t have the funding needed to influence the behavior of open source compilers, submitting a proposal to WG14 offers a low cost route. Unless the proposal is a compelling use case, affecting lots of developers, WG14’s incentive is to not adopt the proposal (accepting too many proposals will only encourage trolls),

- change/add wording to be compatible with C++. There are cost advantages, for vendors who have to support C and C++ products, to having the two language be as mutually consistent as possible. Embedded systems are a major market for C, but this market is not nearly as large for C++ (because of the much larger overhead required to support C++). I pointed out that WG14 needs to be careful about alienating a significant user base, by slavishly following C++; the C language needs to maintain a separate identity, for long term survival,

- add a new function to the C library, based on its existence in another standard. Why add new functions to the C library? In the case of math functions, it’s to increase the likelihood that the implementation will be correct (maths functions often have dark corners that are difficult to get right), and for string functions it’s the hope that compilers will do magic to turn a function call directly into inline code. The alternative argument is not to add any new functions, because the common cases are already covered, and everything else is niche usage.

At the 2016 London meeting Peter Sewell gave a presentation on the Cerberus group’s work on a formal definition of C; this work has resulted in various papers questioning the interpretation of wording in the standard, i.e., possible ambiguities or inconsistencies. At this meeting the submitted papers focused on pointer provenance, and I was expecting to hear about the fancy optimizations this work would enable (which would be a major selling point of any proposal). No such luck, the aim of the work was stated as clearly specifying the behavior (a worthwhile aim), with no major new optimizations being claimed (formal methods researchers often oversell their claims, Peter is at the opposite end of the spectrum and could do with an injection of some positive advertising). Clarifying behavior is a worthwhile aim, but not at the cost of major changes to existing wording. I have had plenty of experience of asking WG14 for clarification of existing (what I thought to be ambiguous) wording, only to be told that the existing wording was clear and not ambiguous (to those reviewing my proposed defect). I wonder how many of the wording ambiguities that the Cerberus group claim to have found would be accepted by WG14 as a defect that required a wording change?

Winner of the best pub quiz question: Does the C Standard require an implementation to be able to exactly represent floating-point zero? No, but it is now required in C2X. Do any existing conforming implementations not support an exact representation for floating-point zero? There are processors that use a logarithmic representation for floating-point, but I don’t know if any conforming implementation exists for such systems; all implementations I know of support an exact representation for floating-point zero. Logarithmic representation could handle zero using a special bit pattern, with cpu instructions doing the right thing when operating on this bit pattern, e.g., 0.0+X == X, (I wonder how much code would break, if the compiler mapped the literal 0.0 to the representable value nearest to zero).

Winner of the best good intentions corrupted by the real world: intmax_t, an integer type capable of representing any value of any signed integer type (i.e., a largest representable integer type). The concept of a unique largest has issues in a world that embraces diversity.

Today’s C development environment is very different from 25 years ago, let alone 40 years ago. The number of compilers in active use has decreased by almost two orders of magnitude, the number of commonly encountered distinct processors has shrunk, the number of very distinct operating systems has shrunk. While it is not a monoculture, things appear to be heading in that direction.

The relevance of WG14 decreases, as the number of independent C compilers, in widespread use, decreases.

What is the purpose of a C Standard in today’s world? If it were not already a standard, I don’t think a committee would be set up to standardize the language today.

Is the role of WG14 now, the arbiter of useful common practice across widely used compilers? Documenting decisions in revisions of the C Standard.

Work on the Cobol Standard ran for almost 60-years; WG14 has to be active for another 20-years to equal this.

Dimensional analysis of the Halstead metrics

One of the driving forces behind the Halstead complexity metrics was physics envy; the early reports by Halstead use the terms software physics and software science.

One very simple, and effective technique used by scientists and engineers to check whether an equation makes sense, is dimensional analysis. The basic idea is that when performing an operation between two variables, their measurement units must be consistent; for instance, two lengths can be added, but a length and a time cannot be added (a length can be divided by time, returning distance traveled per unit time, i.e., velocity).

Let’s run a dimensional analysis check on the Halstead equations.

The input variables to the Halstead metrics are:  , the number of distinct operators,

, the number of distinct operators,  , the number of distinct operands,

, the number of distinct operands,  , the total number of operators, and

, the total number of operators, and  , the total number of operands. These quantities can be interpreted as units of measurement in tokens.

, the total number of operands. These quantities can be interpreted as units of measurement in tokens.

The formula are:

- Program length:

There is a consistent interpretation of this equation: operators and operands are both kinds of tokens, and number of tokens can be interpreted as a length. - Calculated program length:

There is a consistent interpretation of this equation: the operand of a logarithm has to be dimensionless, and the convention is to treat the operand as a ratio (if no denominator is specified, the value 1 having the same dimensions as the numerator is taken, giving a dimensionless result), the value returned is dimensionless, which can be multiplied by a variable having any kind of dimension; so again two (token) lengths are being added. - Volume:

A volume has units of (i.e., it is created by multiplying three lengths). There is only one length in this equation; the equation is misnamed, it is a length.

(i.e., it is created by multiplying three lengths). There is only one length in this equation; the equation is misnamed, it is a length. - Difficulty:

Here the dimensions of and

and  cancel, leaving the dimensions of

cancel, leaving the dimensions of  (a length); now Halstead is interpreting length as a difficulty unit (whatever that might be).

(a length); now Halstead is interpreting length as a difficulty unit (whatever that might be). - Effort:

This equation multiplies two variables, both having a length dimension; the result should be interpreted as an area. In physics work is force times distance, and power is work per unit time; the term effort is not defined.

Halstead is claiming that a single dimension, program length, contains so much unique information that it can be used as a measure of a variety of disparate quantities.

Halstead’s colleagues at Purdue were rather damming in their analysis of these metrics. Their report Software Science Revisited: A Critical Analysis of the Theory and Its Empirical Support points out the lack of any theoretical foundation for some of the equations, that the analysis of the data was weak and that a more thorough analysis suggests theory and data don’t agree.

I pointed out in an earlier post, that people use Halstead’s metrics because everybody else does. This post is unlikely to change existing herd behavior, but it gives me another page to point people at, when people ask why I laugh at their use of these metrics.

C2X and undefined behavior

The ISO C Standard is currently being revised by WG14, to create C2X.

There is a rather nebulous clustering of people who want to stop compilers using undefined behaviors to generate what these people (and probably most other developers) consider to be very surprising code. For instance, always printing p is truep is false, when executing the code: bool p; if ( p ) printf("p is true"); if ( !p ) printf("p is false"); (possible because p is uninitialized, and accessing an uninitialized value is undefined behavior).

This sounds like a good thing; nobody wants compilers generating surprising code.

All the proposals I have seen, so far, involve doing away with constructs that can produce undefined behavior. Again, this sounds like a good thing; nobody likes undefined behaviors.

The problem is, there is a reason for labeling certain constructs as producing undefined behavior; the behavior is who-knows-what.

Now the C Standard could specify the who-knows-what behavior; for instance, it could specify that the result of dividing by zero is 42. Standard’s conforming compilers would then have to generate code to check whether the denominator was zero, and return 42 for this case (until Intel, ARM and other processor vendors ‘updated’ the behavior of their divide instructions). Way-back-when a design decision was made, the behavior of divide by zero is undefined, not 42 or any other value; this was a design decision, code efficiency and compactness was considered to be more important.

I have not seen anybody arguing that the behavior of divide by zero should be specified. But I have seen people arguing that once C’s integer representation is specified as being twos-compliment (currently it can also be ones-compliment or signed-magnitude), then arithmetic overflow becomes defined. Wrong.

Twos-compliment is a specification of a representation, not a specification of behavior. What is the behavior when the result of adding two integers cannot be represented? The result might be to wrap (the behavior expected by many developers), to saturate at the maximum value (frequently needed in image and signal processing), to raise a signal (overflow is not usually supposed to happen), or something else.

WG14 could define the behavior, for when the result of an arithmetic operation is not representable in the number of bits available. Standard’s conforming compilers targeting processors whose arithmetic instructions did not behave as required would have to generate code, for any operation that could overflow, to do what was necessary. The embedded market are heavy users of C; in this market memory is limited, and processor performance is never fast enough, the overhead of supporting a defined behavior could just be too high (a more attractive solution is to code review, to make sure the undefined behavior cannot occur).

Is there another way of addressing the issue of compiler writers’ use/misuse of undefined behavior? Yes, offer them money. Compiler writing is a business, at least at the level at which gcc and llvm operate. If people really are keen to influence the code generated by gcc and llvm, money is the solution. Wot, no money? Then stop complaining.

OSI licenses: number and survival

There is a lot of source code available which is said to be open source. One definition of open source is software that has an associated open source license. Along with promoting open source, the Open Source Initiative (OSI) has a rigorous review process for open source licenses (so they say, I have no expertise in this area), and have become the major licensing brand in this area.

Analyzing the use of licenses in source files and packages has become a niche research topic. The majority of source files don’t contain any license information, and, depending on language, many packages don’t include a license either (see Understanding the Usage, Impact, and Adoption of Non-OSI Approved Licenses). There is some evolution in license usage, i.e., changes of license terms.

I knew that a fair-few open source licenses had been created, but how many, and how long have they been in use?

I don’t know of any other work in this area, and the fastest way to get lots of information on open source licenses was to scrape the brand leader’s licensing page, using the Wayback Machine to obtain historical data. Starting in mid-2007, the OSI licensing page kept to a fixed format, making automatic extraction possible (via an awk script); there were few pages archived for 2000, 2001, and 2002, and no pages available for 2003, 2004, or 2005 (if you have any OSI license lists for these years, please send me a copy).

What do I now know?

Over the years OSI have listed 110107 different open source licenses, and currently lists 81. The actual number of license names listed, since 2000, is 205; the ‘extra’ licenses are the result of naming differences, such as the use of dashes, inclusion of a bracketed acronym (or not), license vs License, etc.

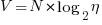

Below is the Kaplan-Meier survival curve (with 95% confidence intervals) of licenses listed on the OSI licensing page (code+data):

How many license proposals have been submitted for review, but not been approved by OSI?

Patrick Masson, from the OSI, kindly replied to my query on number of license submissions. OSI doesn’t maintain a count, and what counts as a submission might be difficult to determine (OSI recently changed the review process to give a definitive rejection; they have also started providing a monthly review status). If any reader is keen, there is an archive of mailing list discussions on license submissions; trawling these would make a good thesis project 🙂

The Algorithmic Accountability Act of 2019

The Algorithmic Accountability Act of 2019 has been introduced to the US congress for consideration.

The Act applies to “person, partnership, or corporation” with “greater than $50,000,000 … annual gross receipts”, or “possesses or controls personal information on more than— 1,000,000 consumers; or 1,000,000 consumer devices;”.

What does this Act have to say?

(1) AUTOMATED DECISION SYSTEM.—The term ‘‘automated decision system’’ means a computational process, including one derived from machine learning, statistics, or other data processing or artificial intelligence techniques, that makes a decision or facilitates human decision making, that impacts consumers.

That is all encompassing.

The following is what the Act is really all about, i.e., impact assessment.

(2) AUTOMATED DECISION SYSTEM IMPACT ASSESSMENT.—The term ‘‘automated decision system impact assessment’’ means a study evaluating an automated decision system and the automated decision system’s development process, including the design and training data of the automated decision system, for impacts on accuracy, fairness, bias, discrimination, privacy, and security that includes, at a minimum—

I think there is a typo in the following: “training, data” -> “training data”

(A) a detailed description of the automated decision system, its design, its training, data, and its purpose;

How many words are there in a “detailed description of the automated decision system”, and I’m guessing the wording has to be something a consumer might be expected to understand. It would take a book to describe most systems, but I suspect that a page or two is what the Act’s proposers have in mind.

(B) an assessment of the relative benefits and costs of the automated decision system in light of its purpose, taking into account relevant factors, including—

Whose “benefits and costs”? Is the Act requiring that companies do a cost benefit analysis of their own projects? What are the benefits to the customer, compared to a company not using such a computerized approach? The main one I can think of is that the customer gets offered a service that would probably be too expensive to offer if the analysis was done manually.

The potential costs to the customer are listed next:

(i) data minimization practices;

(ii) the duration for which personal information and the results of the automated decision system are stored;

(iii) what information about the automated decision system is available to consumers;

This act seems to be more about issues around data retention, privacy, and customers having the right to find out what data companies have about them

(iv) the extent to which consumers have access to the results of the automated decision system and may correct or object to its results; and

(v) the recipients of the results of the automated decision system;

What might the results be? Yes/No, on a load/job application decision, product recommendations are a few.

Some more potential costs to the customer:

(C) an assessment of the risks posed by the automated decision system to the privacy or security of personal information of consumers and the risks that the automated decision system may result in or contribute to inaccurate, unfair, biased, or discriminatory decisions impacting consumers; and

What is an “unfair” or “biased” decision? Machine learning finds patterns in data; when is a pattern in data considered to be unfair or biased?

In the UK, the sex discrimination act has resulted in car insurance companies not being able to offer women cheaper insurance than men (because women have less costly accidents). So the application form does not contain a gender question. But the applicants first name often provides a big clue, as to their gender. So a similar Act in the UK would require that computer-based insurance quote generation systems did not make use of information on the applicant’s first name. There is other, less reliable, information that could be used to estimate gender, e.g., height, plays sport, etc.

Lots of very hard questions to be answered here.

MI5 agent caught selling Huawei exploits on Russian hacker forums

An MI5 agent has been caught selling exploits in Huawei products, on an underground Russian hacker forum (a paper analyzing the operation of these forums; perhaps the researchers were hired as advisors). How did this news become public? A reporter heard Mr Wang Kit, a senior Huawei manager, complaining about not receiving a percentage of the exploit sale, to add to his quarterly sales report. A fair point, given that Huawei are funding a UK centre to search for vulnerabilities.

The ostensive purpose of the Huawei cyber security evaluation centre (funded by Huawei, but run by GCHQ; the UK’s signals intelligence agency), is to allay UK fears that Huawei have added back-doors to their products, that enable the Chinese government to listen in on customer communications.

If this cyber centre finds a vulnerability in a Huawei product, they may or may not tell Huawei about it. Obviously, if it’s an exploitable vulnerability, and they think that Huawei don’t know about it, they could pass the exploit along to the relevant UK government department.

If the centre decides to tell Huawei about the vulnerability, there are two good reasons to first try selling it, to shady characters of interest to the security services:

- having an exploit to sell gives the person selling it credibility (of the shady technical kind), in ecosystems the security services are trying to penetrate,

- it increases Huawei’s perception of the quality of the centre’s work; by increasing the number of exploits found by the centre, before they appear in the wild (the centre has to be careful not to sell too many exploits; assuming they manage to find more than a few). Being seen in the wild adds credibility to claims the centre makes about the importance of an exploit it discovered.

How might the centre go about calculating whether to hang onto an exploit, for UK government use, or to reveal it?

The centre’s staff could organized as two independent groups; if the same exploit is found by both groups, it is more likely to be found by other hackers, than an exploit found by just one group.

Perhaps GCHQ knows of other groups looking for Huawei exploits (e.g., the NSA in the US). Sharing information about exploits found, provides the information needed to more accurately estimate the likelihood of others discovering known exploits.

How might Huawei estimate the number of exploits MI5 are ‘selling’, before officially reporting them? Huawei probably have enough information to make a good estimate of the total number of exploits likely to exist in their products, but they also need to know the likelihood of discovering an exploit, per man-hour of effort. If Huawei have an internal team searching for exploits, they might have the data needed to estimate exploit discovery rate.

Another approach would be for Huawei to add a few exploits to the code, and then wait to see if they are used by GCHQ. In fact, if GCHQ accuse Huawei of adding a back-door to enable the Chinese government to spy on people, Huawei could claim that the code was added to check whether GCHQ was faithfully reporting all the exploits it found, and not keeping some for its own use.

The 2019 Huawei cyber security evaluation report

The UK’s Huawei cyber security evaluation centre oversight board has released it’s 2019 annual report.

The header and footer of every page contains the text “SECRET”“OFFICIAL”, which I assume is its UK government security classification. It lends an air of mystique to what is otherwise a meandering management report.

Needless to say, the report contains the usually puffery, e.g., “HCSEC continues to have world-class security researchers…”. World class at what? I hear they have some really good mathematicians, but have serious problems attracting good software engineers (such people can be paid a lot more, and get to do more interesting work, in industry; the industry demand for mathematicians, outside of finance, is weak).

The most interesting sentence appears on page 11: “The general requirement is that all staff must have Developed Vetting (DV) security clearance, …”. Developed Vetting, is the most detailed and comprehensive form of security clearance in UK government (to quote Wikipedia).

Why do the centre’s staff have to have this level of security clearance?

The Huawei source code is not that secret (it can probably be found online, lurking in the dark corners of various security bulletin boards).

Is the real purpose of this cyber security evaluation centre, to find vulnerabilities in the source code of Huawei products, that GCHQ can then use to spy on people?

Or perhaps, this centre is used for training purposes, with staff moving on to work within GCHQ, after they have learned their trade on Huawei products?

The high level of security clearance applied to the centre’s work is the perfect smoke-screen.

The report claims to have found “Several hundred vulnerabilities and issues…”; a meaningless statement, e.g., this could mean one minor vulnerability and several hundred spelling mistakes. There is no comparison of the number of vulnerabilities found per effort invested, no comparison with previous years, no classification of the seriousness of the problems found, no mention of Huawei’s response (i.e., did Huawei agree that there was a problem).

How many vulnerabilities did the centre find that were reported by other people, e.g., the National Vulnerability Database? This information would give some indication of how good a job the centre was doing. Did this evaluation centre find the Huawei vulnerability recently disclosed by Microsoft? If not, why not? And if they did, why isn’t it in the 2019 report?

What about comparing the number of vulnerabilities found in Huawei products against the number found in vendors from the US, e.g., CISCO? Obviously back-doors placed in US products, at the behest of the NSA, need not be counted.

There is some technical material, starting on page 15. The configuration and component lifecycle management issues raised, sound like good points, from a cyber security perspective. From a commercial perspective, Huawei want to quickly respond to customer demand and a dynamic market; corners are likely to be cut off good practices every now and again. I don’t understand why the use of an unnamed real-time operating system was flagged: did some techie gripe slip through management review? What is a C preprocessor macro definition doing on page 29? This smacks of an attempt to gain some hacker street-cred.

Reading between the lines, I get the feeling that Huawei has been ignoring the centre’s recommendations for changes to their software development practices. If I were on the receiving end, I would probably ignore them too. People employed to do security evaluation are hired for their ability to find problems, not for their ability to make things that work; also, I imagine many are recent graduates, with little or no practical experience, who are just repeating what they remember from their course work.

Huawei should leverage its funding of a GCHQ spy training centre, to get some positive publicity from the UK government. Huawei wants people to feel confident that they are not being spied on, when they use Huawei products. If the government refuses to play ball, Huawei should shift its funding to a non-government, open evaluation center. Employees would not need any security clearance and would be free to give their opinions about the presence of vulnerabilities and ‘spying code’ in the source code of Huawei products.

Describing software engineering in terms of a traditional science

If you were asked to describe the ‘building stuff’ side of software engineering, by comparing it with one of the traditional sciences, which science would you choose?

I think a lot of people would want to compare it with Physics. Yes, physics envy is not restricted to the softer sciences of humanities and liberal arts. Unlike physics, software engineering is not governed by a handful of simple ‘laws’, it’s a messy collection of stuff.

I used to think that biology had all the necessary important characteristics needed to explain software engineering: evolution (of code and products), species (e.g., of editors), lifespan, and creatures are built from a small set of components (i.e., DNA or language constructs).

Now I’m beginning to think that chemistry has aspects that are a better fit for some important characteristics of software engineering. Chemists can combine atoms of their choosing to create whatever molecule takes their fancy (subject to bonding constraints, a kind of syntax and semantics for chemistry), and the continuing existence of a molecule does not depend on anything outside of itself; biological creatures need to be able to extract some form of nutrient from the environment in which they live (which is also a requirement of commercial software products, but not non-commercial ones). Individuals can create molecules, but creating new creatures (apart from human babies) is still a ways off.

In chemistry and software engineering, it’s all about emergent behaviors (in biology, behavior is just too complicated to reliably say much about). In theory the properties of a molecule can be calculated from the known behavior of its constituent components (e.g., the electrons, protons and neutrons), but the equations are so complicated it’s impractical to do so (apart from the most simple of molecules; new properties of water, two atoms of hydrogen and one of oxygen, are still being discovered); the properties of programs could be deduced from the behavior its statements, but in practice it’s impractical.

What about the creative aspects of software engineering you ask? Again, chemistry is a much better fit than biology.

What about the craft aspect of software engineering? Again chemistry, or rather, alchemy.

Is there any characteristic that physics shares with software engineering? One that stands out is the ego of some of those involved. Describing, or creating, the universe nourishes large egos.

Recent Comments