Remotivating data analysed for another purpose

The motivation for fitting a regression model has a major impact on the model created. Some of the motivations include:

- practicing software developers/managers wanting to use information from previous work to help solve a current problem,

- researchers wanting to get their work published seeks to build a regression model that show they have discovered something worthy of publication,

- recent graduates looking to apply what they have learned to some data they have acquired,

- researchers wanting to understand the processes that produced the data, e.g., the author of this blog.

The analysis in the paper: An Empirical Study on Software Test Effort Estimation for Defense Projects by E. Cibir and T. E. Ayyildiz, provides a good example of how different motivations can produce different regression models. Note: I don’t know and have not been in contact with the authors of this paper.

I often remotivate data from a research paper. Most of the data in my Evidence-based Software Engineering book is remotivated. What a remotivation often lacks is access to the original developers/managers (this is often also true for the authors of the original paper). A complicated looking situation is often simplified by background knowledge that never got written down.

The following table shows the data appearing in the paper, which came from 15 projects implemented by a defense industry company certified at CMMI Level-3.

Proj Test Req Test Meetings Faulty Actual Scenarios

Plan Rev Env Scenarios Effort

Time Time

P1 144.5 1.006 85 60 100 2850 270

P2 25.5 1.001 25.5 4 5 250 40

P3 68 1.005 42.5 32 65 1966 185

P4 85 1.002 85 104 150 3750 195

P5 198 1.007 123 87 110 3854 410

P6 57 1.006 35 25 20 903 100

P7 115 1.003 92 55 56 2143 225

P8 81 1.009 156 62 72 1988 287

P9 388 1.004 150 208 553 13246 1153

P10 177 1.008 93 77 157 4012 360

P11 62 1.001 175 186 199 5017 310

P12 111 1.005 116 82 143 3994 423

P13 63 1.009 188 177 151 3914 226

P14 32 1.008 25 28 6 435 63

P15 167 1.001 177 143 510 11555 1133 |

where: TestPlanTime is the test plan creation time in hours, ReqRev is the test/requirements review of period in hours, TestEnvTime is the test environment creation time in hours, Meetings is the number of meetings, FaultyScenarios is the number of faulty test scenarios, Scenarios is the number of Scenarios, and ActualEffort is the actual software test effort.

Industrial data is hard to obtain, so well done to the authors for obtaining this data and making it public. The authors fitted a regression model to estimate software test effort, and the model that almost perfectly fits to actual effort is:

ActualEffort=3190 + 2.65*TestPlanTime

-3170*ReqRevPeriod - 3.5*TestEnvTime

+10.6*Meetings + 11.6*FaultScrenarios + 3.6*Scenarios |

My reading of this model is that having obtained the data, the authors felt the need to use all of it. I have been there and done that.

Why all those multiplication factors, you ask. Isn’t ActualTime simply the sum of all the work done? Yes it is, but the above table lists the work recorded, not the work done. The good news is that the fitted regression models shows that there is a very high correlation between the work done and the work recorded.

Is there a simpler model that can be used to explain/predict actual time?

Looking at the range of values in each column, ReqRev varies by just under 1%. Fitting a model that does not include this variable, we get (a slightly less perfect fit):

ActualEffort=100 + 2.0*TestPlanTime

- 4.3*TestEnvTime

+10.7*Meetings + 12.4*FaultScrenarios + 3.5*Scenarios |

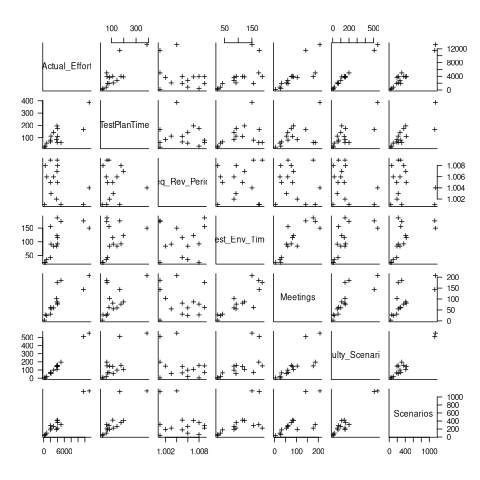

Simple plots can often highlight patterns present in a dataset. The plot below shows every column plotted against every other column (code+data):

Points forming a straight line indicate a strong correlation, and the points in the top-right ActualEffort/FaultScrenarios plot look straight. In fact, this one variable dominates the others, and fits a model that explains over 99% of the deviation in the data:

ActualEffort=550 + 22.5*FaultScrenarios |

Many of the points in the ActualEffort/Screnarios plot are on a line, and adding Meetings data to the model explains just under 99% of the variance in the data:

actualEffort=-529.5

+15.6*Meetings + 8.7*Scenarios |

Given that FaultScrenarios is a subset of Screnarios, this connectedness is not surprising. Does the number of meetings correlate with the number of new FaultScrenarios that are discovered? Questions like this can only be answered by the original developers/managers.

The original data/analysis was interesting enough to get a paper published. Is it of interest to practicing software developers/managers?

In a small company, those involved are likely to already know what these fitted models are telling them. In a large company, the left hand does not always know what the right hand is doing. A CMMI Level-3 certified defense company is unlikely to be small, so this analysis may be of interest to some of its developer/managers.

A usable estimation model is one that only relies on information available when the estimation is needed. The above models all rely on information that only becomes available, with any accuracy, way after the estimate is needed. As such, they are not practical prediction models.

Recent Comments