Benchmarking desktop PCs circa 1990

Before buying a computer, customers want to be confident that they are choosing the best they can get for the money, and performance has often been a major consideration. Computer benchmark performance results were once widely discussed.

Knight’s analysis of early mainframe performance was widely cited for many years.

Performance on the Byte benchmarks was widely cited before Intel started spending billions on advertising; clock frequency has not always had the brand recognition it has today.

The Byte benchmark was originally designed for Intel x86 processors running Microsoft DOS. It was introduced in the June 1988 issue, and was written in the still relatively new C language (earlier microprocessor benchmarks were often written in BASIC, because early micros often came with a free BASIC interpreter). It was updated in the 1990s to be Windows based, and was also implemented for Unix.

Benchmarking computers using essentially the same CPU architecture and operating system removes many complications that have to be addressed when these differ. Before Wintel wiped them out, computers from different manufacturers (and often the same manufacturer) contained completely different CPU architectures, ran different operating systems, and compilers were usually created in-house by the manufacturer (or by a university that got a large discount on its computer purchase).

The Fall 1990 issue of Byte contains tables of benchmark results from 1988-90. What can we learn from these results?

The most important takeaway from the tables is that those performing the benchmarks appreciated the importance of measuring hardware performance using the applications that customers are likely to be running on their computer, e.g., word processors, spreadsheets, databases, scientific calculations (computers were still sufficiently niche back then that scientific users were a non-trivial percentage of the market), and compiling (hackers were a large percentage of Byte’s readership).

The C benchmarks attempted to measure CPU, FPU (built-in hardware support for floating-point arrived with the 486 in April 1989; prior to that it was an add-on chip that required spending more money), Disk and Video (at the time support for color was becoming mainstream, but bundled hardware graphics support still tended to be minimal).

Running the application benchmarks takes a lot of time, and requires the necessary software (which takes time to install from floppies, the distribution technology of the day). Running the C benchmarks is much quicker and simpler.

Ideally the C benchmarks are a reliable stand-in for the application benchmarks (meaning that only the C benchmarks need be run).

Let’s fit some regression models to the measurements of the 61 systems benchmarked, all supporting hardware floating-point (code+data). Surprisingly there is no mention of such an exercise being done by the Byte staff, even though one of the scientific benchmarks included regression fitting.
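For readers who want to try this kind of exercise, here is a minimal sketch of fitting an application benchmark against two C benchmark scores using ordinary least squares. It is not the analysis behind this post: the linear model form, the variable names, and the numbers are all assumptions, and the data is synthetic (randomly generated), standing in for the Byte tables.

```python
import numpy as np

def fit_linear_model(predictors, response):
    """Ordinary least squares with an intercept.

    Returns (coefficients, r_squared); coefficients[0] is the intercept.
    """
    X = np.column_stack([np.ones(len(response)), predictors])
    coef, *_ = np.linalg.lstsq(X, response, rcond=None)
    fitted = X @ coef
    ss_res = np.sum((response - fitted) ** 2)
    ss_tot = np.sum((response - response.mean()) ** 2)
    return coef, 1.0 - ss_res / ss_tot

# Synthetic stand-in data (NOT the Byte measurements): 61 made-up systems
# whose word-processing score depends on CPU twice as strongly as on Disk.
rng = np.random.default_rng(1990)
n_systems = 61
cpu = rng.uniform(0.5, 5.0, n_systems)
disk = rng.uniform(0.5, 5.0, n_systems)
word_proc = 2.0 * cpu + 1.0 * disk + rng.normal(0.0, 0.3, n_systems)

coef, r2 = fit_linear_model(np.column_stack([cpu, disk]), word_proc)
print("intercept, CPU, Disk coefficients:", np.round(coef, 2))
print("fraction of variance explained:", round(r2, 3))
```

The returned R-squared is the "fraction of variance explained" figure; the fitted coefficients indicate how much each C benchmark contributes to the application score in this made-up dataset.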

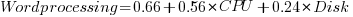

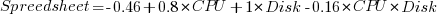

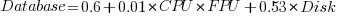

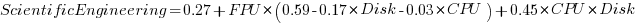

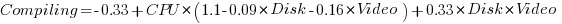

The following fitted equations explain around 90% of the variance of the data, i.e., they are good fits.

For word-processing, the CPU benchmark explains around twice as much as the Disk benchmark.

For spreadsheets, CPU and Disk contribute about the same.

Database is nearly all Disk.

Scientific/Engineering is FPU, plus interactions with other components.

Compiling is CPU, plus interactions with other components.
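An "interaction" between components means the model includes a product of two benchmark scores, so the effect of one depends on the value of the other. The sketch below shows the idea on synthetic data: the variable names, the coefficients, and the choice of an FPU x CPU product term are assumptions made for illustration, not values taken from the fitted models.

```python
import numpy as np

def r_squared(X, y):
    """Fraction of variance in y explained by a least-squares fit on X."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    residuals = y - X @ coef
    return 1.0 - np.sum(residuals ** 2) / np.sum((y - y.mean()) ** 2)

# Synthetic stand-in data (NOT the Byte measurements).
rng = np.random.default_rng(61)
n_systems = 61
fpu = rng.uniform(0.5, 5.0, n_systems)
cpu = rng.uniform(0.5, 5.0, n_systems)
# Hypothetical scientific score: an FPU effect that grows with CPU score.
sci = 1.5 * fpu + 0.4 * fpu * cpu + rng.normal(0.0, 0.3, n_systems)

ones = np.ones(n_systems)
main_effects = np.column_stack([ones, fpu, cpu])
with_interaction = np.column_stack([ones, fpu, cpu, fpu * cpu])

r2_main = r_squared(main_effects, sci)
r2_inter = r_squared(with_interaction, sci)
print("main effects only:", round(r2_main, 3))
print("with FPU x CPU interaction:", round(r2_inter, 3))
```

Comparing the two R-squared values shows how much of the variance the product term accounts for beyond the separate FPU and CPU terms.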

Byte’s benchmark reports were great eye candy, and readers probably took away a rough feel for the performance of various systems. Perhaps somebody at the time also fitted regression models to the data. The magazine contained plenty of adverts for software to do this.
