Archive

Pre-Internet era books that have not yet been bettered

It is a surprise to some that there are books written before the arrival of the Internet (say 1995) that have not yet been improved on. The list below is based on books I own; my belief that nothing better has been published on a topic may be due to ignorance on my part or personal bias. Suggestions and comments welcome.

Before the Internet the only way to find new and interesting books was to visit a large book shop. In my case these were Foyles, Dillons (both in central London) and Computer Literacy (in Silicon Valley).

Foyles was the most interesting shop to visit. Its owner was somewhat eccentric, books were grouped by publisher and within these subgroups alphabetically by author, and they stocked one of everything (many decades before Amazon’s claim to fame of stocking the long tail, but unlike Amazon they did not have more than one of the popular books). The lighting was minimal, every available space was piled with books (being tall was necessary to reach some books), and credit card payment had to be transacted through a small window in the basement, reached via creaky stairs or a 1930s lift. A visit to the computer section at Foyles, which back in the day held more computer books than any other shop I have ever visited, was an afternoon’s experience (the end result of tight-fisted management, not modern customer experience design), including the train journey home with a bundle of interesting books. Today’s Foyles has sensible lighting, a coffee shop and 10% of the computer books it used to have.

When they can be found, these golden oldies are often available for less than the cost of the postage. Sometimes there are republished versions that are cheaper/more expensive. All of the books below were originally published before 1995. I have listed the ISBN for the first edition when there is a second edition (it can be difficult to get Amazon to list first editions when later editions are available).

“Chaos and Fractals” by Peitgen, Jürgens and Saupe, ISBN 0387979034. A very enjoyable month’s reading. A second edition came out around 2004, but does not look to be that different from the 1992 version.

“The Terrible Truth About Lawyers” by Mark H. McCormack, ISBN 0002178699. Very readable explanation of how to deal with lawyers.

“Understanding Comics: The Invisible Art” by Scott McCloud. A must read for anybody interested in producing code that is easy to understand.

“C: A Reference Manual” by Samuel P. Harbison and Guy L. Steele Jr, ISBN 0-13-110008-4. Get the first edition from 1984, subsequent editions just got worse and worse.

“NTC’s New Japanese-English Character Dictionary” by Jack Halpern, ISBN 0844284343. If you love reading dictionaries you will love this.

“Data processing technology and economics” by Montgomery Phister. Technical details covering everything you ever wanted to know about the world of 1960s computers; a bit of a specialist interest, this one.

I ought to mention “Gödel, Escher, Bach” by D. Hofstadter, which I never rated but lots of other people enjoyed.

Producing software for money and/or recognition

In the commercial environment money makes the world go around, while in academia recognition (e.g., number of times your work is cited, being fawned over at conferences, impressive job titles) is the coin of the realm (there are a few oddballs who do it out of love for the subject or a desire to understand how things work, but modern academia is a large bureaucracy whose primary carrot is recognition).

There is an incentive problem for those writing software in academia; software does not attract much, if any, recognition.

Does the lack of recognition for writing software matter? Surely what counts are the research results, not the tools used to get there (be they writing software or doing mathematics).

A recent paper bemoans the lack of recognition for the development of Python packages for Astronomy researchers. Well, it’s too late now, they have written the software and everybody gets to make perfect copies for free.

What the authors of Astropy want, is for researchers who use this software to include a citation to it in any published papers. Do all 162 authors deserve equal credit? If a couple of people add a new package, should they get a separate citation? What if a new group of people take over maintenance, when should the citation switch over from the old authors/maintainers to the new ones? These are a couple of the thorny questions that need to be answered.

R is perhaps the most widely used academic developed software ecosystem. A small dedicated group of people has invested a lot of their time over many years to make something special. A lot more people have invested effort to create a wide variety of add-on packages.

The base R library includes the citation function, which returns the BibTeX information for a given package, ready to be added to a research paper’s workflow.

Both commercial and academic producers need to periodically create new versions to keep ahead of the competition, attract more customers and obtain income. While they both produce software to obtain ‘income’, commercial and academic software systems have different incentives when it comes to support for end users of the software.

Keeping existing customers happy is the way to get them to pay for upgrades and this means maintaining compatibility with what went before. Managers in commercial companies make sure that developers don’t break backwards compatibility (developers hate having to code around what went before and would love to throw it all away).

In the academic world it does not matter whether end users upgrade; as long as they cite the package, the version used is irrelevant, so there is a lot less pressure to keep backwards compatibility. Academics are supposed to create new stuff, they are researchers after all, so the incentives are pushing them to create brand new packages/systems to be seen as doing new stuff (and obtain a whole new round of citations). A good example is Hadley Wickham, who has created some great R packages and who seems to be continually moving on, e.g., reshape -> reshape2 -> tidyr (which is what any good academic is supposed to do).

The run-time performance of a system is something end users always complain about, but often get used to. The reason is invariably that there is little or no incentive to address this issue (for both commercial and academic systems). Microsoft Windows is slower than it need be and the R interpreter could go a lot faster (the design of the interpreter looks like something out of the 1980s; I’m seeing a lot of packages in R only, so the idea that R programs spend all their time executing in C/Fortran libraries may be out of date. Where is the incentive to use post-2000 designs?)

How many new versions of a software package can be produced before enough people stop being willing to pay for an update? How many different packages solving roughly the same problem can academics produce?

I don’t think producing new packages for income has a long term future.

Software architect is an illegal job title in the UK

If you are working in the UK with the job title “software architect”, or styling yourself as such, you are breaking the law. Yes, you are committing an offense under: Architects Act 1997 Part IV Section 20. In particular: “(1) A person shall not practise or carry on business under any name, style or title containing the word “architect” unless he is a person registered [F1 in Part 1 of the Register].”

The Architecture Registration Board are happy to take £142 off you, every year, for the privilege of using architect in your job title. There is also the matter of a Part 3 examination; don’t know what that is.

If you really do like the word architect in your job title and don’t want to pay £142 a year, you could move into another line of business: “(2) Subsection (1) does not prevent any use of the designation “naval architect”, “landscape architect” or “golf-course architect”.” I am assuming that the he in the wording also applies to she’s and that a sex change will not help.

Do building architects care? I suspect not. Are the police going to do anything about it? Well, if they don’t like you and are looking for some way of hauling you before the courts, the fine is not that bad.

Fortran 2008 Standard has been updated

An updated version of ISO/IEC 1539-1 Information technology — Programming languages — Fortran — Part 1: Base language has just been published. So what has JTC1/SC22/WG5 been up to?

This latest document is a bug-fix release of the 2010 standard, known as Fortran 2008 (because the ANSI Standard from which the ISO Standard was derived, sed -e "s/ANSI/ISO/g" -e "s/National/International/g", was published in 2008) and incorporates all the published corrigenda. I must have been busy in 2008, because I did not look to see what had changed.

Actually the document I am looking at is the British Standard. BSI don’t bother with sed, they just glue a BSI Standards Publication page on the front and add BS to the name, i.e., BS ISO/IEC 1539-1:2010.

The interesting stuff is in Annex B, “Deleted and obsolescent features” (the new features are Fortranized versions of language features you have probably seen elsewhere).

Programming language committees are known for issuing dire warnings that various language features are obsolescent and likely to be removed in a future revision of the standard, but actually removing anything is another matter.

Well, the Fortran committee have gone and deleted six features! Why wasn’t this on the news? Did the committee foresee the 2008 financial crisis and decide to sneak out the deletions while people were looking elsewhere?

What constructs cannot now appear in conforming Fortran programs?

- “Real and double precision DO variables. .. A similar result can be achieved by using a DO construct with no loop control and the appropriate exit test.”

What other languages call a for-loop, Fortran calls a DO loop. So loop control variables can no longer have a floating-point type.

- “Branching to an END IF statement from outside its block.”

An if-statement is terminated by the token sequence END IF, which may have an optional label. It is no longer possible to GOTO that label from outside the block of the if-statement. You are going to have to label the statement after it.

- “PAUSE statement.”

This statement dates from the days when a computer (singular, not plural) had its own air-conditioned room and a team of operators to tend its every need. A PAUSE statement would cause a message to appear on the operators’ console and somebody would be dispatched to check the printer was switched on and had paper, or some such thing, and they would then resume execution of the paused program.

I think WG5 has not seen the future here. Isn’t the PAUSE statement needed again for cloud computing? I’m sure that Amazon would be happy to quote a price for having an operator respond to a PAUSE statement.

- “ASSIGN and assigned GO TO statements and assigned format specifiers.”

No more assigning labels to variables and GOTOing them, as a means of leaping around 1,000 line functions. This modern programming practice stuff is a real killjoy.

- “H edit descriptor.”

First programmers stopped using punched cards and now the H edit descriptor has been removed from Fortran; Herman Hollerith no longer touches the life of working programmers.

In the good old days real programmers wrote 11HHello World. Using quote delimiters for string literals is for pansies.

- “Vertical format control. … There was no standard way to detect whether output to a unit resulted in this vertical format control, and no way to specify that it should be applied; this has been deleted. The effect can be achieved by post-processing a formatted file.”

Don’t panic, C still supports the \v escape sequence.

Student projects for 2016/2017

This is the time of year when students have to come up with an idea for their degree project. I thought I would suggest a few interesting ideas related to software engineering.

- The rise and fall of software engineering myths. For many years a lot of people (incorrectly) believed that there existed a 25-to-1 performance gap between the best/worst software developers (it’s actually around 5 to 1). In 1999 Lutz Prechelt wrote a report explaining how this myth came about (somebody misinterpreted values in two tables and this misinterpretation caught on to become the dominant meme).

Is the 25-to-1 myth still going strong or is it dying out? Can anything be done to replace it with something closer to reality?

One of the constants used in the COCOMO effort estimation model is badly wrong. Has anybody else noticed this?

- Software engineering papers often contain trivial mathematical mistakes; these can be caused by cut and paste errors or mistakenly using the values from one study in calculations for another study. Simple consistency checks can be used to catch a surprising number of mistakes, e.g., the quote “8 subjects aged between 18-25, average age 21.3” may be incorrect because 21.3*8 == 170.4, ages must add to a whole number and the values 169, 170 and 171 would not produce this average.

The psychologists are already on the case, e.g., Content Mining Psychology Articles for Statistical Test Results, and there is a tool, statcheck, for automating some of the checks.

What checks would be useful for software engineering papers? There are tools available for taking PDF files apart, e.g., qpdf and pdfgrep, and for extracting table contents.

- What bit manipulation algorithms does a program use? One way of finding out is to look at the hexadecimal literals in the source code. For instance, source containing 0x33333333, 0x55555555, 0x0F0F0F0F and 0x0000003F in close proximity is likely to be counting the number of bits that are set in a 32-bit value.

Jörg Arndt has a great collection of bit twiddling algorithms from which hex values can be extracted. The numbers tool used a database of floating-point values to try and figure out what numeric algorithms source contains; I’m sure there are better algorithms for figuring this stuff out, given the available data.
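As a starting point for the hex-literal idea, here is a minimal Python sketch. The function names, the 10-line window used to approximate “close proximity” and the sample C function are all my own assumptions, not taken from any existing tool.

```python
import re

# The four masks named in the text as typical of a 32-bit bit count.
POPCOUNT_MASKS = {0x33333333, 0x55555555, 0x0F0F0F0F, 0x0000003F}

# A C-style hexadecimal literal; integer suffixes such as U or L are ignored.
HEX_LITERAL = re.compile(r"\b0[xX][0-9a-fA-F]+")

def hex_literals_by_line(source):
    """Yield (line_number, value) for every hexadecimal literal in source."""
    for number, line in enumerate(source.splitlines(), start=1):
        for match in HEX_LITERAL.finditer(line):
            yield number, int(match.group(), 16)

def looks_like_popcount(source, window=10):
    """True if all four masks occur within `window` consecutive lines.

    The window size is an arbitrary assumption standing in for
    "in close proximity".
    """
    hits = [(n, v) for n, v in hex_literals_by_line(source)
            if v in POPCOUNT_MASKS]
    for start, _ in hits:
        nearby = {v for n, v in hits if start <= n < start + window}
        if nearby == POPCOUNT_MASKS:
            return True
    return False

# Hypothetical input: a common way of counting set bits in C.
sample = """
unsigned popcount(unsigned x) {
    x = x - ((x >> 1) & 0x55555555);
    x = (x & 0x33333333) + ((x >> 2) & 0x33333333);
    x = (x + (x >> 4)) & 0x0F0F0F0F;
    x = x + (x >> 8);
    x = x + (x >> 16);
    return x & 0x0000003F;
}
"""
print(looks_like_popcount(sample))
```

A project would need a much larger table of mask sets (one per algorithm) and some way of scoring partial matches; this only shows the shape of the lookup.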

Feel free to add suggestions in the comments.

Does public disclosure of vulnerabilities improve vendor response?

Does public disclosure of vulnerabilities in vendor products result in them releasing a fix more quickly, compared to when the vulnerability is only disclosed to the vendor (i.e., no public disclosure)?

A study by Arora, Krishnan, Telang and Yang investigated this question and made their data available 🙂 So what does the data have to say (it’s from the US National Vulnerability Database over the period 2001-2003)?

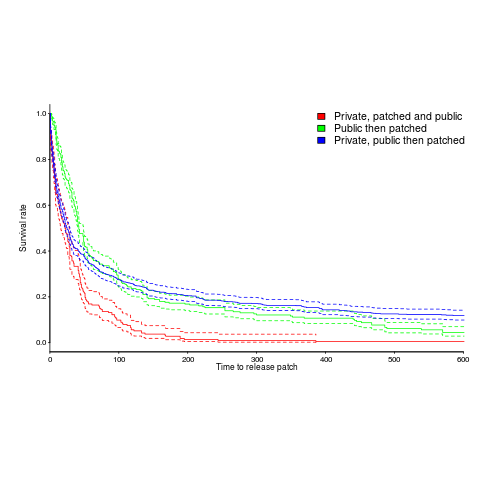

The plot below is a survival curve for disclosed vulnerabilities, the longer it takes to release a patch to fix a vulnerability, the longer it survives.

There is a popular belief that public disclosure puts pressure on vendors to release patches more quickly, compared to when the public knows nothing about the problem. Yet, the survival curve above clearly shows publicly disclosed vulnerabilities surviving longer than those only disclosed to the vendor. Is the popular belief wrong?

Digging around the data suggests a possible explanation for this pattern of behavior. Those vulnerabilities having the potential to cause severe nastiness tend not to be made public, but go down the path of private disclosure. Vendors prioritize those vulnerabilities most likely to cause the most trouble, leaving the less troublesome ones for another day.

This idea can be checked by building a regression model (assuming the necessary data is available and it is). In one way or another a lot of the data is censored (e.g., some reported vulnerabilities were not patched when the study finished); the Cox proportional hazards model can handle this (in fact, it’s the ‘standard’ technique to use for this kind of data).
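For readers who have not met survival curves or censoring before, the following is a minimal Kaplan-Meier sketch in Python. It is not the code used for the analysis (that was done in R), and the patch times are made up purely for illustration.

```python
def kaplan_meier(durations, observed):
    """Return [(time, survival_probability)] at each time a patch appeared.

    durations: days until a patch appeared, or until observation stopped
    observed:  True if the patch appeared, False if the record is censored
    """
    records = sorted(zip(durations, observed))
    at_risk = len(records)      # vulnerabilities still being followed
    survival = 1.0
    curve = []
    i = 0
    while i < len(records):
        time = records[i][0]
        events = 0              # patches released at this time
        leaving = 0             # records (patched or censored) ending now
        while i < len(records) and records[i][0] == time:
            events += records[i][1]
            leaving += 1
            i += 1
        if events:
            survival *= 1 - events / at_risk
            curve.append((time, survival))
        # Censored records drop out of the risk set without counting as events.
        at_risk -= leaving
    return curve

# Made-up data: five vulnerabilities, two still unpatched when observation ended.
patch_days = [5, 10, 10, 20, 30]
patched = [True, True, False, True, False]
for day, prob in kaplan_meier(patch_days, patched):
    print(f"day {day}: {prob:.2f} of vulnerabilities still unpatched")
```

The censored records still contribute to the number at risk up to the point they drop out, which is why they cannot simply be thrown away.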

This is a time dependent problem, some vulnerabilities start off being private and a public disclosure occurs before a patch is released, so there are some complications (see code+data for details). The first half of the output generated by R’s summary function, for the fitted model, is as follows:

    Call:
    coxph(formula = Surv(patch_days, !is_censored) ~ cluster(ID) +
        priv_di * (log(cvss_score) + y2003 + log(cvss_score):y2002) +
        opensource + y2003 + smallvendor + log(cvss_score):y2002,
        data = ISR_split)

      n= 2242, number of events= 2081

                                      coef exp(coef) se(coef) robust se       z Pr(>|z|)
    priv_di                        1.64451   5.17849  0.19398   0.17798   9.240  < 2e-16 ***
    log(cvss_score)                0.26966   1.30952  0.06735   0.07286   3.701 0.000215 ***
    y2003                          1.03408   2.81253  0.07532   0.07889  13.108  < 2e-16 ***
    opensource                     0.21613   1.24127  0.05615   0.05866   3.685 0.000229 ***
    smallvendor                   -0.21334   0.80788  0.05449   0.05371  -3.972 7.12e-05 ***
    log(cvss_score):y2002          0.31875   1.37541  0.03561   0.03975   8.019 1.11e-15 ***
    priv_di:log(cvss_score)       -0.33790   0.71327  0.10545   0.09824  -3.439 0.000583 ***
    priv_di:y2003                 -1.38276   0.25089  0.12842   0.11833 -11.686  < 2e-16 ***
    priv_di:log(cvss_score):y2002 -0.39845   0.67136  0.05927   0.05272  -7.558 4.09e-14 ***

The explanatory variable we are interested in is priv_di, which takes the value 1 when the vulnerability is privately disclosed and 0 for public disclosure. The model coefficient for this variable appears at the top of the table and is impressively large (which is consistent with popular belief), but at the bottom of the table there are interactions with other variables whose exp(coef) values are less than 1 (not consistent with popular belief). We are going to have to do some untangling.

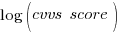

cvss_score is a score, assigned by NIST, for the severity of vulnerabilities (larger is more severe).

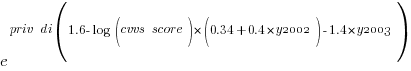

The following is the component of the fitted equation of interest:

where: priv_di is 0/1, log(cvss_score) varies between 0.8 and 2.3 (mean value 1.8), and y2002 and y2003 are 0/1 in their respective years.
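The way the priv_di terms combine can be sketched in a few lines of Python. The coefficients are copied from the rows of the summary output that involve priv_di; the function name and the choice to evaluate each year separately at the mean log(cvss_score) are my own assumptions, and this does not attempt to reproduce the hand-waving average used later.

```python
import math

# Coefficients copied from the priv_di rows of the coxph summary output.
COEF_PRIV_DI = 1.64451
COEF_PRIV_DI_LOG_CVSS = -0.33790
COEF_PRIV_DI_Y2003 = -1.38276
COEF_PRIV_DI_LOG_CVSS_Y2002 = -0.39845

def private_hazard_ratio(log_cvss, y2002=0, y2003=0):
    """Multiplier on the patch hazard when priv_di changes from 0 to 1."""
    exponent = (COEF_PRIV_DI
                + COEF_PRIV_DI_LOG_CVSS * log_cvss
                + COEF_PRIV_DI_Y2003 * y2003
                + COEF_PRIV_DI_LOG_CVSS_Y2002 * log_cvss * y2002)
    return math.exp(exponent)

# Evaluate at the mean log(cvss_score) of 1.8, one year at a time.
for year, flags in [(2001, {}), (2002, {"y2002": 1}), (2003, {"y2003": 1})]:
    ratio = private_hazard_ratio(1.8, **flags)
    print(f"{year}: hazard ratio {ratio:.2f} at mean log(cvss_score)")
```

In a Cox model a ratio above 1 means patches tend to appear sooner under private disclosure, and a ratio below 1 means they tend to appear later, so the sign of the combined exponent is what matters.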

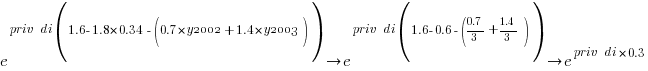

Applying hand waving to average away the variables:

gives a (hand waving mean) percentage increase of 35%, when priv_di changes from zero to one. This model is saying that, on average, patches for vulnerabilities that are privately disclosed take 35% longer to appear than when publicly disclosed.

The percentage change of patch delivery time for vulnerabilities with a low cvss_score is around 90% and for a high cvss_score is around 13% (i.e., patch time of vulnerabilities assigned a low priority improves a lot when they are publicly disclosed, but patch time for those assigned a high priority is only slightly improved).

I have not calculated 95% confidence bounds, they would be a bit over the top for the hand waving in the final part of the analysis. Also the general quality of the model is very poor; Rsquare= 0.148 is reported. A better model may change these percentages.

Has the situation changed in the 15 years since the data used for this analysis? If somebody wants to piece the necessary data together from the National Vulnerability Database, the code is ready to go (ok, some of the model variables may need updating).

Update: Just pushed a model with Rsquare= 0.231, showing a 63% longer patch time for private disclosure.
