Archive

Only compiler vendor customers, not its users, count

The hardest thing about working on compilers is getting somebody to pay you to do it (it’s a close-run race against having the cpu instructions chop and change under you during initial development, but that’s another story). The major shift in the compiler vendor business model, from proprietary to open source, has significant implications for users of compilers. Note I said user, not customer; only one of them pays money. Under the commercial model there was usually a very direct connection between compiler user and customer (even in large organizations, users rather than the manager who makes the purchase decision are often regarded by vendors as the customer), while under the Open source model most users are not customers (paying money for a distribution does not make you a customer of the people maintaining the compiler, who probably don’t receive any of the money you spent).

Like all good businesses, compiler vendors don’t want to make their customers unhappy. There is one way guaranteed to make all customers so unhappy that they will remember the experience for years: ship a new compiler release that breaks their existing code (this usually happens because there is a previously undetected bug in the code, or because use is being made of an implementation-defined/undefined part of the language {the compiler gets to decide what to do when it encounters such code}). Not breaking existing customer code is priority ONE in any commercial compiler development group.

Proprietary vendors have so many customers that it is almost impossible for them to know in advance what changes will break existing code, and the only option is to be ultra conservative about adding new code optimizations (new optimizations can so easily change how source containing undefined behavior is processed). Ultra conservative is the polite term; management paranoia would be more accurate. There is another advantage to vendors in not breaking their customers’ code: they are protected against competition from new market entrants. A new vendor with a shiny go-faster compiler, doing all the optimizations the existing vendor was not willing to do in case they broke existing code, will quickly find out that the performance improvements they offer are rarely big enough to tempt potential customers to switch compilers. Really, the only time companies switch compiler is when they have to port to a new platform to make a sale, or their existing vendor goes bust.

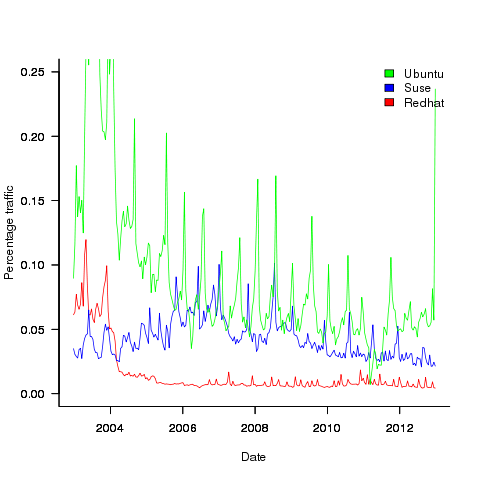

Open source vendors (e.g., those commercially involved in support/maintenance of gcc or llvm) have relatively few customers (e.g., big companies paying them lots of money for specific reasons) and, as always, these customers want existing code to continue to work. If the customer is paying for a code generator for a previously unsupported processor then there probably isn’t any existing code for that processor; it is a fact of life that porting source to a new processor always involves work. Some Linux distributors (e.g., SUSE and Red Hat) are customers in the sense that they pay the salary of developers who spend a lot of their time on compiler maintenance/upgrades, and who presumably work to ensure that the code in their respective Linux distributions does not get broken.

Compiler users who are not customers don’t count on the code breakage front (well, they count for very, very little; if an update broke lots of different developers’ code and enough fuss was made, there might well be an update that unbroke the previous one).

What can a user do if code that used to work OK is broken when compiled with a later version of the compiler? The obvious answer is to continue using the older version that produces the desired behavior; fixing the code causing the problem is a better answer (but might involve a lot of work). There is no point in flaming the compiler developers, since you are not contributing towards their upkeep; Open source does not give users the consideration that a customer enjoys.

US DoD software development data now available

I found a huge resource of software development data last weekend at the Defense Cost and Resource Center (DCARC). The Software Resource Data Report part of this resource contains information on around 2,000 major software development projects (any US DoD project over $20 million), giving details of schedule, developer experience, money spent per year, lines of code, amount of code change, hours spent at various stages of development and a whole lot more.

The catch? The raw data is only available to DoD analysts 🙁 I was a bit surprised that laws got passed mandating the collection of this kind of information and a lot less surprised that the DoD don’t want to make detailed development information for missile systems, radar installations, etc available to some interested parties; those of us who are not going to go out and build such systems are collateral damage.

What is the US government’s reason for requiring the collection and dissemination of this information? They want to reduce the huge amount of money currently being spent on the software development component of military systems (often a very large slice of the total project costs). Will having this data available reduce costs? It will certainly get project managers a lot more worried about project cost/time overruns if they know that lots of people outside the project are going to see their ‘failure’.

Hopefully there are Open data activists in the US who will push for a redacted form of the software data being made available to all interested parties, rather like that provided by the USA Spending site. In the meantime there are a few lucky DoD analysts who have gone from famine to feast and are probably having trouble figuring out where to start.

Update

The military like to rename things, and move stuff around. We now have: Cost Assessment Data Enterprise, with its software data.

Programs spend a lot of time repeating themselves

Inexperienced software developers are always surprised that programs used by lots of people can contain many apparently non-trivial faults and yet continue to operate satisfactorily; experienced developers become familiar with this state of affairs and tend to shrug their shoulders. I have previously written about how software is remarkably fault tolerant. I think this fault tolerance is telling us something important about the characteristics of software, and while I have some ideas about what it might be, I don’t yet have a good enough handle (or data) on what is going on to lay out my argument.

In this article I’m going to talk about another characteristic of program execution which I think is connected to program fault tolerance and is also very surprising.

Software differs from hardware in that for a given set of inputs a program will always produce the same output; it will not wear out like hardware and eventually do something different (to simplify things I’m ignoring the possible consequences of uninitialized variables and treating any timing dependencies as part of the input set). So for a fault to be observed, a different input is required (assuming a fault exists and none appeared for the first input set).

I used to assume that during a program’s execution the basic cpu operations (e.g., binary arithmetic and bitwise operations) processed a huge number of different combinations of input values (e.g., there are 2^32 combinations of input value for a 16-bit add operation) and was very surprised to find out this is not the case. For many programs around 80% of all executed instructions are repeat instructions; that is, a given instruction, such as add, operates on the same combination of input values that it has previously operated on (while executing the program) to generate an output value that is identical to the one previously generated from these input values. If we count the number of static instructions in the program (i.e., the number of assembly instructions in a listing of the disassembled executable program) then 20% of them account for 90% of the repeated instructions; so a small amount of code (i.e., 20%) is not only responsible for most dynamically executed instructions but around 72% (i.e., 80%*90%) of these instructions repeat previous computations. If a large percentage of what goes on internally within a program is repetition, is it any surprise that once it works for a reasonable set of inputs it will probably work on other inputs?
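To make the idea of a repeat instruction concrete, here is a minimal Python sketch. Everything in it is made up for illustration: the trace format (code location, opcode and two input values), the `toy_trace` program and the function names are my assumptions, not the tooling used to obtain the published measurements.

```python
from collections import Counter

def toy_trace(data):
    """Hypothetical trace of a loop that sums the low 4 bits of each byte.

    Each entry is (pc, opcode, input_a, input_b); pc stands in for the
    address of a static instruction.
    """
    trace = []
    total = 0
    for byte in data:
        trace.append((0, "and", byte, 0x0F))   # inputs depend only on the byte
        low = byte & 0x0F
        trace.append((1, "add", total, low))   # inputs depend on running total
        total += low
    return trace

def repeat_stats(trace):
    """Return (fraction of dynamic instructions that are repeats,
    count of repeats per static instruction)."""
    if not trace:
        return 0.0, Counter()
    seen = set()
    repeats_by_pc = Counter()
    for entry in trace:
        if entry in seen:
            # Same instruction, same inputs as before: a repeat.
            repeats_by_pc[entry[0]] += 1
        else:
            seen.add(entry)
    return sum(repeats_by_pc.values()) / len(trace), repeats_by_pc

fraction, by_pc = repeat_stats(toy_trace(b"abab"))
print(fraction, dict(by_pc))
```

In this toy case all the repeats come from the one static instruction whose inputs depend only on the (repetitive) data, which is a very small-scale version of a few static instructions accounting for most of the repetition.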


Hang on, you say, perhaps the percentage of repeat instructions is very high for a given set of external input values (e.g., a file to compress, compile or display as a jpeg) but there is a lot of variation in the set of repeat instructions between different external inputs. Measurements suggest this is not the case, with around 20% of dynamic instructions having input values that can be traced to external program input (12-30% come from globally initialized variables and the rest are generated internally).

There is a technical detail that reduces the repeat instruction percentages given above by a factor of two. Researchers always like to give the most favorable numbers, and for this discussion we need to make a distinction between local repetition, which counts one instruction and its inputs/outputs at a particular point in the code, and global repetition, which counts all instructions of a given kind irrespective of where they occur in the code. A discussion of fault behavior needs to look at local repetition, not global repetition; there is a factor of two difference in the dynamic percentage and some reduction in the percentage of static instructions involved.
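The local/global distinction comes down to whether the code location is part of what has to match. A small Python sketch, using a hypothetical hand-written trace of (pc, opcode, input, input) tuples rather than real measurement data:

```python
def repetition(trace, local):
    """Fraction of trace entries that repeat an earlier entry.

    local=True:  the same instruction at the same code location (pc)
                 must have seen the same inputs before.
    local=False: any instruction of the same kind, anywhere in the
                 code, having seen the same inputs counts as a repeat.
    """
    seen = set()
    repeats = 0
    for pc, opcode, a, b in trace:
        key = (pc, opcode, a, b) if local else (opcode, a, b)
        if key in seen:
            repeats += 1
        else:
            seen.add(key)
    return repeats / len(trace)

# Hypothetical trace: two different add instructions (pc 0 and pc 1).
trace = [
    (0, "add", 1, 2),
    (1, "add", 1, 2),   # same inputs, different location
    (0, "add", 1, 2),   # same inputs, same location as the first entry
    (1, "add", 3, 4),
]
print(repetition(trace, local=True), repetition(trace, local=False))
```

Global repetition can never be lower than local repetition, since every local repeat is also a global one; the trace here was chosen so the gap happens to be a factor of two.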

Sometimes the term redundant computation is used, as if the cpu should remember what happened last time it executed an instruction with a particular set of inputs and reuse the answer it got last time. Researchers have proposed caching the results of executing an instruction with a given set of input values and speeding things up or saving power by reusing previous results rather than recalculating them (a possible speedup of 13% on SPEC95 is claimed for a reuse buffer containing 4096 entries).
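A software analogy of such a reuse buffer can be sketched in a few lines of Python. The 4096-entry capacity is the figure quoted above; the `ReuseBuffer` class, its lookup key (code location plus input values) and the least-recently-used eviction policy are my assumptions for illustration, not a description of the proposed hardware.

```python
from collections import OrderedDict
import operator

class ReuseBuffer:
    """Toy model of an instruction reuse buffer with LRU eviction (assumed policy)."""

    def __init__(self, capacity=4096):
        self.capacity = capacity
        self.entries = OrderedDict()   # (pc, a, b) -> previously computed result
        self.hits = 0
        self.misses = 0

    def execute(self, pc, op, a, b):
        key = (pc, a, b)
        if key in self.entries:
            # Repeat: reuse the earlier result instead of recomputing it.
            self.entries.move_to_end(key)
            self.hits += 1
            return self.entries[key]
        self.misses += 1
        result = op(a, b)
        self.entries[key] = result
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)   # evict least recently used entry
        return result

buf = ReuseBuffer()
for byte in b"abracadabra":
    buf.execute(0, operator.and_, byte, 0x0F)
print(buf.hits, buf.misses)
```

The hit rate of such a buffer is one way of measuring how much local repetition a trace contains that is close enough together in time to be exploited.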

So a small percentage of the instructions in a program account for most of the execution time (a generally known characteristic) and around 30% of the executed instructions operate on input values they have processed before to produce output they have produced before (to the extent that a cache containing a few thousand entries is big enough to hold a large percentage of the duplicates). If encountering a new fault requires different execution behavior to occur, then having a large percentage of a program always doing the same thing (i.e., same input values, same output value) will have a significant impact on the likelihood of encountering a fault. Part of the reason programs are fault tolerant is that external input values don’t have as big an impact on program behavior as we might have thought.

Researchers have also investigated repeats involving units larger than one instruction, such as sub-blocks (a sequence of instructions smaller than a basic block) and even complete functions or just the mathematical ones.

The raw data is obtained using cpu simulators to monitor programs as they are executed, logging the values read as input by an instruction and the value generated as output (in most cases the values are read from registers and written to a register). A single study might log billions of instructions from the SPEC benchmark.
