Archive

Parsing R code: Freedom of expression is not always a good idea

With my growing interest in R it was inevitable that I would end up writing a parser for it. The fact that the language is relatively small (the add-on packages do the serious work) hastened the event because it did not look like much work; famous last words. I knew that R’s design and implementation were strongly influenced by the world view of functional programming and this should have set alarm bells ringing; this community has a history of willfully ignoring some of the undesirable consequences of its penchant for taking simple ideas and overgeneralizing them (i.e., I should have expected hidden complications).

While the official R language definition only contains a tiny fraction of the information needed to create a full implementation I decided to use it rather than ‘cheat’ and look at the R project implementation sources. I took as my oracle of correctness the source code of the substantial amount of R in its 3,000+ package library. This approach would help me uncover some of the incorrect preconceived ideas I have about how R source fits together.

I started with a C lexer and chopped and changed (it is difficult to do decent error recovery in automatically generated lexers and I prefer to avoid them). A few surprises cropped up: ** is supported as an undocumented form of ^, and by default ]] must be treated as two tokens (e.g., two ] in a[b[c]] but one ]] in d[[e]], an exception to the very commonly used maximal munch rule).
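The ]] exception can be sketched with a lexer that remembers which opening bracket is still pending. This is a minimal illustration under my own assumptions (the `TOKEN` pattern and the `tokenize` helper are hypothetical and cover only names, integers and brackets); it is not how the R project lexer is written.

```python
import re

# Hypothetical token pattern: names, integers, then '[[' before '[' (maximal
# munch for openers) and a single ']' (closers are paired up by hand below).
TOKEN = re.compile(r"\s*([A-Za-z_.][A-Za-z0-9_.]*|\d+|\[\[|\[|\])")

def tokenize(src):
    """Split src into tokens, emitting ']]' only when it closes a '[['."""
    tokens, open_brackets, pos = [], [], 0
    src = src.rstrip()
    while pos < len(src):
        m = TOKEN.match(src, pos)
        if m is None:
            raise SyntaxError(f"unexpected character at offset {pos}")
        tok = m.group(1)
        pos = m.end()
        if tok in ("[", "[["):
            open_brackets.append(tok)
        elif tok == "]":
            if not open_brackets:
                raise SyntaxError("unmatched ]")
            if open_brackets.pop() == "[[":
                # A '[[' has to be closed by ']]', so consume the second ']'.
                if not src.startswith("]", pos):
                    raise SyntaxError("expected ]] to close [[")
                pos += 1
                tok = "]]"
        tokens.append(tok)
    return tokens

print(tokenize("a[b[c]]"))  # ['a', '[', 'b', '[', 'c', ']', ']']
print(tokenize("d[[e]]"))   # ['d', '[[', 'e', ']]']
```

The stack of pending openers is what replaces maximal munch here: the same two characters produce one token or two depending on what was opened earlier.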

The R grammar is all about expressions with some statement bits and pieces thrown in. R operator precedence follows that of Fortran, except the precedence of unary plus/minus has been increased to be above multiply/divide (instead of below). Easy peasy, cut and paste an existing expression grammar and done by tea time :-). Several tea times later I have a grammar that parses all of the R packages (apart from 80+ real syntax errors contained therein and a handful of kinky operator combinations I’m not willing to contort the grammar to support). So what happened?

Two factors account for most of the difference between my estimate of the work required and the actual work required:

- my belief that a well written grammar has no ambiguities (while zero is a common goal for many projects a handful might be acceptable if the building is on fire and I have to leave). A major advantage of automatic generation of parser tables from a grammar specification is being warned about ambiguities in the grammar (either shift/reduce or reduce/reduce conflicts). At an early stage I was pulling my hair out over having 59 conflicts and decided to relent and look at the R project source and was amazed to find their grammar has 81 ambiguities!

I have managed to get the number of ambiguities down to the mid-30s, not good at all but it will have to do for the time being.

- some of my preconceptions about how R syntax worked were seriously wrong. In some cases I spotted my mistake quickly because I recognized the behavior from other languages I know; other misconceptions took a lot longer to understand and handle because I did not believe anybody would design expression evaluation to work that way.

The root cause of the difference can more concretely be traced to the approach to specifying language syntax. The R project grammar is written using the form commonly seen in functional language implementations and introductory compiler books. This form has the advantage of being very short and apparently simple; the following is a cut down example in a form of BNF used by many parser generators:

    expr : expr op expr
         | IDENTIFIER
         ;

    op   : '&' | '==' | '>' | '+' | '-' | '*' | '/' | '^'
         ;

This specifies a sequence of IDENTIFIERs separated by binary operators and is ambiguous when the expression contains more than two operators, e.g., a + b * c can be parsed in more than one way. Parser generators such as Yacc will complain and flag any ambiguity and pick one of the possibilities as the default behavior for handling a given ambiguity; developers can specify additional grammar information in the file read by Yacc to guide its behavior when deciding how to resolve specific ambiguities. For instance, the relative precedence of operators can be specified and this information would be used by Yacc to decide that the ambiguous expression a + b * c should be parsed as-if it had been written like a + (b * c) rather than like (a + b) * c. The R project grammar is short, highly ambiguous and relies on the information contained in the explicitly specified relative operator precedence and associativity directives to resolve the ambiguities.
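To make the ambiguity concrete, the following Python sketch enumerates every way the rule expr : expr op expr | IDENTIFIER can group a token sequence. The `parses` function is my own illustration (not part of Yacc or of the R sources) and assumes the input is already split into alternating identifiers and operators.

```python
def parses(tokens):
    """Return every fully bracketed reading of IDENT (op IDENT)* under
    the ambiguous rule  expr : expr op expr | IDENTIFIER."""
    if len(tokens) == 1:
        return [tokens[0]]
    results = []
    # Operators sit at the odd indices; try each one as the root of the tree.
    for i in range(1, len(tokens), 2):
        for left in parses(tokens[:i]):
            for right in parses(tokens[i + 1:]):
                results.append(f"({left} {tokens[i]} {right})")
    return results

two_ops = parses("a + b * c".split())
three_ops = parses("a + b * c - d".split())
print(two_ops)         # ['(a + (b * c))', '((a + b) * c)']
print(len(three_ops))  # 5
```

The number of readings grows quickly with the number of operators, which is why a grammar written this way leans so heavily on the precedence and associativity directives.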

An alternative method of specifying the grammar is to have a separate list of grammar rules for each level of precedence (I always use this approach). In this approach there is no ambiguity; the precedence and associativity are implicitly specified by how the grammar is written. Obviously this approach creates much longer grammars; there will be at least two rules for every precedence level (19 in R, many with multiple operators). The following is a cut down example containing just multiply, divide, add and subtract:

    ...
    multiplicative_expression:
          cast_expression
        | multiplicative_expression '*' cast_expression
        | multiplicative_expression '/' cast_expression
        ;

    additive_expression:
          multiplicative_expression
        | additive_expression '+' multiplicative_expression
        | additive_expression '-' multiplicative_expression
        ;
    ...

The advantages of this approach are that, because there are no ambiguities, the developer can see exactly how the grammar behaves and if an ambiguity is accidentally introduced when editing the source it should be noticed when the parser generator reports a problem where previously there were none (rather than the new ambiguity being hidden in the barrage of existing ones that are ignored because they are so numerous).
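The one-rule-set-per-precedence-level style maps directly onto a hand-written recursive descent parser, with one function per level. The Python sketch below is an illustration only: the `Parser` class is hypothetical, `primary` stands in for cast_expression, and the left-recursive rules are written as loops, which keeps the same left associativity.

```python
import re

def tokenize(src):
    # Names, integers, the four operators and parentheses.
    return re.findall(r"[A-Za-z_]\w*|\d+|[-+*/()]", src)

class Parser:
    def __init__(self, src):
        self.toks = tokenize(src)
        self.pos = 0

    def peek(self):
        return self.toks[self.pos] if self.pos < len(self.toks) else None

    def next(self):
        tok = self.peek()
        self.pos += 1
        return tok

    def primary(self):
        tok = self.next()
        if tok == "(":
            inner = self.additive()
            if self.next() != ")":
                raise SyntaxError("expected )")
            return inner
        if tok is None or tok in ("+", "-", "*", "/", ")"):
            raise SyntaxError(f"unexpected token {tok!r}")
        return tok

    def multiplicative(self):
        # multiplicative_expression: operands are one level up (primary).
        left = self.primary()
        while self.peek() in ("*", "/"):
            op = self.next()
            left = f"({left} {op} {self.primary()})"
        return left

    def additive(self):
        # additive_expression: operands are multiplicative expressions.
        left = self.multiplicative()
        while self.peek() in ("+", "-"):
            op = self.next()
            left = f"({left} {op} {self.multiplicative()})"
        return left

def parse(src):
    parser = Parser(src)
    tree = parser.additive()
    if parser.peek() is not None:
        raise SyntaxError(f"unexpected token {parser.peek()!r}")
    return tree

print(parse("a + b * c"))  # (a + (b * c))
print(parse("8 / 2 / 2"))  # ((8 / 2) / 2)
```

Nothing outside the functions decides precedence: multiply binds tighter than add simply because `additive` calls `multiplicative` for its operands.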

My first big misconception about R syntax was to think that R had statements, it doesn’t. What other languages would treat as statements R always treats as expressions. The if, for and while constructs have values (e.g., 2*(if (x == y) 2 else 4)). No problem, I used Algol 68 as an undergraduate, which supports this kind of usage. I assumed that when an if appeared as an operand in an expression it would have to be bracketed using () or {} to avoid creating a substantial number of parsing ambiguities; WRONG. No brackets need be specified, the R expression if (x == y) 2 else 4+1 is ambiguous (it could be treated as-if it had been written if (x == y) 2 else (4+1) or (if (x == y) 2 else 4)+1) and the R project grammar relies on its precedence specification to resolve the conflict (in favor of the first possibility listed above).

My next big surprise came from the handling of unary operators. Most modern languages give all unary operators the same precedence, generally higher than any binary operator. Following Fortran the R unary operators have a variety of different precedence levels; however R did not adopt the restrictions Fortran places on where unary operators can occur.

I assumed that R had adopted the restrictions used by other languages containing unary operators at different precedence levels, e.g., not allowing a unary operator token to follow a binary operator token (i.e., there has to be an intervening opening parenthesis); WRONG. R allows me to write 1 == !1 < 0, while Fortran (and Ada, etc) require that a parenthesis be inserted between the operands == ! (hopefully resulting in the intent being clearer).

I had to think a bit to come up with an explicit set of grammar rules to handle the R unary operators' freedom of expression (without creating any ambiguities).

Stepping back from the details. My view is that programming language syntax should be designed to reduce the number of mistakes developers make. Requiring that brackets appear in certain contexts helps prevent mistakes by the original author and subsequent readers of the code.

Claims that R (or any other language) syntax is 'natural' are clearly spurious and really no more than a statement of preference by the speaker. Our DNA has not yet been found to equip us to handle one programming language better than another.

Over the coming months I hope to have the time to analyse R source looking for faults that might not have occurred had brackets been used. Also how much code might be broken if R started to require brackets in certain contexts?

An example of the difference that brackets can make is provided by the handling of the unary ! operator in R and C/C++/Java/etc. Take the expression !x > y, which R parses as-if written !(x > y) and C/C++/Java/etc as if written (!x) > y. I would not claim that either is better than the other from the point of view of developers getting the behavior right, I know that some C programmers get it wrong and I suspect that some R programmers do too.
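Python happens to give its `not` operator a lower precedence than the comparison operators, so it can stand in for the R reading of this expression (this is a statement about Python, used purely as an illustration; I have not run the R or C equivalents here). The sketch below inspects the parse tree and then evaluates both readings for values where they disagree.

```python
import ast

expr = "not x > y"
root = ast.parse(expr, mode="eval").body

# The root of the tree is the unary operator and its operand is the
# comparison, i.e. the expression is read as  not (x > y).
root_is_not = isinstance(root, ast.UnaryOp) and isinstance(root.op, ast.Not)
operand_is_comparison = isinstance(root.operand, ast.Compare)

x, y = 0, 1
r_style = not (x > y)  # the grouping R uses for !x > y
c_style = (not x) > y  # the grouping C/C++/Java use for !x > y
default_reading = eval(expr, {"x": x, "y": y})

print(r_style, c_style, default_reading)  # True False True
```

With x = 0 and y = 1 the two groupings give different answers, which is exactly the kind of silent disagreement that explicit brackets would remove.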

By increasing the precedence of unary plus/minus the R designers ensured that 8/-2/2 was parsed like (8/-2)/2 rather than 8/(-2/2).

Number of possible different one line programs

Writing one line programs is a popular activity in some programming languages (e.g., awk and Perl). How many different one line programs is it possible to write?

First we need to get some idea of the maximum number of characters that can be written on one line. Microsoft Windows XP or later has a maximum command line length of 8191 characters, while Windows 2000 and Windows NT 4.0 have a 2047 limit. POSIX requires that _POSIX2_LINE_MAX have a value of at least 2048.

In 2048 characters it is possible to assign values to and use at least once 100 different variables (e.g., a1=2;a2=2.3;....; print a1+a2*a3...). To get a lower bound let's consider the number of different expressions it is possible to write. How many functionally different expressions containing 100 binary operators are there?

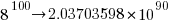

If a language has, say, eight binary operators (e.g., +, -, *, /, %, &, |, ^), then it is possible to write $8^{100} \approx 2 \times 10^{90}$ visually different expressions containing 100 binary operators. Some of these expressions will be mathematically equivalent (adopting the convention of leaving out the operands), e.g., + * can also be written as * + (the appropriate operands will also have to be switched around).

If we just consider expressions created using the commutative operators (i.e., +, *, &, |, ^), then with these five operators it is possible to write 1170671511684728695563295535920396 mathematically different expressions containing 100 operators (assuming the common case that the five operators have different precedence levels, which means the different expressions have a one to one mapping to a rooted tree of height five); this, roughly $1.2 \times 10^{33}$, is a lot smaller than $5^{100} \approx 7.9 \times 10^{69}$.
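The sizes involved are easy to check with arbitrary precision integers. The sketch below assumes that "visually different" means any of the available operators may appear in each of the 100 operator positions; the variable names are mine, and the count of mathematically different expressions is simply copied from the text rather than recomputed.

```python
# Any of eight operators in each of 100 positions.
visually_different = 8 ** 100

# The same count when only the five commutative operators are used.
five_operator_sequences = 5 ** 100

# Figure quoted in the text for mathematically different expressions.
mathematically_different = 1170671511684728695563295535920396

print(f"{visually_different:.2e}")        # 2.04e+90
print(f"{five_operator_sequences:.2e}")   # 7.89e+69
print(f"{mathematically_different:.2e}")  # 1.17e+33
```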

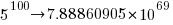

Had the approximately  computers/smart phones in the world generated expressions at the rate of

computers/smart phones in the world generated expressions at the rate of  per second since the start of the Universe,

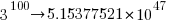

per second since the start of the Universe,  seconds ago, then the

seconds ago, then the  created so far would be almost half of the total possible.

created so far would be almost half of the total possible.

Once we start including the non-commutative operators such as minus and divide the number of possible combinations really starts to climb and the calculation of the totals is really complicated. Since the Universe is not yet half way through the commutative operators I will leave working this total out for another day.

Update (later in the day)

To get some idea of the huge jump in number of functionally different expressions that occurs when operator ordering is significant, with just the three operators -, / and % it is possible to create … mathematically different expressions. This is a factor of … greater than generated by the five operators considered above.

If we consider expressions containing just one instance of the five commutative operators then the number of expressions jumps by another two orders of magnitude to … . This count will continue to increase for a while as more commutative operators are added and then start to decline; I have not yet worked things through to find the maxima.

Update (April 2012).

Sequence A140606 in the On-Line Encyclopedia of Integer Sequences lists the number of inequivalent expressions involving n operands, whose first few values are: 1, 6, 68, 1170, 27142, 793002, 27914126, 1150212810, 54326011414, 2894532443154, 171800282010062, 11243812043430330, 804596872359480358, 62506696942427106498, 5239819196582605428254, 471480120474696200252970, 45328694990444455796547766, 4637556923393331549190920306

Birth month for compiler writers

Today is my birthday and an event from a long ago project springs to mind. All four of us from the UK arm of the team were born in February, one person on the same day as me (Happy Birthday Mick, wherever you are). This clustering of birth months led us to the obvious conclusion that the best compiler writers are born in February. Over the years I have retold this story to other compiler writers and found out their birth month. Now I will try and be a bit more scientific and have set up a survey (see below).

What counts as a compiler and what does somebody have to do to be considered a compiler writer (let's stay away from the issue of quality)? I would include software that performs computer language translation to another language (i.e., not just intermediate code or assembler) and static analysis of source provided it involved a lot of semantics (i.e., not working on the GUI that presents the data). I would exclude writing test cases, documentation, project management and maintenance (i.e., only fixing faults and dealing with customer queries).

I would classify a compiler writer as somebody who spent a substantial amount of their time working almost exclusively on writing a compiler. How substantial? Well, I think it ought to be possible to do something useful in about 4 months (I thought about saying 6 months, but decided to be generous).

Please take part, even if you do not consider yourself to be a compiler writer. A control group is always useful (perhaps readers of this blog have a preferred birth month).

I will make the numbers available and discuss them in a future article (probably in March).

[SURVEYS 1]

If anybody else is interested in running a survey, the surveys WordPress plugin allows more than one question to be specified and worked better than the other popular plugins for me (there is one bug that needs to be fixed: show_survey.php, line 51 should be:

$email_body = t("Hi,\nThere is a new result for the survey at %s...\n", $_SERVER['REQUEST_URI']); ).

February 2012 news in the programming language standards world

Yesterday I was at the British Standards Institute for a meeting of the programming languages committee. Some highlights and commentary:

- The first Technical Corrigendum (bug fixes, 47 of them) for Fortran 2008 was approved.

- The Lisp Standard working group was shut down, through a long-standing lack of people interested in taking part; this happened at the last SC22 meeting, the UK does not have such sole authority.

- WG14 (C Standard) has requested permission to start a new work item to create a new annex to the standard containing a Secure Coding Standard. Isn’t this the area of expertise of WG23 (Language vulnerabilities)? Well, yes; but when the US Department of Homeland Security is throwing money at cyber security increasing the number of standards’ groups working on the topic creates more billable hours for consultants.

- WG21 (C++ Standard) had 73 people at their five day meeting last week (ok, it was in Hawaii). Having just published a 1,300+ page Standard which no compiler yet comes close to implementing they are going full steam ahead creating new features for a revised standard they aim to publish in 2017. Does the “Hear about the upcoming features in C++” blogging/speaker circuit/consulting gravy train have that much life left in it? We will see.

The BSI building has new lifts (elevators in the US). To recap, lifts used to work by pressing a button to indicate a desire to change floors, a lift would arrive, and once inside one or more people needed to press buttons specifying destination floor(s). Now the destination floor has to be specified in advance, a lift arrives and by the time you have figured out there are no buttons to press on the inside of the lift the doors open at the desired floor. What programming language most closely mimics this new behavior?

Mimicking most languages of the last twenty years the ground floor is zero (I could not find any way to enter a G). This rules out a few languages, such as Fortran and R.

A lift might be thought of as a function that can be called to change floors. The floor has to be specified in advance and cannot be changed once in the lift; partial specialization of functions and the lambda calculus spring to mind.

In a language I just invented:

    // The lift specified a maximum of 8 people
    lift = function(p_1, p_2="", p_3="", p_4="", p_5="", p_6="", p_7="", p_8="") {...}

    // Meeting was on the fifth floor
    first_passenger_5th_floor = function lift(5);
    second_passenger_4th_floor = function first_passenger_5th_floor(4);

the body of the function second_passenger_4th_floor is a copy of the body of lift with all the instances of p_1 and p_2 replaced by 5 and 4 respectively.

Few languages have this kind of functionality. The one that most obviously springs to mind is Lisp (partial specialization of function templates in C++ does not count because they are templates that are still in need of an instantiation). So the ghost of the Lisp working group lives on at BSI in their lifts.
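The fixing of destination floors in advance can be loosely approximated in Python with `functools.partial`. This is only a sketch: `partial` binds the leading arguments and calls the original function later, it does not create a copy of the body with the parameters substituted, and the body given to `lift` here (returning the requested floors) is my own invention.

```python
from functools import partial

def lift(p_1, p_2="", p_3="", p_4="", p_5="", p_6="", p_7="", p_8=""):
    """Return the floors requested, in the order the passengers chose them."""
    requested = (p_1, p_2, p_3, p_4, p_5, p_6, p_7, p_8)
    return [floor for floor in requested if floor != ""]

# Meeting was on the fifth floor.
first_passenger_5th_floor = partial(lift, 5)
second_passenger_4th_floor = partial(first_passenger_5th_floor, 4)

# By the time anybody is inside the lift there is nothing left to specify.
print(second_passenger_4th_floor())  # [5, 4]
```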

O Cobol, Cobol! wherefore art thou Cobol?

Programming language popularity has been in the news again and as always Cobol is nowhere to be seen in the rankings. Even back in the day, when people in the know generally considered Cobol to be the most widely used language, it often failed to appear, or appeared very low down, in language rankings. I think Cobol’s unrepresentative rankings occur because users of Cobol are assumed to hang out in the same places as users of other programming languages. The letters bo in the name are the clue; business oriented people are not usually interested in technical stuff and tend not to read the magazines (and these days web sites) that users of the other popular languages read.

Cobol is very business domain specific and does not contain functionality that makes it a reasonable choice for writing applications in other domains (it is possible to write a compiler in Cobol, for instance the Micro Focus compiler is written in Cobol). It has very sophisticated language constructs for handling data having the most convoluted formats imaginable, essential in the business world which has to process data whose format has evolved over the years into a tangled mess (developers have to deal with spaghetti code, business has to deal with spaghetti data formats). Cobol’s control flow and code structuring facilities are primitive (all variables are global and the perform statement is very similar to the gosub statement found in Basics that are line number based) because business data processing tends to be relatively simple and programs to handle them are generally small (the large Cobol programs of legend are invariably made up of lots of small programs run in series with complicated data format dependencies between them).

I started to realise just how different Cobol is when working on my first Cobol code generator (yes it was written in Cobol). If a processor has lots of registers it is usually worthwhile to dedicate one to holding the value zero (of the 32 registers supported by most RISC processors, often only 31 can hold different values, one is dedicated to returning zero when read from and ignores any value written to it), in the case of Cobol it is considered worthwhile to dedicate a register to hold 0x20202020 (four space characters) rather than zero.
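The value 0x20202020 is simply four ASCII space characters packed into a 32-bit word, which is what makes it useful for blank-filling fixed width fields a word at a time. The short Python sketch below shows the correspondence; the 12-character field is a made-up example and ASCII is assumed because that is the encoding in which a space is 0x20.

```python
SPACES = 0x20202020  # four ASCII spaces in one 32-bit word

as_bytes = SPACES.to_bytes(4, "big")
print(as_bytes)  # b'    '

# Blank-fill a hypothetical 12-character field one word at a time.
field = bytearray(12)
for offset in range(0, len(field), 4):
    field[offset:offset + 4] = as_bytes

print(repr(field.decode("ascii")))  # '            '
```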

Is Cobol still the most widely used language today? No, I don’t think so. Business people love spreadsheets which means developers have switched to writing pre/post data format processing code, previously in Cobol, in Visual Basic (to convert input data into a form accepted by the spreadsheet and then print the results of the spreadsheet calculations in a presentable format); this Visual Basic source can often have a Cobol-like feel to it. This spreadsheet usage also resulted in the comma separated list becoming a widely used format for data representation, eroding Cobol’s unique selling point of sophisticated input/output data format processing.

What does language popularity mean? Does using a language you don’t like count towards it being popular? There are several languages I like and very rarely get to use, does this mean I don’t get to contribute to their popularity?

In these tough financial times the number of job adverts requiring knowledge of a specified language is probably of more interest than number of posts to web sites. One job search site lists 3,032 Cobol jobs and counting job ad hits for the top languages listed in a recent popularity poll puts Cobol at the bottom end of the cluster of highest ranked languages.

On mainframes I think Cobol is likely to still be No. 1; it is probably impossible to replace the dominant language in a niche market.
