Archive

Creating a global Standard requires being politically neutral

Governments actively promote Standards because following them saves their citizens time and money. The UK and US have contrasting rationales, with the UK focusing on savings achieved through repeated use of standardized items and the US focusing on the repeated use of skills people acquired through using a standardized item (i.e., reduced training costs).

Manufacturers wanting to export products want to be able to ship identical products all over the world, i.e., not have to make costly changes for different national markets. To be able to do this, they need the rest of the world to have a Standard way of doing things. The once dominant military and industrial status of Great Britain, and now the US, motivated them to create and encourage other countries to follow the Standards they created.

These days, most programming language Standards work is done by people employed by US companies attending an international committee, SC22, with (currently) 28 countries paying to be P (participating) members and 21 countries as O (observing) members (most countries don’t appear to have any active involvement in language standards). The reason for the dominance of US companies is that few non-US companies are willing to fund staff to do Standards work. For a few languages SC22 essentially rubber stamps documents produced elsewhere, e.g., most of the Cobol work used to be done by a US committee and ECMAScript (aka JavaScript) work is done in a European committee mostly attended by US companies.

Other countries sometimes get to dominate the creation of a language Standard, e.g., the UK led the Pascal Standard work. At the last SC22 meeting, a person from the US lamented that Europe was set to become the dominant driver of the Ada Standard. I resisted the urge to cheer: Make Europe Great Again.

Getting an international Standard adopted throughout the world requires that ISO be politically neutral and accept any sovereign country as a member (provided they pay the membership fees). For instance, North Korea is a member of ISO.

The only politics I have previously seen in programming language standard meetings has involved company rivalry, not geopolitical rivalry. A recent request for comment from SC2 (the ISO committee responsible for coded character sets; readers are more likely to be familiar with Unicode, essentially the same information published by a non-profit consortium based in California) looks like geopolitics, in the sense of geopolitical virtue signalling.

The document is: Request for SC2 member comments on proposal to encode “Ruble sign with double vertical stem”. What does the character “Ruble sign with double vertical stem” look like? To quote the document: “The proposed character is a text element that cannot be represented by any existing character or character sequence.” Readers will have to imagine Russia’s Ruble currency symbol, ₽, with two vertical stems (I assume these stems are short antennae-like lines).

What is the geopolitical connection? Readers will be aware of Russia’s invasion of Ukraine, but may not be aware of Russia’s involvement in Transnistria (quoting Wikipedia, “… a landlocked breakaway state internationally recognized as part of Moldova.”). Since 1994, the proposed character has been used as the Transnistria currency symbol.

The request for comment includes a “Non-technical considerations” section that summarises various controversy points, and finishes with: “We are not aware of any non-technical criteria having been used by SC2 or WG2 in the past that could be applied to disqualify this character. We are also concerned that adopting a criterion that allows for opposing a character because of association with politically or socially defined user communities could be problematic.”

The proposed character is not included in ISO 4217 (which defines numeric codes for the representation of currencies). However, SC2 does not require that a character used to represent a currency be included in ISO 4217. Previously, SC2 has accepted currency characters that are not in ISO 4217.

Is this a one-off objection, or does it mark the start of a stream of requests to remove one or more politically incorrect characters from ISO 10646/Unicode?

A lot of people put a lot of effort into creating a unified Standard for all the characters created by the World’s people. I hope the destructive nature of virtue signalling does not take hold in the programming language Standards ecosystem.

2021 in the programming language standards’ world

Last Tuesday I was on a Webex call (the British Standards Institution’s use of Webex for conference calls predates COVID 19) for a meeting of IST/5, the committee responsible for programming language standards in the UK.

There have been two developments whose effect, I think, will be to hasten the decline of the relevance of ISO standards in the programming language world (to the point that they are ignored by compiler vendors).

- People have been talking about switching to online meetings for years, and every now and again someone has dialed-in to the conference call phone system provided by conference organizers. COVID has made online meetings the norm (language working groups have replaced face-to-face meetings with online meetings). People are looking forward to having face-to-face meetings again, but there is talk of online attendance playing a much larger role in the future.

The cost of attending a meeting in person is the perennial reason given for people not playing an active role in language standards (and I imagine other standards). Online attendance significantly reduces the cost, and an increase in the number of people ‘attending’ meetings is to be expected if committees agree to significant online attendance.

While many people think that making it possible for more people to be involved, by reducing the cost, is a good idea, I think it is a bad idea. The rationale for the creation of standards is economic; customer costs are reduced by reducing incompatibilities across the same kind of product, e.g., all standard conforming compilers are consistent in their handling of the same construct (undefined behavior may be consistently different). When attending meetings is costly, those with a significant economic interest tend to form the bulk of those attending meetings. Every now and again somebody turns up for a drive-by shooting, i.e., they turn up for a day to present a paper on their pet issue and are never seen again.

Lowering the barrier to entry (i.e., cost) is going to increase the number of drive-by shootings. The cost of this spray of pet-issue papers falls on the regular attendees, who will have to spend time dealing with enthusiastic, single issue, newbies.

- The International Organization for Standardization (ISO is the abbreviation of the French title) has embraced the use of inclusive terminology. The ISO directives specifying the Principles and rules for the structure and drafting of ISO and IEC documents have been updated by the addition of a new clause: 8.6 Inclusive terminology, which says:

“Whenever possible, inclusive terminology shall be used to describe technical capabilities and relationships. Insensitive, archaic and non-inclusive terms shall be avoided. For the purposes of this principle, “inclusive terminology” means terminology perceived or likely to be perceived as welcoming by everyone, regardless of their sex, gender, race, colour, religion, etc.

New documents shall be developed using inclusive terminology. As feasible, existing and legacy documents shall be updated to identify and replace non-inclusive terms with alternatives that are more descriptive and tailored to the technical capability or relationship.”

The US Standards body has released the document INCITS inclusive terminology guidelines. Section 5 covers identifying negative terms, and Section 6 deals with “Migration from terms with negative connotations”. Annex A provides examples of terms with negative connotations, preceded by text in bright red “CONTENT WARNING: The following list contains material that may be harmful or traumatizing to some audiences.”

“Error” sounds like a very negative word to me, but it’s not in the annex. One of the words listed in the annex is “dummy”. One member pointed out that ‘dummy’ appears 794 times in the current Fortran standard (586 times in ‘dummy argument’).

Replacing words with negative connotations leads to frustration and distorted perceptions of what is being communicated.

I think there will be zero real world impact from the use of inclusive terminology in ISO standards, for the simple reason that terminology in ISO standards usually has zero real world impact (based on my experience of the use of terminology in ISO language standards). But the use of inclusive terminology does provide a new opportunity for virtue signalling by members of standards’ committees.

While use of inclusive terminology in ISO standards is unlikely to have any real world impact, the need to deal with suggested changes of terminology, and new terminology, will consume committee time. Most committee members tend to be rather pragmatic, but it only takes one or two people to keep a discussion going and going.

Over time, compiler vendors are going to become disenchanted with the increased workload, and the endless discussions relating to pet-issues and inclusive terminology. Given that there are so few industrial strength compilers for any language, the world no longer needs formally agreed language standards; the behavior that implementations have to support is controlled by the huge volume of existing code. Eventually, compiler vendors will sever the cord to the ISO standards process, and outside the SC22 bubble nobody will notice.

2019 in the programming language standards’ world

Last Tuesday I was at the British Standards Institution for a meeting of IST/5, the committee responsible for programming language standards in the UK.

There has been progress on a few issues discussed last year, and one interesting point came up.

It is starting to look as if there might be another iteration of the Cobol Standard. A handful of people, in various countries, have started to nibble around the edges of various new (in the Cobol sense) features. No, the INCITS Cobol committee (the people who used to do all the heavy lifting) has not been reformed; the work now appears to be driven by people who cannot let go of their involvement in Cobol standards.

ISO/IEC 23360-1:2006, the ISO version of the Linux Standard Base, has been updated and we were asked for a UK position on the document being published. Abstain seemed to be the only sensible option.

Our WG20 representative reported that the ongoing debate over pile of poo emoji has crossed the chasm (he did not exactly phrase it like that). Vendors want to have the freedom to specify code-points for use with their own emoji, e.g., pineapple emoji. The heady days, of a few short years ago, when an encoding for all the world’s character symbols seemed possible, have become a distant memory (the number of unhandled logographs on ancient pots and clay tablets was declining rapidly). Who could have predicted that the dream of a complete encoding of the symbols used by all the world’s languages would be dashed by pile of poo emoji?

The interesting news is from WG9. The document intended to become the Ada20 standard was due to enter the voting process in June, i.e., the committee considered it done. At the end of April the main Ada compiler vendor asked for the schedule to be slipped by a year or two, to enable them to get some implementation experience with the new features; oops. I have been predicting that in the future language ‘standards’ will be decided by the main compiler vendors, and the future is finally starting to arrive. What is the incentive for the GNAT compiler people to pay any attention to proposals written by a bunch of non-customers (ok, some of them might work for customers)? One answer is that Ada users tend to be large bureaucratic organizations (e.g., the DOD), who like to follow standards, and might fund GNAT to implement the new document (perhaps this delay by GNAT is all about funding, or lack thereof).

Right on cue, C++ users have started to notice that C++20 has added support for a system header with the name version, which conflicts with much existing practice of using a file called version to contain versioning information; this is a problem if the header search path used by the compiler includes a project’s top-level directory (which is where the versioning file version often sits). So the WG21 committee decides on what it thinks is a good idea, implementors implement it, and users complain; implementors now have a good reason to not follow a requirement in the standard, to keep users happy. Will WG21 be apologetic, or get all high and mighty? We will have to wait and see.

2018 in the programming language standards’ world

I am sitting in the room, at the British Standards Institution, where today’s meeting of IST/5, the committee responsible for programming languages, has just adjourned (it’s close to where I have to be in a few hours).

BSI have downsized us; they no longer provide a committee secretary to take minutes and provide a point of contact. Somebody from a service pool responds (or not) to emails. I did not blink first to our chair’s request for somebody to take the minutes 🙂

What interesting things came up?

It transpires that reports of the death of Cobol standards work may be premature. There are a few people working on ‘new’ features, e.g., support for JSON. This work is happening at the ISO level, rather than the national level in the US (where the real work on the Cobol standard used to be done, before being handed on to the ISO). Is this just a couple of people pushing a few pet ideas or will it turn into something more substantial? We will have to wait and see.

The Unicode consortium (a vendor consortium) are continuing to propose new pile of poo emoji and WG20 (an ISO committee) are doing what they can to stay sane.

Work on the Prolog standard now seems to be concentrated in Austria. Prolog was the language to be associated with, if you were on the 1980s AI bandwagon (and the Japanese were going to take over the world unless we did something about it, e.g., spend money); this time around, it’s machine learning. With one dominant open source implementation and one commercial vendor (cannot think of any others), standards work is a relic of past glories.

In pre-internet times there was an incentive to kill off committees that were past their sell-by date; it cost money to send out mailings and document storage occupied shelf space. In an electronic world there is no incentive to spend time killing off such committees, might as well wait until those involved retire or die.

WG23 (programming language vulnerabilities) reported lots of interest in their work from people involved in the C++ standard, and for some reason the C++ committee people in the room started glancing at me. I was a good boy, and did not mention bored consultants.

It looks like ISO/IEC 23360-1:2006, the ISO version of the Linux Standard Base, is going to be updated to reflect LSB 5.0; something that was not certain a few years ago.

Is the ISO C++ standard’s committee past its sell by date?

The purpose of having a standard is economic. The classic (British) example is screw threads, having a standard set of screw threads means that products from different manufacturers are interchangeable and competition drives down prices; the US puts more emphasis on standards being an enabler of people interchangeability, i.e., train people once and they can use the acquired skills in multiple companies.

In the early days of computing we had umpteen compilers for Cobol, Fortran and then Pascal and then C and then C++. There was a lot of benefit to be had getting the vendors signed up to support a single standard for their language (of course they still added bells and whistles to ‘enhance’ their offerings). Language standards meetings were full of vendors, with a few end users (mostly from large corporations and government).

Fast forward to today and the ranks of compiler vendors have thinned significantly. Micro Focus dominates Cobol, Fortran is dominated by a few number cruncher oriented companies, Pascal die hards cling on in surprising places, C vendors are still in double figures (down by an order of magnitude from their heyday) and C++ vendors will soon be accurately countable by Trolls (1, 2, 3, many).

What purpose does an ISO language standard serve in a world with only a few compilers? These days the standard is actually set by the huge volume of existing code that has to be handled by any vendor hoping to be adopted by developers.

The ISO C++ committee has become the playground of bored consultants looking for a creative outlet that work is not providing. Is there any red blooded developer who would not love spending a week, two or three times a year, holed up in a hotel with 100+ similarly minded people poring over newly invented language features?

Does the world need all these new features in C++? Fortunately for the committee there are training companies who like nothing better than being able to offer ‘latest features of C++’ courses to all those developers who have been on previous ‘latest features of C++’ courses. Then there is the media, who just love writing about new stuff, there is even an ‘official’ C++ Standard news outlet.

In the good old days compiler vendors loved updates to the language standard because it gave them an opportunity to sell upgrades to customers; things are a bit different in the open source compiler market. What is the incentive for an open source compiler vendor to support features added by an ISO committee? In the past there has been a community expectation that it will happen, but is the ground swell of opinion enough to warrant spending resources on supporting new language features? Perhaps the GCC and LLVM folk will get together and mutually agree not to waste resources being the first mover.

Would developers at large notice if the C++ committee didn’t do anything for the next 10 years?

The JavaScript (ECMAScript) standard also has a membership that includes many end users. In this case I suspect companies are sending people to make sure that new language features don’t impact large code bases and existing investment in ways of doing things.

Update: I’m not saying that the C++ language and libraries should stop evolving, but questioning the need to have an ISO Standards committee in a world of Open Source and a small number of compilers (a number that is only likely to shrink).

A survey of opinions on the behavior of various C constructs

The Cerberus project, researching C semantics, has written up the results of their survey of ‘expert’ C users (short version and long detailed version). I took part in the original survey and at times found myself having to second guess what the questioner was asking; the people involved were/are still learning how C works. Anyway, many of the replies provide interesting insights into current developer interpretation of the behavior of various C constructs (while many of the respondents were compiler writers, it looks like some of them were not C compiler writers).

Some of those working on the Cerberus project are proposing changes to the C standard based on issues they encountered while writing a formal specification for parts of C and are bolstering their argument, in part, using the results of their survey. In many ways the content of the C Standard was derived from a survey of those attending WG14 meetings (or rather x3j11 meetings back in the day).

I think there is zero probability that any of these proposed changes will make it into a revised C standard; the reasons, none of which are technical, include:

- If it isn’t broken, don’t fix it. Lots of people have successfully implemented compilers based on the text of the standard, which is the purpose of the document. Where is the cost/benefit of changing the wording to enable a formal specification using one particular mathematical notation?

- WG14 receives lots of requests for changes to the C Standard and has an implicit filtering process. If the person making the request thinks the change is important, they will:

- put the effort into wording the proposal in the stylized form used for language change proposals (i.e., not intersperse changes in a long document discussing another matter),

- be regular attendees of WG14 meetings, working with committee members on committee business and helping to navigate their proposals through the process (turning up to part of a meeting will see your proposal disappear as soon as you leave the building; the next WG14 meeting is in London during April).

It could be argued that having to attend many meetings around the world favors those working for large companies. In practice only a few large companies see any benefit in sending an employee to a standards meeting for a week to work on something that may be of long term benefit to them (sometimes a hardware company who wants to make sure that C can be compiled efficiently to their processors).

The standards creation process is about stability (don’t break existing code; many years ago a company voted against a revision to the Cobol standard because they had lost the source code to one of their products and could not check whether the proposed updates would break this code) and broad appeal (not narrow interests).

Update: Herb Sutter’s C++ trip report gives an interesting overview of the process adopted by WG21.

Cobol 2014, perhaps the definitive final version of the language…

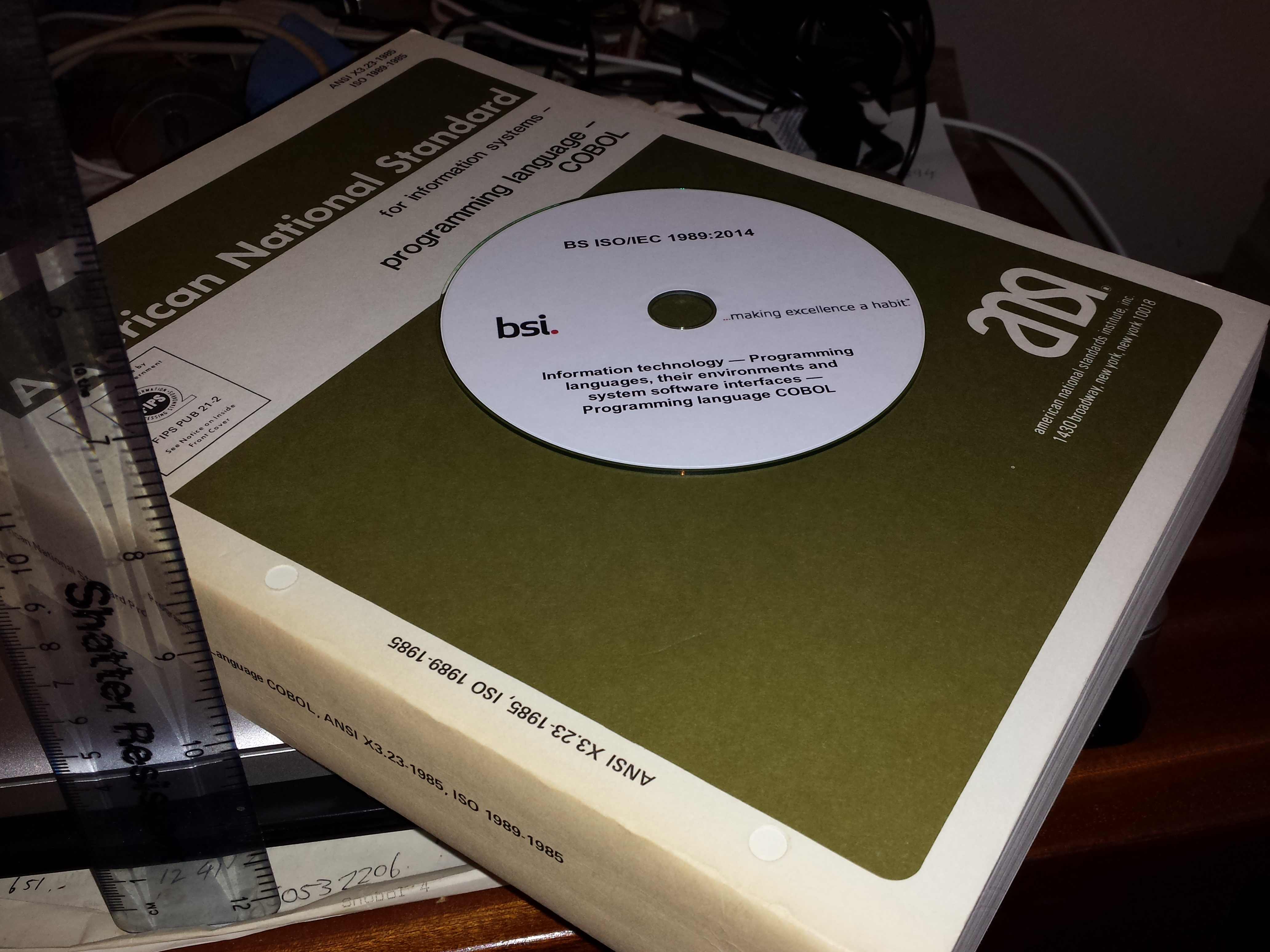

Look what arrived in the post this morning, a complimentary copy of the new Cobol Standard (the CD on top of a paper copy of the 1985 standard).

In the good old days, before the Internet, members of IST/5 received a complimentary copy of every new language standard in comforting dead tree form (a standard does not feel like a standard until it is weighed in the hand; pdfs are so lightweight); these days we get complimentary access to pdfs. I suspect that this is not a change of policy at British Standards, but more likely an excessive print run that they need to dispose of to free up some shelf space. But it was nice of them to think of us workers rather than binning the CDs (my only contribution to Cobol 2014 was to agree with whatever the convener of the committee proposed with regard to Cobol).

So what does the new 955-page standard have to say for itself?

“COBOL began as a business programming language, but its present use has spread well beyond that to a general purpose programming language. Significant enhancements in this International Standard include:

— Dynamic-capacity tables

— Dynamic-length elementary items

— Enhanced locale support in functions

— Function pointers

— Increased size limit on alphanumeric, boolean, and national literals

— Parametric polymorphism (also known as method overloading)

— Structured constants

— Support for industry-standard arithmetic rules

— Support for industry-standard date and time formats

— Support for industry-standard floating-point formats

— Support for multiple rounding options”

I guess those working with Cobol will find these useful, but I don’t see them being enough to attract new users from other languages.

I have heard tentative suggestions of the next revision appearing in the 2020s, but with membership of the Cobol committee dying out (literally in some cases and through retirement in others) perhaps this 2014 publication is the definitive final version of Cobol.

Reality in the world of programming language standards

I see a lot of steam being vented about the standards’ process as applied to programming languages and software related topics. Knowing something about how the process works might help people live calmer lives, at least once they have calmed down after reading this article. What I have to say applies to programming language standards because these are what I have been involved with, as a member and convener of various UK and international committees, for 25+ years.

- ISO and your national standards’ body don’t care about the standard you are talking about.

These organizations are monopolies who are required to demonstrate that documented procedures are followed by all concerned. Can you think of any organizational structure that would create fewer incentives for those on the inside to listen to those on the outside?

Yes, these organizations do sell standards but the sales model is all about the long tail and no peak, to speak of, of best sellers. The real business model for running a standards’ organization is to either charge members a fee (your country pays membership dues for each Standards Committee it wants a say in; if your country has not paid to be a member of ISO JTC 1/SC22 you have no say in programming language standards. ANSI in the US charges people for the right to volunteer their time to attend meetings to work on a standard) and/or rely on government subsidy.

Not being cared about is actually a luxury that people who work in programming language standards should aspire to. The bureaucrats who work in standards hate us; here in the UK there has been at least one attempt to kill off work on programming language standards and I have heard of similar experiences in other countries. The problem is that the standards we produce don’t fit the mold that works for most other standards; programming language standards contain an order of magnitude more pages than the average standard (until recently there was a print run of new standards which then had to be stored until sold and the volume occupied by programming language standards was of note {so I’m reliably informed}), take longer to produce (i.e., more work for the bureaucrats) and all this cost is not justified by the sales figures (which are confidential and last time I saw them only just required me to take my shoes and socks off to count).

- Standards are created by the volunteers who regularly turn up at meetings.

It is only the enthusiasm of these volunteers that makes the process work. If you don’t turn up at meetings then what you think does not count (not quite true, something you write might influence the thinking of one of the worker bees who attends meetings resulting in wording in the standard).

If you really are interested in a standard then become an active member of the committee responsible for it, at least the national one and, if you have the time, the international one.

- Committee documents can be made public.

There are no rules preventing a standards committee putting its documents on a website for Joe Public to download. The issue is finding somebody willing to do the work of hosting the website (the programming language world is lucky to have Keld Simonsen) and a willingness of committee members to be open about all their documents.

Looking in from the outside it seems to me that many non-programming language committees want to maintain an aura of mystique and privileged access.

Single-quote as a digit separator soon to be in C++

At the C++ Standard’s meeting in Chicago last week agreement was finally reached on what somebody in the language standards world referred to as one of the longest bike-shed controversies; the C++14 draft that goes out for voting real-soon-now will include support for single-quotation-mark as a digit separator. Assuming the draft makes it through ISO voting you could soon be writing (compiler support assumed) 32'767 and 0.000'001 and even 1'2'3'4'5'6'7'8'9, if you so fancied, in your conforming C++ programs.
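As a small illustration of the proposed syntax (a sketch only, assuming a compiler running in C++14 mode or later; the variable names are invented here), the separators change how a literal reads, not its value:

```cpp
// Sketch only: needs a compiler running in C++14 (or later) mode.
// All names below are made up for illustration.
constexpr int    max_int16     = 32'767;             // same value as 32767
constexpr double one_millionth = 0.000'001;          // same value as 0.000001
constexpr int    odd_grouping  = 1'2'3'4'5'6'7'8'9;  // same value as 123456789
constexpr int    low_16_bits   = 0xFF'FF;            // separators also work in hex

// Compile-time checks that the separators do not alter the values.
static_assert(max_int16 == 32767, "separator must not change the value");
static_assert(odd_grouping == 123456789, "separator must not change the value");
static_assert(low_16_bits == 0xFFFF, "separator must not change the value");
```

The grouping is purely a matter of taste; nothing requires the separators to fall on thousands boundaries.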

Why use single-quote? Wouldn’t underscore have been better? This issue has been on the go since 2007 and if you feel really strongly about it the next bike-shed C++ Standard’s meeting is in Issaquah, WA at the start of next year.

Changing the lexical grammar of a language is fraught with danger; will there be a change in the behavior of existing code? If the answer is Yes, then the next question is how many people will be affected and how badly? Let’s investigate; here are the lexical details of the proposed change:

    pp-number:
        digit
        . digit
        pp-number digit
        pp-number ' digit
        pp-number ' nondigit
        pp-number identifier-nondigit
        pp-number e sign
        pp-number E sign
        pp-number .

Ideally the change of behavior should cause the compiler to generate a diagnostic, when code containing it is encountered, so the developer gets to see the problem and do something about it. The following conforming C++ code will upset a C++14 compiler (when I write C++ I mean the C++ Standard as it exists in 2013, i.e., what was called C++11 before it was ratified):

    #define M(x) #x // stringize the macro argument
    const char *p=M(1'2,3'4);

At the moment the call to the macro M contains one argument, the sequence of three tokens {1}, {'2,3'} and {4} (the usual convention is to bracket the characters making up one token with matching curly braces).

In C++14 the call to M will contain the two arguments {1'2} and {3'4}. A conforming compiler is required to complain when the number of arguments to a macro invocation doesn’t match the definition… unless the macro is defined to accept a variable number of arguments:

    #define M(x, ...) __VA_ARGS__
    int x[2] = { M(1'2,3'4) };
    // C++11: int x[2] = {};
    // C++14: int x[2] = { 3'4 };

This is the worst kind of change in behavior, known as a silent change, the existing code compiles without complaint but has different behavior.
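One way to see which tokenization a particular compiler is using is to stringize the variable arguments and look at the size of the resulting string literal. This is only a sketch, assuming the preprocessor behaves as described above; REST and rest_size are names invented here.

```cpp
#include <cstddef>

// Sketch only: REST and rest_size are illustrative names.
// Stringize whatever arguments follow the first one.
#define REST(x, ...) #__VA_ARGS__

// With digit separators (C++14): two arguments, {1'2} and {3'4},
// so REST(...) expands to "3'4" and sizeof gives 4 (3 chars + terminator).
// Without them (C++11): one argument made of {1} {'2,3'} {4}; the
// variable argument list is empty, so REST(...) expands to "" and
// sizeof gives 1.
constexpr std::size_t rest_size = sizeof(REST(1'2,3'4));
```

Under a C++14 compiler rest_size is 4; building the same source in C++11 mode should, going by the tokenization described above, give 1, with no diagnostic required in either case.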

How much existing code contains either of these constructs? I suspect very very little human written code, maybe even none. This is the sort of stuff that is more likely to be produced by automatic code generators. But how much more likely? I have no idea.

How much benefit does the new feature provide? It certainly looks useful, but coming up with a number for the benefit is hard. I guess it has the potential to shave a fraction of a second off of the attention a developer has to pay when reading code, after they have invested in learning about the construct (which is lots of seconds). Multiplied over many developers and not that many instances (the majority of numeric literals contain a single digit), we could be talking a man year or two per year of worldwide development effort?

All of the examples I have seen require the ‘assistance’ of macros, here is another (courtesy of Jeff Snyer):

    #define M(x) A ## x
    #define A0xb
    int operator "" _de(char);
    int x = M(0xb'c'_de);

Are there any examples of a silent change that don’t involve the preprocessor?
