
Investigating an LLM generated C compiler

Spending over $20,000 on API calls, a team at Anthropic plus an LLM (Claude Opus version 4.6) wrote a C compiler capable of compiling the Linux kernel and other programs to a variety of cpus. Has Anthropic commercialised monkeys typing on keyboards, or have they created an effective sheep herder?

First of all, does this compiler handle a non-trivial amount of the C language?

Having written a variety of industrial compiler front ends, optimizers, code generators and code analysers (which paid off the mortgage on my house), along with a book that analysed the C Standard, sentence by sentence (download the pdf), I’m used to finding my way around compilers.

Claude’s C compiler source appears to be surprisingly well written/organised (based on a few hours reading of the code). My immediate thought was that this must be regurgitation of pieces from existing compilers. Searches for a selection of comments in the source failed to find any matches. Stylistically, the code is written by an entity that totally believes in using abstractions; functions call functions that call functions, that call …, eventually arriving at a leaf function that just assigns a value. Not at all like a human C compiler writer (well, apart from this one).

There are some oddities in an implementation of this (small) size. For instance, constant folding includes support for floating-point literals. Use of floating-point is uncommon, and opportunities to fold literals rare. Perhaps this support was included because, well, an LLM did the work. But it increases the amount of code that can be incorrect, for little benefit. When writing a compiler in an implementation language different from the one being compiled, differences between the two languages can have an impact. For instance, Claude C uses Rust’s 128-bit integer type during constant folding, despite this and most other C compilers only supporting at most 64-bit integer types.
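One consequence of folding in a wider host type is that a folded result has to be narrowed back to the width of the C type before it is used. The following is a minimal sketch of that narrowing step for unsigned addition, written in Python (whose unbounded integers stand in for a wider host type). The function name `fold_unsigned_add` is hypothetical, and nothing here is taken from the Claude C source.

```python
def fold_unsigned_add(a: int, b: int, bits: int = 64) -> int:
    """Fold a + b for a C unsigned type that is `bits` wide.

    The sum is first computed without any width limit (as a wider host
    integer type would allow), then reduced modulo 2**bits, which is the
    wrap-around behaviour C specifies for unsigned arithmetic.
    """
    wide_sum = a + b                 # may need more than `bits` bits
    mask = (1 << bits) - 1           # e.g., 0xFFFFFFFFFFFFFFFF for 64 bits
    return wide_sum & mask


ULLONG_MAX = 0xFFFFFFFFFFFFFFFF      # largest 64-bit unsigned value

unwrapped = ULLONG_MAX + 1           # what the wider type holds: 2**64
folded = fold_unsigned_add(ULLONG_MAX, 1)

print(unwrapped == 2**64)            # the wide value does not fit in 64 bits
print(folded)                        # the value the compiled program must see
```

If the narrowing step is forgotten, the folded constant differs from the value the same expression produces when evaluated at runtime, which is the kind of mistake that a wider host type makes possible.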

A README appears in each of the 32 source directories, giving a detailed overview of the design and implementation of the activities performed by the code. The average length is 560 lines. These READMEs look like edited versions of the prompts used.

To get a sense of how the compiler handled rarely used language features and corner cases, I fed it examples from my book (code). The Complex floating point type is supported, along with Universal Character Names, fiddly scoping rules, and preprocessor oddities. This compiler is certainly non-trivial.

The compiler’s major blind spot is failing to detect many semantic constraints, e.g., performing arithmetic on variables having a struct type, or multiple declarations of functions and variables with the same name in the same scope (the parser README says “No type checking during parsing”; no type checking would be more accurate). The training data is source code that compiles to machine code, i.e., does not contain any semantic errors that a compiler is required to flag. It’s not surprising that Claude C fails to detect many semantic errors. There is a freely available collection of tests for the 80 constraint clauses in the C Standard that can be integrated into the Claude C compiler test suite, including the prompts used to generate the tests.

A compiler is an information conveyor belt. Source is first split into tokens (based on language specific rules), which are parsed to build a tree representation and a symbol table. The tree is then converted to SSA form, so that a sequence of established algorithms can be used to lower the level of abstraction and detect common optimization patterns. Finally, the low-level representation is mapped to machine code, and written to a file in the format for an executable program.

The prompts used to orchestrate the information processing conveyor belt have not been released. I’m guessing that the human team prompted the LLM with a detailed specification of the interfaces between each phase of the compiler.

The compiler is implemented in Rust, the currently fashionable language, and the obvious choice for its PR value. The 106K of source is spread across 351 files (average 531 LOC), and built in 17.5 seconds on my system.

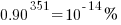

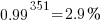

LLMs make mistakes, with coding benchmark success rates being at best around 90%. Based on these numbers, the likelihood of 351 files being correctly generated, at the same time, is  (with 99% probability of correctness we get

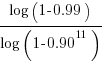

(with 99% probability of correctness we get  ). Splitting the compiler into, say, 32 phases each in a directory containing 11 files, and generating and testing each phase independently significantly increases the probability of success (or alternatively, significantly reduces the number of repetitions of the generate code and test process). The success probability of each phase is:

). Splitting the compiler into, say, 32 phases each in a directory containing 11 files, and generating and testing each phase independently significantly increases the probability of success (or alternatively, significantly reduces the number of repetitions of the generate code and test process). The success probability of each phase is:  , and if the same phase is generated 13 times, i.e.,

, and if the same phase is generated 13 times, i.e.,  , there is a 99% probability that at least one of them is correct.

, there is a 99% probability that at least one of them is correct.
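The arithmetic behind these probabilities can be checked with a few lines of Python. This is a sketch that assumes each file is generated correctly with the same probability, independently of the others (the same assumption the calculation in the text makes); the function names are hypothetical.

```python
def all_correct(p_file: float, n_files: int) -> float:
    """Probability that n_files independently generated files are all correct."""
    return p_file ** n_files


def at_least_one_success(p_success: float, attempts: int) -> float:
    """Probability that at least one of `attempts` independent tries succeeds."""
    return 1.0 - (1.0 - p_success) ** attempts


whole_at_90 = all_correct(0.90, 351)   # all 351 files in one go, 90% per file
whole_at_99 = all_correct(0.99, 351)   # same, with 99% per file
one_phase = all_correct(0.90, 11)      # one phase of 11 files, 90% per file
phase_13_tries = at_least_one_success(one_phase, 13)
phase_12_tries = at_least_one_success(one_phase, 12)

print(f"351 files at 90%: {whole_at_90:.1e}")
print(f"351 files at 99%: {whole_at_99:.3f}")
print(f"one 11-file phase: {one_phase:.2f}")
print(f"at least one good phase in 13 tries: {phase_13_tries:.4f}")
print(f"at least one good phase in 12 tries: {phase_12_tries:.4f}")
```

Under these assumptions, 13 is the smallest number of repetitions that pushes the per-phase success probability above 99%; with 12 repetitions it falls just short.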

Some code need not do anything other than pass on the flow of information unchanged. For instance, code to perform the optimization common subexpression elimination does exist, but the optimization is not performed (based on looking at the machine code generated for a few tests; see codegen.c). Detecting non-functional code could require more prompting skill than generating the code. The prompt to implement this optimization (e.g., write Rust code to perform value numbering) is very different from the prompt to write code containing common subexpressions, compile to machine code and check that the optimization is performed.
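To make the distinction concrete, here is a minimal sketch of local value numbering over a straight-line block, followed by a check that a repeated expression really is removed. It is written in Python for brevity, the tuple-based intermediate representation and all names are hypothetical, and it is not based on the Claude C source.

```python
COMMUTATIVE = {"add", "mul"}


def local_value_numbering(block):
    """Replace recomputed expressions in a straight-line block with copies.

    `block` is a list of (dest, op, left, right) tuples; operands are
    variable names or literals written as strings.
    """
    value_of = {}    # name -> value number currently held by that name
    available = {}   # (op, vn_left, vn_right) -> (name, value number)
    counter = 0
    result = []

    def number(name):
        nonlocal counter
        if name not in value_of:
            value_of[name] = counter
            counter += 1
        return value_of[name]

    for dest, op, left, right in block:
        operands = [number(left), number(right)]
        if op in COMMUTATIVE:
            operands.sort()              # a+b and b+a get the same key
        key = (op, operands[0], operands[1])
        hit = available.get(key)
        if hit is not None and value_of.get(hit[0]) == hit[1]:
            # The value was computed earlier and hit[0] still holds it.
            result.append((dest, "copy", hit[0], None))
            value_of[dest] = hit[1]
        else:
            value_of[dest] = counter     # dest now holds a brand new value
            available[key] = (dest, counter)
            counter += 1
            result.append((dest, op, left, right))
    return result


block = [
    ("t1", "add", "a", "b"),
    ("t2", "add", "a", "b"),   # redundant: same operands, nothing changed
    ("t3", "mul", "t1", "t2"),
    ("a", "add", "a", "c"),    # a is redefined here
    ("t4", "add", "a", "b"),   # not redundant: a has a new value
]
optimised = local_value_numbering(block)
for instruction in optimised:
    print(instruction)
```

The final loop is the part that corresponds to "check that the optimization is performed": it inspects the output for the expected copy, rather than trusting that the transformation code exists.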

There is little commenting in the source for the lexer, parser, and machine code generators, i.e., the immediate front end and final back end. There is a fair amount of detailed commenting in the source of the intervening phases.

The phases with little commenting are those which require lots of very specific, detailed information that is not often covered in books and papers. I suspect that the prompts for this code contain lots of detailed templates for tokenizing the source, building a tree, and at the back end how to map SSA nodes to specific instruction sequences.

The intermediate phases have more publicly available information that can be referenced in prompts, such as book chapters and particular papers. These prompts would need to be detailed instructions on how to annotate/transform the tree/SSA conveyed from earlier phases.

Zig is the next fashionable language

New programming languages are constantly being created, with most remaining unknown outside a small circle of friends. Every 5-10 years or so, a few of these languages break out to become fashionable to use. In the early 1980s, I was a fan of Pascal and had conversations with developers trying to figure out why they were fans of C. Yes, C was once the fashionable language to talk about. One of these languages went on to be used almost everywhere, while the other had the common fate of fading into obscurity.

Fashion serves a purpose. People, not just developers, enjoy experimenting, feel a need for self-expression, with a “fresh start” making them feel new and energized. Following fashion provides a means of fulfilling these desires. Developers who don’t need to earn a living using established languages are able to invest their time being part of the growing community of the current fashionable language.

Over the last 5-10 years Rust and Go were fashionable languages, with Julia being part of the trend vibe for a few years in the mid 2010s.

This last year, I have noticed a significant decline in the number of articles extolling Go, and I meet fewer developers using it. In the last 6 months, I have seen a marked growth in criticism of Rust (very slow compilation speed in particular).

Fashion requires change and reinvention, because widespread use ruins its specialness. When Rust is no longer perceived to be fashionable (I think Go is already at this point), a new language will be ‘chosen’ to fill the void. Which languages are the likely candidates?

For some time, I have been telling people that my candidate language is Zig. My original whimsical reason was that very few programming languages have names starting with letters near the end of the alphabet (the preponderance of language names start with a letter near the beginning).

Experience has taught me that technical merits have little to do with language choice, clearly illustrated by the wide use of PHP and JavaScript.

A necessary condition for a language to become fashionable is that it be usable on the widely available software development platforms of the day (which is how PHP and JavaScript catapulted their user growth); another is that there be at least one core developer generally available to respond to user questions. The first condition provides something to use, and the second the basis for a welcoming community.

New languages are created on a regular basis by PhD students, but these implementations are a means to an end, i.e., publishing papers about some technique. There might be something to use, but rarely a welcoming community.

Andrew Kelley, the creator of Zig and president of the Zig foundation, quit his job in 2018 to work full-time on Zig. Andrew has put a lot of effort into building a community and raising funds to hire people.

Doing all the necessary things is not enough; luck and timing are also important. The start date for Zig is four years after the start date for Rust. Not long enough for Rust to become unfashionable and need a replacement. However, seven years after leaving his job, Andrew is still working on Zig. Persistence is also an important success factor.

While I don’t actively follow Zig, I do visit sites where fringe languages are regularly discussed. Over the last six months, there has been a noticeable increase in Zig related discussions, and somebody has written a Zig book. Given that Zig related discussions are uncommon, this uptick may just be noise.

To me, Zig looks ready to go, and also has an appealing backstory (i.e., created by a lone developer working hard over many years) that contrasts with the Rust/Go perceived backstory (i.e., created in the bowels of a corporation that was not, at the time, seen as ‘evil’). I have not seen any other contenders for the next fashionable language.

Will LLMs cause fashionable languages to stop being a thing? Perhaps in the long term, or perhaps a new criterion for being fashionable will be not-known to LLMs. At the moment, developers are very aware of the failings of LLM code generation. In the short term, I think that fashionable languages will remain a thing.

Comparing developer/LLM coding performance

Lots of claims are being made about how LLMs will soon outperform developers on coding tasks. Given the lack of any effective measure of developer performance, these claims are meaningless. At some point, lower costs will entice management to accept good enough LLM performance as a replacement for human developers, i.e., LLMs don’t need to be technically better than developers.

The outperform claims are, currently, marketing puff, and I was not expecting anybody to make a serious attempt to compare developer/LLM performance. However, concerns about AI exceeding human capacity to control it (and maybe wiping out humans) have resulted in some well funded AI safety research groups. There is at least one group actively recruiting developers to “… establish human performance baselines on tasks related to software engineering, machine learning, and cybersecurity …”.

The most talked about AI threat scenarios all seem to start with recursive self-improvement, i.e., LLMs training themselves, exponentially improving with each iteration (the implied exponential always seems to be continuously up, rather than getting exponentially closer to a maximum).

Can current LLMs improve themselves faster than a developer can?

Implementing a new LLM is beyond the ability of today’s LLMs, but they can implement some of the components used to build an LLM. How does LLM performance compare against developers, on the implementation of these components?

The paper RE-Bench: Evaluating frontier AI R&D capabilities of language model agents against human experts from METR (Model Evaluation & Threat Research) comes with code and “… anonymized human expert data coming soon.” for seven tasks. The baseline was derived from the performance of 61 human experts.

I’m always pleased to see researchers doing experiments with developers. I wish there were more groups doing this kind of thing.

However, I think that these researchers have made the common mistake of using very complicated subject tasks in their experiment. Most software development tasks are mundane, with the occasional complicated task (which can often be solved by using an appropriate package/library). The tasks may be representative of the harder tasks that need to be done, but they are not representative of the complete LLM implementation scenario.

A consequence of using complicated tasks is that most subjects only had enough time to complete one task (they were given 8 hours). With so few tasks (seven) the confidence intervals are going to be very wide on any general statement about human/LLM performance. With around ten subjects per task, the individual task confidence intervals are also going to be wide.

Task 7 made me laugh: “… that generates solutions to CodeContests problems in Rust, …”

Why Rust? Did they happen to have access to lots of Rust experts, or does the research group contain enthusiastic fans of Rust? I suspect the latter. There is a certain kind of highly intelligent developer who strongly believes that writing programs in a particular language imbues the code with magical properties (their rationale won’t be worded that way). For the last few years, Rust has been one of these pixie dust languages. Many decades ago, C had this charisma.

Perhaps each generation of ever more ‘intelligent’ LLMs will choose to design a new language to use to implement their ‘successor’.

There are a myriad of tasks related to software engineering. Solving GitHub issues is a thankless task, and having LLMs reliably close open issues would be of enormous benefit. A study published two months ago obtained a 1.96% solution rate (no explicit testing of developers).

The Whitehouse report on adopting memory safety

Last month’s Whitehouse report: BACK TO THE BUILDING BLOCKS: A Path Towards Secure and Measurable Software “… outlines two fundamental shifts: the need to both rebalance the responsibility to defend cyberspace and realign incentives to favor long-term cybersecurity investments.”

From the abstract: “First, in order to reduce memory safety vulnerabilities at scale, … This report focuses on the programming language as a primary building block, …” Wow, I never expected to see the term ‘memory safety’ in a report from the Whitehouse (not that I recall ever reading a Whitehouse report). And, is this the first Whitehouse report to talk about programming languages?

tl;dr They mistakenly focus on the tools (i.e., programming languages); the focus needs to be on how the tools are used, e.g., require switching on C compiler’s memory safety checks which currently default to off.

The report’s intent is to get the community to progress from defence (e.g., virus scanning) to offence (e.g., removing the vulnerabilities at source). The three-pronged attack focuses on programming languages, hardware (e.g., CHERI), and formal methods. The report is a rallying call to the troops, who are, I assume, senior executives with little or no knowledge of writing software.

How did memory safety and programming languages enter the political limelight? What caused the Whitehouse claim that “…, one of the most impactful actions software and hardware manufacturers can take is adopting memory safe programming languages.”?

The cited reference is a report published two months earlier: The Case for Memory Safe Roadmaps: Why Both C-Suite Executives and Technical Experts Need to Take Memory Safe Coding Seriously, published by an alphabet soup of national security agencies.

This report starts by stating the obvious (at least to developers): “Memory safety vulnerabilities are the most prevalent type of disclosed software vulnerability.” (one Microsoft report says 70%). It then goes on to make the optimistic claim that: “Memory safe programming languages (MSLs) can eliminate memory safety vulnerabilities.”

This concept of a ‘memory safe programming language’ leads the authors to fall into the trap of believing that tools are the problem, rather than how the tools are used.

C and C++ are memory safe programming languages when the appropriate compiler options are switched on, e.g., gcc’s sanitize flags. Rust and Ada are not memory safe programming languages when the appropriate compiler options are switched on/off, or object/function definitions include the unsafe keyword.

People argue over the definition of memory safety. At the implementation level, it includes checks that storage is not accessed outside of its defined bounds, e.g., arrays are not indexed outside the specified lower/upper bound.

I’m a great fan of array/pointer bounds checking and since the 1980s have been using bounds checking tools to check my C programs. I have found bounds checking to be a very cost-effective way of detecting coding mistakes.

Culture drives the (non)use of bounds checking. Pascal, Ada and now Rust have a culture of bounds checking during development, amongst other checks. C, C++, and other languages have a culture of not switching on bounds checking.

Shipping programs with/without bounds checking enabled is a contentious issue. The three main factors are:

- Runtime performance overhead of doing the checks (which can vary from almost nothing to a factor of 5+, depending on the frequency of bounds checked accesses {checks don’t need to be made when the compiler can figure out that a particular access is always within bounds}). I would expect the performance overhead to be about the same for C/Rust compilers using the same compiler technology (as the Open source compilers do). A recent study found C (no checking) to be 1.77 times faster, on average, than Rust (with checking),

- Runtime memory overhead. Adding code to check memory accesses increases the size of programs. This can be an issue for embedded systems, where memory is not as plentiful as desktop systems (recent survey of Rust on embedded systems),

- Studies (here and here) have found that programs can be remarkably robust in the presence of errors. Developers’ everyday experience is that programs containing many coding mistakes regularly behave as expected most of the time.

If bounds checking is enabled on shipped applications, what should happen when a bounds violation is detected?

Many bounds violations are likely to be benign, and a few not so. Should users have the option of continuing program execution after a violation is flagged (assuming they have been trained to understand the program message they are seeing and are aware of the response options)?

Java programs ship with bounds checking enabled, but I have not seen any studies of user response to runtime errors.

The reason that C/C++ is the language used to write so many of the programs listed in vulnerability databases is that these languages are popular and widely used. The Rust security advisory database contains few entries because few widely used programs are written in Rust. It’s possible to write unsafe code in Rust, just like C/C++, and studies find that developers regularly write such code and security risks exist within the Rust ecosystem, just like C/C++.

There have been various attempts to implement bounds checking in x86 processors. Intel added the MPX instruction, but there were problems with the specification, and support was discontinued in 2019.

The CHERI hardware discussed in the Whitehouse report is not yet commercially available, but organizations are working towards commercial products.

A comparison of C++ and Rust compiler performance

Large code bases take a long time to compile and build. How much impact does the choice of programming language have on compiler/build times?

The small number of industrial strength compilers available for the widely used languages makes the discussion as much about distinct implementations as distinct languages. And then there is the issue of different versions of the same compiler having different performance characteristics; for instance, the performance of Microsoft’s C++ Visual Studio compiler depends on the release of the compiler and the version of the standard specified.

Implementation details can have a significant impact on compile time. Compile time may be dominated by lexical analysis, while support for lots of fancy optimization shifts the time costs to code generation, and poorly chosen algorithms can result in symbol table lookup being a time sink (especially for large programs). For short programs, compile time may be dominated by start-up costs. These days, developers rarely have to worry about small memory size causing problems when compiling a large source file.

A recent blog post compared the compile/build time performance of Rust and C++ on Linux and Apple’s OS X, and strager kindly made the data available.

The most obvious problem with attempting to compare the performance of compilers for different languages is that the amount of code contained in programs implementing the same functionality will differ (this is also true when the programs are written in the same language).

strager’s solution was to take a C++ program containing 9.3k LOC, plus 7.3K LOC of tests, and convert everything to Rust (9.5K LOC, plus 7.6K LOC of tests). In total, the benchmark consisted of compiling/building three C++ source files and three equivalent Rust source files.

The performance impact of the number of lines of source code was measured by taking the largest file and copy-pasting it 8, 16, and 32 times, to create three ever larger versions.

The OS X benchmarks did not include multiple file sizes, and are not included in the following analysis.

A variety of toolchain options were included in different runs, and for Rust various command line options; most distinct benchmarks were run 10 times each. On Linux, there were a total of 360 C++ runs, and for Rust 1,066 runs (code+data).

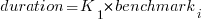

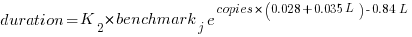

The model $duration = K \times benchmark_j$, where $K$ is a fitted regression constant, and $benchmark_j$ is the fitted regression coefficient for the $j$’th benchmark, explains just over 50% of the variance. The language is implicit in the benchmark information.

The model $duration = e^{copies(0.028+0.035L)-0.084L} \times benchmark_j$, where $copies$ is the number of copies, $L$ is 0 for C++ and 1 for Rust, explains 92% of the variance, i.e., it is a very good fit.

The expression $e^{copies(0.028+0.035L)-0.084L}$ is a language and source code size multiplication factor. The numeric values are:

| copies | 1 | 8 | 16 | 32 |
|---|---|---|---|---|
| C++ | 1.03 | 1.25 | 1.57 | 2.45 |
| Rust | 0.98 | 1.52 | 2.52 | 6.90 |

showing that while Rust compilation is faster than C++ for ‘shorter’ files, it becomes relatively much slower as the quantity of source increases.

The size factor for Rust is growing quadratically, while it is much closer to linear for C++.
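The multiplication factors can be recomputed with a short script. This sketch takes the factor to have the form exp(copies*(a + b*L) + c*L), with a = 0.028, b = 0.035 and c = -0.084; these coefficient values are treated here as assumptions, chosen because they reproduce every entry of the table to two decimal places. The constant and function names are hypothetical.

```python
import math

# Assumed coefficients for exp(copies*(a + b*L) + c*L); L is 0 for C++, 1 for Rust.
COPIES_COEF = 0.028        # a: growth per copy, both languages
RUST_COPIES_COEF = 0.035   # b: additional growth per copy for Rust
RUST_OFFSET = -0.084       # c: fixed offset for Rust


def size_factor(copies: int, is_rust: bool) -> float:
    """Language and source-size multiplication factor for a benchmark."""
    lang = 1 if is_rust else 0
    exponent = copies * (COPIES_COEF + RUST_COPIES_COEF * lang) + RUST_OFFSET * lang
    return math.exp(exponent)


COPY_COUNTS = (1, 8, 16, 32)
table = {
    name: [round(size_factor(n, name == "Rust"), 2) for n in COPY_COUNTS]
    for name in ("C++", "Rust")
}

print("copies", *COPY_COUNTS)
for name, factors in table.items():
    print(name, *factors)
```

Dividing the Rust row by the C++ row gives the relative slowdown at each size, which is where the cross-over between 1 and 8 copies shows up.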

What are the threats to the validity of this benchmark comparison?

The most obvious is the small number of files benchmarked. I don’t have any ideas for creating a larger sample.

How representative is the benchmark of typical projects? An explicit goal in benchmark selection was to minimise external dependencies (to reduce the effort likely to be needed to translate to Rust). Typically, projects contain many dependencies, with compilers sometimes spending more time processing dependency information than compiling the actual top-level source, e.g., header files in C++.

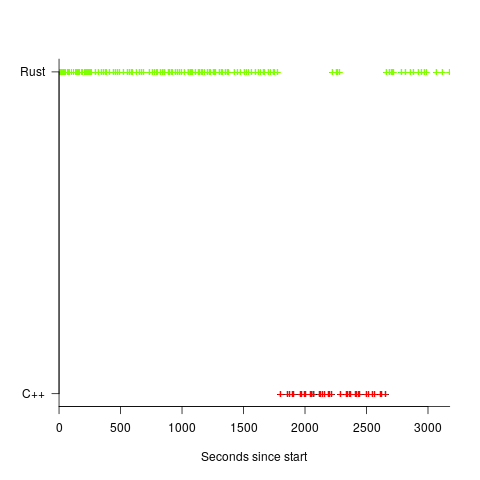

A less obvious threat is the fact that the benchmarks for each language were run in contiguous time intervals, i.e., all Rust, then all C++, then all Rust (see plot below; code+data):

It is possible that one or more background processes were running while one set of language benchmarks was being run, which would skew the results. One solution is to intermix the runs for each language (switching off all background tasks is much harder).
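One way of intermixing the runs is to build the complete list of runs up front and shuffle it, so that any background activity is spread across both languages. A minimal sketch, in which the benchmark labels, the function name and the fixed seed are all hypothetical choices made for illustration:

```python
import random


def interleaved_schedule(benchmarks, repeats, seed=0):
    """Return every benchmark `repeats` times, in a shuffled order.

    A fixed seed makes the order reproducible, so the schedule can be
    recorded alongside the timing data.
    """
    runs = [bench for bench in benchmarks for _ in range(repeats)]
    random.Random(seed).shuffle(runs)
    return runs


# Hypothetical benchmark labels: (language, number of copies of the file).
benchmarks = [(lang, copies) for lang in ("C++", "Rust") for copies in (1, 8, 16, 32)]
schedule = interleaved_schedule(benchmarks, repeats=10)

for language, copies in schedule[:5]:
    print(language, copies)      # first few runs of the mixed schedule
```

Each benchmark still gets the same number of runs; only the order changes, which is what prevents a burst of background activity from landing entirely on one language.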

I was surprised by how well the regression model fitted the data; the fit is rarely this good. Perhaps a larger set of benchmarks would increase the variance.

Widely used programming languages: past, present, and future

Programming languages are like pop groups in that they have followers, fans and supporters; new ones are constantly being created and some eventually become widely popular, while those that were once popular slowly fade away or mutate into something else.

Creating a language is a relatively popular activity. Science fiction and fantasy authors have been doing it since before computers existed, e.g., the Elf language Quenya devised by Tolkien, and in the computer age Star Trek’s Klingon. Some very good how-to books have been written on the subject.

As soon as computers became available, people started inventing programming languages.

What have been the major factors influencing the growth to widespread use of new programming languages (I’m ignoring languages that become widespread within application niches)?

Cobol and Fortran became widely used because there was widespread implementation support for them across computer manufacturers, and they did not have to compete with any existing widely used languages. Various niches had one or more languages that were widely used in that niche, e.g., Algol 60 in academia.

To become widely used during the mainframe/minicomputer age, a new language first had to be ported to the major computers of the day, whose products sometimes supported multiple, incompatible operating systems. No new languages became widely used, in the sense of across computer vendors. Some new languages were widely used by developers, because they were available on IBM computers; for several decades a large percentage of developers used IBM computers. Based on job adverts, RPG was widely used, but PL/1 not so. The use of RPG declined with the decline of IBM.

The introduction of microcomputers (originally 8-bit, then 16, then 32, and finally 64-bit) opened up an opportunity for new languages to become widely used in that niche (which would eventually grow to be the primary computing platform of its day). This opportunity occurred because compiler vendors for the major languages of the day did not want to cannibalize their existing market (i.e., selling compilers for a lot more than the price of a microcomputer) by selling a much lower priced product on microcomputers.

BASIC became available on practically all microcomputers, or rather some dialect of BASIC that was incompatible with all the other dialects. The availability of BASIC on a vendor’s computer promoted sales of the hardware, and it was not worthwhile for the major vendors to create a version of BASIC that reduced portability costs; the profit was in games.

The dominance of the Microsoft/Intel partnership removed the high cost of porting to lots of platforms (by driving them out of business), but created a major new obstacle to the wide adoption of new languages: Developer choice. There had always been lots of new languages floating around, but people only got to see the subset that were available on the particular hardware they targeted. Once the cpu/OS (essentially) became a monoculture most new languages had to compete for developer attention in one ecosystem.

Pascal was in widespread use for a few years on micros (in the form of Turbo Pascal) and university computers (the source of Wirth’s ETH compiler was freely available for porting), but eventually C won developer mindshare and became the most widely used language. In the early 1990s C++ compiler sales took off, but many developers were writing C with a few C++ constructs scattered about the code (e.g., use of new, rather than malloc/free).

Next, the Internet took off, and opened up an opportunity for new languages to become dominant. This opportunity occurred because Internet related software was being made freely available, and established compiler vendors were not interested in making their products freely available.

There were people willing to invest in creating a good-enough implementation of the language they had invented, and giving it away for free. Luck, plus being in the right place at the right time resulted in PHP and Javascript becoming widely used. Network effects prevent any other language becoming widely used. Compatible dialects of PHP and Javascript may migrate widespread usage to quite different languages over time, e.g., Facebook’s Hack.

Java rode to popularity on the coat-tails of the Internet, and when it looked like security issues would reduce it to niche status, it became the vendor supported language for one of the major smart-phone OSs.

Next, smart-phones took off, but the availability of Open Source compilers closed the opportunity window for new languages to become dominant through lack of interest from existing compiler vendors. Smart-phone vendors wanted to quickly attract developers, which meant throwing their weight behind a language that many developers were already familiar with; Apple went with Objective-C (which evolved to Swift), Google with Java (which evolved to Kotlin, because of the Oracle lawsuit).

Where does Python fit in this grand scheme? I don’t yet have an answer, or is my world-view wrong to treat Python usage as being as widespread as C/C++/Java?

New programming languages continue to be implemented; I don’t see this ever stopping. Most don’t attract more users than their implementer, but a few become fashionable amongst the young, who are always looking to attach themselves to something new and shiny.

Will a new programming language ever again become widely used?

Like human languages, programming languages experience strong networking effects. Widely used languages continue to be widely used because many companies depend on code written in them, and developers who can use them can obtain jobs. What company wants to risk using a new language only to find they cannot hire staff who know it? And there are not many people willing to invest in becoming fluent in a language with no immediate job prospects.

Today’s widely used programming languages succeeded in a niche that eventually grew larger than all the other computing ecosystems. The Internet and smart-phones are used by everybody on the planet; there are no bigger ecosystems to provide new languages with a possible route to widespread use. To be widely used a language first has to become fashionable, but from now on, new programming languages that don’t evolve from (i.e., are compatible with) current widely used languages are very unlikely to migrate from fashionable to widely used.

It has always been possible for a proficient developer to dedicate a year+ of effort to create a new language implementation. Adding the polish needed to make it production ready used to take much longer, but these days tool chains such as LLVM supply a lot of the heavy lifting. The problem for almost all language creators/implementers is community building; they are terrible at dealing with other developers.

It’s no surprise that nearly all the new languages that become fashionable originate with language creators who work for a company that happens to feel a need for a new language. Examples include:

- Go, created by Google for internal use, attracted an outside fan base. Company languages are not new, with IBM’s PL/1 being the poster child (or is there a more modern poster child?). At the moment Go is a trendy language, and this feeds a supply of young developers willing to invest in learning it. Once the trendiness wears off, Google will start to have problems recruiting developers, the reason: Being labelled as a Go developer limits job prospects when few other companies use the language. Talk to a manager who has tried to recruit developers to work on applications written in Fortran, Pascal and other once-widely used languages (and even wannabe widely used languages, such as Ada),

- Rust, a vanity project from Mozilla, which they have now cast adrift. Did Rust become fashionable because it arrived at the right time to become the not-Google language? I await a PhD thesis on the topic of the rise and fall of Rust,

- Microsoft’s C# ceased being trendy some years ago. These days I don’t have much contact with developers working in the Microsoft ecosystem, so I don’t know anything about the state of the C# job market.

Every now and again a language creator has the social skills needed to start an active community. Zig caught my attention when I read that its creator, Andrew Kelley, had quit his job to work full-time on Zig. Two and a half years later Zig has its own track at FOSDEM’21.

Will Zig become the next fashionable language, as Rust/Go popularity fades? I’m rooting for Zig because of its name, there are relatively few languages whose name starts with Z; the start of the alphabet is over-represented with language names. It would be foolish to root for a language because of a belief that it has magical properties (e.g., powerful, readable, maintainable), but the young are foolish.
