Archive

Changes in the shape of code during the twenties?

At the end of 2009 I made two predictions for the next decade: Chinese and Indian developers having a major impact on the shape of code (ok, still waiting for this to happen), and scripting languages playing a significant role (got that one right, but then they were already playing a large role).

Since this blog has just entered its second decade, I will bring the next decade’s predictions forward a year.

I don’t see any new major customer ecosystems appearing. Ecosystems are the drivers of software development, and the absence of new ecosystems has several consequences, including:

- No major new languages: Creating a language is a vanity endeavour. Vanity projects can take off if they are in the right place at the right time. New ecosystems provide opportunities for new languages to become widely used by being in at the start and growing with the ecosystem. There is another opportunity locus; it is fashionable for companies that see themselves as thought-leaders to have their own language, e.g., Google, Apple, and Mozilla. Invent your language at the right time, while working for a thought-leader company, and your language could become well-known enough to take off.

I don’t see any major new ecosystems appearing, and all the likely companies already have their own language.

Any new language also faces the problem of not having a large collection of packages.

- Software will be more thoroughly tested: When an ecosystem is new, the incentives drive early and frequent releases (to build a customer base); software just has to be good enough. Once a product is established, companies can invest in addressing issues that customers find annoying, like faulty behavior; the incentive change results in more testing.

There are other forces at work around testing. Companies are experiencing some very expensive faults (testing may be expensive, but not testing may be more expensive) and automatic test generation is becoming commercially usable (i.e., the cost of some kinds of testing is decreasing).

The evolution of widely used languages.

- I think Fortran and C will have new features added, with relatively little fuss, and will quietly continue to be widely used (to the dismay of the fashionista).

- There is a strong expectation that C++ and Java should continue to evolve:

- I expect the ISO C++ work to implode, because there are too many people pulling in too many directions. It makes sense for the gcc and llvm teams to cooperate in taking C++ in a direction that satisfies developers’ needs, rather than the needs of bored consultants. What are Microsoft’s views? They only have their own compiler for strategic reasons (they make little if any profit selling compilers, compilers are an unnecessary drain on management time; who cares what happens to the language).

- It is going to be interesting watching the impact of Oracle’s move to charging for runtimes. I have no idea what might happen to Java.

In terms of code volume, the future surely has to be scripting languages, and in particular Python, JavaScript and PHP. Ten years from now, will there be a single, widely used language? People have been predicting, for many years, that web languages will take over the world; perhaps there will be a sudden switch and I will see that the choice is obvious.

Moore’s law is now dead, which means researchers are going to have to look for completely new techniques for building logic gates. If photonic computers happen, then ternary notation may reappear (it was used in at least one early Russian computer); I’m not holding my breath for this to occur.

Premium mediocrity is software engineering’s demographic

Software engineering is one of the skills needed to write software, but outside of student coursework is rarely an end in itself. Software is written to do something and the person writing the code needs to know about the something.

If enough people are involved in something, a job title gets created by inserting the appropriate application domain name before ‘software engineer’, e.g., the something software engineer; systems software engineering was one of the first recorded uses of ‘software engineering’, ‘embedded software engineer’ is a common usage and more recently ‘research software engineer’ has been trending.

Customers want the software systems they use to fulfill their needs. Implementing a software system involves figuring out what the needs are, how best to implement them using the available resources and producing usable software; all within a given amount of time and money.

How much software engineering knowledge and skill does a something software engineer need? The obvious answer is: enough to get the something done. Ok, how much is needed to get the something done?

There are only so many hours in a day: what percentage of available time is best spent learning about software engineering, what percentage learning about the something and what percentage doing rather than learning?

The only data I have for answering this question is my own experience of talking to people, from a wide range of business and application areas, whose job includes writing software. My background is compilers (from C to Cobol) and static analysis, my knowledge of end-user application domains is derived from talking to the developers who were using the compilers or static analysis tools I was working on at the time.

I have always been struck by the minimalist knowledge of most developers, when it comes to the programming language they were using. It took a while, but eventually I accepted the obvious: most developers don’t need to know much about the language they are using to get their job done.

By a process that resembles incentivized trial and error, people learn how to write code that does what they want; the compiler does not complain and the output looks ok. For some languages, I used to be able to work out which books a relatively new developer had used to guide their learning, by matching a book’s example code snippets with the code they had written.

This minimalist knowledge approach to programming languages is cost effective because most code is simple and has a short lifetime; the cost of learning lots of language details does not provide enough benefit to be worthwhile.

I am a minimalist language Python developer. Why would I spend time learning more about the semantics of Python than I need to?

What are the benefits of being a language expert? Compiler writers get paid to learn the ins and outs of a language and I know a few people who became language experts without being compiler writers (they got hooked on knowing the language). I have found it useful for keeping my code simple (I am not tempted to write complicated code, or use obscure constructs, in the mistaken belief that they are better than the simple stuff); it is also useful for figuring out other people’s complicated or obscure usage (created intentionally or accidentally).

These benefits are not enough to convince me to learn more about Python, the language. I am content to wait until I need to learn more.

I have occasionally taught advanced programming courses, aimed at developers with a few years’ experience working in industry. These courses had to include the word ‘advanced’ in their title, otherwise developers with a few years’ experience would never have signed up; ‘advanced’ is a necessary marketing signal (others who have run such courses report the same behavior). The course contents were essentially a review of basic material, with lots of examples; most of those attending did not know enough to follow real advanced material. The courses were really about uncovering and correcting bad habits that attendees had picked up over time (often, a technique was discovered to fix a problem and then subsequently adopted for more general use).

What about general software engineering skills? A minimalist knowledge approach to software engineering is cost effective because most code does not exist long enough to make it worthwhile investing in reducing future maintenance costs. Yes, it is more expensive for those that survive to become commonly used, but think of all the savings from not investing in those that did not survive. Software engineering decisions should not be driven by survivorship bias.

The first requirement of any commercial software system is to attract paying customers. In a rapidly changing market, being first with a saleable product can be the difference between life and death. Minimizing software engineering effort saves time and money (in the short term). If the product is a success, there will be money to pay for what needs to be done, if the product fails nobody cares. I have seen a lot of software systems that are a commercial success and a complete software engineering mess; successful, well engineered software is less common (or perhaps they just don’t need me to help them out).

Software engineering mediocrity is not only viable, for most people it’s the outcome of making a cost/benefit decision to invest their learning time in the application domain, not software engineering (or computer language).

Of course, nobody wants to be seen as being mediocre (for some people, mediocre overstates their skill level); their behavior is premium mediocre.

There are a few application areas where software engineering skills are needed, e.g., safety critical software and warehouse scale computing. A few high profile cases are hiding the reality that whatever works is cost effective for most software solutions.

Evidence for 28 possible compilers in 1957

In two earlier posts I discussed the early compilers for languages that are still widely used today and a report from 1963 showing how nothing has changed in programming languages

The Handbook of Automation Computation and Control Volume 2, published in 1959, contains some interesting information. In particular Table 23 (below) is a list of “Automatic Coding Systems” (containing over 110 systems from 1957, of which 54 have a cross in the compiler column):

Computer | System Name or Acronym | Developed by | Code | M.L. | Assem. | Inter. | Comp. | Oper. Date | Indexing | Fl-Pt | Symb. | Algeb.

IBM 704 AFAC Allison G.M. C X Sep 57 M2 M 2 X

CAGE General Electric X X Nov 55 M2 M 2

FORC Redstone Arsenal X Jun 57 M2 M 2 X

FORTRAN IBM R X Jan 57 M2 M 2 X

NYAP IBM X Jan 56 M2 M 2

PACT IA Pact Group X Jan 57 M2 M 1

REG-SYMBOLIC Los Alamos X Nov 55 M2 M 1

SAP United Aircraft R X Apr 56 M2 M 2

NYDPP Servo Bur. Corp. X Sep 57 M2 M 2

KOMPILER3 UCRL Livermore X Mar 58 M2 M 2 X

IBM 701 ACOM Allison G.M. C X Dec 54 S1 S 0

BACAIC Boeing Seattle A X X Jul 55 S 1 X

BAP UC Berkeley X X May 57 2

DOUGLAS Douglas SM X May 53 S 1

DUAL Los Alamos X X Mar 53 S 1

607 Los Alamos X Sep 53 1

FLOP Lockheed Calif. X X X Mar 53 S 1

JCS 13 Rand Corp. X Dec 53 1

KOMPILER 2 UCRL Livermore X Oct 55 S2 1 X

NAA ASSEMBLY N. Am. Aviation X

PACT I Pact Group R X Jun 55 S2 1

QUEASY NOTS Inyokern X Jan 55 S

QUICK Douglas ES X Jun 53 S 0

SHACO Los Alamos X Apr 53 S 1

SO 2 IBM X Apr 53 1

SPEEDCODING IBM R X X Apr 53 S1 S 1

IBM 705-1, 2 ACOM Allison G.M. C X Apr 57 S1 0

AUTOCODER IBM R X X X Dec 56 S 2

ELI Equitable Life C X May 57 S1 0

FAIR Eastman Kodak X Jan 57 S 0

PRINT I IBM R X X X Oct 56 S2 S 2

SYMB. ASSEM. IBM X Jan 56 S 1

SOHIO Std. Oil of Ohio X X X May 56 S1 S 1

FORTRAN IBM-Guide A X Nov 58 S2 S 2 X

IT Std. Oil of Ohio C X S2 S 1 X

AFAC Allison G.M. C X S2 S 2 X

IBM 705-3 FORTRAN IBM-Guide A X Dec 58 M2 M 2 X

AUTOCODER IBM A X X Sep 58 S 2

IBM 702 AUTOCODER IBM X X X Apr 55 S 1

ASSEMBLY IBM X Jun 54 1

SCRIPT G. E. Hanford R X X X X Jul 55 S1 S 1

IBM 709 FORTRAN IBM A X Jan 59 M2 M 2 X

SCAT IBM-Share R X X Nov 58 M2 M 2

IBM 650 ADES II Naval Ord. Lab X Feb 56 S2 S 1 X

BACAIC Boeing Seattle C X X X Aug 56 S 1 X

BALITAC M.I.T. X X X Jan 56 S1 2

BELL L1 Bell Tel. Labs X X Aug 55 S1 S 0

BELL L2,L3 Bell Tel. Labs X X Sep 55 S1 S 0

DRUCO I IBM X Sep 54 S 0

EASE II Allison G.M. X X Sep 56 S2 S 2

ELI Equitable Life C X May 57 S1 0

ESCAPE Curtiss-Wright X X X Jan 57 S1 S 2

FLAIR Lockheed MSD, Ga. X X Feb 55 S1 S 0

FOR TRANSIT IBM-Carnegie Tech. A X Oct 57 S2 S 2 X

IT Carnegie Tech. C X Feb 57 S2 S 1 X

MITILAC M.I.T. X X Jul 55 S1 S 2

OMNICODE G. E. Hanford X X Dec 56 S1 S 2

RELATIVE Allison G.M. X Aug 55 S1 S 1

SIR IBM X May 56 S 2

SOAP I IBM X Nov 55 2

SOAP II IBM R X Nov 56 M M 2

SPEED CODING Redstone Arsenal X X Sep 55 S1 S 0

SPUR Boeing Wichita X X X Aug 56 M S 1

FORTRAN (650T) IBM A X Jan 59 M2 M 2

Sperry Rand 1103A COMPILER I Boeing Seattle X X May 57 S 1 X

FAP Lockheed MSD X X Oct 56 S1 S 0

MISHAP Lockheed MSD X Oct 56 M1 S 1

RAWOOP-SNAP Ramo-Wooldridge X X Jun 57 M1 M 1

TRANS-USE Holloman A.F.B. X Nov 56 M1 S 2

USE Ramo-Wooldridge R X X Feb 57 M1 M 2

IT Carn. Tech.-R-W C X Dec 57 S2 S 1 X

UNICODE R Rand St. Paul R X Jan 59 S2 M 2 X

Sperry Rand 1103 CHIP Wright A.D.C. X X Feb 56 S1 S 0

FLIP/SPUR Convair San Diego X X Jun 55 S1 S 0

RAWOOP Ramo-Wooldridge R X Mar 55 S1 1

SNAP Ramo-Wooldridge R X X Aug 55 S1 S 1

Sperry Rand Univac I and II A0 Remington Rand X X X May 52 S1 S 1

A1 Remington Rand X X X Jan 53 S1 S 1

A2 Remington Rand X X X Aug 53 S1 S 1

A3,ARITHMATIC Remington Rand C X X X Apr 56 S1 S 1

AT3,MATHMATIC Remington Rand C X X Jun 56 S1 S 2 X

B0,FLOWMATIC Remington Rand A X X X Dec 56 S2 S 2

BIOR Remington Rand X X X Apr 55 1

GP Remington Rand R X X X Jan 57 S2 S 1

MJS (UNIVAC I) UCRL Livermore X X Jun 56 1

NYU,OMNIFAX New York Univ. X Feb 54 S 1

RELCODE Remington Rand X X Apr 56 1

SHORT CODE Remington Rand X X Feb 51 S 1

X-I Remington Rand C X X Jan 56 1

IT Case Institute C X S2 S 1 X

MATRIX MATH Franklin Inst. X Jan 58

Sperry Rand File Comp. ABC R Rand St. Paul Jun 58

Sperry Rand Larc K5 UCRL Livermore X X M2 M 2 X

SAIL UCRL Livermore X M2 M 2

Burroughs Datatron 201, 205 DATACODEI Burroughs X Aug 57 MS1 S 1

DUMBO Babcock and Wilcox X X

IT Purdue Univ. A X Jul 57 S2 S 1 X

SAC Electrodata X X Aug 56 M 1

UGLIAC United Gas Corp. X Dec 56 S 0

Dow Chemical X

STAR Electrodata X

Burroughs UDEC III UDECIN-I Burroughs X 57 M/S S 1

UDECOM-3 Burroughs X 57 M S 1

M.I.T. Whirlwind ALGEBRAIC M.I.T. R X S2 S 1 X

COMPREHENSIVE M.I.T. X X X Nov 52 S1 S 1

SUMMER SESSION M.I.T. X Jun 53 S1 S 1

Midac EASIAC Univ. of Michigan X X Aug 54 S1 S

MAGIC Univ. of Michigan X X X Jan 54 S1 S

Datamatic ABC I Datamatic Corp. X

Ferranti TRANSCODE Univ. of Toronto R X X X Aug 54 M1 S

Illiac DEC INPUT Univ. of Illinois R X Sep 52 S1 S

Johnniac EASY FOX Rand Corp. R X Oct 55 S

Norc NORC COMPILER Naval Ord. Lab X X Aug 55 M2 M

Seac BASE 00 Natl. Bur. Stds. X X

UNIV. CODE Moore School X Apr 55

Chart Symbols used:

- Code: R = Recommended for this computer, sometimes only for heavy usage. C = Common language for more than one computer. A = System is both recommended and has common language.

- Indexing: M = Actual index registers or B boxes in machine hardware. S = Index registers simulated in synthetic language of system. 1 = Limited form of indexing, either stepped unidirectionally or by one word only, or having certain registers applicable to only certain variables, or not compound (by combination of contents of registers). 2 = General form, any variable may be indexed by any one or combination of registers which may be freely incremented or decremented by any amount.

- Floating point: M = Inherent in machine hardware. S = Simulated in language.

- Symbolism: 0 = None. 1 = Limited, either regional, relative or exactly computable. 2 = Fully descriptive English word or symbol combination which is descriptive of the variable or the assigned storage.

- Algebraic: A single continuous algebraic formula statement may be made. Processor has mechanisms for applying associative and commutative laws to form operative program.

- M.L. = Machine language. Assem. = Assemblers. Inter. = Interpreters. Compl. = Compilers.

Are the compilers really compilers as we know them today, or is this terminology that has not yet settled down? The computer terminology chapter refers readers interested in Assembler, Compiler and Interpreter to the entry for Routine:

“Routine. A set of instructions arranged in proper sequence to cause a computer to perform a desired operation or series of operations, such as the solution of a mathematical problem.

…

Compiler (compiling routine), an executive routine which, before the desired computation is started, translates a program expressed in pseudo-code into machine code (or into another pseudo-code for further translation by an interpreter).

…

Assemble, to integrate the subroutines (supplied, selected, or generated) into the main routine, i.e., to adapt, to specialize to the task at hand by means of preset parameters; to orient, to change relative and symbolic addresses to absolute form; to incorporate, to place in storage.

…

Interpreter (interpretive routine), an executive routine which, as the computation progresses, translates a stored program expressed in some machine-like pseudo-code into machine code and performs the indicated operations, by means of subroutines, as they are translated. …”

The definition of “Assemble” sounds more like a link-load than an assembler.

When the coding system has a cross in both the assembler and compiler column, I suspect we are dealing with what would be called an assembler today. There are 28 crosses in the Compiler column that do not have a corresponding entry in the assembler column; does this mean there were 28 compilers in existence in 1957? I can imagine many of the languages being very simple (the fashionability of creating programming languages was already being called out in 1963), so producing a compiler for them would be feasible.

The citation given for Table 23 contains a few typos. I think the correct reference is: Bemer, Robert W. “The Status of Automatic Programming for Scientific Problems.” Proceedings of the Fourth Annual Computer Applications Symposium, 107-117. Armour Research Foundation, Illinois Institute of Technology, Oct. 24-25, 1957.

Programming Languages: nothing changes

While rummaging around today I came across: Programming Languages and Standardization in Command and Control by J. P. Haverty and R. L. Patrick.

Much of this report could have been written in 2013, but it was actually written fifty years earlier; the date is given away by “… the effort to develop programming languages to increase programmer productivity is barely eight years old.”

Much of the sound and fury over programming languages is the result of zealous proponents touting them as the solution to the “programming problem.”

I don’t think any major new sources of sound and fury have come to the fore.

… the designing of programming languages became fashionable.

Has it ever gone out of fashion?

Now the proliferation of languages increased rapidly as almost every user who developed a minor variant on one of the early languages rushed into publication, with the resultant sharp increase in acronyms. In addition, some languages were designed practically in vacuo. They did not grow out of the needs of a particular user, but were designed as someone’s “best guess” as to what the user needed (in some cases they appeared to be designed for the sake of designing).

My post on this subject was written 49 years later.

…a computer user, who has invested a million dollars in programming, is shocked to find himself almost trapped to stay with the same computer or transistorized computer of the same logical design as his old one because his problem has been written in the language of that computer, then patched and repatched, while his personnel has changed in such a way that nobody on his staff can say precisely what the data processing Job is that his machine is now doing with sufficient clarity to make it easy to rewrite the application in the language of another machine.

Vendor lock-in has always been good for business.

Perhaps the most flagrantly overstated claim made for POLs is that they result in better understanding of the programming operation by higher-level management.

I don’t think I have ever heard this claim made. But then my programming experience started a bit later than this report.

… many applications do not require the services of an expert programmer.

Ssshh! Such talk is bad for business.

The cost of producing a modern compiler, checking it out, documenting it, and seeing it through initial field use, easily exceeds $500,000.

For ‘big-company’ written compilers this number has not changed much over the years. Of course man+dog written compilers are a lot cheaper and new companies looking to aggressively enter the market can spend an order of magnitude more.

In a young rapidly growing field major advances come so quickly or are so obvious that instituting a measurement program is probably a waste of time.

True.

At some point, however, as a field matures, the costs of a major advance become significant.

Hopefully this point in time has now arrived.

Language design is still as much an art as it is a science. The evaluation of programming languages is therefore much akin to art criticism–and as questionable.

Calling such a vanity driven activity an ‘art’ only helps glorify it.

Programming languages represent an attack on the “programming problem,” but only on a portion of it–and not a very substantial portion.

In fact, it is probably very insubstantial.

Much of the “programming problem” centers on the lack of well-trained experienced people–a lack not overcome by the use of a POL.

Nothing changes.

The following table is for those of you complaining about how long it takes to compile code these days. I once had to compile some Coral 66 on an Elliott 903; the compiler resided in 5(?) boxes of paper tape, one box per compiler pass. Compilation involved: reading the paper tape containing the first pass into the computer, running this program and then reading the paper tape containing the program source, then reading the second paper tape containing the next compiler pass (there was not enough memory to store all compiler passes at once), which processed the in-memory output of the first pass; this process was repeated for each successive pass, producing a paper tape output of the compiled code. I suspect that compiling on the machines listed gave the programmers involved plenty of exercise and practice splicing snapped paper-tape.

| Computer | Cobol statements | Machine instructions | Compile time (mins) |
|---|---|---|---|
| UNIVAC II | 630 | 1,950 | 240 |
| RCA 501 | 410 | 2,132 | 72 |
| GE 225 | 328 | 4,300 | 16 |
| IBM 1410 | 174 | 968 | 46 |
| NCR 304 | 453 | 804 | 40 |
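To put the table's numbers on a common footing, here is a small sketch that derives two ratios from them: Cobol statements compiled per minute, and machine instructions generated per Cobol statement. The figures are copied from the table; the names `compile_data` and `compile_rates` are made up for this illustration, and the ratios say nothing about how comparable the five test programs were.

```python
# Figures copied from the table above:
# computer -> (Cobol statements, machine instructions, compile time in minutes)
compile_data = {
    "UNIVAC II": (630, 1950, 240),
    "RCA 501": (410, 2132, 72),
    "GE 225": (328, 4300, 16),
    "IBM 1410": (174, 968, 46),
    "NCR 304": (453, 804, 40),
}

def compile_rates(data):
    """Return {computer: (statements per minute, instructions per statement)}."""
    rates = {}
    for computer, (statements, instructions, minutes) in data.items():
        rates[computer] = (statements / minutes, instructions / statements)
    return rates

rates = compile_rates(compile_data)
for computer, (per_minute, expansion) in rates.items():
    print(f"{computer:10} {per_minute:6.2f} statements/min, "
          f"{expansion:5.2f} instructions/statement")
```

On these figures the UNIVAC II managed fewer than three statements a minute, while the GE 225 was the quickest at about twenty.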

Uncovering the undefined behaviors

I think that all programming languages contain some constructs that have undefined behavior.

The C Standard explicitly lists various constructs as having undefined behavior. It also specifies that “Undefined behavior is otherwise indicated in this International Standard by the words ‘undefined behavior’ or by the omission of any explicit definition of behavior”; the second half of the sentence refers to what might be called implicit undefined behavior. Implicit undefined behavior can be subdivided into intentional and unintentional. Intentional undefined behavior applies to constructs that the committee considered and decided (and continues to decide) to say nothing about (e.g., question 19), while unintentional undefined behavior applies to constructs that the committee did not explicitly consider (when discovered, these often end up as defect reports, which are sometimes resolved as intentionally undefined behavior).

Fans of some languages claim that ‘their’ language does not contain any undefined behaviors.

Ada does not explicitly specify any construct as having undefined behavior, but it does specify that some constructs generate a bounded error; a rose by any other name…

I sometimes bump into language inventors claiming that ‘their’ language is fully specified, i.e., does not contain any undefined behaviors. My first question to them, about the behavior of division involving negative values, invariably requires me to explain that there are two possible ways of doing it (ignorance is bliss when fully specifying a language). The invariable answer is that the behavior is whatever the underlying implementation does (which is often written in C). In other words, they have imported all the undefined behaviors of the implementation language.
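The two ways of dividing negative values can be seen in a few lines of Python. This is only a sketch: `truncating_div` and `flooring_div` are names invented for the illustration, and the one language fact relied on is that Python's own `//` operator rounds toward negative infinity.

```python
def truncating_div(a, b):
    """Integer quotient rounded toward zero."""
    q = abs(a) // abs(b)
    return q if (a < 0) == (b < 0) else -q

def flooring_div(a, b):
    """Integer quotient rounded toward negative infinity (Python's //)."""
    return a // b

for a, b in [(7, 2), (-7, 2), (7, -2), (-7, -2)]:
    t, f = truncating_div(a, b), flooring_div(a, b)
    # The remainder is whatever makes a == b*q + r hold for each quotient.
    print(f"{a:3} / {b:2}: truncate q={t:2} r={a - b * t:2}   "
          f"floor q={f:2} r={a - b * f:2}")
```

The two definitions agree when both operands have the same sign and differ by one when the signs differ (for -7 and 2, the quotient is -3 under truncation and -4 under flooring), so a language specification has to pick one or leave the choice to the implementation.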

Follow-up questions include: what is the order of expression evaluation (e.g., left-to-right, right-to-left, inside out…), what is the order of function argument evaluation (often driven by the direction of stack growth), what is the order of initialization and other order related questions that come to mind. Their fully specified language quickly turns out to be a sham.
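Evaluation order only becomes visible when operands have side effects. The sketch below uses a hypothetical helper, `traced`, to log the moment each operand is evaluated. Python is one language whose reference manual does pin this down (expressions are evaluated left to right), so the logged order here is guaranteed; in a language that leaves the order unspecified, the same experiment can give different answers on different implementations.

```python
def traced(label, value, log):
    """Append label to log when this operand is evaluated, then return value."""
    log.append(label)
    return value

def subtract(x, y):
    return x - y

# Function arguments: which one is evaluated first?
arg_log = []
arg_result = subtract(traced("first", 10, arg_log), traced("second", 3, arg_log))

# Operands of an expression: does operator precedence change the order?
expr_log = []
expr_result = (traced("a", 10, expr_log)
               - traced("b", 3, expr_log) * traced("c", 2, expr_log))

print(arg_log, arg_result)    # ['first', 'second'] 7
print(expr_log, expr_result)  # ['a', 'b', 'c'] 4
```

Precedence decides how the operands are grouped, not when they are evaluated: the multiplication binds tighter, yet `a` is still evaluated before `b` and `c`.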

A recent post by John Regehr talks about Gödel’s incompleteness theorem as a source of undefined behavior. My understanding is that the underlying argument is built on non-termination. How is it possible to tell the difference between non-termination and lasting longer than the age of the universe? In itself I don’t think this theorem is a source of undefined behavior; more enlightenment welcome.

Evidence for the benefits of strong typing, where is it?

It is often claimed that writing software using a strongly typed programming language bestows worthwhile benefits. Those making the claims can sometimes be rather vague about exactly what the benefits are, while at other times appear willing to claim almost any benefit. What does the empirical evidence have to say (let’s ignore the “which languages are strongly typed?” elephant in the room)?

Until recently there had been two empirical studies (plus a couple of language comparison experiments; one of the better ones involves the researcher timing himself implementing various algorithms in various languages; Zislis “An Experiment in Algorithm Implementation”), while in the last few years a group has been experimenting away in Germany (three’ish published data sets).

Measuring changes in developer performance caused by the use of different programming languages is very hard, some of the problems include:

- every person is different: a way needs to be found to take account of differences in subject ability/knowledge/characteristics,

- every problem is different: it may be easier to write a program to solve a problem using language X than using language Y,

- it is difficult to obtain experimental subjects.

The experimental procedure adopted by all the experiments discussed here is to:

- select two different languages or the same language modified to not support some type constructs,

- get students (mostly upper-undergraduates+graduates) to volunteer as experimental subjects,

- have each subject use one language to solve a problem and then use the other language to solve the same problem. Each subject is randomly assigned to a group using a given language order (the experiments start out with an equal number of subjects in each group, but not all subjects complete every problem),

- in some cases the previous step is repeated for new problems.

Having subjects solve the same problem twice creates the opportunity for learning to occur during the implementation of the first program and for this learning to improve performance during the second implementation. The experimental procedures employed generate information that can be used during the analysis of the data (in my case using a mixed-model in R; download code and all data) to factor this ordering effect into the created model.
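To see how an ordering effect can be separated from a language effect, here is a deliberately simplified sketch. It is not the mixed-model analysis mentioned above: it is the textbook two-group crossover estimate, and the function name and all the timing data are invented for the illustration. Each subject's second-minus-first difference cancels that subject's own ability; in the A-then-B group the difference contains (B - A) plus the ordering effect, in the B-then-A group it contains (A - B) plus the ordering effect, so half the difference and half the sum of the two group means recover the two effects.

```python
from statistics import mean

def crossover_effects(a_then_b, b_then_a):
    """Estimate (language_effect, order_effect) from a two-period crossover.

    Each argument is a list of (first_time, second_time) pairs, one per subject.
    language_effect: estimated extra time when using language B rather than A.
    order_effect: estimated change in time from the first to the second attempt.
    """
    d_ab = mean(second - first for first, second in a_then_b)  # (B - A) + order
    d_ba = mean(second - first for first, second in b_then_a)  # (A - B) + order
    return (d_ab - d_ba) / 2, (d_ab + d_ba) / 2

# Invented data (minutes): language B costs 10 more than A, and the second
# attempt is 20 faster; subject baselines range from 100 to 180.
group_a_then_b = [(100, 90), (140, 130), (180, 170)]
group_b_then_a = [(130, 100), (170, 140)]

language_effect, order_effect = crossover_effects(group_a_then_b, group_b_then_a)
print(language_effect, order_effect)  # 10.0 -20.0
```

Real data is noisy and subjects drop out, which is why a model that also estimates the between-subject variation is the better tool; the sketch only shows why the design makes the two effects separable.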

So what are the results? In chronological order we have (if you know of anymore published work please tell me):

- Gannon “An Experimental Evaluation of Data Type Conversions”: Implemented compilers for two simple languages (think BCPL and BCPL+a string type and simple structures; by today’s standards one language is not quite as weakly typed as the other). One problem had to be solved and this was designed to require the use of features available in both languages, e.g., a string oriented problem (final programs were between 50-300 lines). The result data included number of errors during development and number of runs needed to create a working program (this all happened in 1977, well before the era of personal computers, when batch processing was king).

There was a small language difference in number of errors/batch submissions; the difference was about half the size of that due to the order in which languages were used by subjects, both of which were small in comparison to the variation due to subject performance differences. While the language effect was small, it exists. To what extent can the difference be said to be due to stronger typing rather than only one language having built in support for a string type? Who knows; no more experiments like this were performed for 20 years.

- Prechelt & Tichy “A Controlled Experiment to Assess the Benefits of Procedure Argument Type Checking”: Used two C compilers, one K&R C (i.e., no argument checking of function calls) and the other ANSI C, with subjects solving one problem using both compilers; available output data was time taken by subjects to solve the problem.

Using the compiler without argument checking increased implementation time by around 10%, an effect about five times smaller than the variation in subject performance (there was an ordering effect of around 30%).

- Mayer, Kleinschmager & Hanenberg: Two experiments used different languages (Java and Groovy) and multiple problems; result data was time for subjects to complete the task (“Do Static Type Systems Improve the Maintainability of Software Systems? An Empirical Study” and “An Empirical Study of the Influence of Static Type Systems on the Usability of Undocumented Software”). There was no significant difference due to language alone (surprisingly), there were differences due to language order, and there were big differences due to language/problem interaction, with some problems solved more quickly in Java and others more quickly in Groovy. Again there was large variation due to subject performance.

Another experiment used a single language (Java) and multiple problems involving making use of either Java’s generic types or non-generic types (“Do Developers Benefit from Generic Types?”). Again the only significant language difference effects occurred through interaction with other variables in the experiment (e.g., the problem or the language ordering) and again there were large variations in subject performance.

In summary, when a language typing/feature effect has been found its contribution to overall developer performance has been small.

I think some reasons that the effects of typing have been so small, or non-existent, include (I should declare my belief that strong typing is useful):

- the use of students as subjects. Most students have very little programming experience relative to professional developers (i.e., under 100 hours vs. thousands of hours). I can easily imagine many student subjects often finding the warnings produced by the type system more confusing than helpful. More experienced developers are in a position to make full use of what a type system offers, and researchers should try to use professional developers as subjects (it is not that hard to obtain such volunteers).

- the small size of the problems. Typing comes into its own when used to organize and control large amounts of code. I understand the constraints of running an experiment limit the amount of code involved.

An ISO Standard for R (just kidding)

IST/5, the British Standards’ committee responsible for programming languages in the UK, has a new(ish) committee secretary and like all people in a new role wants to see a vision of the future; IST/5 members have been emailed asking us what we see happening in the programming language standards’ world over the next 12 months.

The answer is, of course, that the next 12 months in programming language standards is very likely to be the same as the previous 12 months and the previous 12 before that. Programming language standards move slowly; you don’t want existing code broken by new features and it would be a huge waste of resources creating a standard for every popular today/forgotten tomorrow language.

While true, the above is probably not a good answer to give within an organization that knows its business intrinsically works this way, but pines for others to see it as doing dynamic, relevant, even trendy things. What could I say that sounded plausible and new? Big data was the obvious bandwagon waiting to be jumped on and there is no standard for R, so I suggested that work on this exciting new language might start in the next 12 months.

I am not proposing that anybody start work on an ISO standard for R, in fact at the moment I think it would be a bad idea; the purpose of suggesting the possibility is to create some believable buzz to suggest to those sitting on the committees above IST/5 that we have our finger on the pulse of world events.

The purpose of a standard is to create agreement around one way of doing things and thus save lots of time/money that would otherwise be wasted on training/tools to handle multiple language dialects. One language for which this worked very well is C, for which there were 100+ incompatible compilers in the early 1980s (it was a nightmare); with the publication of the C Standard users finally had a benchmark that they could require their suppliers to meet (it took 4-5 years for the major suppliers to get there).

R is not suffering from a proliferation of implementations (incompatible or otherwise); there is no problem for an R standard to solve.

Programming language standards do get created for reasons other than being generally useful. The ongoing work on C++ is a good example of consultant-driven standards development; consultants who make their living writing and giving seminars about the latest new feature of C++ require a steady stream of new features to talk about and have an obvious need to keep new versions of the standard rolling down the production line. Feeling that a language is unappreciated is another reason for creating an ISO Standard; the Modula-2 folk told me that once it became an ISO Standard the use of Modula-2 would take off. R folk seem to have a reasonable grip on reality, or have I missed a lurking distorted view of reality that will eventually give people the drive to spend years working their fingers to the bone to create a standard that nobody is really that interested in?

Reality in the world of programming language standards

I see a lot of steam being vented about the standards’ process as applied to programming languages and software related topics. Knowing something about how the process works might help people live calmer lives, at least once they have calmed down after reading this article. What I have to say applies to programming language standards because these are what I have been involved with, as a member and convener of various UK and international committees, for 25+ years.

- ISO and your national standards’ body don’t care about the standard you are talking about.

These organizations are monopolies that are required to demonstrate that documented procedures are followed by all concerned. Can you think of any organizational structure that would create fewer incentives for those on the inside to listen to those on the outside?

Yes, these organizations do sell standards but the sales model is all about the long tail and no peak, to speak of, of best sellers. The real business model for running a standards’ organization is to either charge members a fee (your country pays membership dues for each Standards Committee it wants a say in; if your country has not paid to be a member of ISO JTC 1/SC22 you have no say in programming language standards. ANSI in the US charges people for the right to volunteer their time to attend meetings to work on a standard) and/or rely on government subsidy.

Not being cared about is actually a luxury that people who work in programming language standards should aspire to. The bureaucrats who work in standards hate us; here in the UK there has been at least one attempt to kill off work on programming language standards and I have heard of similar experiences in other countries. The problem is that the standards we produce don’t fit the mold that works for most other standards; programming language standards contain an order of magnitude more pages than the average standard (until recently there was a print run of new standards which then had to be stored until sold and the volume occupied by programming language standards was of note {so I’m reliably informed}), take longer to produce (i.e., more work for the bureaucrats) and all this cost is not justified by the sales figures (which are confidential and last time I saw them only just required me to take my shoes and socks off to count).

- Standards are created by the volunteers who regularly turn up at meetings.

It is only the enthusiasm of these volunteers that makes the process work. If you don’t turn up at meetings then what you think does not count (not quite true, something you write might influence the thinking of one of the worker bees who attends meetings resulting in wording in the standard).

If you really are interested in a standard then become an active member of the committee responsible for it, at least the national one and, if you have the time, the international one.

- Committee documents can be made public.

There are no rules preventing a standards committee putting its documents on a website for Joe public to download. The issue is finding somebody willing to do the work of hosting the website (the programming language world is lucky to have Keld Simonsen) and a willingness of committee members to be open about all their documents.

Looking in from the outside it seems to me that many non-programming language committees want to maintain an aura of mystique and privileged access.

Compiler writing is for hedgehogs

It is said that a fox knows many things, but the hedgehog knows one big thing. An insightful article by Venkatesh Rao (Venkat) showed how foxes and hedgehogs uniquely map to the two contrasting philosophical points of view of those having weak views that are strongly held (a fox) and those having strong views that are weakly held (a hedgehog).

Venkat observes that the many things the fox knows are acquired from multiple sources and that this disparate collection of knowledge is not connected together by any consistent set of core principles; the one big thing that a hedgehog knows consists of knowledge that is connected by a small set of consistent core principles.

An average developer’s knowledge of a language is very fox-like, i.e., it is culled from many particular instances with each snippet of knowledge being accompanied by the experience around which it was obtained. Back in the day, the ‘advanced’ courses I used to give to developers who had 2-3 years’ experience were really designed to show how the components of a language fitted together, i.e., to provide a structure to what they already knew about the language. Switching developers from an approach based on their experience of particular instances for each language feature to a rule-based approach was often hard work; some developers seem to be naturally driven by itemized personal experiences.

Of necessity a compiler writer spends a lot of time studying one programming language (I’m excluding those who invent their own language as they write the compiler for it) and/or hardware cpu. This extended period of study, assuming the developer has sufficient cognitive capacity (the drop out rate is high), creates a heavily interconnected knowledge of the language in the compiler writer’s head, i.e., they understand one thing very deeply and have strong views created by the core rules they have created to organize this knowledge. These views are weakly held because experience shows that every now and again a major insight is achieved that changes the developer’s perspective completely.

This fox-like characteristic of developer language knowledge goes a long way towards explaining why religious language wars go on for so long and can be so ferocious. A fox is arguing from personal experience that is not based on a set of core principles; every point has to be argued because there is nothing connecting them, undermining one idea does not affect the status of the beliefs about anything else.

I am not arguing that being a fox is a good or a bad thing, and I am certainly not arguing that everybody should spend the huge amount of time needed to become a hedgehog (it is not a cost effective use of time). I am simply making an observation about a state of affairs, and one that is likely to continue because there are no incentives in trying to change things.

I think being a major contributor in the creation of any large and complex software system requires that somebody be or become a hedgehog.

I think that many software developers are foxes; of course, to people looking in, developers appear to be hedgehogs in the world of software.

R now has its own shelf in Dillons

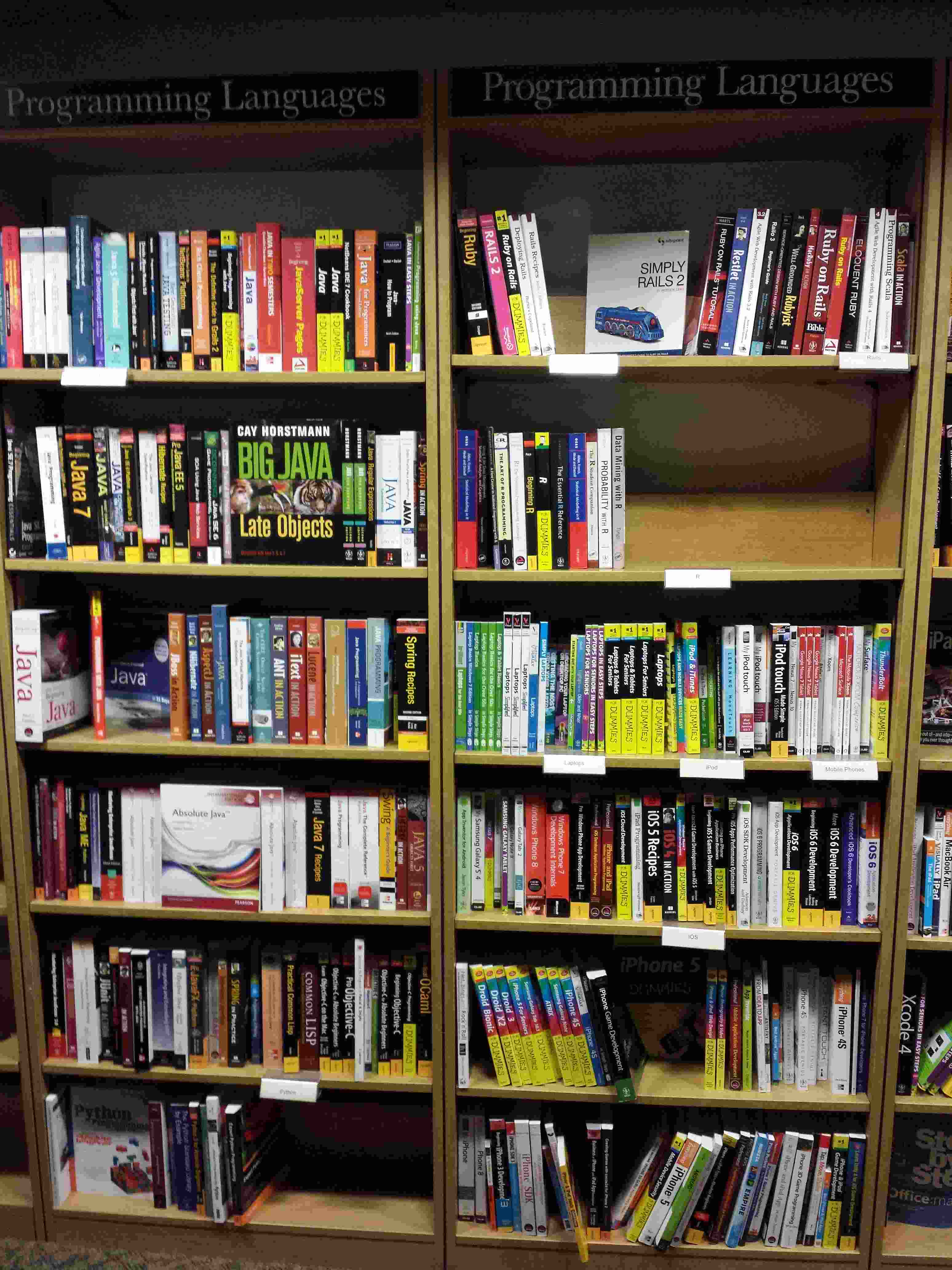

I was in Dillons, the one opposite University College London, at the start of the week and what did I spy there?

There is now a bookshelf devoted to R (right, second from top) in the programming languages section. The shelf would be a lot fuller if O’Reilly did not have a complete section devoted to their books.

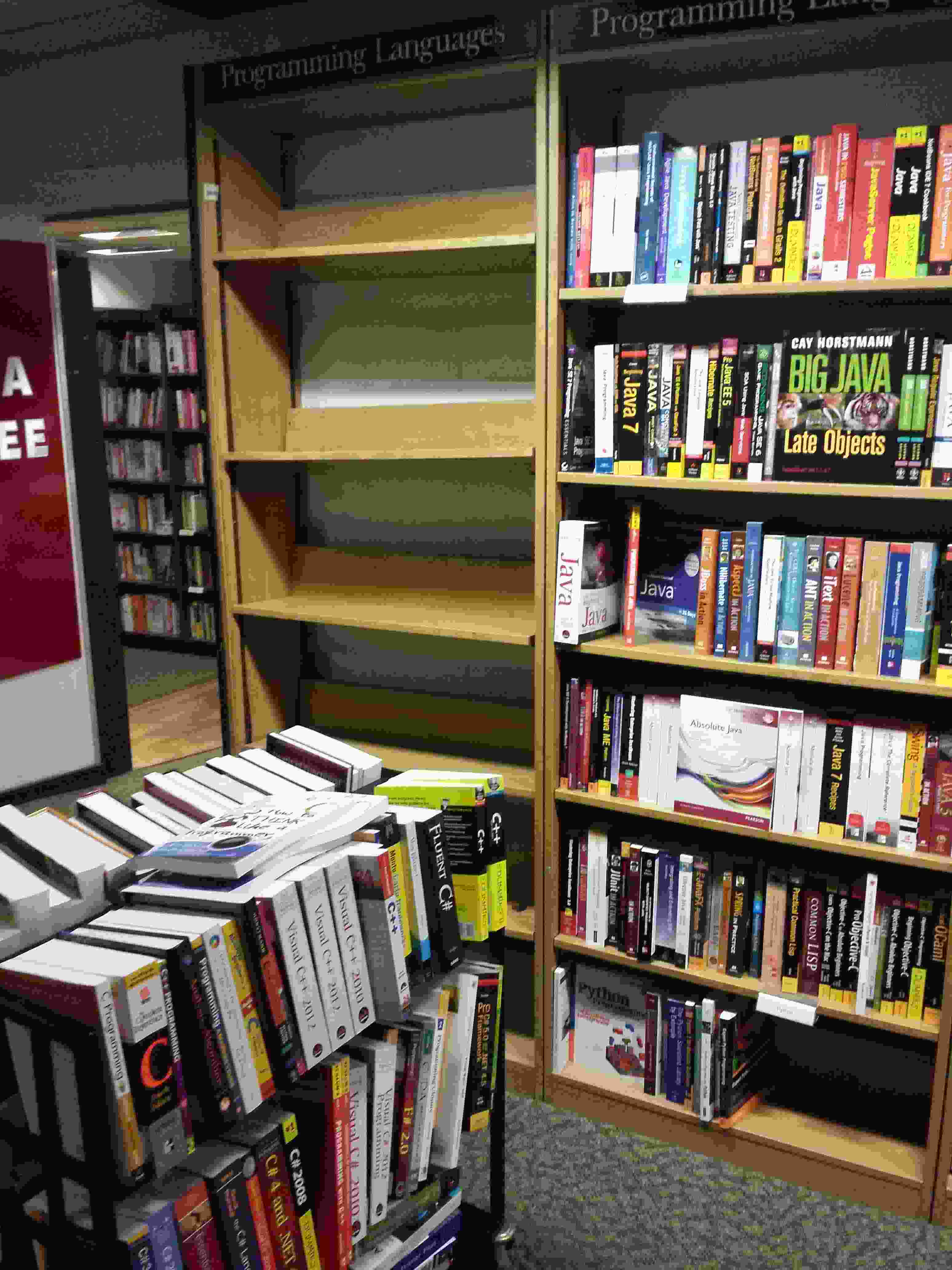

A trolley of C/C++ books was waiting to refill the shelves near the door.

Being adjacent to a university means that programming language books make up a much larger percentage of software books.

And there is O’Reilly in the corner with two stacks of shelves.

And yes, this is a big bookshop, the front is a complete block; computing/mathematics/physics/chemistry/engineering/medicine are in the basement. You can buy skeletons and stethoscopes in the medical section a few rooms down from computing; a stethoscope is useful for locating strange noises in computer cases without having to open them.

Readers a bit younger than me probably know this shop as Waterstones.
