Archive

Performance variation in 2,386 ‘identical’ processors

Every microprocessor is different, random variations in the manufacturing process result in transistors, and the connections between them, being fabricated with more/less atoms. An atom here and there makes very little difference when components are built from millions, or even thousands, of atoms. The width of the connections between transistors in modern devices might only be a dozen or so atoms, and an atom here and there can have a noticeable impact.

How does an atom here and there affect performance? Don’t all processors, of the same product, clocked at the same frequency deliver the same performance?

Yes they do, an atom here or there does not cause a processor to execute more/less instructions at a given frequency. But an atom here and there changes the thermal characteristics of processors, i.e., causes them to heat up faster/slower. High performance processors will reduce their operating frequency, or voltage, to prevent self-destruction (by overheating).

Processors operating within the same maximum power budget (say 65 Watts) may execute more/less instructions per second because they have slowed themselves down.

Some years ago I spotted a great example of ‘identical’ processor performance variation, and the author of the example, Barry Rountree, kindly sent me the data. In the weeks before Christmas I finally got around to including the data in my evidence-based software engineering book. Unfortunately I could not figure out what was what in the data (relearning an important lesson: make sure to understand the data as soon as it arrives), thankfully Barry came to the rescue and spent some time doing software archeology to figure out the data.

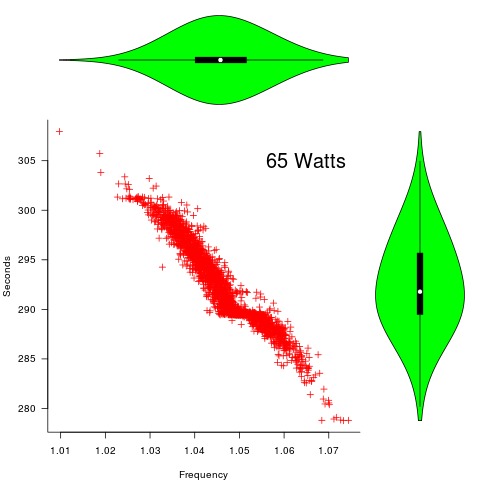

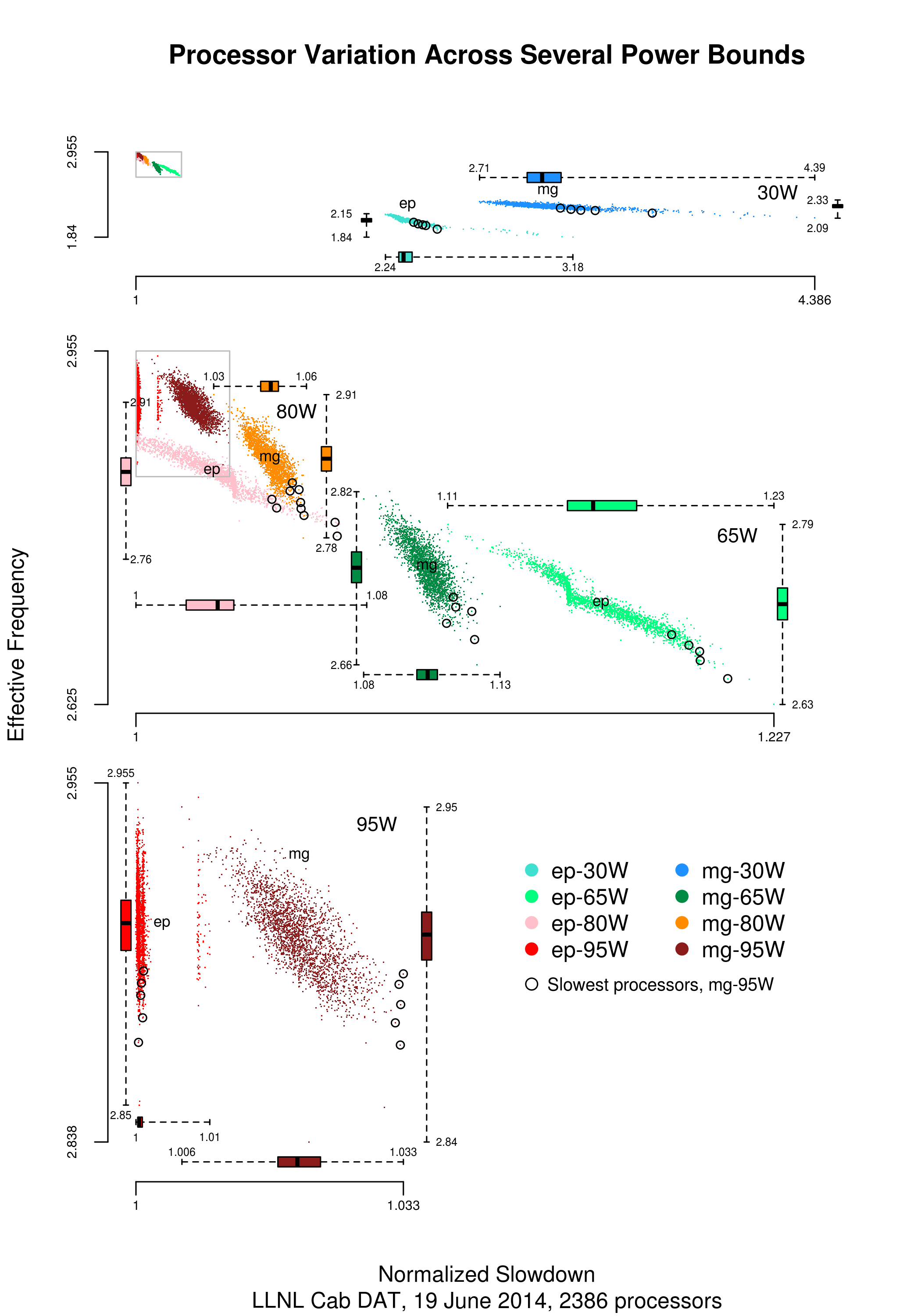

The original plots showed frequency/time data of 2,386 Intel Sandy Bridge XEON processors (in a high performance computer at the Lawrence Livermore National Laboratory) executing the EP benchmark (the data also includes measurements from the MG benchmark, part of the NAS Parallel benchmark) at various maximum power limits (see plot at end of post, which is normalised based on performance at 115 Watts). The plot below shows frequency/time for a maximum power of 65 Watts, along with violin plots showing the spread of processors running at a given frequency and taking a given number of seconds (my code, code+data on Barry’s github repo):

The expected frequency/time behavior is for processors to lie along a straight line running from top left to bottom right, which is roughly what happens here. I imagine (waving my software arms about) the variation in behavior comes from interactions with the other hardware devices each processor is connected to (e.g., memory, which presumably have their own temperature characteristics). Memory performance can have a big impact on benchmark performance. Some of the other maximum power limits, and benchmark, measurements have very different characteristics (see below).

More details and analysis in the paper: An empirical survey of performance and energy efficiency variation on Intel processors.

Intel’s Sandy Bridge is now around seven years old, and the number of atoms used to fabricate transistors and their connectors has shrunk and shrunk. An atom here and there is likely to produce even more variation in the performance of today’s processors.

A previous post discussed the impact of a variety of random variations on program performance.

Update start

A number of people have pointed out that I have not said anything about the impact of differences in heat dissipation (e.g., faster/slower warmer/cooler air-flow past processors).

There is some data from studies where multiple processors have been plugged, one at a time, into the same motherboard (i.e., low budget PhD research). The variation appears to be about the same as that seen here, but the sample sizes are more than two orders of magnitude smaller.

There has been some work looking at the impact of processor location (e.g., top/bottom of cabinet). No location effect was found, but this might be due to location effects not being consistent enough to show up in the stats.

Update end

Below is a png version of the original plot I saw:

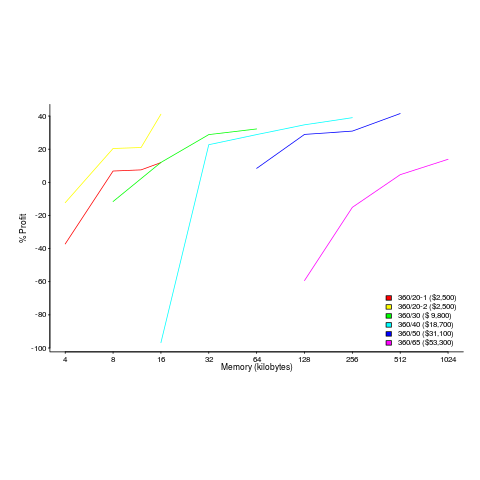

Modular vs. monolithic programs: a big performance difference

For a long time now I have been telling people that no experiment has found a situation where the treatment (e.g., use of a technique or tool) produces a performance difference that is larger than the performance difference between the subjects.

The usual results are that differences between people is the source of the largest performance difference, successive runs are the next largest (i.e., people get better with practice), and the smallest performance difference occurs between using/not using the technique or tool.

This is rather disheartening news.

While rummaging through a pile of books I had not looked at in many years, I (re)discovered the paper “An empirical study of the effects of modularity on program modifiability” by Korson and Vaishnavi, in “Empirical Studies of Programmers” (the first one in the series). It’s based on Korson’s 1988 PhD thesis, with the same title.

There were four experiments, involving seven people from industry and nine students, each involving modifying a 900(ish)-line program in some way. There were two versions of each program, they differed in that one was written in a modular form, while the other was monolithic. Subjects were permuted between various combinations of program version/problem, but all problems were solved in the same order.

The performance data (time to complete the task) was published in the paper, so I fitted various regressions models to it (code+data). There is enough information in the data to separate out the effects of modular/monolithic, kind of problem and subject differences. Because all subjects solved problems in the same order, it is not possible to extract the impact of learning on performance.

The modular/monolithic performance difference was around twice as large as the difference between subjects (removing two very poorly performing subjects reduces the difference to 1.5). I’m going to have to change my slides.

Would the performance difference have been so large if all the subjects had been experienced developers? There is not a lot of well written modular code out there, and so experienced developers get lots of practice with spaghetti code. But, even if the performance difference is of the same order as the difference between developers, that is still a very worthwhile difference.

Now there are lots of ways to write a program in modular form, and we don’t know what kind of job Korson did in creating, or locating, his modular programs.

There are also lots of ways of writing a monolithic program, some of them might be easy to modify, others a tangled mess. Were these programs intentionally written as spaghetti code, or was some effort put into making them easy to modify?

The good news from the Korson study is that there appears to be a technique that delivers larger performance improvements than the difference between people (replication needed). We can quibble over how modular a modular program needs to be, and how spaghetti-like a monolithic program has to be.

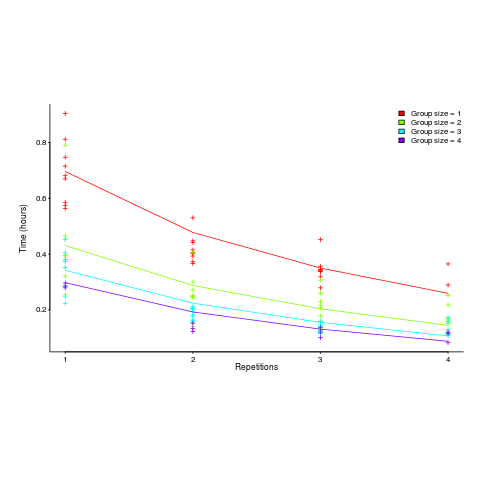

Impact of group size and practice on manual performance

How performance varies with group size is an interesting question that is still an unresearched area of software engineering. The impact of learning is also an interesting question and there has been some software engineering research in this area.

I recently read a very interesting study involving both group size and learning, and Jaakko Peltokorpi kindly sent me a copy of the data.

That is the good news; the not so good news is that the experiment was not about software engineering, but the manual assembly of a contraption of the experimenters devising. Still, this experiment is an example of the impact of group size and learning (through repeating the task) on time to complete a task.

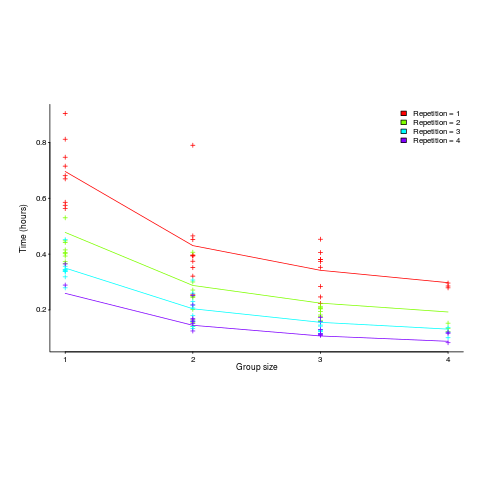

Subjects worked in groups of one to four people and repeated the task four times. Time taken to assemble a bespoke, floor standing rack with some odd-looking connections between components was measured (the image in the paper shows something that might function as a floor standing book-case, if shelves were added, apart from some component connections getting in the way).

The following equation is a very good fit to the data (code+data). There is theory explaining why  applies, but the division by group-size was found by suck-it-and-see (in another post I found that time spent planning increased with teams size).

applies, but the division by group-size was found by suck-it-and-see (in another post I found that time spent planning increased with teams size).

There is a strong repetition/group-size interaction. As the group size increases, repetition has less of an impact on improving performance.

![time = 0.16+ 0.53/{group size} - log(repetitions)*[0.1 + {0.22}/{group size}] time = 0.16+ 0.53/{group size} - log(repetitions)*[0.1 + {0.22}/{group size}]](https://shape-of-code.com/wp-content/plugins/wpmathpub/phpmathpublisher/img/math_981_b0d171bba046801a68ce5dc8ae1d6115.png)

The following plot shows one way of looking at the data (larger groups take less time, but the difference declines with practice), lines are from the fitted regression model:

and here is another (a group of two is not twice as fast as a group of one; with practice smaller groups are converging on the performance of larger groups):

Would the same kind of equation fit the results from solving a software engineering task? Hopefully somebody will run an experiment to find out 🙂

Publishing information on project progress: will it impact delivery?

Numbers for delivery date and cost estimates, for a software project, depend on who you ask (the same is probably true for other kinds of projects). The people actually doing the work are likely to have the most accurate information, but their estimates can still be wildly optimistic. The managers of the people doing the work have to plan (i.e., make worst/best case estimates) and deal with people outside the team (i.e., sell the project to those paying for it); planning requires knowledge of where things are and where they need to be, while selling requires being flexible with numbers.

A few weeks ago I was at a hackathon organized by the people behind the Project Data and Analytics meetup. The organizers (Martin Paver & co.) had obtained some very interesting project related data sets. I worked on the Australian ICT dashboard data.

The Australian ICT dashboard data was courtesy of the Queensland state government, which has a publicly available dashboard listing digital project expenditure; the Victorian state government also has a dashboard listing ICT expenditure. James Smith has been collecting this data on a monthly basis.

What information might meaningfully be extracted from monthly estimates of project delivery dates and costs?

If you were running one of these projects, and had to provide monthly figures, what strategy would you use to select the numbers? Obviously keep quiet about internal changes for as long as possible (today’s reduction can be used to offset a later increase, or vice versa). If the client requests changes which impact date/cost, then obviously update the numbers immediately; the answer to the question about why the numbers changed is that, “we are responding to client requests” (i.e., we would otherwise still be on track to meet the original end-points).

What is the intended purpose of publishing this information? Is it simply a case of the public getting fed up with overruns, with publishing monthly numbers is seen as a solution?

What impact could monthly publication have? Will clients think twice before requesting an enhancement, fearing public push back? Will companies doing the work make more reliable estimates, or work harder?

Project delivery dates/costs change because new functionality/work-to-do is discovered, because the appropriate staff could not be hired and other assorted unknown knowns and unknowns.

Who is looking at this data (apart from half a dozen people at a hackathon on the other side of the world)?

Data on specific projects can only be interpreted in the context of that project. There is some interesting research to be done on the impact of public availability on client and vendor reporting behavior.

Will publication have an impact on performance? One way to get some idea is to run an A/B experiment. Some projects have their data made public, others don’t. Wait a few years, and compare project performance for the two publication regimes.

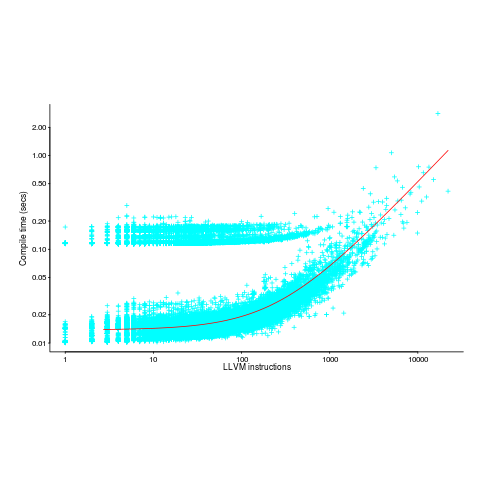

Time taken to compile a source file

How long will it take to compile a source file?

When computers were a lot slower than they are today, this question was of general interest. Job scheduling is more effective when reliable runtime estimates are available, and developers want to know if there is enough time to get a coffee before the compile finishes.

An embarrassing fact about compile time performance, used to be that a large percentage of compile time was spent doing lexical analysis [“The cost of lexical analysis”, I cannot find an online copy]. Why was this embarrassing? Compiler writers like to boast about all the fancy optimizations their compiler does; but doing fancy stuff consumes lots of resources, so why were compilers spending so much of their time doing simple things like lexical analysis? The reality was that fancy compiler optimizations were not commercially viable until developer computers contained tens of megabytes of memory, i.e., very few pre-1990 compilers did any real optimization (people are still fussing over lexer performance).

An analysis of the data in Captain Dennis Miller’s Masters thesis (late Rome period), finds compile time is proportional to the square root of the number of tokens in the source (code+data); more complicated models are a slightly better fit. Where did square root come from? I expected a linear relationship, but would be willing to go with log. The measurements are from Ada compilers in the mid 1980s. I know several people who worked on Ada compilers during that time, and they were implementing the latest fancy optimizations (Ada was going to be the next big thing and the venture capital was flowing; big companies, with big computers were going to be paying lots of money to use Ada, but then microcomputers came along). I think that square root is driven by OS resource limitations, the compilers are using lots of memory and a noticeable amount of time is spent swapping.

So computers got a lot faster and people lost interest in estimates of how long it would take to compile individual files. I have not seen any interest in predicting how long it would take to compile whole projects (just complaints about how long it takes). There has been some work on progress indicators, updated as compilation progresses, which is a step in the right direction. Perhaps somebody has recorded compile time information and thrown machine learning at it; I usually ignore machine learning papers applied to software engineering and perhaps I have missed something. Pointers to project compile time prediction work welcome.

Then along came just-in-time compilation. Now people want to estimate how long it will take to generate machine code from some intermediate form, that is being interpreted.

The plot below (thanks to Rafael Auler for kindly supplying the data from his paper) shows the time taken to generate code from functions containing a given number of LLVM instructions (an intermediate code), at optimization level O3. The red line is a regression fit to one of the ‘arms’ and shows constant time for less than 100’ish instructions and then a linear relationship. I have no idea why the time is roughly constant for a large number of functions.

There is a lot of variation for function containing the same number of instructions. This is to be expected when lots of different optimizations are being tried; sometimes a function will contain lots of the kind of code that a particular optimization spends lot of times process and sometimes the code will not contain anything interesting (i.e., no optimizations are found).

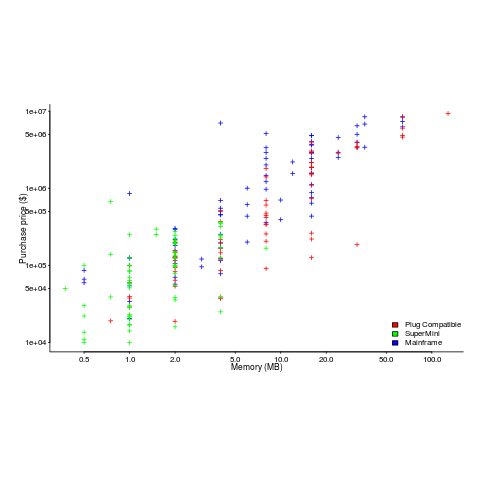

Main memory: the crucial component that vendors don’t mention

CPU performance hogs the limelight when people discuss the year-on-year increases in computing power that used to occur.

This focus on cpu performance was/is driven by marketing, the people with the money either don’t want customers thinking about the performance impact of main memory size or speed, or want them to treat the processor as the most important component of a computer. Vendors want processor performance to drive customer purchase decisions.

Hardware manufacturers used to entice new customers with low cost machines, containing minimal memory. Once a customer started to use their shiny new computer, they found that it did save them lots of time and money, but also they needed more memory (which could only be brought from the manufacturer and was not cheap).

The plot below shows the prices IBM charged for System 360s, in 1966. Anti-trust investigations uncover all kinds of interesting data, like selling low-spec equipment at a loss to entice customers and make life difficult for competitors (code+data for all plots).

The plot below (data from the 19 Aug 1985 issue of ComputerWorld) shows how the price of computers increased as the minimum about of memory they supported increased.

Yes, in 1985 top end computers came with over 50M of memory; but most customers thought themselves lucky if they had a few megabytes.

If the processor is slow, it just takes longer for programs to run. If the computer does not have enough memory, programs cannot run. For most applications memory requirements are addressed first, followed by processor performance; memory requirements is the number one issue. The optimizations that commercial compilers could perform were limited by the memory capacity of developer machines.

Intel’s main line of business used to be selling memory chips, but these chips became commodity items as more companies entered the market; Intel bet the farm on selling processors and the rest is history. As a seller of a unique product it was/is in Intel’s interest to spend lots of money on marketing the benefits of processor performance; sellers of commodity items (such as memory chips) don’t have nearly as much to gain from generic product marketing, because customers may choose to buy from other sellers (in such markets sellers have to concentrate on marketing themselves).

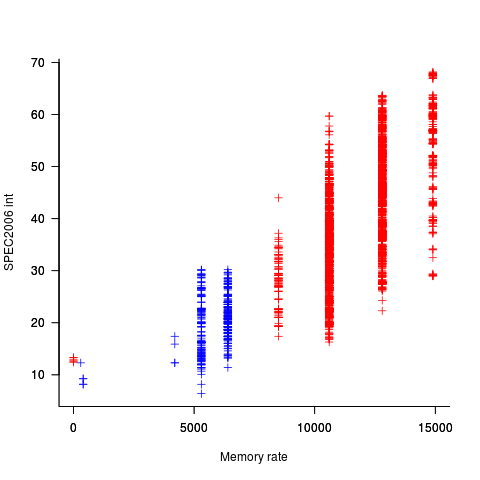

Memory capacity/speed and cpu speed are two aspects of system performance; they need to be balanced to meet customer drive application requirements. The plot below shows the SPEC cpu integer performance of 4,332 systems running at various clock rates; the colors denote the different peak memory transfer rates of the memory chips in these systems (code+data).

These days (and perhaps in the past, I don’t have any data), memory performance is a much better predictor of system performance, but vendors don’t have an incentive to market this fact.

Peak memory transfer rate is best SPECint performance predictor

The idea that cpu clock rate is the main driver of system performance on the SPEC2006 cpu benchmark is probably well entrenched in developers’ psyche. Common knowledge, or folklore, is slow to change. The apparently relentless increase in cpu clock rate, a side-effect of Moore’s law, stopped over 10 years ago, but many developers still behave as-if it is still happening today.

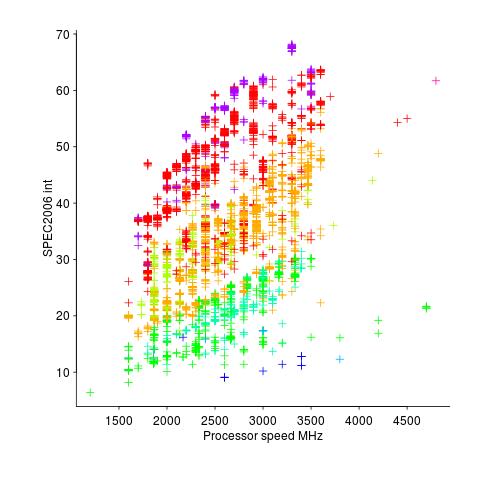

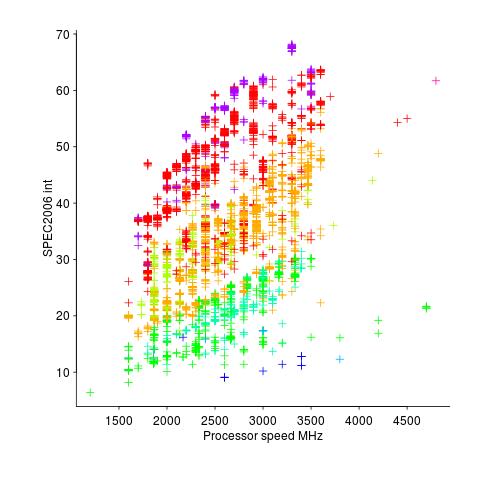

The plot below shows the SPEC cpu integer performance of 4,332 systems running at various clock rates; the colors denote the different peak memory transfer rates of the memory chips in these systems (code and data).

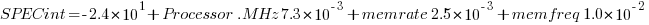

Fitting a regression model to this data, we find that the following equation predicts 80% of the variance (more complicated models fit better, but let’s keep it simple):

where: Processor.MHz is the processor clock rate, memrate the peak memory transfer rate and memfreq the frequency at which the memory is clocked.

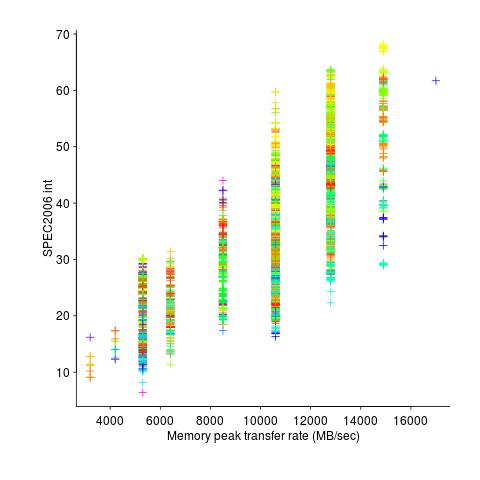

Analysing the relative contribution of each of the three explanatory variables turns up the surprising answer that peak memory transfer rate explains significantly more of the variation in the data than processor clock rate (by around a factor of five).

If you are in the market for a new computer and are interested in relative performance, you can obtain a much more accurate estimate of performance by using the peak memory transfer rate of the DIMMs contained in the various systems you were considering. Good luck finding out these numbers; I bet the first response to your question will be “What is a DIMM?”

Developer focus on cpu clock rate is no accident, there is one dominant supplier who is willing to spend billions on marketing, with programs such as Intel Inside and rebates to manufacturers for using their products. There is no memory chip supplier enjoying the dominance that Intel has in cpus, hence nobody is willing to spend the billions in marketing need to create customer awareness of the importance of memory performance to their computing experience.

The plot below shows the SPEC cpu integer performance against peak memory transfer rates; the colors denote the different cpu clock rates in these systems.

Honking the horn for go faster memory (over go faster cpus)

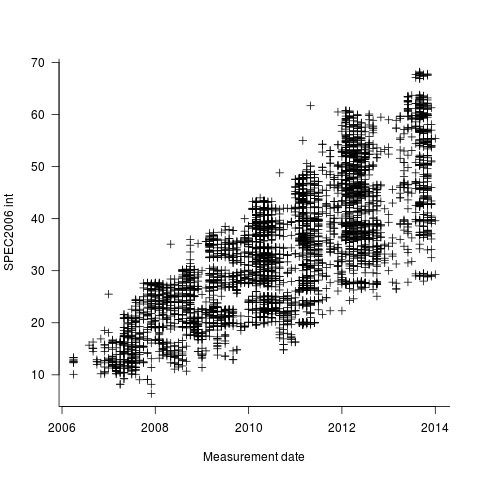

I have been doing some analysis of computer performance, as measured by the results of the SPEC 2006 int benchmark (i.e, no floating point). As the following plot shows, computers are continuing to get faster (code and data):

I think the widening spread of results might have a lot to do with companies slowly migrating to the newer benchmark, increasing the range of test cases; there mus also be an element of an increasing range of computer performance on offer from vendors now we have reached the good-enough point.

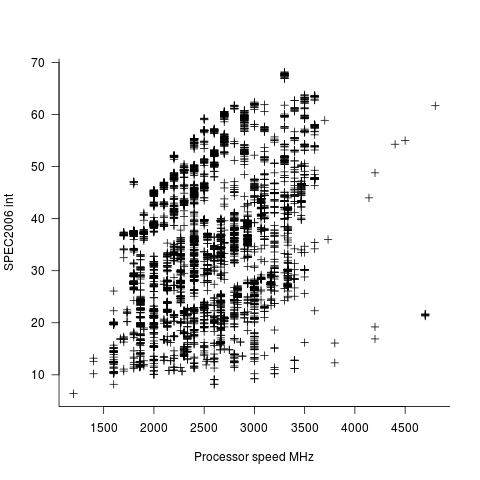

In the last century the increase in performance was strongly correlated with increasing cpu clock speed. As the following plot shows, this century is different (in years to come the first few years, where this correlation quickly died out, will be treated as a round-off error); performance is more likely to be higher at a higher clock rate, but far from guaranteed:

One of the reasons for this change is that, for many tasks, cpus are now seriously performance limited by memory bandwidth, and so these days a lot of the performance improvement is coming from faster memory (yes, it is really about shifting more bytes per clock tick, but a common faster/slower vocabulary keeps things simpler). The following plot uses PC2 (blue) and PC3 (red) module numbers:

Making use of go faster memory is not as straight-forward as using a go faster cpu. Memory chip configuration includes more tuning options than cpus, which just need a faster clock. That spread in performance, for a given memory rate can probably be traced to use of different options and of course cpu caches play an important part in improving performance. The SPEC results do contain lots of descriptive details about cache characteristics, but extracting it will be fiddly and analysing this kind of stuff always makes my head hurt.

The computer buying public have learned that higher clock rate is better. Unfortunately they still think this applies to cpus when they should now be asking about memory speed. When I ask people about the speed of memory in their computer I am usually told how many gigabytes it has, or get the same kind of look I used to get many years ago when asking about cpu speed.

Why does Intel sell compilers?

Intel is a commercial company and the obvious answer to the question of why it sells compilers is: to make money. Does a company that makes billions of dollars in profits really have any interest in making a few million, I’m guessing, from selling compilers? Of course not, Intel’s interest in compilers is as a means of helping them sell more hardware.

How can a compiler be used to increase computer hardware sales? One possibility is improved performance and another is customer perception of improved performance. My company’s first product was a code optimizer and I was always surprised by the number of customers who bought the product without ever performing any before/after timing benchmarks; I learned that engineers are seduced by the image of performance and only a few are ever forced to get involved in measuring it (having been backed into a corner because what they currently have is not good enough).

Intel are not the only company selling x86 chips, AMD and VIA have their own Intel compatible x86 chips. Intel compatible? Doesn’t that mean that programs compiled using the Intel compiler will execute just as quickly on the equivalent chip sold by competitors? Technically the answer is no, but the performance differences are likely to be small in most cases. However, I’m sure there are many developers who have been seduced by Intel’s marketing machine into believing that they need to purchase x86 chips from Intel to make sure they receive this ‘worthwhile’ benefit.

Where do manufacturer performance differences, for the same sequence of instructions, come from? They are caused by the often substantial internal architectural difference between the processors sold by different manufacturers, also Intel and its competitors are continually introducing new processor architectures and processors from the same company will have differences performance characteristics. It is possible for an optimizer to make use of information on different processor characteristics to tune the machine code generated for a particular high-level language construct, with the developer selecting the desired optimization target processor via compiler switches.

Optimizing code for one particular processor architecture is a specialist market. But let’s not forget all those customers who will be seduced by the image of performance and ignore details such as their programs being executed on a wide variety of architectures.

The quality of a compiler’s runtime library can have a significant impact on a program’s performance. The x86 instruction set has grown over time and large performance gains can be had by a library function that adapts to the instructions available on the processor it is currently executing on. The CPUID instruction provides all of the necessary information.

As well as providing information on the kind of processor and its architectural features the CPUID instruction can return information about the claimed manufacturer of the chip (some manufacturers provide a mechanism that allows users to change the character sequence returned by this instruction).

The behavior of some of the functions in Intel’s runtime library depends on the

character sequence returned by the CPUID instruction, producing better performance for the sequence “GenuineIntel”. The US Federal Trade Commission have filed a complaint alleging that this is anti-competitive (more details) and requested that this manufacturer dependency be removed.

I think that removing this manufacturer dependency will have little impact on sales. Any Intel compiler user who is not targeting Intel chips and who is has a real interest in performance can patch the runtime library, the Supercomputer crowd will want to talk to the kind of sophisticated processor/compiler engineers that Intel makes available and for everybody else it is about the perception of performance. In fact Intel ought to agree to a ‘manufacturer free’ runtime library pronto before too many developers have their delusions shattered.

Recent Comments