Archive

Changing development culture and practices: LLM edition

The popular perception of creating software systems is that it mainly involves writing code. In the 1950s, management treated writing code as a clerical task that just mapped the detailed requirements specified by someone with knowledge of the problem to something a computer could execute. Job titles reflected this division of labour, e.g., coder/programmer, systems analyst (the Wikipedia entry lists implementation as part of the job, this eventually became true in theory and for many was probably true in practice since the early days).

Using Large Language Models to write code based on the requirements contained in a prompt appears to take software development back to the process mandated by the managers of early software projects.

A major economic incentive for the creation of software systems is enabling more efficient work processes, with the collateral damage of decimated employment in some work functions. This happened to clerical workers and non-software engineering workers. Now it’s happening to software developers.

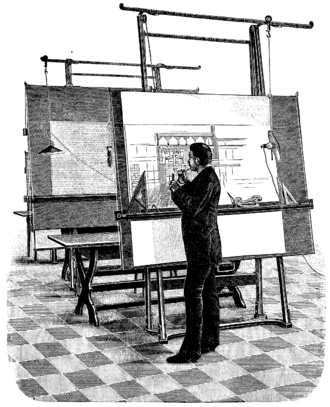

Hardware designers did not cease to exist once Computer-aided design became available. Technical drawing skills (larger schools once had a room full of drawing boards for teaching young teenagers) has ceased to be a job requirement (image from Wikipedia).

Software developer will remain as a job category, perhaps with reduced numbers or with reduced average pay. But the use of LLMs will change the culture and practices of software development.

The shift from using assembly language to high level languages suggests a few ideas about the kinds of changes. Using assembly language requires being reasonably familiar with the cpu architecture, e.g., register names/widths/instruction-restrictions and instruction timings. General developer chat about cpu architectures was still a thing in the 1980s, less so in the 1990s, and very rarely today (people do blog about it). Several decades from now, what will no longer be a general topic of developer conversation? Data types, perhaps; like registers, bit pattern representation is a low level detail. Since most developers don’t know much about the languages they use, it may be difficult to measure the impact of LLM usage on language knowledge.

High-level languages increase developer productivity by reducing the number of details that need to be thought about, at the cost of less efficient code. But for many applications, machine time is cheaper than human time.

LLMs increase developer productivity by reducing the need to lookup details (e.g., spelling of method names and their parameters). As confidence grows in the accuracy of LLM suggested code, developers will start accepting, whatever. What counts is whether the code works, not whether the average developer would have written something faster/smaller/idiomatic.

The early languages have a straightforward mapping from statements/declarations to machine code. Over time, languages were created that allowed developers to think less and less about implementation details, at the cost of supporting constructs that could introduce lots of hidden overhead. I expect that customer demand will incentivize LLM functionality that reduces what developers need to think about.

A real danger of LLM usage is that it will, eventually, result in programs a lot more bloated than humans have managed to achieve. There are physical constraints restricting what hardware designers can do, and these constraints show up in patterns of behavior, e.g., Rent’s rule relating the number of external connections in a logic block to the number of logic gates in the block. There are common usage patterns in existing code, but no theory suggesting they are desirable, or not, in any sense. I await having enough LLM generated production code to make statistically significant measurements.

I suspect that these days most developers are writing glue code, or short programs, and in the near term I expect that most LLM code will fill this need. Unfortunately, there is very little research/measurement on glue code/short program, so there are no known developer usage patterns to compare LLMs against.

The aura of software quality

Bad money drives out good money, is a financial adage. The corresponding research adage might be “research hyperbole incentivizes more hyperbole”.

Software quality appears to be the most commonly studied problem in software engineering. The reason for this is that use of the term software quality imbues what is said with an aura of relevance; all that is needed is a willingness to assert that some measured attribute is a metric for software quality.

Using the term “software quality” to appear relevant is not limited to researchers; consultants, tool vendors and marketers are equally willing to attach “software quality” to whatever they are selling.

When reading a research paper, I usually hit the delete button as soon as the authors start talking about software quality. I get very irritated when what looks like an interesting paper starts spewing “software quality” nonsense.

The paper: A Family of Experiments on Test-Driven Development commits the ‘crime’ of framing what looks like an interesting experiment in terms of software quality. Because it looked interesting, and the data was available, I endured 12 pages of software quality marketing nonsense to find out how the authors had defined this term (the percentage of tests passed), and get to the point where I could start learning about the experiments.

While the experiments were interesting, a multi-site effort and just the kind of thing others should be doing, the results were hardly earth-shattering (the experimental setup was dictated by the practicalities of obtaining the data). I understand why the authors felt the need for some hyperbole (but 12-pages). I hope they continue with this work (with less hyperbole).

Anybody skimming the software engineering research literature will be dazed by the number and range of factors appearing to play a major role in software quality. Once they realize that “software quality” is actually a meaningless marketing term, they are back to knowing nothing. Every paper has to be read to figure out what definition is being used for “software quality”; reading a paper’s abstract does not provide the needed information. This is a nightmare for anybody seeking some understanding of what is known about software engineering.

When writing my evidence-based software engineering book I was very careful to stay away from the term “software quality” (one paper on perceptions of software product quality is discussed, and there are around 35 occurrences of the word “quality”).

People in industry are very interested in software quality, and sometimes they have the confusing experience of talking to me about it. My first response, on being asked about software quality, is to ask what the questioner means by software quality. After letting them fumble around for 10 seconds or so, trying to articulate an answer, I offer several possibilities (which they are often not happy with). Then I explain how “software quality” is a meaningless marketing term. This leaves them confused and unhappy. People have a yearning for software quality which makes them easy prey for the snake-oil salesmen.

R is now important enough to have a paid for PR make-over

With the creation of the R consortium R has moved up a rung on the ladder of commercial importance.

R has captured the early adopters and has picked up a fair few of the early majority (I’m following the technology adoption life-cycle model made popular by the book Crossing the Chasm), i.e., it is starting to become mainstream. Being mainstream means that jobsworths are starting to encounter the language in situations of importance to them. How are the jobsworths likely to perceive R? From my own experience I would say it will be perceived as being an academic thing, which in the commercial world is not good, not good at all.

To really become mainstream R needs to shake off its academic image, and as I see it, the R consortium has been set up to make that happen. I imagine it will try to become the go-to point for journalists wanting information or a quote about things-related-to R. Yes, they will hold conferences with grandiose sounding titles and lots of business people will spend surprising amounts of money to attend, but the real purpose is to solidify the image of R as a commercial winner (the purpose of a very high conference fee is to keep the academics out and convince those attending that it must be important because it is so expensive).

This kind of consortium gets set up when some technology having an academic image is used by large companies that need to sell this usage to potential customers (if the technology is only used internally its wider image is unimportant).

Unix used to have an academic image, one of the things that X/Open was set up to ‘solve’. The academic image is now a thing of the past.

For the first half of the 1980s it looked like Pascal would be a mainstream language; a language widely taught in universities and perceived as being academic. Pascal did not get its own consortium and C came along and took its market (I was selling Pascal tools at the time and had lots of conversations with companies who were switching from Pascal to C and essentially put the change down to perception; it did not help that Pascal implementations did their best to hide/ignore the 8086 memory model, something of interest when memory is scarce).

How will we know when R reaches the top rung (if it does)? Well there are two kinds of languages, those that nobody uses and those that everybody complains about.

R will be truly mainstream once people feel socially comfortable complaining about it to any developer they are meeting for the first time.

Recent Comments