Archive

C compiler validation is 21 today!

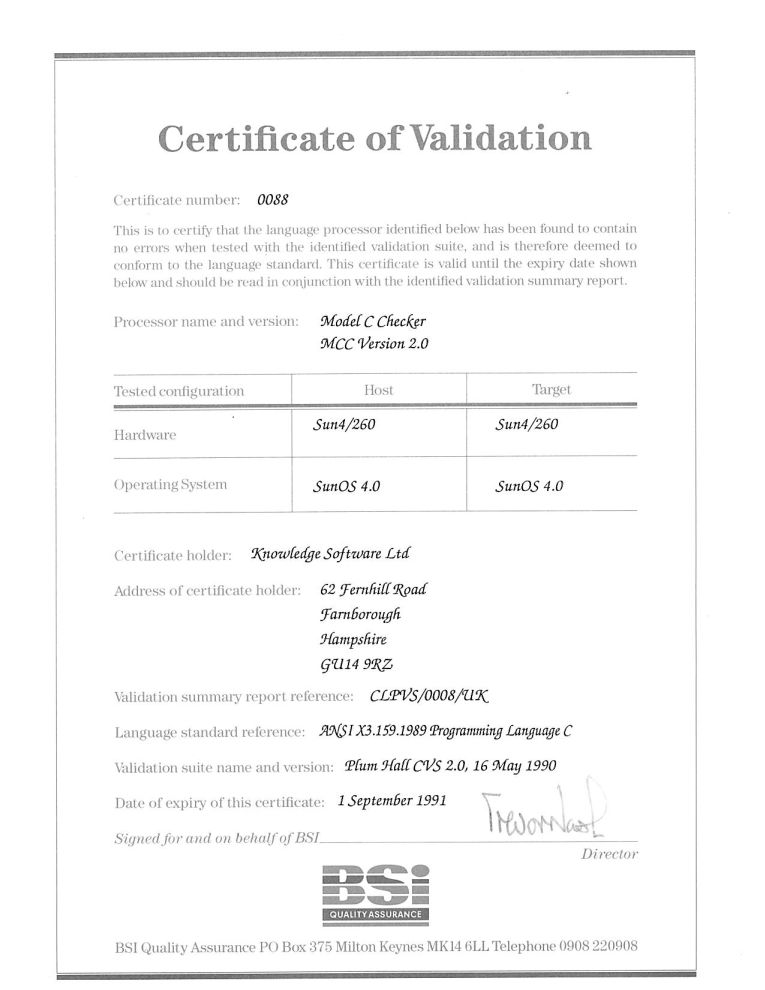

Today, 1 September 2011, is the 21th anniversary of the first formally validated C compilers. The three ‘equal first’ validated compilers were the Model Implementation C Checker from Knowledge Software, Topspeed C from JPI (run by the people who created Turbo Pascal) and the INMOS C compiler (derived from the Norcroft C compiler written by Alan Mycroft+others, the author of the longest response document seen during the review of the C89 draft standard).

Back in the day the British Standards Institution testing group run by John Souter were the world leaders in compiler validation and were very proactive in adding support for a new language. NIST, the equivalent US body, did not offer such a service until a few years later. Those companies in a position to have their compilers validated (i.e., the compiler passed the validation suite) were pressing BSI to be first; the ‘who is first’ issue was resolved by giving all certificates the same date (the actual validation process of a person from BSI, Neil Martin now Director of Test in the Winterop Team at Microsoft, turning up to ‘witness’ the compiler passing the tests happened several weeks earlier).

Testing C compilers was different from other language compilers in that sufficient demand existed to support commercial production and maintenance of test suites (the production of validation suites for previous language compilers had been government funded). After a review of the available test suites BSI chose to use the Plum Hall suite; after a similar review NIST chose to use the Perennial suite (I got involved in trying to figure out for NIST how well this suite covered the requirements contained in the C Standard).

For a while C compiler validation was big business (as in big fish, very small pond). But the compiler validation market is dependent on there being lots of compilers, which requires market fragmentation and to a lesser extent lots of different OSs and hardware platforms (each needing a separate validation). The 1990s saw market consolidation, gcc becoming good enough for commercial use and a shift of developer mind share to C++. Dwindling revenue resulted in BSI’s compiler validation group being shut down after a few years and NIST’s followed in 1998.

Is compiler validation relevant today? When the first C Standard was published a lot of compilers in common use had some significant behavioural differences compared to what the Standard specified. Over time these compilers have either disappeared or been upgraded (a potential customer once asked me the benefits I saw in them licensing the Knowledge Software front end and the reply to one of my responses, “you can tell your customers that the compiler is standard’s compliant”, was that this was not a benefit as they had been claiming this for years). Improvements in Intel’s x86 processor also had a hand in improving compiler Standard’s conformance; the various memory models used by the x86 processor was a huge headache for compiler writers whose products often behaved very differently under different memory models; the arrival of the Pentium with its flat 32-bit address space meant this issue disappeared over time.

These days I suspect that the major compilers targeting platforms where portability is expected (portability is often not a big expectation in the embedded world) are sufficiently compatible that developers are willing to overlook small differences with the Standard. Differences in third party libraries, GUIs and other frameworks have been the big headache for many years now.

Would the ‘platform portability’ compilers, that’s probably gcc, Microsoft, products using EDG’s front end, and perhaps llvm in the coming years, pass the latest version of the PlumHall and Perennial suites?

- The gcc team do not have access to either company’s suite. The gcc regression tests are a poor substitute for a proper compiler validation suite (even though they cost many thousands of dollars commercial compiler writers often buy both companies products because they are good value for money as a testing resource {the Fortran 78 validation suite source gives some idea of how much work is actually involved). I would expect gcc to fail some of the tests but have no idea how many or serious the failures would be.

- Microsoft have said they don’t have plans to support C99 (it took a lot of prodding to get them interested in formally validating against C90).

- I think the llvm team are in the same position as gcc, but perhaps somebody at Apple has access to one or more of the commercial suites (I don’t know).

- EDG are into standard’s conformance and I would expect them to pass both suites.

The certificate is printed on high quality, slightly yellow paper; the template wording is in a subdued gray ink while the customer information is in a very bold black ink. I don’t know whether this is to make life difficult for counterfeiters, but I could not get any half decent photographs and the color scanner had to be switched to black&white.

Validation was good for one year and I saw no worthwhile benefit in paying BSI £5,000 to renew for another year. Few people knew about the one year rule and I did not enlighten them. In the Ada compiler market the one year rule was a major problem, but lets leave that for another time.

sparse can now generate executables

How can you be confident that a source code analysis tool is correct when its analysis of a file does not result in any warning being generated? The best way I know of addressing this issue is for the analysis tool to be capable of generating an executable from the source. Executing a moderately large program requires that the translator get all sorts of complicated analysis correct and provides a huge boost to confidence of correctness compared to an analysis tool that does not have this ability.

Congratulations to the maintainers of sparse (a Linux kernel specific analysis tool started by Linus Torvalds in 2003) for becoming one of the very small number of source code analysis tools capable of generating executable programs.

The Model C Implementation is another tool capable of generating executables. I would love to be able to say that the reason for this was dedication to perfection by the project team, however, the truth is that it started life as a compiler and became an analyzer later.

Clang, the C front end+analyzer to llvm is often referred to as a static analyzer. While it does perform some static checking (like gcc does when the -Wall option is specified) a lot more checks needs to be supported before it can be considered on a par with modern static analysis tools.

GCC’s Treehydra project works via a plug-in to the compiler. This project has yet to live up to its potential, so we can delay discussion of whether it should be classified as a standalone system or an executable generator.

I cannot think of any other ‘full language’ static analysis tools capable of generating executable programs (the C-semantics tool restricts its checks to those required by the C Standard, and I think tools need to do a lot more than this to be considered static analysis tools). Corrections to my lack of knowledge welcome.

I was a little concerned that there were plans afoot to migrate sparse to becoming the build compiler for the Linux kernel. Linus answered my query by saying that this was never a goal.

Recent Comments