
Two failed software development projects in the High Court

When submitting a bid, to be awarded the contract to develop a software system, companies have to provide information on costs and delivery dates. If the costs are significantly underestimated, and/or the delivery dates woefully optimistic, one or more of the companies involved may resort to legal action.

Searching the British and Irish Legal Information Institute‘s Technology and Construction Court Decisions throws up two interesting cases (when searching on “source code”; I have not been able to figure out the patterns in the results that were not returned by their search engine {when I expected some cases to be returned}).

The estimation and implementation activities described in the judgements for these two cases could apply to many software projects, both successful and unsuccessful. Claiming that the system will be ready by the go-live date specified by the customer is an essential component of winning a bid, the huge uncertainties in the likely effort required come as standard in the software industry environment, and discovering lots of unforeseen work after signing the contract (because the minimum was spent on the bid estimate) is not software specific.

The first case is huge (BSkyB/Sky won the case and EDS had to pay £200+ million): (1) BSkyB Limited (2) Sky Subscribers Services Limited: Claimants – and (1) HP Enterprise Services UK Limited (formerly Electronic Data Systems Limited) (2) Electronic Data Systems LLC (formerly Electronic Data Systems Corporation): Defendants. The amount bid was a lot less than £200 million (paragraph 729 “The total EDS “Sell Price” was £54,195,013 which represented an overall margin of 27% over the EDS Price of £39.4 million.” see paragraph 90 for a breakdown).

What can be learned from the judgement for this case (the Letter of Intent was subsequently signed on 9 August 2000, and the High Court decision was handed down on 26 January 2010)?

- If you have not been involved in putting together a bid for a large project, paragraphs 58-92 provide a good description of the kinds of activities involved. Paragraphs 697-755 discuss costing details, and paragraphs 773-804 manpower and timing details,

- if you have never seen a software development contract, paragraphs 93-105 illustrate some of the ways in which delivery/payments milestones are broken down and connected. Paragraph 803 will sound familiar to developers who have worked on large projects: “… I conclude that much of Joe Galloway’s evidence in relation to planning at the bid stage was false and was created to cover up the inadequacies of this aspect of the bidding process in which he took the central role.” The difference here is that the money involved was large enough to make it worthwhile investing in a court case, and Sky obviously believed that they could only be blamed for minor implementation problems,

- don’t have the manager in charge of the project give perjured evidence (paragraph 195 “… Joe Galloway’s credibility was completely destroyed by his perjured evidence over a prolonged period.”). Bringing the law of deceit and negligent misrepresentation into a case can substantially increase/decrease the size of the final bill,

- successfully completing an implementation plan requires people with the necessary skills to do the work, and good people are a scarce resource. Projects fail if they cannot attract and keep the right people; see paragraphs 1262-1267.

A consequence of the judge’s finding of misrepresentation by EDS is a requirement to consider the financial consequences. One item of particular interest is the need to calculate the likely effort and time needed by alternative suppliers to implement the CRM System.

The only way to estimate, with any degree of confidence, the likely cost of implementing the required CRM system is to use a conventional estimation process, i.e., a group of people with the relevant domain knowledge work together for some months to figure out an implementation plan, and then cost it. This approach costs a lot of money, and ties up scarce expertise for long periods of time; is there a cheaper method?

Management at the claimant/defence companies will have appreciated that the original cost estimate is likely to be as good as any, apart from being tainted by the perjury of the lead manager. So they all signed up to using Tasseography, e.g., they get their respective experts to estimate the amount of code that needs to be produced to implement the system, calculate how long it would take to write this code and multiply by the hourly rate for a developer. I would have loved to have been a fly on the wall when the respective IT experts, all experienced in providing expert testimony, were briefed. Surely the experts all knew that the ballpark figure was that of the original EDS estimate, and that their job was to come up with a lower/higher figure?

What other interpretation could there be for such a bone headed approach to cost estimation?

The EDS expert based his calculation on the debunked COCOMO model (ok, my debunking occurred over six years later, but others have done it much earlier).

The Sky expert based his calculation on the use of function points, i.e., estimating function points rather than lines of code, and then multiplying by the average cost per function point.

The legal teams point out the flaws in the opposing team’s approach, and the judge does a good job of understanding the issues and reaching a compromise.

There may be interesting points tucked away in the many paragraphs covering various legal issues. I barely skimmed these.

The second case is not as large (the judgement contains a third the number of paragraphs, and the judgement handed down on 19 February 2021 required IBM to pay £13+ million): CIS GENERAL INSURANCE LIMITED: Claimant – and – IBM UNITED KINGDOM LIMITED: Defendant.

Again there is lots to learn about how projects are planned, estimated and payments/deliveries structured. There are staffing issues; paragraph 104 highlights how the client’s subject matter experts are stuck in their ways, e.g., configuring the new system for how things used to work and not attending workshops to learn about the new way of doing things.

Every IT case needs claimant/defendant experts and their collection of magic spells. The IBM expert calculated that the software contained technical debt to the tune of 4,000 man hours of work (paragraph 154).

If you find any other legal software development cases with the text of the judgement publicly available, please let me know (two other interesting cases with decisions on the British and Irish Legal Information Institute).

Electronic Evidence and Electronic Signatures: book

Electronic Evidence and Electronic Signatures by Stephen Mason and Daniel Seng is not the sort of book that I would normally glance at twice (based on its title). However, at the start of the year I had an interesting email conversation with the first author, a commentator who worked for the defence team on the Horizon IT project case, and he emailed with the news that the fifth edition was now available (there’s a free pdf version, so why not have a look; sorry Stephen).

Regular readers of this blog will be interested in chapter 4 (“Software code as the witness”) and chapter 5 (“The presumption that computers are ‘reliable'”).

Legal arguments are based on precedent, i.e., decisions made by judges in earlier cases. The one thing that stands out from these two chapters is how few cases have involved source code and/or reliability, and how simplistic the software issues have been (compared to issues that could have been involved). Perhaps the cases involving complicated software issues get simplified by the lawyers, or they look like they will be so difficult/expensive to litigate that the cases don’t make it to court.

Chapter 4 provides various definitions of source code, all based around the concept of imperative programming, i.e., the code tells the computer what to do. There is no mention of declarative programming, where the code specifies the information required and the computer has to figure out how to obtain it (SQL being a widely used language based on this approach). The current Wikipedia article on source code is based on imperative programming, but the programming language article is not so narrowly focused (thanks to some work by several editors many years ago 😉).
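To make the imperative/declarative distinction concrete, here is a minimal sketch that computes the same per-branch totals both ways. The data, the table name `payments` and the variable names are invented for this illustration; nothing here comes from the book.

```python
import sqlite3

# Hypothetical data, invented for this illustration: (branch, amount in pounds).
rows = [("A", 120), ("B", 40), ("A", 75), ("C", 10)]

# Imperative: the code spells out every step the computer is to perform.
totals = {}
for branch, amount in rows:
    totals[branch] = totals.get(branch, 0) + amount

# Declarative: the SQL states what result is wanted;
# the database engine decides how to compute it.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE payments (branch TEXT, amount INTEGER)")
conn.executemany("INSERT INTO payments VALUES (?, ?)", rows)
sql_totals = dict(
    conn.execute("SELECT branch, SUM(amount) FROM payments GROUP BY branch")
)
conn.close()

print(totals == sql_totals)
```

A definition of source code built around "instructions telling the computer what to do" fits the loop, but sits awkwardly with the `SELECT` statement, which contains no instructions about how the totals are to be obtained.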

There is an interesting discussion around the idea of source code as hearsay, with a discussion of cases (see 4.34) where the person who wrote the code had to give evidence so that the program output could be admitted as evidence. I don’t know how often the person who wrote the code has to give evidence, but these days code often has multiple authors, and their identity is not always known (e.g., author details have been lost, or the submission effectively came via an anonymous email).

Chapter 5 considers the common law presumption in the law of England and Wales that ‘In the absence of evidence to the contrary, the courts will presume that mechanical instruments were in order’. Yikes! The fact that this presumption is nonsense, at least for computers, was discussed in an earlier post.

There is plenty of case law discussion around the accuracy of devices used to breath-test motorists for their alcohol level, and defendants being refused access to the devices and associated software. Now, I’m sure that the software contained in these devices contains coding mistakes, but was a particular positive the result of a coding mistake? Without replicating the exact conditions occurring during the original test, it could be very difficult to say. The prosecution and Judges make the common mistake of assuming that because the science behind the test had been validated, the device must produce correct results; ignoring the fact that the implementation of the science in software may contain implementation mistakes. I have lost count of the number of times that scientist/programmers have told me that because the science behind their code is correct, the program output must be correct. My retort that there are typos in the scientific papers they write, therefore there may be typos in their code, usually fails to change their mind; they are so fixated on the correctness of the science that possible mistakes elsewhere are brushed aside.

The naivety of some judges is astonishing. In one case (see 5.44) a professor who was an expert in mathematics, physics and computers, who had read the user manual for an application, but had not seen its source code, was considered qualified to give evidence about the operation of the software!

Much of chapter 5 is essentially an overview of software reliability, written by a barrister for legal professionals, i.e., it is not always a discussion of case law. A barrister’s explanation of how software works can be entertainingly inaccurate, but the material here is correct in a broad brush sense (and I did not spot any entertaining inaccuracies).

Other than breath-testing, the defence asking for source code is rather like a dog chasing a car. The software for breath-testing devices is likely to be small enough that one person might do a decent job of figuring out how it works; many software systems are not only much, much larger, but are dependent on an ecosystem of hardware/software to run. Figuring out how they work will take multiple (expensive expert) people a lot of time.

Legal precedents are set when both sides spend the money needed to see a court case through to the end. It’s understandable why the case law discussed in this book is so sparse and deals with relatively simple software issues. The costs of fighting a case involving the complexity of modern software are going to be astronomical.

Likelihood of a fault experience when using the Horizon IT system

It looks like the UK Post Office’s Horizon IT system is going to have a significant impact on the prosecution of cases that revolve around the reliability of software systems, at least in the UK. I have discussed the evidence illustrating the fallacy of the belief that “most computer error is either immediately detectable or results from error in the data entered into the machine.” This post discusses what can be learned about the reliability of a program after a fault experience has occurred, or is alleged to have occurred, in the Horizon legal proceedings.

Sub-postmasters used the Horizon IT system to handle their accounts with the Post Office. In some cases money that sub-postmasters claimed to have transferred did not appear in the Post Office account. The sub-postmasters claimed this was caused by incorrect behavior of the Horizon system, the Post Office claimed it was due to false accounting and prosecuted or fired people and sometimes sued for the ‘missing’ money (which could be in the tens of thousands of pounds); some sub-postmasters received jail time. In 2019 a class action brought by 550 sub-postmasters was settled by the Post Office, and the presiding judge has passed a file to the Director of Public Prosecutions; the Post Office may be charged with instituting and pursuing malicious prosecutions. The courts are working their way through reviewing the cases of the sub-postmasters charged.

How did the Post Office lawyers calculate the likelihood that the missing money was the result of a ‘software bug’?

Horizon trial transcript, day 1, Mr De Garr Robinson acting for the Post Office: “Over the period 2000 to 2018 the Post Office has had on average 13,650 branches. That means that over that period it has had more than 3 million sets of monthly branch accounts. It is nearly 3.1 million but let’s call it 3 million and let’s ignore the fact for the first few years branch accounts were weekly. That doesn’t matter for the purposes of this analysis. Against that background let’s take a substantial bug like the Suspense Account bug which affected 16 branches and had a mean financial impact per branch of £1,000. The chances of that bug affecting any branch is tiny. It is 16 in 3 million, or 1 in 190,000-odd.”

That 3.1 million comes from the calculation: 19-year period times 12 months per year times 13,650 branches.

If we are told that 16 events occurred, and that there are 13,650 branches and 3.1 million transactions, then the likelihood of a particular transaction being involved in one of these events is 1 in 194,512.5. If all branches have the same number of transactions, the likelihood of a particular branch being involved in one of these 16 events is 1 in 853 (13650/16 -> 853); the branch likelihood will be proportional to the number of transactions it performs (ignoring correlation between transactions).
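The arithmetic behind these figures can be checked in a few lines. All inputs are the numbers quoted above; the variable names are mine.

```python
# Inputs quoted in the trial transcript and the text above.
YEARS = 19             # 2000 to 2018 inclusive
MONTHS_PER_YEAR = 12
BRANCHES = 13_650      # average number of branches
EVENTS = 16            # branches affected by the Suspense Account bug

# Sets of monthly branch accounts (the "3.1 million" figure).
branch_months = YEARS * MONTHS_PER_YEAR * BRANCHES

# "1 in N" odds per set of accounts, and per branch.
per_account_odds = branch_months / EVENTS
per_branch_odds = BRANCHES / EVENTS

print(f"sets of monthly branch accounts: {branch_months:,}")
print(f"1 in {per_account_odds:,.1f} per set of accounts")
print(f"1 in {per_branch_odds:.0f} per branch")
```

Both ratios simply divide a count of opportunities by the 16 known events; neither says anything about how likely those 16 events were to occur in the first place.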

This analysis does not tell us anything about the likelihood that 16 events will occur, and it does not tell us anything about whether these events are the result of a coding mistake or fraud.

We don’t know how many of the known 16 events are due to mistakes in the code and how many are due to fraud. Let’s ask: What is the likelihood of one fault experience occurring in a software system that processes a total of 3.1 million transactions (the number of branches is not really relevant)?

The reply to this question is that it is not possible to calculate an answer, because all the required information is not specified.

A software system is likely to contain some number of coding mistakes, and given the appropriate input, any of these mistakes may produce a fault experience. The information needed to calculate the likelihood of one fault experience occurring is:

- the number of coding mistakes present in the software system,

- for each coding mistake, the probability that an input drawn from the distribution of input values produced by users of the software will produce a fault experience.
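If both pieces of information were available, the calculation itself would be straightforward. The following is a minimal sketch, under the simplifying assumptions that inputs are independent of each other and that each coding mistake triggers independently of the others; the per-mistake probabilities are hypothetical values chosen purely for illustration, and the function name is mine.

```python
import math

def prob_at_least_one_fault(per_mistake_probs, transactions):
    """Probability of one or more fault experiences over `transactions` inputs.

    Assumes independent inputs and independently triggering mistakes:
    P = 1 - product over mistakes of (1 - p_i) ** transactions
    """
    # Work with logarithms so that tiny probabilities do not lose precision.
    log_no_fault = transactions * sum(math.log1p(-p) for p in per_mistake_probs)
    return -math.expm1(log_no_fault)

# Hypothetical per-input fault probabilities for three coding mistakes.
hypothetical_probs = [1e-7, 5e-8, 2e-9]
print(prob_at_least_one_fault(hypothetical_probs, 3_100_000))
```

The code is the easy part; the list passed as `per_mistake_probs` is exactly the information that nobody has.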

Outside of research projects, I don’t know of anyone who has obtained the information needed to perform this calculation.

The Technical Appendix to Judgment (No.6) “Horizon Issues” states that there were 112 potential occurrences of the Dalmellington issue (paragraph 169), but does not list the number of transactions processed between these ‘issues’ (which would enable a likelihood to be estimated for that one coding mistake).

The analysis of the Post Office expert, Dr Worden, is incorrect in a complicated way (paragraphs 631 through 635). To ‘prove’ that the missing money was very unlikely to be the result of a ‘software bug’, Dr Worden makes a calculation that he claims is the likelihood of a particular branch experiencing a ‘bug’ (he makes the mistake of using the number of known events, not the number of unknown possible events). He overlooks the fact that while the likelihood of a particular branch experiencing an event may be small, the likelihood of any one of the branches experiencing an event is 13,650 times higher. Dr Worden creates complication by calculating the number of ‘bugs’ that would have to exist for there to be a 1 in 10 chance of a particular branch experiencing an event (his answer is 50,000), and then points out that 50,000 is such a large number it could not be true.

As an analogy, let’s consider the UK National Lottery, where the chance of winning the Thunderball jackpot is roughly 1 in 8-million per ticket purchased. Let’s say that I bought a ticket and won this week’s jackpot. Using Dr Worden’s argument, the lottery could claim that my chance of winning was so low (1 in 8-million) that I must have created a counterfeit ticket; they could even say that because I did not buy 0.8 million tickets, I did not have a reasonable chance of winning, i.e., a 1 in 10 chance. My chance of winning from one ticket is the same as everybody else who buys one ticket, i.e., 1 in 8-million. If millions of tickets are bought, it is very likely that one of them will win each week. If only, say, 13,650 tickets are bought each week, the likelihood of anybody winning in any week is very low, but eventually somebody will win (perhaps after many years).
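The gap between "my ticket wins" and "somebody's ticket wins" can be illustrated with a short calculation. This sketch uses the rough 1 in 8-million figure and assumes every ticket is an independent random pick (so duplicates are possible, which is why 0.8 million tickets gives slightly under 1 in 10); the function name is mine.

```python
def prob_any_winner(tickets_sold, win_prob_per_ticket):
    """Probability that at least one of `tickets_sold` independent tickets wins."""
    return 1.0 - (1.0 - win_prob_per_ticket) ** tickets_sold

# Rough jackpot odds per ticket, as used in the text above.
P_WIN = 1 / 8_000_000

for tickets in (1, 13_650, 800_000, 8_000_000):
    chance = prob_any_winner(tickets, P_WIN)
    print(f"{tickets:>9,} tickets: chance of at least one winner = {chance:.7f}")
```

The per-ticket probability never changes; what changes is the number of opportunities for the event to happen to somebody.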

The difference between the likelihood of winning the Thunderball jackpot and the likelihood of a Horizon fault experience is that we have enough information to calculate one, but not the other.

The analysis by the defence team produced different numbers, i.e., did not conclude that there was not enough information to perform the calculation.

Is there any way of obtaining the information needed to calculate the likelihood of a fault experience occurring?

In theory fuzz testing could be used. In practice this is probably completely impractical. Horizon is a data driven system, and so a copy of the database would need to be used, along with a copy of all the Horizon software. Where is the computer needed to run this software+database? Yes, use of the Post Office computer system would be needed, along with all the necessary passwords.

Perhaps, if we wait long enough, a judge will require that one party make all the software+database+computer+passwords available to the other party.

Source code discovery, skipping over the legal complications

The 2020 US elections introduced the issue of source code discovery, in legal cases, to a wider audience. People wanted to (and still do) check that the software used to register and count votes works as intended, but the companies who wrote the software wouldn’t make it available and the courts did not compel them to do so.

I was surprised to see that there is even a section on “Transfer of or access to source code” in the EU-UK trade and cooperation agreement, agreed on Christmas Eve.

I have many years of experience in discovering problems in the source code of programs I did not write. This experience derives from my time as a compiler implementer (e.g., a big customer is being held up by a serious issue in their application, and the compiler is being blamed), and as a static analysis tool vendor (e.g., managers want to know about what serious mistakes may exist in the code of their products). In all cases those involved wanted me there, I could talk to some of those involved in developing the code, and there were known problems with the code. In court cases, the defence does not want the prosecution looking at the code, and I assume that all conversations with the people who wrote the code go via the lawyers. I have intentionally stayed away from this kind of work, so my practical experience of working on legal discovery is zero.

The most common reason companies give for not wanting to make their source code available is that it contains trade-secrets (they can hardly say that it’s because they don’t want any mistakes in the code to be discovered).

What kind of trade-secrets might source code contain? Most code is very dull, and for some programs the only trade-secret is that if you put in the implementation effort, the obvious way of doing things works, i.e., the secret sauce promoted by the marketing department is all smoke and mirrors (I have had senior management, who have probably never seen the code, tell me about the wondrous properties of their code, which I had seen and knew that nothing special was present).

Comments may detail embarrassing facts, aka trade-secrets. Sometimes the code interfaces to a proprietary interface format that the company wants to keep secret, or uses some formula that required a lot of R&D (management gets very upset when told that ‘secret’ formula can be reverse engineered from the executable code).

Why does a legal team want access to source code?

If the purpose is to check specific functionality, then reading the source code is probably the fastest technique. For instance, checking whether a particular set of input values can cause a specific behavior to occur, or tracing through the logic to understand the circumstances under which a particular behavior occurs, or in software patent litigation checking what algorithms or formulae are being used (this is where trade-secret claims appear to be valid).

If the purpose is a fishing expedition looking for possible incorrect behaviors, having the source code is probably not that useful. The quantity of source contained in modern applications can be huge, e.g., tens to hundreds of thousands of lines.

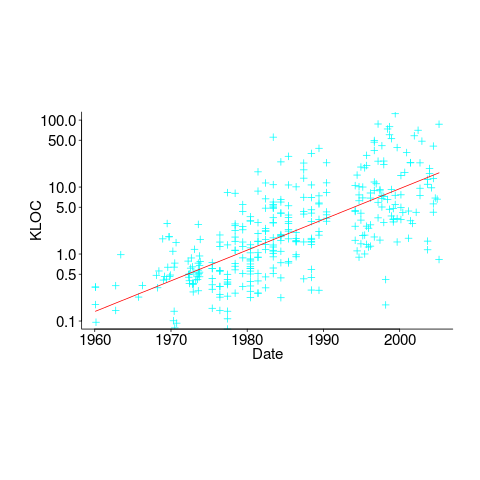

In ancient times (i.e., the 1970s and 1980s) programs were short (because most computers had tiny amounts of memory, compared to post-2000), and it was practical to read the source to understand a program. Customer demand for more features, and the fact that greater storage capacity removed the need to spend time reducing code size, means that source code ballooned. The following plot shows the lines of code contained in the collected algorithms of the Transactions on Mathematical Software, the red line is a fitted regression model of the form:  (code+data):


How, by reading the source code, does anybody find mistakes in a 10+ thousand line program? If the program only occasionally misbehaves, finding a coding mistake by reading the source is likely to be very, very time-consuming, i.e., months. Work it out yourself: 10K lines of code is around 200 pages. How long would it take you to remember all the details, and their interdependencies, of a detailed 200-page technical discussion well enough to spot an inconsistency likely to cause a fault experience? And, yes, the source may very well be provided as a printout, or as a pdf on a protected memory stick.

From my limited reading of accounts of software discovery, the time available to study the code may be just days or maybe a week or two.

Reading large quantities of code, to discover possible coding mistakes, is an inefficient use of human time resources. Some form of analysis tool might help. Static analysis tools are one option; these cost money and might not be available for the language or dialect in which the source is written (there are some good tools for C because it has been around so long and is widely used).

Character assassination, or guilt by innuendo is another approach; the code just cannot be trusted to behave in a reasonable manner (this approach is regularly used in the software business). Software metrics are deployed to give the impression that it is likely that mistakes exist, without specifying specific mistakes in the code, e.g., this metric is much higher than is considered reasonable. Where did these reasonable values come from? Someone, somewhere said something, the Moon aligned with Mars and these values became accepted ‘wisdom’ (no, reality is not allowed to intrude; the case is made by arguing from authority). McCabe’s complexity metric is a favorite, and I have written how use of this metric is essentially accounting fraud (I have had emails from several people who are very unhappy about me saying this). Halstead’s metrics are another favorite, and at least Halstead and others at the time did some empirical analysis (the results showed how ineffective the metrics were; the metrics don’t calculate the quantities claimed).

The software development process used to create software is another popular means of character assassination. People seem to take comfort in the idea that software was created using a defined process, and use of ad-hoc methods provides an easy target for ridicule. Some processes work because they include lots of testing, and doing lots of testing will of course improve reliability. I have seen development groups use a process and fail to produce reliable software, and I have seen ad-hoc methods produce reliable software.

From what I can tell, some expert witnesses are chosen for their ability to project an air of authority and for having impressive-sounding credentials, not for their hands-on ability to dissect code. In other words, just the kind of person needed for a legal strategy based on character assassination, or guilt by innuendo.

What is the most cost-effective way of finding reliability problems in software built from 10k+ lines of code? My money is on fuzz testing, a term that should send shivers down the spine of a defense team. Source code is not required, and the output is a list of real fault experiences. There are a few catches: 1) the software probably has to be run in the cloud (perhaps the only cost/time effective way of running the many thousands of tests), and the defense is going to object over licensing issues (they don’t want the code fuzzed), 2) having lots of test harnesses interacting with a central database is likely to be problematic, 3) support for emulating embedded cpus, even commonly used ones like the Z80, is currently poor (this is a rapidly evolving area, so check current status).
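For readers who have not seen fuzzing in action, here is a toy sketch of the idea: throw lots of random inputs at a program, treated as a black box, and record every input whose output differs from what was expected. Everything in it is hypothetical, i.e., the function `add_pence`, its planted coding mistake, and the trivially simple oracle; real fuzzers are far more sophisticated about choosing inputs, and deciding what counts as correct behavior is often the hard part.

```python
import random

def add_pence(balance, amount):
    """Hypothetical system under test, containing a planted coding mistake."""
    if amount % 1000 == 999:   # planted mistake: these amounts are silently dropped
        return balance
    return balance + amount

def fuzz(target, oracle, runs, seed=0):
    """Call `target` with random inputs; return the inputs where it disagrees with `oracle`."""
    rng = random.Random(seed)  # fixed seed, so that runs are repeatable
    failures = []
    for _ in range(runs):
        balance = rng.randrange(0, 1_000_000)
        amount = rng.randrange(1, 100_000)
        if target(balance, amount) != oracle(balance, amount):
            failures.append((balance, amount))
    return failures

failures = fuzz(add_pence, lambda balance, amount: balance + amount, runs=20_000)
print(f"{len(failures)} fault experiences in 20,000 runs")
```

The fuzzer never looks inside `add_pence`; it only needs to be able to run it. The fraction of runs producing a fault experience is an estimate of the fault probability for this particular input distribution, which is only useful if that distribution resembles the inputs produced by real users.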

Fuzzing can also be used to estimate the numbers of so-far undetected coding mistakes.

Learning from some legal decisions

The British and Irish Legal Information Institute provides “Access to Freely Available British and Irish Public Legal Information”. Searching the England and Wales High Court (Technology and Construction Court) Decisions throws up some interesting reading (when searching on software).

For those who have never seen a decent sized project go wrong from the inside, DE BEERS UK LIMITED (Formerly: THE DIAMOND TRADING COMPANY LIMITED) vs. ATOS ORIGIN IT SERVICES UK LIMITED provides a well written example. De Beers contracted Atos to write some software. The development of the software did not go well. Were the original requirements/spec underdone or were subsequent personnel not up to the job? Difficult to tell from the Decision, as is the reason Atos thought they had a chance of winning a court case.

SAP UK LIMITED vs. DIAGEO GREAT BRITAIN LIMITED was a licensing dispute, or more accurately an example of why it is important to check what your third-party software gets up to. Diageo had signed a licensing agreement with SAP and 5,800 Diageo users had used a Salesforce.com app which, unknown to them, made use of SAP. The end result was a bill for £55 million, which Diageo had not been expecting.

There are probably more interesting cases to learn from, but I am supposed to be writing a book in my ‘spare’ time.

Why do companies fix faults in software they sell?

Once I buy some software from a company they have my money. If sometime later I find a fault in the software, what incentive does that company have to fix the software and provide me with an update (assuming the software is not so fault ridden that I take advantage of laws allowing me to return a purchase for a refund)?

There are three economic incentives for companies to fix faults:

- because I am paying them a fee for updates that include fixes to known faults,

- because they want to make future sales to me and to others (faults encountered by customers contribute towards the perception of product quality),

- they don’t want to lose money because a fault had consequences that resulted in legal action (this reason is overhyped, in practice software engineering has a missing dead body problem).

Which faults get fixed? Software is surprisingly fault-tolerant and there is no point in fixing faults that customers are unlikely to encounter. This means that once a product has been released and known to be acceptable to many customers, there is no incentive to actively search for faults; this means that the only faults likely to be fixed are the ones reported by customers.

When reporting a fault, customers are often asked to rate its severity. This is a useful technique for prioritizing what gets fixed first, or perhaps what does not get fixed at all. Customers who actively set out to find faults are not appreciated and are labelled as disruptive if they continue doing it. Finding faults is surprisingly easy, finding the faults that have a high probability of being encountered by customers and ranked by them as critical is very hard (this is one of the reasons static analysis tools are not widely used).

What is the motivation for developers to fix faults in Open Source?

- There are companies who provide support services for a fee, just like commercial offerings,

- Open Source is free, gaining more users is not an obvious incentive to fix faults. However, being known as the go-to guys for a given package is a way of attracting companies looking to hire somebody to provide support services or make custom modifications to that package. Fixing faults is a way of getting visibility, it is advertising.

- Developers hate the thought of doing something wrong resulting in a fault in code they have written, and writing faulty code is not socially acceptable behavior in software development circles. These feelings about what constitutes appropriate behavior are often enough to make developers want to spend time fixing faults in code they have written or feel responsible for, provided they have the time. I suspect a lot of faults get fixed by developers when their manager/wife thinks they are working on something more ‘useful’.
