
Ternary radix will have to wait for photonic computers

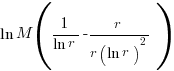

Computer cpu economics suggest that a ternary radix representation rather than binary should be used for representing integer values. The economic cost of a cpu is roughly proportional to $r w$, where $r$ is the integer radix and $w$ is the width, in digits (bits for binary), of the basic integer type (for simplicity I’m assuming there is only one and that the bus width has the same value); the maximum value that can be represented is roughly $r^w$.

If we fix the maximum representable value $M = r^w$ and ask which value of $r$ minimises $r w$, then we need to differentiate $r \frac{\ln M}{\ln r}$ w.r.t. $r$, giving $\ln M \, \frac{\ln r - 1}{(\ln r)^2}$, and this equals 0 when $r = e$ (the closest integer to $e$ is 3).
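The cost argument can be checked numerically. The following is a minimal sketch, assuming the cost model is simply radix times width; the function names (`digits_needed`, `cost`, `continuous_cost`) are mine and purely illustrative.

```python
import math

def digits_needed(max_value, radix):
    """Smallest width w such that radix**w >= max_value (exact integer arithmetic)."""
    width, reach = 0, 1
    while reach < max_value:
        reach *= radix
        width += 1
    return width

def cost(max_value, radix):
    """Assumed cost model: radix times the number of digits."""
    return radix * digits_needed(max_value, radix)

def continuous_cost(max_value, radix):
    """Same model with a real-valued width, w = ln(M)/ln(r)."""
    return radix * math.log(max_value) / math.log(radix)

if __name__ == "__main__":
    M = 2 ** 32
    for r in (2, 3, 4):
        print(r, digits_needed(M, r), cost(M, r), round(continuous_cost(M, r), 2))
```

For a 32-bit range, radix 3 needs 21 digits, so the whole-digit cost is 63 against 64 for radix 2. The advantage is small, and because widths have to be rounded up to whole digits it can shrink or reverse for particular values of the maximum.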

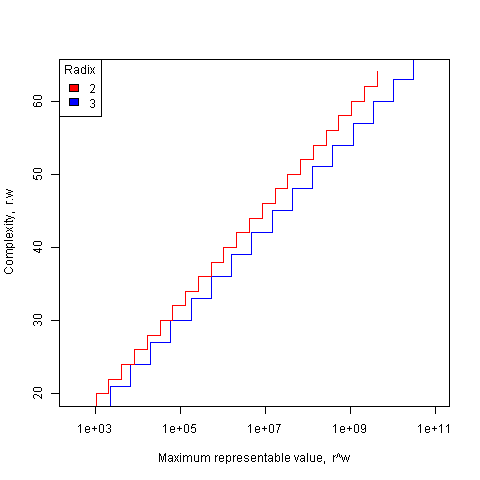

The following plot shows the maximum representable value (right point of horizontal line) that can be achieved for a given ‘cost’ when the radix is 2 and 3.

The reason binary is used in practice is purely to do with the characteristics of power consumption in electronic switching circuits (originally vacuum tube and then transistor based). Electrical power is proportional to voltage times current, and a binary circuit can be implemented by switching between two states where either the voltage or the current is very small. In either of these two states the power consumption is very low; it is only during the very short transition period between them, when the voltage and current both have intermediate values, that the power consumed is relatively high. A 3-state switch would need a voltage/current combination denoting a state other than 0/1, and any such combination would consume non-trivial amounts of power (tri-state devices are used in some situations).

I have little idea about the complications of storing ternary values in memory systems, but I guess there will be complications.

In the 1960s the Setun computer used a ternary radix, and there have been occasional experimental systems since.

Are there any kinds of switching circuit whose use is not primarily dictated by device power characteristics and hence might be used to support a ternary radix? One possibility is a light based cpu (i.e., using photons rather than electrons); using polarization to specify state has been proposed. What about storage? Using Josephson junctions could provide the high speed and low power consumption required (we just need somebody to discover a room temperature superconductor).

The technology needed to build a practical cpu using a ternary radix appears to be some years in the future.

What about all the existing code containing a myriad of dependencies on the characteristics of two’s complement integer representation? If a photonic cpu became available that was ten times faster than existing cpus, or consumed a tenth of the power, or some combination thereof, then I’m sure there would be plenty of economic incentive to get software running on it. The problem is that ten times better cpus are unlikely to just turn up; they will probably need to be developed in steps, and the economics of progressing from step to step don’t look good.

While our civilization is likely to continue on down the binary rabbit hole, another one may have started down, or switched to, the ternary hole. I hope the SETI people are not too blinkered by the binary view of the universe.

Unique values generated by expressions of a given complexity

The majority of integer constants appearing in source code can be represented using a few bits. CPU designers use this characteristic when designing instruction sets, creating so-called short-form or quick instructions that perform some operation involving small integer values, e.g., adding a value between 1 and 8 to a register. Writers of code optimizers are always looking for sequences of short-form instructions that are faster/smaller than the longer forms (the INMOS Transputer only had a short form for load immediate).

I have recently been looking at optimizing expressions written for a virtual machine that only supports immediate loads of decimal values between 1 and 9, and binary add/subtract/multiply/divide, e.g., optimizing an expression containing four operators, ((2*7)+9)*4+9, which evaluates to 101, to one containing three operators, (8+9)*6-1, which also evaluates to 101. Intermediate results can have fractional values, but I am only interested in expressions whose final result has an integer value (i.e., zero fractional part).

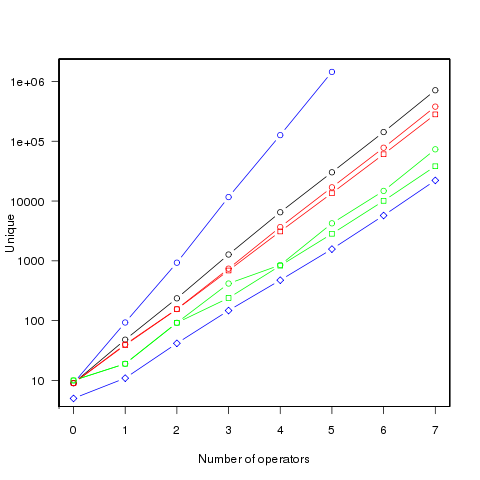

A little thought shows that the value of an expression containing a subexpression whose value includes a fractional part (e.g., 1/3) can always be generated by an expression containing the same or fewer operators and no fractional intermediate results (e.g., 9/(1/5) can be generated using 9*5), i.e., the result of any divide operation always has to be an integer if the final result is to be a unique integer. Enumerating the unique set of values generated by expressions containing a given number of operators shows that divide is redundant for expressions containing six or fewer operators and only adds 11 unique values for seven operators (379,073 possibilities without divide).

Removing support for the minus operator only reduces the size of the result set by around 10%. Dropping it may be a worthwhile time saving for expressions containing many operators, or when searching for an expression whose result value is very large.

There does not appear to be a straightforward (and fast) algorithm that returns the minimal operation expression for a given constant.

I wrote an R program to exhaustively generate all integer values returned by expressions containing up to seven operators. To find out how many different values, integer/real, could be calculated I wrote a maxima program (this represents fractional values using a rational number representation and exceeds 4 GB of storage for expressions containing more than five operators).
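For readers who want to experiment, here is a minimal Python sketch of this kind of exhaustive enumeration. It is not the R or maxima code, just one way of doing it, assuming operands are the digits 1 to 9 and values are held as exact rationals; the names `values_by_operator_count` and `summarise` are mine.

```python
from fractions import Fraction
from itertools import count

def values_by_operator_count(max_ops, ops="+-*/"):
    """Return a list whose k-th entry is the set of values produced by
    expressions containing exactly k operators (operands are 1..9)."""
    levels = [{Fraction(d) for d in range(1, 10)}]
    for k in range(1, max_ops + 1):
        level = set()
        # Split the remaining k-1 operators between left and right operands;
        # looping over every split covers both operand orders.
        for i in range(k):
            for a in levels[i]:
                for b in levels[k - 1 - i]:
                    if "+" in ops:
                        level.add(a + b)
                    if "-" in ops:
                        level.add(a - b)
                    if "*" in ops:
                        level.add(a * b)
                    if "/" in ops and b != 0:
                        level.add(a / b)
        levels.append(level)
    return levels

def summarise(values):
    """(all values, integer values, positive integers, smallest missing positive integer)."""
    integers = {v for v in values if v.denominator == 1}
    positive = {int(v) for v in integers if v > 0}
    smallest_missing = next(n for n in count(1) if n not in positive)
    return len(values), len(integers), len(positive), smallest_missing

if __name__ == "__main__":
    for k, vals in enumerate(values_by_operator_count(3)):
        print(k, summarise(vals))
```

Because multiplying by 1 is always available, every value reachable with k-1 operators is also reachable with k, so the set for "exactly k" operators is also the set for "k or fewer". Passing `ops="+*"` restricts the enumeration to add/multiply. Storage grows quickly with the operator count, so this is only practical for small counts.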

The following figure shows the number of different values that can be generated by an expression containing a given number of operators (blue), the number of integer values (black), the number of positive integer values (red), and the smallest positive integer that cannot be calculated by an expression containing the given number of operators (green); circles are for add/subtract/multiply/divide, squares for add/multiply. Any value below a green line is guaranteed to have a solution in the given number of operators (or fewer). The blue diamond line is the mean value of a random expression containing the given number of operators.

Limiting the operators to just add/multiply reduces the number of unique value possibilities. The difference increases linearly’ish to around 35% for seven operators.

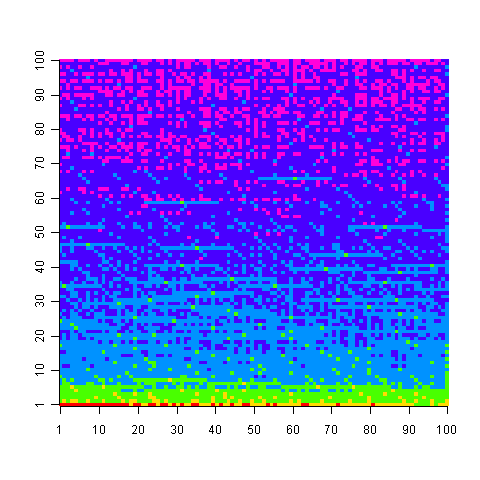

The following uses colors to show the minimum number of operators needed to generate the given value, 1 is in the bottom left, 100,000 in the top right; red for one operator, yellow for two, green for three and so on.

Knowing that N can be calculated using p operators does not mean that N-1 can also be generated using p operators; it is possible to generate 729 using two operators (i.e., 9*9*9), but three operators are required to generate 92 and four to generate 417.

Values under the green line (first figure) are known to have solutions in the given number of operators; quickly obtaining the solution is another matter. There is at least a 50/50 chance that a randomly generated expression containing the given number of operators, and producing an integer value, will calculate a value on or below the diamond blue line. The overhead of storing precomputed minimal operator expressions is not that great for small numbers of operators.
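A precomputed table of minimal expressions might look something like the following. This is a sketch under my own assumptions (operands 1 to 9, exact rational arithmetic, one arbitrary minimal expression kept per value); `build_minimal_table` and `operator_count` are hypothetical names.

```python
from fractions import Fraction

def build_minimal_table(max_ops):
    """Map each reachable value to one expression using the fewest operators.

    Only values first reached at each operator count are combined: in a
    minimal expression every subexpression must itself be minimal, otherwise
    the whole expression could be shortened.
    """
    levels = [{Fraction(d): str(d) for d in range(1, 10)}]
    best = dict(levels[0])
    for k in range(1, max_ops + 1):
        level = {}
        for i in range(k):
            for a, ea in levels[i].items():
                for b, eb in levels[k - 1 - i].items():
                    candidates = [(a + b, "+"), (a - b, "-"), (a * b, "*")]
                    if b != 0:
                        candidates.append((a / b, "/"))
                    for value, op in candidates:
                        if value not in best and value not in level:
                            level[value] = f"({ea}{op}{eb})"
        best.update(level)
        levels.append(level)
    return best

def operator_count(expr):
    """Number of binary operators in an expression string built above."""
    return sum(expr.count(op) for op in "+-*/")

if __name__ == "__main__":
    table = build_minimal_table(3)
    for n in (92, 101, 729):
        print(n, table[Fraction(n)], operator_count(table[Fraction(n)]))
```

The table also holds fractional and negative values, which are needed as intermediate results while building it; filtering the finished table down to positive integers reduces what has to be stored.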

Suggestions for a fast/low storage algorithm (random generation + modification through a cost function performs quite well) for large integer values welcome.

Update. Values from the first figure have been accepted by the On-Line Encyclopedia of Integer Sequences as entries: A181898, A181957, A181958, A181959 and A181960.
