Archive

Effort estimation’s inaccurate past and the way forward

Almost since people started building software systems, effort estimation has been a hot topic for researchers.

Effort estimation models are necessarily driven by the available data (the Putnam model is one of few whose theory is based on more than arm waving). General information about source code can often be obtained (e.g., size in lines of code), and before package software and open source, software with roughly the same functionality was being implemented in lots of organizations.

Estimation models based on source code characteristics proliferated, e.g., COCOMO. What these models overlooked was human variability in implementing the same functionality (a standard deviation that is 25% of the actual size is going to introduce a lot of uncertainty into any effort estimate), along with the more obvious assumption that effort was closely tied to source code characteristics.

The advent of high-tech clueless button pushing machine learning created a resurgence of new effort estimation models; actually they are estimation adjustment models, because they require an initial estimate as one of the input variables. Creating a machine learned model requires a list of estimated/actual values, along with any other available information, to build a mapping function.
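To make the "estimation adjustment" idea concrete, here is a minimal sketch of the simplest possible mapping function: a least-squares fit of log(actual) against log(estimate). The function names (`fit_adjustment`, `adjust`) and the handful of estimate/actual pairs are made up for illustration; they are not taken from any published model or dataset.

```python
import math

def fit_adjustment(estimates, actuals):
    """Fit actual = a * estimate**b by least squares on the log values."""
    xs = [math.log(e) for e in estimates]
    ys = [math.log(a) for a in actuals]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = math.exp(mean_y - b * mean_x)
    return a, b

def adjust(estimate, a, b):
    """Map an initial estimate to an adjusted estimate."""
    return a * estimate ** b

# Hypothetical (estimate, actual) pairs, in hours; illustrative only.
history = [(1, 1.5), (2, 2.5), (4, 6), (8, 9), (16, 20)]
a, b = fit_adjustment([est for est, _ in history],
                      [act for _, act in history])
print(f"actual ~ {a:.2f} * estimate^{b:.2f}")
print(f"adjusted estimate for an initial 10 hours: {adjust(10, a, b):.1f}")
```

Note that the initial estimate is an input: the fitted function can only adjust a number that somebody has already produced.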

The sparseness of the data to learn from (at most a few hundred observations of half-a-dozen measured variables, and usually fewer) has not prevented a stream of puffed-up publications making all kinds of unfounded claims.

Until a few years ago the available public estimation data did not include any information about who made the estimate. Once estimation data contained the information needed to distinguish the different people making estimates, the uncertainty introduced by human variability was revealed (some consistently underestimating, others consistently overestimating, with 25% difference between two estimators being common, and a factor of two difference between some pairs of estimators).

How much accuracy is it realistic to expect with effort estimates?

At the moment we don’t have enough information on the software development process to be able to create a realistic model; without a realistic model of the development process, it’s a waste of time complaining about the availability of information to feed into a model.

I think a project simulation model is the only technique capable of creating a good enough model for use in industry; something like Abdel-Hamid’s tour de force PhD thesis (he also ignores my emails).

We are still in the early stages of finding out the components that need to be fitted together to build a model of software development, e.g., round numbers.

Even if all attempts to build such a model fail, there will be payback from a better understanding of the development process.

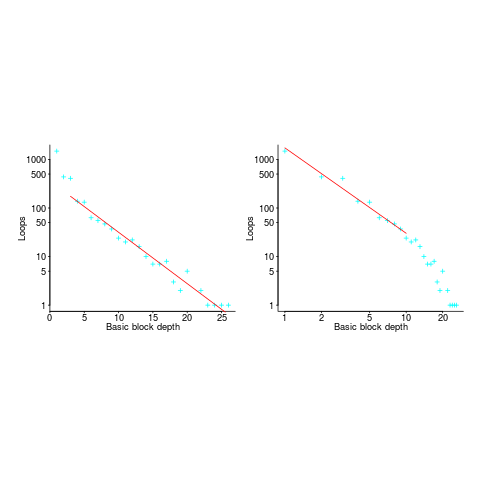

for-loop usage at different nesting levels

When reading code, starting at the first line of a function/method, the probability of the next statement read being a for-loop is around 1.5% (at least in C, I don’t have decent data on other languages). Let’s say you have been reading the code a line at a time, and you are now reading lines nested within various if/while/for statements, you are at nesting depth n. What is the probability of the statement on the next line being a for-loop?

Does the probability of encountering a for-loop remain unchanged with nesting depth (i.e., developer habits are not affected by nesting depth), or does it decrease (aren’t developers supposed to be using functions/methods rather than nesting; I have never heard anybody suggest that it increases)?

If you think the for-loop use probability is not affected by nesting depth, you are going to argue for the plot on the left (below, showing the number of for-loops appearing in C source at various nesting depths), with the regression model fitting really well after 3-levels of nesting. If you think the probability decreases with nesting depth, you are likely to argue for the plot on the right, with the model fitting really well down to around 10-levels of nesting (code+data).

Both plots use the same data, but different scales are used for the x-axis.

If the probability of use is independent of nesting depth, an exponential equation should fit the data (i.e., the left plot); a probability that decreases with nesting depth is supported by a power-law (i.e., the right plot; plus other forms of equation, but let’s keep things simple).
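The reasoning behind the two choices can be sketched in code. If the chance of a statement opening another level of nesting is a constant p, the count at depth n is proportional to p^n, which is a straight line when log(count) is plotted against depth; a power-law is a straight line when log(count) is plotted against log(depth). The sketch below compares the two straight-line fits; the helper names and the synthetic counts are my assumptions for illustration, not the measurements behind the plots.

```python
import math

def linear_fit(xs, ys):
    """Ordinary least squares; returns (intercept, slope, r_squared)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope, (sxy * sxy) / (sxx * syy)

def compare_fits(depths, counts):
    """Return (r2_exponential, r2_power_law); depths must start at 1 or more."""
    log_counts = [math.log(c) for c in counts]
    # Exponential: log(count) is linear in depth.
    _, _, r2_exp = linear_fit(depths, log_counts)
    # Power-law: log(count) is linear in log(depth).
    _, _, r2_pow = linear_fit([math.log(d) for d in depths], log_counts)
    return r2_exp, r2_pow

# Synthetic counts generated from an exponential, for illustration only.
depths = list(range(1, 11))
synthetic = [100000 * 0.6 ** d for d in depths]
r2_exp, r2_pow = compare_fits(depths, synthetic)
print(f"exponential R^2 = {r2_exp:.4f}, power-law R^2 = {r2_pow:.4f}")
```

A single R-squared over the whole range hides the kind of range-dependent misfit discussed here, so fitting subsets of depths separately is the more informative exercise.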

The two cases are very wrong over different ranges of the data. What is your explanation for reality failing to follow your beliefs in for-loop occurrence probability?

Is the mismatch between belief and reality caused by the small size of the data set (a few million lines were measured, which was once considered to be a lot), or are your beliefs perhaps based on other languages, which will behave as claimed (appropriate measurements on other languages most welcome)?

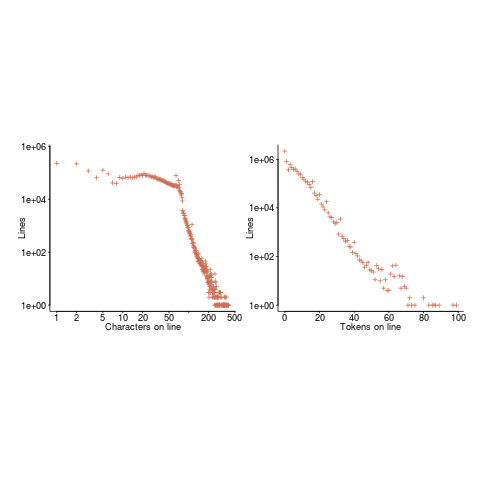

The nesting depth dependent use probability plot shows a sudden change in the rate of decrease in for-loop probability; perhaps this is caused by the maximum number of characters that can appear on a typical editor line (within a window). The left plot (below) shows the number of lines (of C source) containing a given number of characters; the right plot counts tokens per line and the length effect is much less pronounced (perhaps developers use shorter identifiers in nested code). Note: different scales used for the x-axis (code+data).

I don’t have any believable ideas for why the exponential fit only works if the first few nesting depths are ignored. What could be so special about early nesting depths?

What about fitting the data with other equations?

A bi-exponential springs to mind, with one exponential driven by application requirements and the other by algorithm selection; but reality is not on-board with this idea.

Ideas, suggestions, and data for other languages, most welcome.

ALGEC: ALGorithmic language for EConomic problems

I have been reading about ALGEC, the computer language invented in the Soviet Union during the early 1960s, courtesy of a translation of the article Report on the Working Sessions of the Group on Algorithmic Languages for Processing Economic Information (GAIAPEI) by Rand.

The Soviet Union ran a command economy and the job of computers was obviously to process economic information.

The language is based on Algol 60, the default base language for the design of most establishment driven programming languages.

Since the Soviets were the only country to build a computer that used ternary logic, I was hoping that the language would include support for this ‘feature’. No such luck.

Two features caught my attention:

- Keywords can be written in a form that denotes their gender and number. For instance, Boolean can be written: логическое (neuter), логический (masculine), логическая (feminine) and логические (plural).

- The keyword for the go to token is to. There is obviously something about the use of Russian that makes it obvious that the word go should not be part of this keyword.

Do readers know of any other computer languages which have been influenced by features of the designers’ native human language (apart, obviously, from all the English derived computer languages)?

Human vs automatically generated source code: an arms race?

How well do I understand the process of writing source, as performed by human developers? If I could write a program that could generate source code that was indistinguishable from human written code, then I think I could claim to have a decent understanding of the human processes.

An important question is the skill of the entity attempting to distinguish automatically generated and human written code. As of today I think I could fool existing tools (mostly because such tools don’t really exist; n-gram analysis is really all there is), but a human developer with a smattering of coding experience would not be fooled.

I think I know enough to write a tool that could generate single function definitions that would fool most developers, but not a moderately sophisticated analysis tool (I don’t know enough to combine multiple functions into a plausible call graph).

At the CREST workshop today I met Martin Monperrus who shared my view that the only way to prove an understanding of source is to be able to generate it; other people at the workshop seemed more interested in the detection side of things.

Perhaps the time is ripe to kick off a generator/detector arms race; it would certainly increase our knowledge of the characteristics of human written code.

What counts as automatically generated code? Printing complete function definitions mined from Github is obviously not what most people would associate with the concept of automatic source generation. What about printing statements mined from Github (with each statement coming from a different function definition)? Well, if people could make that work, then good luck to them (it hardly seems worth the bother because most statements are very simple and easily generated on a token by token basis).

Should functions be judged in isolation, or should a detector get to see multiple instances of each case (which would make the detector’s life easier)? It might be best to go with whatever rules make for an interesting arms race.

To start the ball rolling, here is my first entry to the generator side of the race (a rather minimal entry, but still the leader in its field; the recurrent neural network trained on the Linux source would easily beat it, but my interest is in understanding developer behavior and will leave it to others to go down the neural network path).

Forces driving patterns found in call graphs of human written code

It has been a while since I posted anything about generating source that mimics the characteristics of human written code.

Generating function definitions is the easy bit, although variable selection is fiddly and naming needs to be handled.

The hardest part of mimicking human written code is linking functions together, via calls, in apparently meaningful ways. Within one source file it is probably possible to get away with individual calls to other local functions (most function definitions are called once) and a couple of calls to third party libraries. At the complete program level the code needs to tell a story, and doing this is very hard.

I think function calls can be divided into three categories, all based on the relationship between the developer who writes the code that does the call and the developer(s) who wrote the called function:

- Caller developer is the same person as the callee developer. One person gets to decide how things are done,

- Caller developer works on the same team as the callee developer. Here an interface needs to be negotiated with one or more other people,

- Caller developer is making use of a third-party library. The interface is pre-decided, take it or leave it (the same principle applies to library updates, the caller has to decide whether to stay with the existing version or make the changes needed to upgrade to the new version; Android is the (in)famous example of a frequently changed library that developers are under strong pressure to continually adapt to).

Calls to functions in third-party libraries tend to follow stylized sequences, e.g., open_* occurs before read_*/write_* and close_* appears last. Most of the time a generator could get away with not calling functions from third-party libraries, because the number of such calls is often small, but when they occur they had better look correct.
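A generator (or a detector) could check for this kind of stylized ordering with a small state machine. The following is a minimal sketch, assuming a single resource and the open_*/read_*/write_*/close_* naming used above; the function name `follows_open_use_close` is hypothetical, and real libraries have far messier protocols.

```python
def follows_open_use_close(calls):
    """Return True if the calls respect open_* -> read_*/write_* -> close_*.

    Simplifying assumption: only one resource is in play at a time, and
    calls with any other prefix are ignored.
    """
    is_open = False
    for name in calls:
        if name.startswith("open_"):
            if is_open:
                return False      # opened twice without a close
            is_open = True
        elif name.startswith(("read_", "write_")):
            if not is_open:
                return False      # used before being opened
        elif name.startswith("close_"):
            if not is_open:
                return False      # closed without being opened
            is_open = False
    return not is_open            # anything opened must end up closed

print(follows_open_use_close(["open_file", "read_file", "close_file"]))
print(follows_open_use_close(["read_file", "open_file", "close_file"]))
```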

Needing to call a function written by another developer on the team opens up all sorts of possibilities: there is always the option of them modifying the function to make life easier for the new call, but the cost of modifying all the existing call sites (plus any associated call chains) may be too high, perhaps a new global variable can be used to communicate the desired information, or perhaps the proposed usage is a small cog in a large wheel and has to make do (or perhaps they don’t like the person asking for the change and reasons are invented for staying as-is).

Then there is correlation in time and space (this has a big impact on patterns of evolution, at least I think so; models of forest fires, i.e., growth, death, fires of various sizes creating space for new growth, is the obvious parallel):

- The longer a function exists the more likely it is to accumulate callers. A function definition can remain unchanged and yet over time become more and more difficult to change.

- It can be very expensive to make changes to an existing function definition when there are lots of calls to it.

What do measurements of code have to say? Almost nothing; existing studies mostly do weird things like treating a system’s call graph as-if it were a social network (using a currently trendy metaphor is good for getting papers published, even if the mapping is all wrong), plotting power law-like graphs and spouting portentous nonsense.

What is really needed is measurements of forked systems; comparing systems derived from a common past will tell us how much natural variation exists due to individual choice.

FreeBSD, NetBSD and OpenBSD are the obvious poster children of forked, common heritage, systems written in C; I cannot think of any such systems written in Java or C++.

Programming using genetic algorithms: isn’t that what humans already do ;-)

Some time ago I wrote about the use of genetic programming to fix faults in software (i.e., insertion/deletion of random code fragments into an existing program). Earlier this week I was at a lively workshop, Genetic Programming for Software Engineering, with some of the very active researchers in this new subfield.

The genetic algorithm works by having a population of different programs, selecting X% of the best (as measured by some fitness function), making random mutations to those chosen and/or combining bits of programs with other programs; these modified programs are fed back to the fitness function and the whole process iterates until an acceptable solution is found (or a maximum iteration limit is reached).
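The loop just described can be sketched in a few lines. This is a toy, not what the researchers at the workshop use: the "programs" are lists of bits, fitness is the number of 1 bits, and every name and parameter value (`evolve`, `keep_fraction`, and so on) is an assumption chosen for illustration.

```python
import random

def evolve(fitness, mutate, crossover, population, keep_fraction=0.2,
           max_generations=200, target=None, rng=None):
    """Select the fittest, breed and mutate them, repeat until good enough."""
    rng = rng or random.Random()
    size = len(population)
    for generation in range(max_generations):
        ranked = sorted(population, key=fitness, reverse=True)
        if target is not None and fitness(ranked[0]) >= target:
            return ranked[0], generation      # acceptable solution found
        # Keep the best X% (at least two, so there is a pair to combine).
        survivors = ranked[:max(2, int(size * keep_fraction))]
        children = []
        while len(survivors) + len(children) < size:
            mum, dad = rng.sample(survivors, 2)
            children.append(mutate(crossover(mum, dad, rng), rng))
        population = survivors + children
    return max(population, key=fitness), max_generations

def flip_one_bit(bits, rng):
    """Random mutation: invert one randomly chosen bit."""
    i = rng.randrange(len(bits))
    return bits[:i] + [1 - bits[i]] + bits[i + 1:]

def one_point_crossover(a, b, rng):
    """Combine the front of one parent with the back of the other."""
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]

rng = random.Random(1)
start = [[rng.randint(0, 1) for _ in range(20)] for _ in range(30)]
best, generations = evolve(sum, flip_one_bit, one_point_crossover,
                           start, target=20, rng=rng)
print(f"best fitness {sum(best)} after {generations} generations")
```

Because the survivors are carried over unchanged, the best fitness never decreases from one generation to the next; whether that is desirable is one of the many options to tweak.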

There are lots of options to tweak; the fitness function gets to decide who has children and is obviously very important, but it can only work with what gets generated by the genetic mutations.

The idea I was promoting, to anybody unfortunate enough to be standing in front of me, was that the pattern of usage seen in human written code provides lots of very useful information for improving the performance of genetic algorithms in finding programs having the desired characteristics.

I think that the pattern of usage seen in human written code is driven by the requirements of the problems being solved and regular occurrence of the same patterns is an indication of the regularity with which the same requirements need to be met. As a representation of commonly occurring requirements these patterns are pre-tuned templates for genetic mutation and information to help fitness functions make life/death decisions (i.e., doesn’t look human enough, die!)

There is some noise in existing patterns of code usage, generated by random developer habits and larger fluctuations caused by many developers following the style in some popular book. I don’t have a good handle on estimating the signal to noise ratio.

There has been some work comparing the human maintainability of patches that have been written by genetic algorithms/humans. One of the driving forces behind this work is the expectation that the final patch will still be controlled by humans; having a patch look human-written is thought to increase the likelihood of it being ‘accepted’ by developers.

Genetic algorithms are also used to improve the runtime performance of programs. Bill Langdon reported that the authors of a program ‘he’ had speeded up by a factor of 70 had not responded to his emails. This may be a case of the authors not knowing how to handle something somewhat off the beaten track; it took a while for Linux developers to start responding to batches of fault reports generated as part of software analysis projects by academic research groups.

One area where human-like might not always be desirable is test case generation. It is easy to find faults in compilers by generating random source code (the syntax/semantics of the randomness follows the rules of the language standard). This approach results in an unmanageable number of faults. Is it worth fixing a fault generated by code that looks like it would never be written by a person? Perhaps the generator should stick to producing test cases that at least look like the code might be written by a person.
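For readers who have not seen random test generation, here is a minimal sketch that prints a syntactically valid C function containing a random arithmetic expression. The function names and the tiny grammar are assumptions for illustration; the sketch only follows syntax rules, whereas a serious generator also has to avoid undefined behaviour (e.g., signed overflow), which this one makes no attempt to do.

```python
import random

def random_expression(rng, depth=0, max_depth=4):
    """Build a random, fully parenthesised integer expression over x and y."""
    if depth >= max_depth or rng.random() < 0.3:
        # Leaf: a parameter name or a small constant.
        return rng.choice(["x", "y", str(rng.randint(0, 9))])
    op = rng.choice(["+", "-", "*"])
    left = random_expression(rng, depth + 1, max_depth)
    right = random_expression(rng, depth + 1, max_depth)
    return f"({left} {op} {right})"

def random_function(rng, name="f"):
    """Wrap a random expression in a C function definition."""
    body = random_expression(rng)
    return f"int {name}(int x, int y)\n{{\n    return {body};\n}}\n"

print(random_function(random.Random(42)))
```

Nothing here looks remotely human; expressions nested four deep in redundant parentheses are exactly the kind of code a person would never write.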
