Archive

Christmas books for 2024

My rate of book reading has picked up significantly this year. The following are the really interesting books I read; as is usually the case, most were not published this year.

I have enjoyed Grayson Perry’s TV programs on the art world, so I bought his book “Playing to the Gallery: Helping Contemporary Art in its Struggle to Be Understood”. It’s a fun, mischievous look at the art world by somebody working as a traditional artist, in the sense of creating work that they believe means/says something, rather than works that are only considered art because they are displayed in an art gallery.

“The Computer from Pascal to von Neumann” by H. H. Goldstine. This is a history of computing from the mid-1600s (the time of Blaise Pascal) to the mid-1900s (von Neumann died in 1957), told by a mathematician who was first involved in calculating artillery firing tables during World War II, and then worked with early computers and von Neumann. This book is full of insights that only a technical person could provide and is a joy to read.

I saw a poster advertising a guided tour of the trees in my local park, organized by Trees for Cities. It was a very interesting lunchtime; I had not appreciated how many different trees were growing there, including three different kinds of Oak tree. Trees for Cities run events all over the UK, and abroad. Of course, I had to buy some books to improve my tree recognition skills. I found “Collins tree guide” by O. Johnson and D. More to be the most useful and full of information. Various organizations have created maps of trees in cities around the world. The London Tree Map shows the location and species information for over 880,000 trees growing on streets (not parks); New York also has a map. For a general analysis of patterns of tree growth, see “How to Read a Tree” by T. Gooley.

“Medieval Horizons: Why the Middle Ages Matter” by I. Mortimer. This book takes the reader through the social, cultural and economic changes that happened in England during the Middle Ages, which the author specifies as the period 1000 to 1600. I knew that many people were serfs, but did not know that slaves accounted for around 10% of the population, dropping to zero percent during this period. Changes, at least for the well-off, included moving from living in longhouses to living in what we would call a house, art works moved from two-dimensional representations to life-like images (e.g., renaissance quality), printing enabled an explosion of books, non-poor people travelled more, ate better, and individualism started to take off.

Statistical Consequences of Fat Tails: Real World Preasymptotics, Epistemology, and Applications by N. N. Taleb is a mathematically dense book (while the pdf is in color, I was disappointed that the printed version is black/white; this is the one I read while travelling). This book tells you a lot more than you need to know about the consequences of fat tail distributions. Why might you be interested in the problems of fat tails? Taleb starts by showing how little noise it takes for the comforting assumptions implied by the Normal/Gaussian distribution to fly out the window. The primary comforting assumptions are that the mean and variance of a small sample are representative of the larger population. A world of fat tail distributions is one where the unexpected is to be expected, where a single event can wipe out an organization or industry (banks are said to have lost more in the 2008 financial crisis than they had made in the previous many decades). This book is hard going, and I kept at it to get a feel for the answers to some of the objections to the bad news conveyed. There are a couple of places where I should have been more circumspect in my Evidence-based software engineering book.
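The effect of a single extreme event can be illustrated with a small simulation. The following is a minimal sketch, not taken from Taleb's book: it compares the fraction of a sample's total contributed by its largest value, for thin-tailed (Normal) and fat-tailed (Pareto) draws. The sample size, seed, and Pareto tail index of 1.1 are illustrative assumptions.

```python
import random


def largest_share(values):
    """Fraction of the total contributed by the single largest value."""
    return max(values) / sum(values)


rng = random.Random(42)  # fixed seed, purely for repeatability
n = 10_000               # assumed sample size

# Thin tails: absolute values of standard Normal draws.
normal_sample = [abs(rng.gauss(0.0, 1.0)) for _ in range(n)]

# Fat tails: Pareto draws; the tail index 1.1 is an illustrative assumption.
pareto_sample = [rng.paretovariate(1.1) for _ in range(n)]

normal_share = largest_share(normal_sample)
pareto_share = largest_share(pareto_sample)

print(f"Normal: largest value is {normal_share:.4%} of the total")
print(f"Pareto: largest value is {pareto_share:.4%} of the total")
```

In the Normal case no single observation matters much, while in the Pareto case one observation can account for a noticeable fraction of the total, which is why the mean of a small sample may say little about the population.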

I have previously reviewed General Relativity: The Theoretical Minimum by Susskind and Cabannes.

“Embracing Defeat: Japan in the Wake of World War II” by John W. Dower describes in harrowing detail the dire circumstances of the population of Japan immediately after World War II and what they had to endure to survive.

For more detailed book reviews, see: Mr. and Mrs. Psmith’s Bookshelf with some excellent and insightful long book reviews, and the annual Astral Codex Ten book review contest usually has a few excellent reviews/books.

For those of you who think that civilization is about to collapse, or at least like talking about the possibility, a reading list. At the practical level, I think sword fighting and archery skills are more likely to be useful in the longer term.

Learning General relativity at a rudimentary mathematical level

For the longest time, I have wanted to have an understanding of Einstein’s theory of General relativity at a rudimentary mathematical level. Because General relativity has never been a mainstream topic in undergraduate physics, there are almost no books at this level. Also, the mathematics of General relativity is based on tensor calculus, which until recently was rarely used in engineering and non-relativistic physics; market size explains the lack of non-specialist books on the subject.

The Theoretical Minimum is a book series that aims to teach everything required to gain a basic understanding of various areas of modern physics. The primary author of the books is Leonard Susskind.

At the start of this year, “General Relativity: The Theoretical Minimum” by Susskind and Cabannes was published as a paperback (i.e., convenient for reading on the London Underground), and over the last two months I have been slowly working my way through it.

At the start of this week, I discovered Eddie Boyes’ YouTube series on General relativity.

An understanding of General relativity requires an understanding of Tensor calculus, which in turn requires understanding the contravariant and covariant components of a vector. In the Cartesian coordinate system, which pervades undergraduate physics, the axes are at right angles and the measurement units are constant. In this case, the covariant component of a vector is the same as the contravariant component.

Contravariant/covariant vectors are different when the axes are not at right angles, as the plot below (from Wikipedia) shows. Choosing one component of the blue vector, the $x$ component of its contravariant vector is measured by drawing a line parallel to the y-axis (on right), while the $x$ component of its covariant vector is found by drawing a line perpendicular to the x-axis (on left). The plot below shows how the measurements of each component vary as the angle between the x/y axes varies:

Susskind’s definition of covariant vectors is a differential equation, which might be enough for some people, but not me. Boyes gives a very clear description (he does labour points, and I watched at 1.75 speed).

There are other places where I found Boyes’ explanation to be clearer, and a few where I preferred Susskind. I would recommend both.

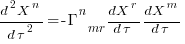
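The parallel/perpendicular construction for the two kinds of component can be sketched numerically. This is a minimal illustration, under the assumption of unit-length basis vectors with the x-axis along (1, 0) and the y-axis at angle `theta` to it; the function names are hypothetical.

```python
import math


def contravariant(vx, vy, theta):
    """Components (v^1, v^2) such that v = v^1*e1 + v^2*e2.

    e1 = (1, 0), e2 = (cos(theta), sin(theta)); this is the projection
    made by drawing lines parallel to the axes.
    """
    v2 = vy / math.sin(theta)
    v1 = vx - v2 * math.cos(theta)
    return v1, v2


def covariant(vx, vy, theta):
    """Components (v_1, v_2) = (v.e1, v.e2): perpendicular projections."""
    return vx, vx * math.cos(theta) + vy * math.sin(theta)


vx, vy = 2.0, 1.0  # the vector, given in ordinary Cartesian components
for degrees in (90, 75, 60, 45):
    theta = math.radians(degrees)
    up = contravariant(vx, vy, theta)
    down = covariant(vx, vy, theta)
    print(f"{degrees:3d} deg  contravariant=({up[0]:.3f}, {up[1]:.3f})"
          f"  covariant=({down[0]:.3f}, {down[1]:.3f})")
```

At 90 degrees the two sets of components agree; they drift apart as the angle between the axes closes.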

What complicates the use of Tensor calculus is the multiplicity of terms in an equation. In 3-dimensional Cartesian coordinates there are three variables x/y/z, while in 3-dimensional Tensor calculus there are nine variables (the coordinate system can produce an interaction between any axis and another one). The equation for the proper acceleration of an object, written using the Einstein summation convention (there is a summation over the repeated indexes, $\mu$ and $\nu$) is: $\frac{d^2 x^\lambda}{d\tau^2} = -\Gamma^\lambda_{\mu\nu}\frac{dx^\mu}{d\tau}\frac{dx^\nu}{d\tau}$. In three dimensions, there would be three equations (i.e., $\lambda = 1, 2, 3$), each with nine terms on the right, for $\mu = 1, 2, 3$, $\nu = 1, 2, 3$; $\Gamma^\lambda_{\mu\nu}$ is the Christoffel symbol which calculates the unit distance for a location in space (in Cartesian coordinates this simplifies down to what we all learned in school); the superscripts denote contravariant components, and the subscripts denote covariant components:

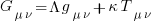
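To get a feel for what a summation over repeated indexes involves, here is a minimal sketch that evaluates an expression of the form $-\Gamma^\lambda_{\mu\nu}u^\mu u^\nu$ with explicit loops. As a simplifying assumption it uses flat two-dimensional polar coordinates $(r, \theta)$, where the only non-zero Christoffel symbols are $\Gamma^r_{\theta\theta} = -r$ and $\Gamma^\theta_{r\theta} = \Gamma^\theta_{\theta r} = 1/r$, so each equation has four terms rather than nine. The function names are hypothetical.

```python
def christoffel_polar(r):
    """gamma[l][m][n] = Gamma^l_{mn} for flat 2-D polar coordinates.

    Index 0 is r and index 1 is theta.
    """
    gamma = [[[0.0, 0.0], [0.0, 0.0]] for _ in range(2)]
    gamma[0][1][1] = -r        # Gamma^r_{theta theta}
    gamma[1][0][1] = 1.0 / r   # Gamma^theta_{r theta}
    gamma[1][1][0] = 1.0 / r   # Gamma^theta_{theta r}
    return gamma


def coordinate_acceleration(gamma, u):
    """One value per free index l, each a sum over the repeated m and n."""
    dim = len(u)
    return [
        -sum(gamma[l][m][n] * u[m] * u[n]
             for m in range(dim) for n in range(dim))
        for l in range(dim)
    ]


r = 2.0
u = [0.5, 0.25]  # (dr/dtau, dtheta/dtau), arbitrary illustrative values
acc = coordinate_acceleration(christoffel_polar(r), u)
print(acc)
```

The first component is the familiar centrifugal term $r\,(d\theta/d\tau)^2$, and the second is the Coriolis-like term $-(2/r)(dr/d\tau)(d\theta/d\tau)$; in Cartesian coordinates all the Christoffel symbols vanish and both components are zero.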

How did Einstein derive his field equation, $R_{\mu\nu} - \frac{1}{2} R g_{\mu\nu} = \frac{8\pi G}{c^4} T_{\mu\nu}$, of General relativity?

I had known of the equivalence principle, that the force experienced by an accelerating observer (e.g., travelling in an elevator) is indistinguishable from the force of gravity, but had not appreciated how this principle played an integral part in the derivation of the equations for the force experienced in a gravitational field.

The derivation is based on asking about the trajectory of a rocket accelerating at a constant rate in a flat space-time (allowing Cartesian coordinates to be used). If a stationary observer on the ground tracks the rocket from when it starts with zero velocity and then constantly accelerates in a straight line for a very long time, how will the velocity and distance of the rocket (from the observer) change over time? From the ground-based observer’s perspective, the rocket’s velocity will get closer and closer to the speed of light; Special relativity prevents it exceeding the speed of light. The distance travelled by the rocket over time, as tracked by the ground-based observer, will be seen to be fitted by a hyperbolic equation. This analysis is used to justify the use of hyperbolic geometry as a coordinate system for dealing with gravity.
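The rocket's behavior, as seen from the ground, can be sketched with the standard Special relativity formulas for constant proper acceleration $a$: $v(t) = at/\sqrt{1 + (at/c)^2}$ and $x(t) = (c^2/a)\left(\sqrt{1 + (at/c)^2} - 1\right)$, which satisfy the hyperbola $(x + c^2/a)^2 - (ct)^2 = (c^2/a)^2$. The choice of a one-g acceleration and the function names are assumptions made for illustration.

```python
import math

C = 299_792_458.0          # speed of light, m/s
G0 = 9.81                  # assumed constant proper acceleration, m/s^2
YEAR = 365.25 * 24 * 3600  # seconds in a (Julian) year


def velocity(t, a=G0):
    """Rocket velocity after ground-frame time t; always below C."""
    eps = a * t / C
    return a * t / math.sqrt(1.0 + eps * eps)


def distance(t, a=G0):
    """Distance from the observer after ground-frame time t.

    Algebraically equal to (C**2/a) * (sqrt(1 + eps**2) - 1), rearranged
    to avoid subtracting two nearly equal numbers when t is small.
    """
    eps = a * t / C
    return a * t * t / (1.0 + math.sqrt(1.0 + eps * eps))


for years in (0.5, 1, 2, 5, 10):
    t = years * YEAR
    print(f"{years:4} years: v/c = {velocity(t) / C:.4f}, "
          f"distance = {distance(t) / (C * YEAR):.3f} light years")
```

For small t the distance reduces to the Newtonian $\frac{1}{2}at^2$; for large t the velocity flattens out just below the speed of light.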

A hyperbolic metric space was not enough for Einstein to derive his equations. He also assumed conservation of various quantities, and appears to have included some idealism about how the Universe ought to operate.

Special relativity predicts some very surprising behaviours, such as time dilation. What very surprising behaviours does General relativity predict?

The bending of light by gravity is often cited as an example of a prediction by General relativity. However, bending is also predicted by Newtonian mechanics, but the amount is half that predicted by General relativity.
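The factor of two can be made concrete using the standard small-angle formulas for light passing a mass $M$ at distance $b$: $2GM/(c^2 b)$ for the Newtonian calculation and $4GM/(c^2 b)$ for General relativity. The sketch below evaluates both for light grazing the Sun; the rounded physical constants and function names are assumptions.

```python
import math

G = 6.674e-11     # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8       # speed of light, m/s
M_SUN = 1.989e30  # mass of the Sun, kg
R_SUN = 6.957e8   # radius of the Sun, m


def deflection_newtonian(mass, b):
    """Deflection angle (radians) from the Newtonian calculation."""
    return 2.0 * G * mass / (C**2 * b)


def deflection_gr(mass, b):
    """Deflection angle (radians) predicted by General relativity."""
    return 4.0 * G * mass / (C**2 * b)


def arcseconds(radians):
    return math.degrees(radians) * 3600.0


newton_arcsec = arcseconds(deflection_newtonian(M_SUN, R_SUN))
gr_arcsec = arcseconds(deflection_gr(M_SUN, R_SUN))
print(f"Newtonian: {newton_arcsec:.2f} arcseconds")
print(f"General relativity: {gr_arcsec:.2f} arcseconds")
```

Both angles are under two arcseconds, which gives some idea of the measurement precision needed to distinguish the two predictions.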

While Black holes get a lot of public attention, and are given discussion time by Susskind and Boyes, General relativity is not needed to make the prediction that the escape velocity from a sufficiently massive body will be greater than the speed of light.
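The Newtonian version of this prediction is a one-line calculation: setting the escape velocity $\sqrt{2GM/r}$ equal to the speed of light gives a critical radius of $2GM/c^2$. A minimal sketch, using rounded constants and hypothetical function names:

```python
import math

G = 6.674e-11     # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8       # speed of light, m/s
M_SUN = 1.989e30  # mass of the Sun, kg
R_SUN = 6.957e8   # radius of the Sun, m


def escape_velocity(mass, radius):
    """Newtonian escape velocity (m/s) from the surface of a body."""
    return math.sqrt(2.0 * G * mass / radius)


def critical_radius(mass):
    """Radius (m) at which the Newtonian escape velocity equals C."""
    return 2.0 * G * mass / C**2


r_crit = critical_radius(M_SUN)
print(f"Escape velocity from the Sun: {escape_velocity(M_SUN, R_SUN) / 1e3:.0f} km/s")
print(f"Radius at which it would reach c: {r_crit / 1e3:.2f} km")
```

The Sun would have to be squeezed to a radius of about three kilometres for its Newtonian escape velocity to reach the speed of light.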

General relativity deals with accelerating frames of reference (gravity or rocket ships). Both Susskind and Boyes discuss some version of Bell’s spaceship paradox, which is actually a consequence of Special relativity; I think that Boyes’ YouTube video is the easiest to follow.

Apart from ticking another item on my to-do list, I now appreciate some of the subtle points people make about General relativity. I’m sure that this knowledge will increase the likelihood of me getting in over my head in some future discussion on relativity.

A distillation of Robert Glass’s lifetime experience

Robert Glass is a software engineering developer, manager, researcher and author who, until six months ago, I had vaguely heard of; somehow I had missed reading any of his 25 books. After seeing citations to some of Glass’s books, I bought half-a-dozen or so, second hand. They are well written, and twenty-five years ago I would have found them very interesting; now I simply agree with the points made.

“Software Creativity 2.0” is Glass’s penultimate book, published in 2006, and the one that caught my attention. I would recommend his other books to anybody who is new to software engineering, or experienced people looking for an encapsulation in print of what they encounter at work.

Glass was 74 when this book was published, having started working in computing in 1954. He was there and seems to have met many of the major names in software engineering, working with some of them.

The book is a clear-eyed summary of what Glass has learned from being involved with software engineering, and watching method/tool fashions come and go. My favourite section draws parallels between software development cultures and the culture of Roman vs. Greek vs. Barbarian:

| Models | Roman | Greek | Barbarians |
|---|---|---|---|
| Organization | Organize people | Organize things | Barely |
| Focus | Manages projects | Writes programs | Leap to coding |
| Motivation | Group goals | Problem to be solved | Heroics |
| Working style | Organizations | Small groups | Solo |
| Politics | Imperial | Democratic | Anarchist |
| Tool use | People are tools | Things are tools | Avoid tools |
| Status | Function-ocracy | Meritocracy | Fear-ocracy |
| Activities | Plan things | Do things | Break things |
| Emphasize | Form | Substance | Line of code |

The contents are essentially a collection of short essays, organized under the 19 chapter headings below, which in turn are grouped into four parts. The first nine chapters (part I, and 60% of pages) contain the experience based material, with the subsequent parts/pages having a creativity theme. A thread running through the discussion is idealism/practice:

- Discipline vs. Flexibility
- Formal methods versus Heuristics
- Optimizing versus Satisficing
- Quantitative versus Qualitative Reasoning
- Process versus Product
- Intellect vs. Clerical Tasks
- Theory vs. Practice
- Industry vs. Academe
- Fun versus Getting Serious
- Creativity in the Software Organization
- Creativity in Software Technology
- Creative Milestones in Software History
- Organizational Creativity
- The Creative Person
- Computer Support for Creativity
- Creativity Paradoxes
- 'Twas Always Thus
- A Synergistic Conclusion
- Other Conclusions

This book deserves to be widely read. I found it best to read a single section per sitting.

Christmas books for 2023

This year’s Christmas book list is based on what I read this year, and for the first time includes a blog series that I’m sure will eventually appear in book form.

“To Explain the World: The discovery of modern science” by Steven Weinberg, 2015. Unless you know that Steven Weinberg won a physics Nobel prize, this looks like just another history of science book (the preface tells us that he also taught a history of science course for over a decade). This book is written by a scientist who appears to have read the original material (I’m assuming in translation), who puts the discoveries and the scientists involved at the center of the discussion; this is not the usual historian who sprinkles in a bit about science, while discussing the cast of period characters. For instance, I had never understood why the work of Galileo was considered to be so important (almost as a footnote, historians list a few discoveries of his). Weinberg devotes pages to discussing Galileo’s many discoveries (his mathematics was a bit behind the times, continuing to use a geometric approach, rather than the newer algebraic techniques), and I now have a good appreciation of why Galileo is rated so highly by scientists down the ages.

Chapter 2 of “When Old Technologies Were New: Thinking about electric communication in the late nineteenth century” by Carolyn Marvin, 1988. The book is worth buying just for chapter 2, which contains many hilarious examples of how the newly introduced telephone threw a spanner in to the workings of the social etiquette of the class of person who could afford to install one. Suitors could talk to daughters without other family members being present, public phone booths allowed any class of person to be connected directly to the man of the house, and when phone companies started publishing publicly available directories containing subscriber name/address/number, WELL!?! In the US there were 1 million telephones installed by 1899, and subscribers were sometimes able to listen to live musical concerts and sports events (commercial radio broadcasting did not start until the 1920s).

“The Grand Strategy of the Roman Empire: From the first century A.D. to the third” by Edward Luttwak, 1976; h/t Mr. and Mrs. Psmith’s review. I cannot improve or add to John Psmith’s review. The book contains more details; the review captures the essence. On a related note, for the hard core data scientists out there: Early Imperial Roman army campaigning: observations on marching metrics, energy expenditure and the building of marching camps.

“Innovation and Market Structure: Lessons from the computer and semiconductor industries” by Nancy S. Dorfman, 1987. An economic perspective on the business of making and selling computers, from the mid-1940s to the mid-1980s. Lots of insights, (some) data, and specific examples (for the most part, the historians of computing are, well, historians who can craft a good narrative, but the insights are often lacking). The references led me to: Mancke, Fisher, and McKie, who condensed the 100K+ pages of trial transcript from the 1969–1982 IBM antitrust trial down to 1,500+ pages of Historical narrative.

Worshipping the Future by Helen Dale and Lorenzo Warby is “… a series of essays dissecting the social mechanisms that have led to the strange and disorienting times in which we live.” The series is a well written analysis that attempts to “… understand mechanisms of how and the why, …” of Woke.

Honourable mentions

“The Big Con: The story of the confidence man and the confidence trick” by David W. Maurer (source material for the film The Sting).

“Cubed: A secret history of the workplace” by Nikil Saval.

Rereading The Mythical Man-Month

The book The Mythical Man-Month by Fred Brooks, first published in 1975, continues to be widely referenced; my 1995 edition cites over 250K copies in print. In the past I have found it to be a pleasant, relatively content free, read.

Having spent some time analyzing computing data from the 1960s, I thought it would be interesting to reread Brooks in the light of what I had recently learned. I cannot remember when I last read the book, and only keep a copy to be able to check when others cite it as a source.

Each of the 15 chapters, in the 1975 edition, takes the form of a short five/six page management briefing on some project related topic; chapters start with a picture of some work of art on one page, and a short quote from a famous source occupies the opposite page. The 20th anniversary edition adds four chapters, two of which ‘refire’ Brooks’ 1986 paper introducing the term No Silver Bullet (that no single technology will produce an order of magnitude improvement in productivity).

Rereading, I found the 1975 contents to be sufficiently non-specific that my newly acquired knowledge did not change anything. It was a pleasant read, various ideas and some data points are presented, the work of others is covered and cited, a few points are briefly summarised and the chapter ends. The added chapters have a different character than the earlier ones, being more detailed in their discussion and more specific in suggesting outcomes. The ‘No Silver Bullet’ material dismisses some of the various claimed discoveries of a silver bullet.

Why did the book sell so well?

The material is an easy read, and given that no solutions are heavily pushed, there is little to disagree with.

Being involved on a project for the first time can be a confusing experience, and even more experienced people get lost. Brooks can provide solace through his calm presentation of project behaviors as stuff that happens.

What project experience did Brooks have?

Brooks’ PhD thesis The Analytic Design of Automatic Data Processing Systems was completed in 1956, and, aged 25, he joined IBM that year. He was project manager for System/360 from its inception in late 1961 to its launch in April 1964. He managed the development of the operating system OS/360 from February 1964, for a year, before leaving to found the computer science department at the University of North Carolina at Chapel Hill, where he remained.

So Brooks gained a few years of hands-on experience at the start of his career and spent the rest of his life talking about it. A not uncommon career path.

Managing the development of an O/S intended to control a machine containing 16K of memory (i.e., IBM’s System/360 model 30) might not seem like a big deal. Teams of half-a-dozen good people had implemented projects like this since the 1950s. However, large companies create large teams, operating over multiple sites, with ever changing requirements and interfaces, changing hardware, all with added input from marketing (IBM was/is a sales-driven organization). I suspect that the actual coding effort was a rounding error, compared to the time spent in meetings and on telephone calls.

Brooks looked after the management, and Gene Amdahl looked after the technical stuff (for lots of details see IBM’s 360 and early 370 systems by Pugh, Johnson, and Palmer).

Brooks was obviously a very capable manager. Did the OS/360 project burn him out?

An evidence-based software engineering book from 2002

I recently discovered the book A Handbook of Software and Systems Engineering: Empirical Observations, Laws and Theories by Albert Endres and Dieter Rombach, completed in 2002.

The preface says: “This book is about the empirical aspects of computing. … we intend to look for rules and laws, and their underlying theories.” While this sounds a lot like my Evidence-based Software Engineering book, the authors take a very different approach.

The bulk of the material consists of a detailed discussion of 50 ‘laws’, 25 hypotheses and 12 conjectures based on the template: 1) a highlighted sentence making some claim, 2) Applicability, i.e., situations/context where the claim is likely to apply, 3) Evidence, i.e., citations and brief summary of studies.

As researchers of many years standing, I think the authors wanted to present a case that useful things had been discovered, even though the data available to them is nowhere near good enough to be considered convincing evidence for any of the laws/hypotheses/conjectures covered. The reasons I think this book is worth looking at are not those intended by the authors; my reasons include:

- the contents mirror the unquestioning mindset that many commercial developers have for claims derived from the results of software research experiments, or at least the developers I talk to about software research. I’m forever educating developers about the need for replications (the authors give a two paragraph discussion of the importance of replication), that sample size is crucial, and that professional developers should be used as subjects.

Having spent twelve chapters writing authoritatively on 50 ‘laws’, 25 hypotheses and 12 conjectures, the authors conclude by washing their hands: “The laws in our set should not be seen as dogmas: they are not authoritatively asserted opinions. If proved wrong by objective and repeatable observations, they should be reformulated or forgotten.”

For historians of computing this book is a great source for the software folklore of the late 20th/early 21st century,

- the Evidence sections for each of the laws/hypotheses/conjectures are often unintentionally damning in their summary descriptions of short/small experiments involving a handful of people or a few hundred lines of code. For many, I would expect the reaction to be: “Is that it?”

Previously, in developer/researcher discussions, if a ‘fact’ based on the findings of long ago software research is quoted, I usually explain that it is evidence-free folklore; followed by citing The Leprechauns of Software Engineering as my evidence. This book gives me another option, and one with greater coverage of software folklore,

- the quality of the references, which are often to the original sources. Researchers tend to read the more recently published papers, and these are the ones they often cite. Finding the original work behind some empirical claim requires following the trail of citations back in time, which can be very time-consuming.

Endres worked for IBM from 1957 to 1992, and was involved in software research; he had direct contact with the primary sources for the software ‘laws’ and theories in circulation today. Rombach worked for NASA in the 1980s and founded the Fraunhofer Institute for Experimental Software Engineering.

The authors cannot be criticized for the minuscule amount of data they reference, and not citing less well known papers. There was probably an order of magnitude less data available to them in 2002, than there is available today. Also, search engines were only just becoming available, and the amount of material available online was very limited in the first few years of the 2000s.

I started writing a book in 2000, and experienced the amazing growth in the ability of search engines to locate research papers (first using AltaVista and then Google), along with locating specialist books with Amazon and AbeBooks. I continued to use university libraries for papers, which I did not use for the evidence-based book (not that this was a viable option).

Christmas books for 2022

This year’s list of books for Christmas, or Isaac Newton’s birthday (in the Julian calendar in use when he was born), returns to its former length, and even includes a book published this year. My book Evidence-based Software Engineering also became available in paperback form this year, and would look great on somebody’s desk.

The Mars Project by Wernher von Braun, first published in 1953, is a 91-page high-level technical specification for an expedition to Mars (calculated by one man and his slide-rule). The subjects include the orbital mechanics of travelling between Earth and Mars, the complications of using a planet’s atmosphere to slow down the landing craft without burning up, and the design of the spaceships and rockets (the bulk of the material). The one subject not covered is cost; von Braun’s estimated 950 launches of heavy-lift launch vehicles, to send a fleet of ten spacecraft with 70 crew, will not be cheap. I’ve no idea what today’s numbers might be.

The Fabric of Civilization: How textiles made the world by Virginia Postrel is a popular book full of interesting facts about the economic and cultural significance of something we take for granted today (or at least I did). For instance, Viking sails took longer to make than the ships they powered, and spinning the wool for the sails on King Canute’s North Sea fleet required around 10,000 work years.

Wyclif’s Dust: Western Cultures from the Printing Press to the Present by David Hugh-Jones is covered in an earlier post.

The Second World Wars: How the First Global Conflict Was Fought and Won by Victor Davis Hanson approaches the subject from a systems perspective. How did the subsystems work together (e.g., arms manufacturers and their customers, the various arms of the military/politicians/citizens), the evolution of manufacturing and fighting equipment (the allies did a great job here, Germany not very good, and Japan/Italy terrible) to increase production/lethality, and the prioritizing of activities to achieve aims. The 2011 Christmas books listed “Europe at War” by Norman Davies, which approaches the war from a data perspective.

Through the Language Glass: Why the world looks different in other languages by Guy Deutscher is a science driven discussion (written in a popular style) of the impact of language on the way its speakers interpret their world. While I have read many accounts of the Sapir–Whorf hypothesis, this book was the first to tell me that 70 years earlier, both William Gladstone (yes, that UK prime minister and Homeric scholar) and Lazarus Geiger had proposed theories of color perception based on the color words commonly used by the speakers of a language.

Printing press+widespread religious behavior: A theory

The book The Weirdest People in the World: How the West Became Psychologically Peculiar and Particularly Prosperous provides an explanation of the processes which weakened the existing social ties of family and tribe; however, the emergence of WEIRD people (Western, Educated, Industrialized, Rich and Democratic) required new social norms to spread and be accepted throughout society. A major technical innovation, in the form of the printing press, provided the means for mass communication of ideas and practices.

David Hugh-Jones’ book Wyclif’s Dust: Western Cultures from the Printing Press to the Present describes the social consequences of what he calls book religion; a combination of deeply religious western societies and the ability of individuals to write and sell affordable books (made possible by the printing press). Religion+printing press created the conditions for what Hugh-Jones calls a hothouse culture, a period from the 1600s to the end of the 1800s.

Around 1440 the printing press is invented and quickly spreads; around 5 million books were handwritten in the 1400s, about 80 million books were produced in the first 50 years of printing, and around a billion in the 1700s. During the 1500s the Protestant reformation happens; Protestantism encourages its followers to read the Bible, which creates a demand for printed Bibles and the need to be able to read (which increases literacy rates). In England, between 1480 and 1640, 40% of published books were religious.

The changes to society’s existing norms are wrought by cultural transmission, initially via middle class parents making use of edifying books to teach their children moral values and social skills; later Sunday schools took on this role, but also had to offer reading lessons to attract members. In the adult world, accepted norms were maintained by social enforcement. The impact on western societies was widespread because observant religious behavior was widespread.

The original intent, of those writing the religious books, was the creation of a god fearing society. In practice, a trust based society was created, where workers might be relied upon not to shirk their duties and businessmen to not renege on agreements.

In the beginning science, in the form of printed technical books, rarely made an appearance. In the 1700s the Enlightenment happens, and scientific books are discussed by small collections of disparate individuals. The industrial revolution happens, but the bulk of the demand is for trustworthy workers; technical and scientific know how remains a minority interest.

In Part I of the book, Hugh-Jones weaves a readable and convincing narrative. Part II, 1900 to today, is a tale of the crumbling and breakdown of the social forces and incentives that created the trust based society; while examples are enumerated, no overarching theory is proposed (I skimmed this part).

Christmas books for 2021

This year, my list of Christmas books is very late because there is only one entry (first published in 1950), and I was not sure whether a 2021 Christmas book post was worthwhile.

The book is “Planning in Practice: Essays in Aircraft planning in war-time” by Ely Devons. A very readable, practical discussion, with data, on the issues involved in large scale planning; the discussion is timeless. Check out second-hand book sites for low cost editions.

Why isn’t my list longer?

Part of the reason is me. I have not been motivated to find new topics to explore, via books rather than blog posts. Things are starting to change, and perhaps the list will be longer in 2022.

Another reason is the changing nature of book publishing. There is rarely much money to be made from the sale of non-fiction books, and the desire to write down their thoughts and ideas seems to be the force that drives people to write a book. Sites like substack appear to be doing a good job of diverting those with a desire to write something (perhaps some authors will feel the need to create a book length tome).

Why does an author need a publisher? The nitty-gritty technical details of putting together a book to self-publish are slowly being simplified by automation, e.g., document formatting and proofreading. It’s a win-win situation to make newly written books freely available, at least if they are any good. The author reaches the largest readership (which helps maximize the impact of their ideas), and readers get a free electronic book. Authors of not very good books want to limit the number of people who find this out for themselves, and so charge money for the electronic copy.

Another reason for the small number of good new, non-introductory books is the difficulty of having something new to say. Scientific revolutions, or even minor resets, are rare (i.e., the gaps between them are measured in decades). Once several good books are available, and nothing much new has happened, why write a new book on the subject?

The market for introductory books is much larger than that for books covering advanced material. While publishers obviously want to target the largest market, these are not the kind of books I tend to read.

Electronic Evidence and Electronic Signatures: book

Electronic Evidence and Electronic Signatures by Stephen Mason and Daniel Seng is not the sort of book that I would normally glance at twice (based on its title). However, at the start of the year I had an interesting email conversation with the first author, a commentator who worked for the defence team on the Horizon IT project case, and he emailed with the news that the fifth edition was now available (there’s a free PDF version, so why not have a look; sorry Stephen).

Regular readers of this blog will be interested in chapter 4 (“Software code as the witness”) and chapter 5 (“The presumption that computers are ‘reliable'”).

Legal arguments are based on precedent, i.e., decisions made by judges in earlier cases. The one thing that stands out from these two chapters is how few cases have involved source code and/or reliability, and how simplistic the software issues have been (compared to issues that could have been involved). Perhaps the cases involving complicated software issues get simplified by the lawyers, or they look like they will be so difficult/expensive to litigate that the cases don’t make it to court.

Chapter 4 provides various definitions of source code, all based around the concept of imperative programming, i.e., the code tells the computer what to do. There is no mention of declarative programming, where the code specifies the information required and the computer has to figure out how to obtain it (SQL being a widely used language based on this approach). The current Wikipedia article on source code is based on imperative programming, but the programming language article is not so narrowly focused (thanks to some work by several editors many years ago 😉).
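
The imperative/declarative distinction can be sketched with a small example. Everything in it is invented for illustration (the table, the column names and the data are hypothetical, and Python's standard sqlite3 module is used only because it is convenient). The first function spells out each step needed to find the answer; the second hands the database a description of the rows wanted and leaves the how to the SQL engine.

```python
import sqlite3

# Hypothetical data: (name, reading) pairs invented for this illustration.
ROWS = [("a", 12), ("b", 40), ("c", 35), ("d", 7)]


def imperative_over(rows, threshold):
    """Imperative: the code tells the computer each step to perform."""
    result = []
    for name, reading in rows:
        if reading > threshold:
            result.append(name)
    result.sort()
    return result


def declarative_over(rows, threshold):
    """Declarative: the SQL states what is wanted; the engine decides how."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE readings (name TEXT, reading INTEGER)")
        conn.executemany("INSERT INTO readings VALUES (?, ?)", rows)
        cursor = conn.execute(
            "SELECT name FROM readings WHERE reading > ? ORDER BY name",
            (threshold,),
        )
        return [row[0] for row in cursor]
    finally:
        conn.close()
```

Both functions return the same names for the same input; a definition of source code that only covers the first style would arguably miss the second.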

There is an interesting discussion around the idea of source code as hearsay, with a discussion of cases (see 4.34) where the person who wrote the code had to give evidence so that the program output could be admitted as evidence. I don’t know how often the person who wrote the code has to give evidence, but these days code often has multiple authors, and their identity is not always known (e.g., author details have been lost, or the submission effectively came via an anonymous email).

Chapter 5 considers the common law presumption in the law of England and Wales that ‘In the absence of evidence to the contrary, the courts will presume that mechanical instruments were in order’. Yikes! The fact that this presumption is nonsense, at least for computers, was discussed in an earlier post.

There is plenty of case law discussion around the accuracy of devices used to breath-test motorists for their alcohol level, and defendants being refused access to the devices and associated software. Now, I’m sure that the software contained in these devices contains coding mistakes, but was a particular positive reading the result of a coding mistake? Without replicating the exact conditions occurring during the original test, it could be very difficult to say. The prosecution and judges make the common mistake of assuming that because the science behind the test has been validated, the device must produce correct results; ignoring the fact that the implementation of the science in software may contain mistakes. I have lost count of the number of times that scientists/programmers have told me that because the science behind their code is correct, the program output must be correct. My retort that there are typos in the scientific papers they write, therefore there may be typos in their code, usually fails to change their minds; they are so fixated on the correctness of the science that possible mistakes elsewhere are brushed aside.
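
A toy sketch of the point, using an invented example that is not drawn from any breath-testing device: the "science" here is simply the arithmetic mean, which nobody disputes. The function names and the particular mistake are hypothetical. The second function implements the same formula with an off-by-one mistake, and its output is wrong for some inputs and right for others, which is why replicating the original conditions matters.

```python
def mean_of_readings(readings):
    """Reference implementation of the validated formula: the arithmetic mean."""
    return sum(readings) / len(readings)


def mean_of_readings_buggy(readings):
    """Same formula, but an off-by-one mistake skips the final reading."""
    total = 0.0
    for i in range(len(readings) - 1):  # mistake: should be range(len(readings))
        total += readings[i]
    return total / len(readings)


# The mistake is invisible when the final reading happens to be zero...
same_input = [40, 40, 0]
hidden = mean_of_readings(same_input) == mean_of_readings_buggy(same_input)

# ...and changes the answer when it is not.
other_input = [30, 30, 60]
correct = mean_of_readings(other_input)
wrong = mean_of_readings_buggy(other_input)
```

The formula is correct in both functions; only the implementation differs, and testing with the first input alone would not reveal the problem.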

The naivety of some judges is astonishing. In one case (see 5.44) a professor who was an expert in mathematics, physics and computers, who had read the user manual for an application, but had not seen its source code, was considered qualified to give evidence about the operation of the software!

Much of chapter 5 is essentially an overview of software reliability, written by a barrister for legal professionals, i.e., it is not always a discussion of case law. A barrister’s explanation of how software works can be entertainingly inaccurate, but the material here is correct in a broad brush sense (and I did not spot any entertaining inaccuracies).

Other than breath-testing, the defence asking for source code is rather like a dog chasing a car. The software for breath-testing devices is likely to be small enough that one person might do a decent job of figuring out how it works; many software systems are not only much, much larger, but are dependent on an ecosystem of hardware/software to run. Figuring out how they work will take multiple (expensive expert) people a lot of time.

Legal precedents are set when both sides spend the money needed to see a court case through to the end. It’s understandable why the case law discussed in this book is so sparse and deals with relatively simple software issues. The cost of fighting a case involving the complexity of modern software is going to be astronomical.
