Coral 66, CHILL, C, Cobol and C++

C is for Coral 66, CHILL, C, Cobol and C++. Writing compilers for languages beginning with C is a surprisingly accurate summary of the first half of my professional career.

Coral 66 is a variant of Algol 60 created by a branch of the UK Ministry of Defence. Apart from being the target language for my first compiler-related work after graduating (a preprocessor to implement floating-point double), it also illustrates the difference between the UK and US military establishments, with the former deriving its language from the academically respectable Algol 60 and the latter deriving Jovial from the engineering-oriented Fortran.

CHILL gets mentioned because I spent 2 years writing and maintaining a front end of a CHILL compiler whose claim to fame may be as the oldest compiler still in production use; I have been half expecting somebody to point to a PL/1 mainframe compiler that is older. The language does support 2-dimensional switch statements, which I have not encountered elsewhere, e.g., switch i, j ... requires matching the value of i against one list of case labels and then matching the value of j against a second list associated with the first matched value.
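The two-level matching described above can be sketched in a few lines. This is Python, not CHILL syntax, and the table layout, the function name `two_dim_switch` and the labels are all invented for illustration; it only models the behaviour as described, i.e., pick the first entry whose labels match i, then match j against the alternatives attached to that entry.

```python
def two_dim_switch(i, j, table, default=None):
    """Match i against the first-level labels, then j against the
    second-level labels attached to the first matching i entry."""
    for i_labels, j_alternatives in table:
        if i in i_labels:
            # Only the alternatives attached to this i entry are tried.
            for j_labels, action in j_alternatives:
                if j in j_labels:
                    return action
            return default
    return default


# Hypothetical case table: (labels for i, [(labels for j, result), ...])
TABLE = [
    ({1, 2}, [({0}, "low-zero"), ({1, 2, 3}, "low-small")]),
    ({3}, [({0, 1}, "three-low"), ({2}, "three-two")]),
]

print(two_dim_switch(1, 0, TABLE))
print(two_dim_switch(3, 2, TABLE))
```

A nested pair of ordinary switch statements gives the same effect; the point of the CHILL form is that both selectors appear in a single statement.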

C, I have written enough about this already.

COBOL is generally mocked by software developers, but it is a surprisingly sophisticated language; I suspect that the real target of the mockery is the thought of business people writing software. Business people have one redeeming characteristic in the programming language world: they are willing to pay good money for good compilers (generally developers are loath to spend money on the tools of their trade). I think that more money has been spent buying COBOL compilers than buying compilers for all the other languages put together.

My company won a contract to produce an optimising Micro Focus COBOL code generator for the Motorola 88000 (the successor to the popular, to developers, 68000); this is where I got to experience the most extreme form of debugging I have ever done. Creating a code generator for a new cpu involves cross-compiling programs from a platform that already supports the necessary tools and eventually cross-compiling the new compiler so that it executes on the target cpu. Porting software to a new cpu and OS invariably uncovers new faults that are specific to that combination of cpu and OS; compiler writers have to deal with the uncertainty that the fault might actually be caused by the code they generated. I’m used to handling this extra level of uncertainty; some developers cannot handle it and end up in mindless looping activities until they are given something different to do. It was not until the target hardware was delivered that we found out it contained alpha silicon (i.e., a not yet in beta version of the cpu), and oh, here is an awk script to run your generated code through to map the instructions that don’t yet work to something that does; every couple of weeks we would get an updated cpu motherboard, OS update and of course an awk script for that version of the silicon. I am not looking to repeat the ‘excitement’ of using an alpha silicon cpu to test a freshly written code generator, awk script or not. Sean Corfield deserves a lot of the credit for getting this project to work.

C++ was almost a very different beast than it is today. SC22 (the top level committee responsible for ISO programming language standards) did not think C++ was sufficiently different from C to warrant a separate committee. WG14 discussed this issue and gave a formal response to SC22 saying “we are not the committee to standardize C++”. WG21 was created, but without the power to publish any standards, with the task of figuring out what to do. Things came to a head, in a very heated discussion, at the WG21 meeting during early 1992 in London; the meeting minutes are suspiciously brief. The two positions were, 1) C++ is still incomplete and needs time to evolve to become itself before being standardized (I recall the AT&T representative saying that “C++ needed to fulfill its destiny” and banging the table, which got a round of applause), 2) we need a C++ ISO standard asap because C++ is being used now for production work and we have to stop Bjarne making major changes to the language (up until then existing practice was for the group creating an ISO language standard to specify the words for whatever existed at the time, i.e., no invention of new features); the Microsoft representative was the vocal lead on this position.

As an outsider (I had not attended the two previous WG21 meetings) it appeared to me that there were many floating voters in the room and that it could have gone either way, but the standardize now camp eventually prevailed.

Stroustrup’s solution to future C++ evolution possibly being stymied by WG21 was to become an active member of WG21 and to recruit like-minded individuals to continue the work of evolving C++ within WG21, i.e., an ISO language committee broke with tradition and started doing major language invention.

Why did people care that Stroustrup kept changing the language? Why didn’t they ignore him and carry on with whatever C++ compiler they were using? Up until almost the date of the London meeting all but one of the C++ compilers were based on Cfront, and who maintained and controlled Cfront? Yes, none other than Bjarne Stroustrup himself.

Things to read

The Design and Evolution of C++, by Bjarne Stroustrup. Get the 1994 edition, before history started to get updated.

BASIC, Babbage, BCPL, B, BLISS and BLooP

B is for BASIC, Babbage, BCPL, B, BLISS and BLooP

BASIC was the JavaScript of its day, in the sense that it was the language supported by the greatest number of computing platforms in the field; this is likely a very different view of BASIC than the one readers brought up on the various Microsoft products have. Back in the day Basic (lower case to separate it from its original beginner’s roots) was a language that met a few simple criteria: 1) it could be implemented in a few K of memory and support useful programs that ran in a few more K, 2) it used line numbers for control flow and source editing, 3) it had a print statement and 4) if it supported arrays they were defined using the letter sequence DIM.

Babbage crossed my path during my first job after graduating. It was billed as a high level assembler, but I suspect it was created as a side project by somebody wanting to learn a bit about compilers who found existing languages too hard. Such languages and their implementations continue to proliferate today, but back in the day developers often had to use them for production work because no alternatives were available.

BCPL was a return to simplicity compared to the increasingly ornate languages that designers were coming up with later in the 1960s. These days it is probably best known, if known at all, as the language that most strongly influenced the design of C. I have found that mentioning its name often brings back fond memories in those who used it in an earlier life.

B was the language designed by the group of guys that came up with C some months later. Its main claim to fame is as a source of debate about whether the successor to C should be called P (the third letter in BCPL) or D (the fourth letter of the alphabet).

BLISS is another systems language from the late 60s. Its claim to fame is as the target language of the PQCC project (Production Quality Compiler-Compiler). This project invented, or at least brought all the pieces together in one place, the structure of how compilers are written today, i.e., convert the source to a tree and have lots of passes walk over this tree slowly transforming it to optimized code. PQCC happened in the early 70s and when I encountered it on a compiler project at Intermetrics at the start of the 80s the design approach seemed impractical to me, and others new to the ideas; the resulting compiler would easily exceed the available computer memory capacity of the day (the secret sauce that rarely got talked about was software memory management). By the mid-90s memory was so plentiful that all the compiler research from the last two decades could finally fly.
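For readers who have not seen the tree-plus-passes structure, here is a minimal sketch of the idea. It is Python rather than anything PQCC used, and the node representation and the two passes (`fold_constants`, `drop_identities`) are invented for illustration; real compilers run many more passes over far richer trees.

```python
# Tree nodes: an int constant, a str variable name, or (op, left, right)
# where op is "+" or "*".

def rewrite(tree, rule):
    """Apply rule bottom-up to every node, returning a new tree."""
    if isinstance(tree, tuple):
        op, left, right = tree
        tree = (op, rewrite(left, rule), rewrite(right, rule))
    return rule(tree)


def fold_constants(node):
    """Replace an operator applied to two constants by its value."""
    if isinstance(node, tuple):
        op, left, right = node
        if isinstance(left, int) and isinstance(right, int):
            return left + right if op == "+" else left * right
    return node


def drop_identities(node):
    """Remove multiplications by 1 and additions of 0."""
    if isinstance(node, tuple):
        op, left, right = node
        if op == "*" and right == 1:
            return left
        if op == "*" and left == 1:
            return right
        if op == "+" and right == 0:
            return left
        if op == "+" and left == 0:
            return right
    return node


PASSES = [fold_constants, drop_identities]


def optimize(tree):
    """Walk the whole tree once per pass, in order."""
    for rule in PASSES:
        tree = rewrite(tree, rule)
    return tree


# x * (0 + 1) + 2 * 3
print(optimize(("+", ("*", "x", ("+", 0, 1)), ("*", 2, 3))))
```

Every pass holds the whole tree in memory, which is why this approach looked impractical on the machines of the early 80s.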

BLooP is a language that is not Turing complete. It was created by Douglas Hofstadter for his book Gödel, Escher, Bach and fails to be Turing complete in a rather interesting way, by being limited to computing functions that are primitive recursive.
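The restriction is easy to mimic: every loop must have its iteration count fixed before the loop starts. The sketch below is Python, not BLooP's own syntax, and the function names are mine; it simply avoids any loop whose bound is not known on entry, which is the discipline that keeps such programs within the primitive recursive functions.

```python
def multiply(a, b):
    """Multiplication by repeated addition; the loop runs exactly b times."""
    total = 0
    for _ in range(b):  # bound fixed before the loop starts
        total += a
    return total


def factorial(n):
    """Factorial using only bounded loops."""
    result = 1
    for k in range(1, n + 1):  # bound fixed before the loop starts
        result = multiply(result, k)
    return result


print(factorial(5))
```

A search loop that keeps going until some condition becomes true, with no upper limit known in advance, is exactly what this style rules out.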

Things to read

The Design of an Optimizing Compiler, by William Wulf, Richard K. Johnson, Charles B. Weinstock, Steven O. Hobbs, and Charles M. Geschke (longish technical report).

I suspect “Gödel, Escher, Bach” got such rave reviews in its day because nobody wanted to admit to not understanding it; I found it overly long and too full of itself. Chunks of it might appeal to a young audience.

ALGOL 60, AWK, ALGOL 68, Ada, APL and Assembler

Having watched other blogs run themed Advent calendars, this year I have plucked up the courage to attempt my own based on programming languages in alphabetical order. The choice of languages covered will be governed by a mixture of my having used them, me thinking they are interesting in some way and for some letters not having many languages to write about.

Off we go…

A is for Algol 60, awk, Algol 68, Ada, APL and Assembler.

Over 50 years later it’s amazing how modern the Algol 60 report feels (some might complain about the lack of object oriented features, but we have to wait until 1967 for these to appear). Unlike Cobol and Fortran, from the same period, Algol is not generally known today, but it has probably been much more influential on what came after it. Randell and Russell’s “ALGOL 60 Implementation” from 1963 was the go-to book for compiler writers until the Dragon book appeared in 1977.

AWK is my go-to language for simple text processing. Its one-line-at-a-time approach to handling input maps so well on to many real world problems that using a bigger language just feels like overkill.

Algol 68 was just as ground breaking as its earlier namesake. The Chomsky revolution in linguistics was in full swing, everything could be specified using syntax (that messy semantics stuff was tamed!), and programming languages had to get with it. Algol 68 was specified using a two-level grammar, one level of grammar specified syntax rules that were used to specify the second level syntax rules for actual source code (i.e., rules specifying rules). This two-level approach enabled many semantic requirements, on code, to be specified by syntactic rules, which was considered to be a good thing. The problem was language exponents loved to explain the wonderful syntactic goings on to all and sundry, and the language never spread beyond those who fell in love with two-level grammars (yes, your author was young once). One of those involved in early work on Algol 68 thought it such a monstrosity that he left and came up with a much simpler language, Pascal. A blast from Christmases past for those who miss poring over line printer output.

Ada gets a mention because rather than get involved with writing an Ada compiler for somebody else I started my own company working first in Pascal and then C. I’m not sure if anybody yet understands the reasons behind Ada’s failure to become the widely used language that it was intended to be (enough money was thrown at it). Those involved pivoted and these days Ada is touted as somehow being ideal for use in safety-critical applications.

APL. The language that insists you write code that is the antithesis of readability. Despite the need for a special keyboard to write programs this language used to have a surprisingly large following (it was big in financial institutions where the ability to succinctly evaluate complex formulae was demanded). The fact that it proved possible to compile APL convinced me that it was possible to write a compiler for any language.

Assembler. How could I not mention assembler?

Things to read

Informal introduction to Algol 68 by Lindsey and van der Meulen, for those who find the language definition, A Revised Report on the Algorithmic Language ALGOL 68, a little brain scrambling.

Grammars for Programming Languages by J. Craig Cleaveland and Robert C. Uzgalis is a very readable introduction to two-level grammars.

Rationale for the Design of the Ada Programming Language by J. Ichbiah and J. Barnes is full of interesting insights.

Effective awk Programming: Universal Text Processing and Pattern Matching by Arnold Robbins, the 4th edition is out in two months 🙂

I have never read any really good APL books. Suggestions welcome.

Empirical analysis of UK legislation started this weekend

Yesterday I was one of a dozen or so people at a hackathon hosted by the Ministry of Justice. I’m sure that the organizers would have liked a lot more people attending, but the McDonald’s hackathon across town was a bigger draw for most of the hackers I know.

The dataset was all UK legislation back to 1988, and a less complete set going back to 1267, in html and various flavors of xml; in all 20G of compressed files, with the compressed html files occupying 7G on my machine. As of this weekend the files are available online.

There were about half a dozen domain experts, in various aspects of the creation of UK legislation, present and they suggested lots of interesting questions that we (i.e., the attendees who could code) might like to try to answer.

I was surprised at the simplicity of some questions, e.g., volume (e.g., word count) of legislation over time and branch of government. The reason these questions had not been answered before is that the data had not been available; empirical analysis of UK legislation started this weekend.

The most interesting question I heard involved the relative volume of primary and secondary legislation. Primary legislation is written by Parliament and in some cases creates a framework that is used by secondary legislation which contains the actual details. A lot of this secondary legislation is written by the Executive branch of government (by civil servants in the appropriate branch of government) and may not involve any parliamentary oversight. Comparing the relative volume of primary/secondary legislation over time would show if the Executive branch was gaining more powers to write laws, at the expense of Parliament.

With all the interesting discussions going on, setting ourselves up and copying the data (from memory sticks, not the internet), coding did not really start until 11, and we had to have our projects ‘handed-in’ by 16:00, not enough time to be sure of getting even an approximate answer to the primary/secondary legislation question. So I plumped for solving a simpler problem that I was confident of completing.

Certain phrases are known to be frequently used in legislation, e.g., “for the purposes of”. What phrases are actually very common in practice? The domain experts were interested in phrases by branch of government and other subsets; I decided to keep it simple by processing every file and giving them the data.

Counting sequences of n words is a very well studied problem and it was straightforward to locate a program to do it for me. I used the Lynx web browser to strip out the html (the importance of making raw text available for this kind of analysis work was recognized and this will be available at some future date). I decided that counting all four word sequences ought to be doable on my laptop and did manage to get it all to work in the available time. Code and list of 4-grams over the whole corpus available on GitHub.
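For anybody wanting to try something similar, counting word sequences takes only a few lines. The following is a minimal sketch in Python, not the program I actually used; the function name `count_ngrams` and the rule for what counts as a word are my assumptions, and it expects text that has already had the html stripped out.

```python
import re
from collections import Counter


def count_ngrams(text, n=4):
    """Count every run of n consecutive words in text (case-insensitive)."""
    # Assumed tokenisation: runs of letters and apostrophes are words.
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(tuple(words[i:i + n]) for i in range(len(words) - n + 1))


sample = "for the purposes of this Act and for the purposes of that Act"
counts = count_ngrams(sample)
for ngram, freq in counts.most_common(3):
    print(freq, " ".join(ngram))
```

Holding every distinct 4-gram in memory is the limiting factor on a large corpus; processing files in batches and merging the counters is one way around that.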

As always, as soon as they saw the results, the domain experts immediately started asking new questions.

Regular readers of this blog will know of my long standing involvement in the structure and interpretation of programming language standards. It was interesting to hear those involved in the writing/interpretation of legislation having exactly the same issues, and coming up with very similar solutions, as those of us in the language standards world. I was surprised to hear that UK legislation has switched from using “shall” to using “must” to express requirements (one of the hacks plotted the use of shall/must over time and there has been an abrupt change of usage). One of the reasons given was that “must” is more modern; no idea how word modernness was measured. In the ISO standards’ world “shall” is mandated over “must”. Everybody was very busy in the short amount of time available, so I had to leave an insider’s chat about shall/must to another time.

The availability of such a large number of structured English documents having great import should result in some interesting findings and tools being produced.

Cobol 2014, perhaps the definitive final version of the language…

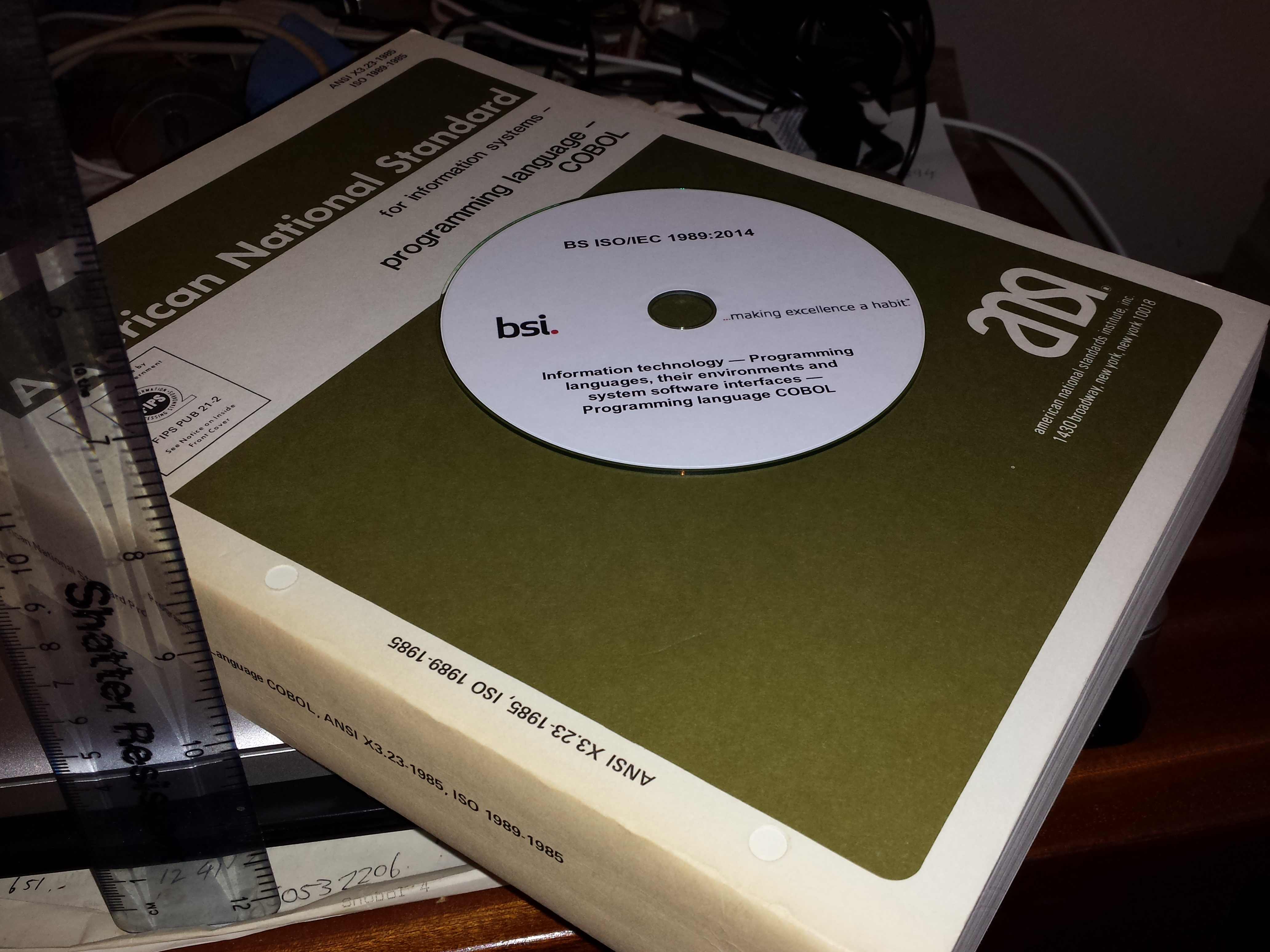

Look what arrived in the post this morning, a complimentary copy of the new Cobol Standard (the CD on top of a paper copy of the 1985 standard).

In the good old days, before the Internet, members of IST/5 received a complimentary copy of every new language standard in comforting dead tree form (a standard does not feel like a standard until it is weighed in the hand; pdfs are so lightweight); these days we get complimentary access to pdfs. I suspect that this is not a change of policy at British Standards, but more likely an excessive print run that they need to dispose of to free up some shelf space. But it was nice of them to think of us workers rather than binning the CDs (my only contribution to Cobol 2014 was to agree with whatever the convener of the committee proposed with regard to Cobol).

So what does the new 955-page standard have to say for itself?

“COBOL began as a business programming language, but its present use has spread well beyond that to a general purpose programming language. Significant enhancements in this International Standard include:

— Dynamic-capacity tables

— Dynamic-length elementary items

— Enhanced locale support in functions

— Function pointers

— Increased size limit on alphanumeric, boolean, and national literals

— Parametric polymorphism (also known as method overloading)

— Structured constants

— Support for industry-standard arithmetic rules

— Support for industry-standard date and time formats

— Support for industry-standard floating-point formats

— Support for multiple rounding options”

I guess those working with Cobol will find these useful, but I don’t see them being enough to attract new users from other languages.

I have heard tentative suggestions of the next revision appearing in the 2020s, but with membership of the Cobol committee dying out (literally in some cases and through retirement in others) perhaps this 2014 publication is the definitive final version of Cobol.

The POPL 2015 papers involving C

SIGPLAN (the ACM Special Interest Group on Programming LANguages) has just made available many of the papers that have been accepted for their 2015 POPL conference (Principles of Programming Languages). Good for them. I wish more conferences would do this.

There are three papers involving C, so obviously I have read those first. Two papers are heavy on the mathematics and one not quite so heavy:

- Sound Modular Verification of C Code Executing in an Unverified Context: Describes a tool that takes C source annotated with separation logic and translates it to C source containing runtime checks; it is intended that these runtime checks verify the conditions expressed in the separation logic. Why does the developer add the interface checks in separation logic and then translate, rather than adding them in C in the first place? This question was not addressed.

- Common compiler optimizations are invalid in the C11 memory model and what we can do about it: This sounds like bad news, but the introduction mentions specialist optimizations that are common in that specialist area. There follow 11 pages of mathematics + another five pages in an appendix. Page 12 tells us what it is all about. Some requirements in C11 would muck up the nice mathematics should CompCert, which currently supports C90, be upgraded to C11. In other words, a compiler implementor is complaining that wording in the standard is making their life difficult (hey, join the queue) and has published a paper about it.

- Formal verification of a C static analyzer: An interesting piece of work spoiled by claims that a soap powder manufacturer would not be able to get away with. Verasco, the static analysis tool described, does its checking on an intermediate language that is two-steps removed from the original C source. Using the authors’ logic, I could bolt on one of the existing Fortran-to-C translators and claim to have a formally-verified Fortran static analyzer, with C being just an intermediate language in the chain. The problem with analyzing an intermediate language is that the transformations that have occurred along the way have changed the semantics of the original code, so the results of any analysis could be different than if applied to the original source. An example from the paper, the code:

z = f(x) + 2 * g(y)

is transformed to:

t1 = f(x); t2 = g(y); z = t1 + 2 * t2;

The implementation thus selects one of the two possible evaluation orders for the functions f and g. It is possible that calling f before g will result in behavior that is different from calling g before f (no undefined behavior occurs because there is a sequence point before a function returns, using pre-C11 terminology). So Verasco is only checking one of the two possible execution paths in this code. Not a particularly sound proof.
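To make the point concrete, here is a small sketch showing how the order of the two calls can change the value assigned to z. It is written in Python so that both orders can be spelled out explicitly (a C compiler would simply pick one), and the bodies of f and g, which share a counter, are hypothetical; nothing like them appears in the paper.

```python
def run(order):
    """Evaluate z = f(x) + 2 * g(y) with the two calls in the given order."""
    state = {"counter": 0}  # shared state that both functions modify

    def f(x):
        state["counter"] += 1
        return x + state["counter"]

    def g(y):
        state["counter"] *= 2
        return y + state["counter"]

    x, y = 10, 20
    if order == "f_first":
        t1 = f(x)
        t2 = g(y)
    else:
        t2 = g(y)
        t1 = f(x)
    return t1 + 2 * t2


print(run("f_first"), run("g_first"))
```

An analysis that only ever sees the f-then-g sequence has nothing to say about the value produced by the other order.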

C-semantics is the C formal methods tool that stands head and shoulders above anything else that currently exists (a fun Fibonacci example). It is actually based on the C source and does significantly more checking than Verasco, but is not mentioned in the “Related work” section of the paper.

Some of the other POPL papers look a lot more interesting and potentially useful.

Workshop on App Store Analysis

I was at the 36th CREST Open Workshop, on App Store Analysis, at the start of this week. The attendee list reads like a who’s who of academics researching App stores. What really stood out for me was the disconnect between my view of the software engineering aspects of developing mobile Apps and the view of many, but not all, academics in the room.

Divergent points of view on App development being different because… included:

Academics: they are written by a small number (often one) of developers.

Me: This was true in the early days of microprocessors and the web. When something new comes out only a small number of people are involved in it and few companies are willing to invest in setting up large development teams. If the new thing succeeds (i.e., there is money to be made) the money to create large teams will follow.

Academics: third party libraries make a significant contribution to functionality.

Me: This is true of a lot of web software and it is becoming more common for Apps on all platforms. It was not true in the past because the libraries were not available; Open Source changed all that.

Academics: they are not structured/written according to software engineering principles (someone in the room thought that waterfall was still widely used).

Me: This is true of most software produced by individuals who are writing something out of interest in their spare time or because they are not gainfully employed in ‘real’ work. When microcomputers were new the internal quality of most software on the market was truly appalling; it was primarily written by people who knew a market niche very well and taught themselves programming, the software sold because it addressed the needs of its customers and code quality was irrelevant (of course the successful products eventually needed to be maintained, which is when code quality became important, but they now had money to employ developers who knew about that kind of stuff).

Academics: the rapid rate of change (in tools and libraries etc) being experienced will continue into the foreseeable future.

Me: I was staggered that anyone could think this.

Academics: lots of money to be made for minimal investment.

Me: Those days are past.

Me: power drain (may) be a significant design issue.

Academics: Blank look.

Other things to report:

Various concerns were raised by people who had encountered the viewpoint that mobile Apps were not considered worthy of serious academic study within software engineering; this point of view seemed to be changing. I don’t recall there ever having been academic research groups targeting microcomputer software, but this certainly happened for web development.

I was a bit surprised at the rather rudimentary statistical techniques that were being used. But somebody is working on a book to change this.

Running Average Power Limit: a new target for viruses

I have been learning about the Running Average Power Limit, RAPL, feature that Intel introduced with their Sandy Bridge architecture. RAPL is part of a broader framework providing access to all kinds of interesting internal processor state (e.g., detailed instruction counts, cache accesses, branch information etc; use PAPI to get at the numbers on your system, existing perf users need at least version 3.14 of the Linux kernel).

My interest in RAPL is in using it to monitor the power consumed by short code sequences that are alternative implementations of some functionality. There are some issues that need to be sorted out before this is possible at the level of granularity I want to look at.

I suspect that RAPL might soon move from a very obscure feature to something that is very widely known and talked about; it provides a means for setting an upper limit on the average power consumed by a processor, under software control.

Some environmental activists are very militant and RAPL sounds like it can help save the planet by limiting the average power consumed by computers. Operating systems do provide various power saving options, but I wonder how widely they are used aggressively; one set of building based measurements shows a fairly constant rate of power consumption, with a smaller set showing a bit of daily variation.

How long will it be before a virus targeting RAPL appears?

Limiting the average power consumed by a processor is likely to result in programs running more slowly. Will the average user notice? Slower browser response could be caused by all sorts of things. Users are much more likely to notice a performance problem when watching a high definition video.

For service providers RAPL is another target to add to the list of possible denial-of-service attacks.

A book about some important bits of R

I see that Hadley Wickham’s new book, “Advanced R”, is being published in dead tree form and will be available in a month or so. Hadley has generously made the material available online; I quickly skimmed the material a few months ago when I first heard about it and had another skim this afternoon.

The main problem with the book is its title; authors are not supposed to write advanced books and then call them advanced. When I studied physics the books all had “advanced” in their titles, but when I got to University the books switched to having some variant of “fundamental” in their title. A similar pattern applies to computer books, with the books aimed at people who know a bit and want to learn a bit more having an advanced-like word in their title and the true advanced stuff having more downbeat titles, e.g., “JavaScript: The Good Parts”, “Algorithms in Snobol 4”, “Algorithms + Data Structures = Programs”.

Some alternative title suggestions: “R: Some important bits”, “The Anatomy of R” or “The nitty gritty of R”.

The book is full of useful technical details that are scattered about and time consuming to find elsewhere; a useful reference manual, covering how to do technical stuff in R, to have on the shelf.

My main quibble with the book is the amount of airplay that the term “functional programming” gets. Does anybody really care that R has a strong functional flavor? Would many R users recognize another functional language if it jumped up and bit them? The die hard functional folk would probably say that R is not really a functional language, but who cares. I think people who write about R should stop using the words “functional programming”, it just confuses R users and serves no useful purpose; just talk about the convenient things that R allows us to write.

A book that I would really like to read is the R equivalent of books such as “Algorithms in Snobol 4”, “Effective C++” and “SQL for Smarties” (ok, that one has advanced in the subtitle), that take a wide selection of relatively simple problems and solve them in ways that highlight different aspects of the language (perhaps providing multiple solutions to the same problem).
