Actively maintained production compilers for middle-age languages

The owners of the Borland C++ compiler have stopped maintaining it. So we are now down to, by my counting, four different production quality C++ compilers still being actively maintained (Visual C++ {the command line c1.exe, not the interactive IDE compiler}, GCC, LLVM and EDG); lots of companies repackage EDG and don’t talk about it.

How many production compilers for other middle-age languages are still being actively maintained?

Ada I think is now down to one (GNAT; I’m not sure of the status of what was the Intermetrics compiler).

Cobol has two+ (I’m not sure how many internal compilers IBM has, some of which are really Microfocus) that I know of (Microfocus and Fujitsu {was ACUCobol}).

Fortran probably needs more than one hand to count its compilers. Nothing like having large engineering applications using the language features supported by your compiler to keep the maintenance fees rolling in.

C still has lots of compilers (a C validation suite vendor told me many years ago that they had over 150 customers). Embedded processors can be a very tough target for the general purpose algorithms used in GCC and LLVM, so vendors with hand crafted compilers can still eke out a living.

Perl has one (which I find surprising).

R has one, but like Cobol it is not a fashionable language in compiler writing circles. Over the last couple of years there have been a few ‘play’ implementations and rumors of people creating a new production quality implementation.

Lisp has one or millions, depending on how you view dialects or there could be a million people with a different view on the identity of the 1.

Snobol-4 still has one (yes, I am a fan of this language).

There are lots of languages which have not yet reached middle-age, so it’s too soon to start counting how many actively supported compilers they still have in production use.

Eye-tracking of developers reading code is now in start-up mode

Readability has always been the meaningless go-to attribute for designers of new languages and code restructuring techniques that needed a worthy sounding benefit to tout.

Market researchers, being more interested in empirical data than arm-waving, have been long time users of eye-tracking technology; gaze direction providing a direct link to where cognitive attention is being invested.

Over the last few years, a small number of researchers have started measuring where software developers look when they read code. Analysing and interpreting data on eye movement while reading code is still in start-up mode. One group has started collecting data that others can use, the obligatory R packages (saccade, gazepath and itsadug) and Python library now exist, and the eye-movement in programming conference has its third meeting in November.

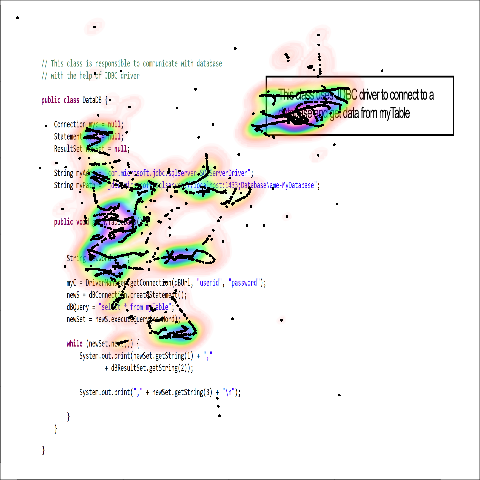

Apart from one tantalizing image (see below, code+data here, original research paper+data) my book should arrive too soon to say anything useful about code readability based on eye-tracking data.

It has taken several decades for researchers to create reasonably reliable models of attention and eye-movement for reading text. Code reading adds vertical eye-movement to the horizontal movements that occur when reading text; the models are probably going to be a lot more complicated. I discussed a few of the issues in my first book (the E-Z Reader model is still one of the top performers).

Accurately tracking eye motion during software development is technically difficult. Until recently obtaining the necessary accuracy required keeping the subject’s head fixed (achieved by having subjects clamp their teeth on a bite bar); somewhat impractical for developers wanting to view a large screen. Accurately tracking what developers are looking at requires tracking both head and eye motion. The necessary hardware is coming down in price, but still contains one too many zeroes for me to buy one to play with (I was given an Intel RealSense at a hackathon, now I just need the software…).

Next time somebody claims that so-and-so is more readable, ask them what eye-tracking research has to say on the subject.

2015 in the programming language standard’s world

Last Tuesday I was at the British Standards Institute for a meeting of IST/5, the programming languages committee.

My suggestion that the Cobol 2014 standard may be the last revision of that language appears to be coming true; there has been a steep decline in membership of the US Cobol committee (this is where all the work is done, with the rest of the world joining this committee or rubber stamping what comes out of it), and nobody has expressed interest in being involved in new work items.

Fortran appears to be going strong, with a revised standard planned for 2017.

In October C++ are rectifying the fact that they have not met in Hawaii for three years. In fairness I ought to point out that the Fortran committee, when hosted by INCITS/PL22.3, regularly hold meetings in Las Vegas (I’m told it’s because the hotel rooms are cheap; Nevada is where US underground atom bomb tests were located and lots of super-computers executing programs written in Fortran were involved, or perhaps readers can think of an alternative explanation that does not invoke secret government organizations).

I found out that PL/1 is still an ISO Standard.

Work on the C and Ada standards continues.

Prolog has a new convenor, Ulrich Neumerkel. There was a meeting during April in Dresden, Germany but no minutes have been published. Did anybody attend?

ISO/IEC 23360-1:2006, the ISO version of the Linux Standard Base, is almost 10 years behind the specification published by the organization that actually does the work. Some voices have expressed an interest in updating the ISO document. What does ISO’s version of the Linux Standard Base have to do with the committee responsible for programming languages?

Well, a long time ago in a galaxy far away, or the late 1980s in London, some people decided to set up a committee that specified O/S related library functions callable from C programs. SC22, programming languages, was the existing ISO committee having the closest fit with this new working group; initially it produced a specification that went under the name POSIX. Jump forward 15 years and Linux was the big POSIX success story (ok, the Linux people might see things differently) and dare I suggest that one of the motivations for creating ISO/IEC 23360-1:2006 might have been to bask in the reflected success of Linux. I understand the motivation of people involved in the standard’s process for wanting to publish an update that reflects the current state of play (seriously out of date standards degrade the brand), but I don’t see why the Linux Foundation would be interested in going through the hassle of making this happen (unless they are having a mid-life crisis and are seeking approval of their work from an authority figure). Watch this blog for a 2016 status update.

Adding house numbers to Open Street Map

Team OSM-house-numbers (Pavel and yours truly) was at the Open Street Map London hack weekend a few days ago.

When Phyllis Pearsall was out walking the streets of London in the 1930s gathering information for her Geographer’s A-Z London street map she recorded house numbers and included this information on her maps. House number information is included in OSM data when people have added it. Is there a way of automatically adding this information in bulk?

The UK Land Registry maintains a database of house sales; the information includes postcode, street and house number. The database is available under the Open Government License, which is compatible with the OSM license.

It is straightforward to match all house sales having the same postcode/street to obtain a min/max house number for a postcode/street.

The first half of a UK postcode specifies a large area or district (e.g., GU14 is my district code), while the second half has a granularity of around a quarter of a mile or less (depending on housing density).

It was decided that house numbers on a map become useful when streets are long enough, where long enough is defined as containing houses having different postcodes. Assuming that street names are unique within a given postcode district, filtering out not-long enough streets was trivial.
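The two steps just described (a min/max house number per postcode/street, then keeping only streets that span more than one postcode in a district) might be sketched as follows. This is a minimal sketch, not the code from the hack weekend: the `struct sale` and `struct range` layouts and the function names are assumptions made for illustration, and a postcode is assumed to have a single space between its two halves.

```c
#include <stddef.h>
#include <string.h>

/* One house sale, reduced to the fields used here (hypothetical layout). */
struct sale {
    const char *postcode;   /* e.g. "GU14 7AB" */
    const char *street;
    int house_number;
};

/* Min/max house number seen for one postcode/street pair. */
struct range {
    const char *postcode;
    const char *street;
    int min_number;
    int max_number;
};

/* Group sales by exact postcode and street; out must have room for n
   entries. Returns the number of distinct postcode/street pairs found. */
size_t house_number_ranges(const struct sale *sales, size_t n, struct range *out)
{
    size_t used = 0;
    for (size_t i = 0; i < n; i++) {
        size_t j = 0;
        while (j < used && (strcmp(out[j].postcode, sales[i].postcode) != 0 ||
                            strcmp(out[j].street, sales[i].street) != 0))
            j++;
        if (j == used) {                /* first sale for this pair */
            out[used].postcode = sales[i].postcode;
            out[used].street = sales[i].street;
            out[used].min_number = sales[i].house_number;
            out[used].max_number = sales[i].house_number;
            used++;
        } else {
            if (sales[i].house_number < out[j].min_number)
                out[j].min_number = sales[i].house_number;
            if (sales[i].house_number > out[j].max_number)
                out[j].max_number = sales[i].house_number;
        }
    }
    return used;
}

/* Two postcodes share a district when the text before the space matches. */
static int same_district(const char *a, const char *b)
{
    size_t na = strcspn(a, " "), nb = strcspn(b, " ");
    return na == nb && strncmp(a, b, na) == 0;
}

/* A street is "long enough" when the same street name appears under a
   different postcode within the same district. */
int is_long_enough(const struct range *ranges, size_t count, size_t i)
{
    for (size_t j = 0; j < count; j++)
        if (j != i &&
            strcmp(ranges[j].street, ranges[i].street) == 0 &&
            same_district(ranges[j].postcode, ranges[i].postcode) &&
            strcmp(ranges[j].postcode, ranges[i].postcode) != 0)
            return 1;
    return 0;
}
```

The linear search keeps the sketch short; for the full Land Registry dataset a sort or hash table keyed on postcode/street would be the obvious replacement.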

The Land Registry started recording sales in 1995 and it is possible that some streets are not considered to be long enough because they contain houses that have not been sold within the last 20 years; this problem will also affect the min/max value of some house number ranges.

To tie this postcode/street information to Open Street Map data we need latitude/longitude information.

Information on house number locations is very useful to governments and in the UK is collected by our national mapping body, the Ordnance Survey, who like all UK government bodies have a long history of being loath to make information available for general public use. The current situation, according to Wikipedia, is that the Ordnance Survey mapping from postcode to latitude/longitude is available as open data.

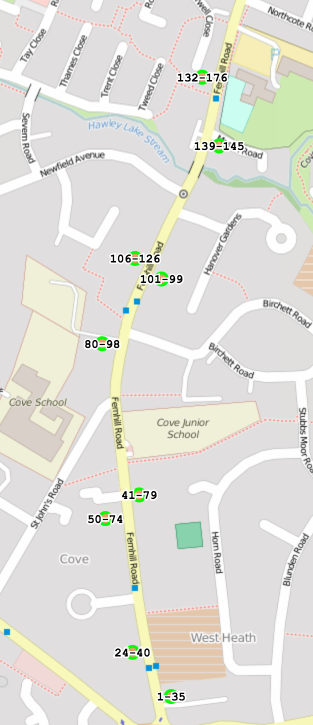

Adding information for postcode lat/long and feeding everything into a webpage produces a map such as the one below (opposite sides of the street having different postcodes is plainly visible):

We cannot guarantee that the house number data we have created is 100% accurate; there may be mistakes in our code or the Land Registry/Ordnance Survey data we processed. Experienced OSM hackers at the event told us about minor mistakes in automatically generated data that had occurred in the past and had a disproportionate impact on user confidence in OSM accuracy. So we did not upload our data to OSM; you can find it on github (saved in compressed form to reduce download time), along with the code used to create it.

The hackathon finished at five, with people decamping to a local pub. We were more or less done by three. What next (perhaps for another hackathon or a dedicated OSM hacker)?

What is needed is a simple way to overlay house number range information on an OSM image which can be easily used by people with local knowledge to confirm whether it is correct or not, with the data being added to OSM if it is correct.

Other possible OSM uses for the Land Registry data include estimating density of houses along a street, e.g., number-of-unique-house-numbers divided by distance-between-adjacent-postcodes, and perhaps even a house price heatmap (ok, that’s a bit specialized).

What about trees? This is my tree hugging side showing itself. If you plan to go out mapping house numbers, don’t forget to map the trees!

How will C code in 2045 look different from today?

What constructs will be in such common use in C source code written by developers in 2045 that people looking at C written in 2015 will know it comes from a much earlier era (a previous post looked back at C written in 1986)?

C is a high level language that allows developers to get close to the hardware, so to get some idea of what everyday C might be like in 2045 we have to ask what everyday hardware will be like 10-20 years from now (the C standard committee waits for hardware features to become established before adding features to support them).

I think the following hardware trends will have a big impact on the future appearance of C source code:

Power consumption: Runtime performance is an integral part of the design of C. In the past performance has been about program execution time and/or memory usage; the spread of mobile computing has created a third strand: electrical power consumption. A variety of techniques have been proposed for reducing program power consumption, including: type specifiers that enable developers to tell the compiler accuracy can be traded off against power in calculations involving a given variable, and scaling cpu voltage/frequency in non-time critical code (researchers are currently trying to do this without developer involvement, but a storage/type specifier like register or inline would provide useful information to the compiler).

Unreliable hardware: Running hardware at lower voltages (to reduce power consumption) increases the probability of noise having an effect on program output, as does use of smaller line widths in cpu fabrication (more chips per wafer increases manufacturer profits). Proposed solutions include adding type specifiers to variables that can tolerate holding approximate values or making probabilistic assertions.

Non-volatile memory: Like most languages C has an implicit model of programs sitting on a slow storage device, e.g., hard disk, and being loaded into very fast storage for execution. Non-volatile storage could have a very dramatic impact on this view of the world. For years gaming consoles have stored code+data as a memory image in ROM for rapid loading, but being able to write to storage that is only an order of magnitude slower than main memory opens up all sorts of interesting opportunities. The concept of named address spaces defined in Programming languages – C – Extensions to support embedded processors is waiting to expand out of its current niche of C on embedded processors.

There is at least one language construct that is likely to be rarely seen by developers working in 2045: inline. The reason that today’s developers have been given the ability to define functions inline is that compilers are not yet good enough to reliably make good function inlining decisions, rather like they were not good enough to reliably make good register allocation decisions 30 years ago (ok, register can still be useful for developers using weird and wonderful processor architectures or brain dead compilers).

I have not yet said anything about parallel processing or multiprocessor hardware. The C11 Standard updated C99 to provide generic support (i.e., _Atomic plus associated sequence point wording updates and the threads library) for this kind of hardware. Support for a specific parallel/multiprocessor model will happen if a specific model becomes the industry standard (rather like IEEE floating-point not being anointed by C90 because it was not yet what every hardware vendor used; other formats were on their last legs and by C99 could be treated as dead).
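For readers who have not met the C11 additions, here is a minimal sketch of the generic support mentioned above, using only `<stdatomic.h>`; the counter and the function names are hypothetical, and the threads library is left out to keep the example small.

```c
#include <stdatomic.h>

/* atomic_int is the C11 typedef for _Atomic int; an object with static
   storage duration starts out as zero. */
static atomic_int hits;

/* Atomically add one to the counter and return the new value; this is safe
   to call from several threads without a lock. */
int record_hit(void)
{
    return atomic_fetch_add(&hits, 1) + 1;
}

/* Atomically read the current value of the counter. */
int hit_count(void)
{
    return atomic_load(&hits);
}
```

Nothing here commits to a particular multiprocessor model, which is the point being made: the standard says what an atomic access means and leaves the hardware mapping to the compiler.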

A first stab at low resolution image enhancement

Team Clarify-the-Heat (Gary, Pavel and yours truly) were at a hackathon sponsored by Flir at the weekend.

The FlirONE, which attaches to the USB port of an Android phone or iPhone, contains an infrared sensor with 160 by 120 pixels, plus an optical sensor that provides a higher resolution image that can be overlaid with the thermal image. The sensor wavelength range is 8 to 14 µm, i.e., ‘real’ infrared, not the side-band sliver obtained by removing the filter from visual light sensors.

At 160 by 120 the IR sensor resolution is relatively low, compared to today’s optical sensors, and team Clarify-the-Heat decided to create an iPhone App that merged multiple images to create a higher resolution image (subpixel interpolation) in real-time. An iPhone was used because the Flir software for this platform has been around longer (Android support is on its first release) and image processing requires lots of cpu power (i.e., compiled Objective-C is likely to be a lot faster than interpreted Java). The FlirONE frame rate is 8.6 images per second.

Modern smart phones contain 3-axis gyroscopes and data on change of rotational orientation was used to find the pixels from two images that corresponded to the same area of the viewed 2-D image. Phone gyroscope sensors drift over periods of a few seconds; some experimentation found that over a period of a few seconds the drift on Gary’s iPhone was safely under a tenth of a degree (one infrared pixel had a field of view of approximately 1/3 degrees horizontally and 1/4 degrees vertically). Some phone gyroscope sensors are known to be sensitive enough to pick up the vibrations caused by local conversations.

The plan was to get an App running in realtime on the iPhone. In practice debugging a couple of problems ate up the hours, and the system we demonstrated uploaded data from the iPhone to a server, from which a laptop read and processed two images to create a higher resolution result (the intensity from the overlapping pixels is averaged to double, in our case, the resolution). This approach is very primitive compared to the algorithms that do sub-pixel enhancement by detecting image features (late in the day we found out that OpenCV supports some of this kind of stuff), but it requires less image processing and should be practical in real-time on a phone.
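The averaging of overlapping pixels can be illustrated on a single image row. This is a sketch under a simplifying assumption, not the hackathon code: the second frame is taken to be displaced to the right by exactly half a pixel, so each half-pixel of the output is covered by one pixel from each frame (the function name is made up for this example).

```c
#include <stddef.h>

/* a and b each hold one row of w pixel intensities; frame b is assumed to
   be displaced to the right by exactly half a pixel relative to frame a.
   out receives 2*w values, one per half-pixel of frame a's row. */
void merge_half_pixel_rows(const float *a, const float *b, size_t w, float *out)
{
    for (size_t k = 0; k < 2 * w; k++) {
        float from_a = a[k / 2];        /* pixel of a covering half-pixel k */
        if (k == 0)
            out[k] = from_a;            /* b does not reach the left edge */
        else                            /* b[j] covers half-pixels 2j+1 and 2j+2 */
            out[k] = (from_a + b[(k - 1) / 2]) / 2.0f;
    }
}
```

With more than two frames, and displacements that are not exactly half a pixel, the average would need weighting by the amount of overlap.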

We discovered that the ‘raw’ data returned by the FlirONE API has been upsampled to produce a 320 by 240 image. This means that the gyroscope data, used to align image pixels, has to be twice as accurate. We did not try to use magnetic field data (while not accurate the values do not drift) to filter the gyroscope readings.
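The effect of the upsampling on the accuracy required can be seen by converting a rotation into a pixel displacement. The sketch below assumes the approximate per-pixel field of view quoted earlier and treats displacement as proportional to angle, which is only reasonable for small rotations; the macro and function names are hypothetical.

```c
/* Approximate field of view of one native (160 by 120) infrared pixel. */
#define NATIVE_DEG_PER_PIXEL_H (1.0 / 3.0)
#define NATIVE_DEG_PER_PIXEL_V (1.0 / 4.0)

/* Displacement, in pixels, produced by rotating the phone rotation_deg
   degrees about one axis. upsample_factor is 1 for the native sensor
   resolution and 2 for the 320 by 240 data returned by the API. */
double pixel_shift(double rotation_deg, double deg_per_pixel, int upsample_factor)
{
    return rotation_deg * upsample_factor / deg_per_pixel;
}
```

A drift of a tenth of a degree is about 0.3 of a native pixel horizontally, but about 0.6 of a pixel in the upsampled image.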

Two IR images from a FlirOne, of yours truly, and a ‘higher’ resolution image below:

The enhanced image shows more detail in places, but would obviously benefit from input from more images. The technique relies on partial overlap between pixels, which is a hit and miss affair (we were managing to extract around 5 images per second but did not get as far as merging more than two images at a time).

Team Clarify-the-Heat got an honorable mention, but then so did half the 13 teams present, and I won a FlirONE in one of the hourly draws 🙂 It looks like they will be priced around $250 when they go on sale in mid-August. I wonder how long it will take before they are integrated into phones (yet more megapixels in the visual spectrum is a bit pointless)?

I thought the best project of the event was by James Rampersad, who processed the IR video stream using Eulerian Video Magnification to show blood pulsing through his face.

Deluxe Paint: 30 year old C showing its age in places

Electronic Arts have just released the source code of a version of Deluxe Paint from 1986.

The C source does not look anything like my memories of code from that era, probably because it was written on and for the Amiga. The Amiga used a Motorola 68000 cpu, which meant a flat address space, i.e., no peppering the code with near/far/huge data/function declaration modifiers (i.e., which cpu register will Sir be using to call his functions and access his data).

Function definitions use what is known as K&R style, because the C++ way of doing things (i.e., function prototypes) was just being accepted into C by the ANSI committee (the following is from BLEND.C):

    void DoBlend(ob, canv, clip)
    BMOB *ob; BoxBM *canv; Box *clip;
    {...}

The difference between the two ways of doing things (apart from the syntax of putting the type information outside/inside the brackets) is that the compiler does not do any type checking between definition/call for the K&R style. So it is possible to call DoBlend with any number of arguments having any defined type and the compiler will generate code for the call assuming that the receiving end is expecting what is being passed. Having an exception raised in the first few lines of a function used to be a sure sign of an argument mismatch, while having things mysteriously fail well into the body of the code regularly ruined a developer’s day. Companies sold static analysis tools that did little more than check calls against function definitions.
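For comparison, here is what the prototype style looks like. The `Box` type below is a hypothetical stand-in (the real `BMOB`, `BoxBM` and `Box` types are defined in the Deluxe Paint source), and the K&R form appears only in a comment because old-style definitions were removed in C23 and recent compilers may reject them.

```c
/* Hypothetical stand-in type for illustration only. */
typedef struct {
    int width, height;
} Box;

/* Prototype style: the parameter types sit inside the parentheses, so every
   call the compiler sees after this point is checked against them. */
int box_area(const Box *clip)
{
    return clip->width * clip->height;
}

/* The K&R style equivalent would have been written as:

       int box_area(clip)
       Box *clip;
       { return clip->width * clip->height; }

   With only the declaration "int box_area();" visible at the call site, a
   call such as box_area(3, 4) compiled without complaint and failed at
   runtime; with the prototype above it is a compile-time error. */
```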

The void on the return type is unusual for 1986, as is the use of enum types. I would have been willing to believe that this code was from 1996. The clue that this code is from 1986 and not 1996 is that int is 16 bits, a characteristic that is implicit in the code (the switch to 32 bits started in the early 1990s when large amounts of memory started to become cheaply available in end-user systems).

So the source code for a major App, in its day, is smaller than the jpegs on many’ish web pages (jpegs use a compressed format and the zip file of the source is 164K). The reason that modern Apps contain orders of magnitude more code is that our expectation of the features they should contain has grown in magnitude (plus marketing likes bells and whistles and developers enjoy writing lots of new code).

When the source of one of today’s major Apps is released in 30 years time, will people be able to recognise patterns in the code that localise it to being from around 2015?

Ah yes, that’s how people wrote code for computers that worked by moving electrons around, not really a good fit for photonic computers…

Exhaustive superoptimization

Optimizing compilers include a list of common code patterns and equivalent, faster executing, patterns that they can be mapped to (traditionally the role of peephole optimization, which in many early compilers was the only optimization).

The problem with this human written table approach is that it is fixed in advance and depends on the person adding the entries knowing lots of very obscure stuff.

An alternative approach is the use of a superoptimizer; these take a code sequence and find the functionally equivalent sequence that executes the fastest. However, superoptimizing interesting sequences often takes a very long time and so use tends to be restricted to helping figure out, during compiler or library development, what to do for sequences that are known to occur a lot and be time critical.

A new paper, Optgen: A Generator for Local Optimizations, does not restrict itself to sequences deemed to be common or interesting; it finds the optimal sequence for all sequences by iterating through all permutations of operands and operators. Obviously this will take a while and the results in the paper cover expressions containing two unknown operands + known constants, and expressions containing three and four operands that are not constants; the operators searched were: unary ~ and - and binary +, &, |, - and ^.

The following 63 optimization patterns involving two variables (x and y)+constants (c0, c1, c2) were found, with the right column listing whether LLVM, GCC or the Intel compiler supported the optimization or not.

    optional-precondition => before -> after                                      L G I
    -~x -> x + 1                                                                  + + -
    -(x & 0x80000000) -> x & 0x80000000                                           - + -
    ~-x -> x - 1                                                                  + + -
    x +~x -> 0xFFFFFFFF                                                           + + -
    x + (x & 0x80000000) -> x & 0x7FFFFFFF                                        - - -
    (x | 0x80000000) + 0x80000000 -> x & 0x7FFFFFFF                               + - -
    (x & 0x7FFFFFFF) + (x & 0x7FFFFFFF) -> x + x                                  + + -
    (x & 0x80000000) + (x & 0x80000000) -> 0                                      + + -
    (x | 0x7FFFFFFF) + (x | 0x7FFFFFFF) -> 0xFFFFFFFE                             + + -
    (x | 0x80000000) + (x | 0x80000000) -> x + x                                  + + -
    x & (x + 0x80000000) -> x & 0x7FFFFFFF                                        + - -
    x & (x | y) -> x                                                              + + -
    x & (0x7FFFFFFF - x) -> x & 0x80000000                                        - - -
    -x & 1 -> x & 1                                                               - + -
    (x + x)& 1 -> 0                                                               + + -
    is_power_of_2(c1) && c0 & (2 * c1 - 1) == c1 - 1 => (c0 - x) & c1 -> x & c1   - - -
    x | (x + 0x80000000) -> x | 0x80000000                                        + - -
    x | (x & y) -> x                                                              + + -
    x | (0x7FFFFFFF - x) -> x | 0x7FFFFFFF                                        - - -
    x | (x^y) -> x | y                                                            + - -
    ((c0 | -c0) &~c1) == 0 => (x + c0) | c1 -> x | c1                             + - +
    is_power_of_2(~c1) && c0 & (2 *~c1 - 1) ==~c1 - 1 => (c0 - x) | c1 -> x | c1  - - -
    -x | 0xFFFFFFFE -> x | 0xFFFFFFFE                                             - - -
    (x + x) | 0xFFFFFFFE -> 0xFFFFFFFE                                            + + -
    0 - (x & 0x80000000) -> x & 0x80000000                                        - + -
    0x7FFFFFFF - (x & 0x80000000) -> x | 0x7FFFFFFF                               - - -
    0x7FFFFFFF - (x | 0x7FFFFFFF) -> x & 0x80000000                               - - -
    0xFFFFFFFE - (x | 0x7FFFFFFF) -> x | 0x7FFFFFFF                               - - -
    (x & 0x7FFFFFFF) - x -> x & 0x80000000                                        - - -
    x^(x + 0x80000000) -> 0x80000000                                              + - -
    x^(0x7FFFFFFF - x) -> 0x7FFFFFFF                                              - - -
    (x + 0x7FFFFFFF)^0x7FFFFFFF -> -x                                             - - -
    (x + 0x80000000)^0x7FFFFFFF -> ~x                                             + + -
    -x^0x80000000 -> 0x80000000 - x                                               - - -
    (0x7FFFFFFF - x)^0x80000000 -> ~x                                             - + -
    (0x80000000 - x)^0x80000000 -> -x                                             - + -
    (x + 0xFFFFFFFF)^0xFFFFFFFF -> -x                                             + + -
    (x + 0x80000000)^0x80000000 -> x                                              + + -
    (0x7FFFFFFF - x)^0x7FFFFFFF -> x                                              - - -
    x - (x & c) -> x &~c                                                          + + -
    x^(x & c) -> x &~c                                                            + + -
    ~x + c -> (c - 1) - x                                                         + + -
    ~(x + c) -> ~c - x                                                            + - -
    -(x + c) -> -c - x                                                            + + -
    c -~x -> x + (c + 1)                                                          + + -
    ~x^c -> x^~c                                                                  + + -
    ~x - c -> ~c - x                                                              + + -
    -x^0x7FFFFFFF -> x + 0x7FFFFFFF                                               - - -
    -x^0xFFFFFFFF -> x - 1                                                        + + -
    x & (x^c) -> x &~c                                                            + + -
    -x - c -> -c - x                                                              + + -
    (x | c) - c -> x &~c                                                          - - -
    (x | c)^c -> x &~c                                                            + + -
    ~(c - x) -> x +~c                                                             + - -
    ~(x^c) -> x^~c                                                                + + -
    ~c0 == c1 => (x & c0)^c1 -> x | c1                                            + + -
    -c0 == c1 => (x | c0) + c1 -> x &~c1                                          - - -
    (x^c) + 0x80000000 -> x^(c + 0x80000000)                                      + + -
    ((c0 | -c0) & c1) == 0 => (x^c0) & c1 -> x & c1                               + + -
    (c0 &~c1) == 0 => (x^c0) | c1 -> x | c1                                       + - -
    (x^c) - 0x80000000 -> x^(c + 0x80000000)                                      + + -
    0x7FFFFFFF - (x^c) -> x^(0x7FFFFFFF - c)                                      - - -
    0xFFFFFFFF - (x^c) -> x^(0xFFFFFFFF - c)                                      + + -
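A few of the listed patterns can be spot-checked with a short test harness. The sketch below is not how Optgen works (it proves equivalence; this only samples): it compares the before and after forms of five patterns on boundary values plus a pseudo-random sweep, using 32-bit unsigned arithmetic. All of the names are made up for this example, and `bogus_rewrite` is a deliberately wrong pattern included as a negative control.

```c
#include <stddef.h>
#include <stdint.h>

/* Both sides of a rewrite take the variable x and a constant c (ignored by
   patterns that do not use a constant). */
typedef uint32_t (*expr)(uint32_t x, uint32_t c);

struct rewrite {
    const char *text;
    expr before, after;
};

static uint32_t neg_not(uint32_t x, uint32_t c)    { (void)c; return -~x; }
static uint32_t add_one(uint32_t x, uint32_t c)    { (void)c; return x + 1; }
static uint32_t add_top(uint32_t x, uint32_t c)    { (void)c; return x + (x & 0x80000000u); }
static uint32_t clear_top(uint32_t x, uint32_t c)  { (void)c; return x & 0x7FFFFFFFu; }
static uint32_t sub_masked(uint32_t x, uint32_t c) { return x - (x & c); }
static uint32_t and_not(uint32_t x, uint32_t c)    { return x & ~c; }
static uint32_t or_sub(uint32_t x, uint32_t c)     { return (x | c) - c; }
static uint32_t not_sum(uint32_t x, uint32_t c)    { return ~(x + c); }
static uint32_t notc_sub(uint32_t x, uint32_t c)   { return ~c - x; }
static uint32_t or_one(uint32_t x, uint32_t c)     { (void)c; return x | 1; }

const struct rewrite rewrites[] = {
    { "-~x -> x + 1",                           neg_not,    add_one   },
    { "x + (x & 0x80000000) -> x & 0x7FFFFFFF", add_top,    clear_top },
    { "x - (x & c) -> x &~c",                   sub_masked, and_not   },
    { "(x | c) - c -> x &~c",                   or_sub,     and_not   },
    { "~(x + c) -> ~c - x",                     not_sum,    notc_sub  },
};
const size_t n_rewrites = sizeof rewrites / sizeof rewrites[0];

/* Negative control: not a valid rewrite (x = 1 gives 2 versus 1). */
const struct rewrite bogus_rewrite = { "x + 1 -> x | 1", add_one, or_one };

/* Returns 1 when before and after agree on every sampled (x, c) pair and 0
   at the first mismatch. Sampling can refute a rewrite, not prove it. */
int rewrite_holds(const struct rewrite *r)
{
    static const uint32_t edge[] = {
        0u, 1u, 2u, 0x7FFFFFFEu, 0x7FFFFFFFu,
        0x80000000u, 0x80000001u, 0xFFFFFFFEu, 0xFFFFFFFFu
    };
    const size_t n_edge = sizeof edge / sizeof edge[0];

    for (size_t i = 0; i < n_edge; i++)
        for (size_t j = 0; j < n_edge; j++)
            if (r->before(edge[i], edge[j]) != r->after(edge[i], edge[j]))
                return 0;

    uint32_t state = 12345u;            /* linear congruential generator */
    for (int k = 0; k < 100000; k++) {
        state = state * 1664525u + 1013904223u;
        uint32_t x = state;
        state = state * 1664525u + 1013904223u;
        uint32_t c = state;
        if (r->before(x, c) != r->after(x, c))
            return 0;
    }
    return 1;
}
```

Calling `rewrite_holds` on each entry of `rewrites` is a cheap sanity check before adding a pattern to a peephole table; a rewrite that survives sampling still needs a proof.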

The optional preconditions are hand-written rules that constants must obey for a particular expression mapping to apply, e.g., (c0 &~c1) == 0.

Hand-written rules using bit-vector analysis of constants (each bit of a constant has the value one, zero or don’t know) are used to prune the search space (otherwise the search of the two operand+constants case would still be running).
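The one/zero/don’t know representation can be sketched as a pair of bit masks with a transfer function for each operator. This is a generic known-bits analysis written for illustration, with made-up names, and should not be read as the rules Optgen actually uses.

```c
#include <stdint.h>

/* Per-bit knowledge about a 32-bit value: a bit set in ones is known to be
   1, a bit set in zeros is known to be 0, and a bit set in neither mask is
   "don't know". */
struct known_bits {
    uint32_t ones;
    uint32_t zeros;
};

/* Every bit of a constant is known. */
struct known_bits kb_constant(uint32_t c)
{
    struct known_bits k = { c, ~c };
    return k;
}

/* Nothing is known about a variable. */
struct known_bits kb_unknown(void)
{
    struct known_bits k = { 0u, 0u };
    return k;
}

/* AND: a result bit is 1 only if both inputs are 1, 0 if either is 0. */
struct known_bits kb_and(struct known_bits a, struct known_bits b)
{
    struct known_bits k = { a.ones & b.ones, a.zeros | b.zeros };
    return k;
}

/* OR: a result bit is 1 if either input is 1, 0 only if both are 0. */
struct known_bits kb_or(struct known_bits a, struct known_bits b)
{
    struct known_bits k = { a.ones | b.ones, a.zeros & b.zeros };
    return k;
}

/* NOT swaps what is known to be one with what is known to be zero. */
struct known_bits kb_not(struct known_bits a)
{
    struct known_bits k = { a.zeros, a.ones };
    return k;
}

/* Non-zero when every bit is known, i.e., the expression folds to the
   constant held in ones and need not be searched any further. */
int kb_is_constant(struct known_bits k)
{
    return (k.ones | k.zeros) == 0xFFFFFFFFu;
}
```

For example, with x unknown, the analysis shows that (x & 0x80000000) & 0x7FFFFFFF has every bit known to be zero, so that subexpression can be replaced by 0 without enumerating values of x.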

LLVM covers 40 of the 63 cases, GCC 36 and Intel just 1 (wow). I suspect that many of the unsupported patterns are rare, but now they are known it is worth implementing them just to be able to say that this particular set of possibilities is completely covered.

R is now important enough to have a paid for PR make-over

With the creation of the R consortium R has moved up a rung on the ladder of commercial importance.

R has captured the early adopters and has picked up a fair few of the early majority (I’m following the technology adoption life-cycle model made popular by the book Crossing the Chasm), i.e., it is starting to become mainstream. Being mainstream means that jobsworths are starting to encounter the language in situations of importance to them. How are the jobsworths likely to perceive R? From my own experience I would say it will be perceived as being an academic thing, which in the commercial world is not good, not good at all.

To really become mainstream R needs to shake off its academic image, and as I see it, the R consortium has been set up to make that happen. I imagine it will try to become the go-to point for journalists wanting information or a quote about things-related-to R. Yes, they will hold conferences with grandiose sounding titles and lots of business people will spend surprising amounts of money to attend, but the real purpose is to solidify the image of R as a commercial winner (the purpose of a very high conference fee is to keep the academics out and convince those attending that it must be important because it is so expensive).

This kind of consortium gets set up when some technology having an academic image is used by large companies that need to sell this usage to potential customers (if the technology is only used internally its wider image is unimportant).

Unix used to have an academic image, one of the things that X/Open was set up to ‘solve’. The academic image is now a thing of the past.

For the first half of the 1980s it looked like Pascal would be a mainstream language; a language widely taught in universities and perceived as being academic. Pascal did not get its own consortium and C came along and took its market (I was selling Pascal tools at the time and had lots of conversations with companies who were switching from Pascal to C and essentially put the change down to perception; it did not help that Pascal implementations did their best to hide/ignore the 8086 memory model, something of interest when memory is scarce).

How will we know when R reaches the top rung (if it does)? Well there are two kinds of languages, those that nobody uses and those that everybody complains about.

R will be truly mainstream once people feel socially comfortable complaining about it to any developer they are meeting for the first time.

2015: A new C semantics research group

A very new PhD student research group working on C semantics has just appeared on the horizon. You can tell they are very new to C semantics by the sloppy wording in their survey of C users (what is a ‘normal’ compiler and how does it differ from the ‘current mainstream’ compiler referred to in some questions? I’m surprised the outcome appeared clear to the authors, given the jumble of multiple choice options given to respondents).

Over the years a number of these groups have appeared, existed until their members received a PhD and then disappeared. In some cases one of the group members does something that shows a lot of potential (e.g., the C-semantics work), but the nature of academic research means that either the freshly minted PhD moves to industry or else moves on to another research area. Unfortunately most groups are overwhelmed by the task and pivot into meaningless subsets or concentrate on mathematical organisms. Very, very occasionally interesting work gets supported once the PhD is out of the way, Coccinelle being the stand-out example for C.

It takes implementing a full compiler (as part of a PhD or otherwise) to learn C semantics well enough to do meaningful research on it. The world seems to be stuck in a loop of using research to educate know-nothings until they know-something and then sending them off on another track. This is why C language researchers keep repeating themselves every 10 years or so.

Will anybody in this new group do any interesting work? Alan Mycroft set the bar very high for Cambridge by submitting a 100 page comment document on the draft C89 standard that listed almost as much ambiguous wording as everybody else put together found (but he was implementing a compiler in his spare time and not doing it for a PhD, so perhaps he does not count).

One suggestion I would make to this new group is that if they really are interested in actual usage they should measure actual usage; developer beliefs about compiler behavior are rarely very accurate and are always heavily tainted by experiences from when they first started out.
