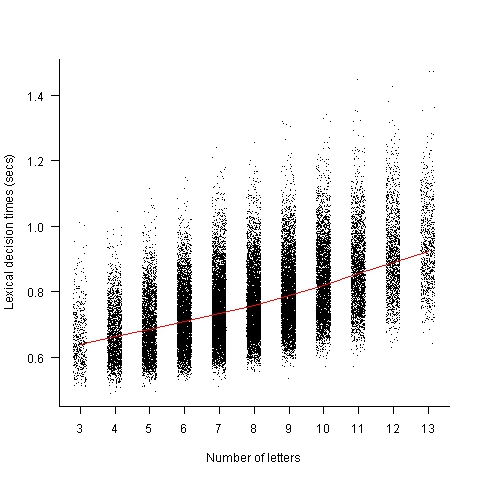

Word length lexical decision data

Well chosen identifier names can reduce the effort needed to understand the code containing them, compared to badly chosen identifiers.

An identifier might have a name that creates a semantic association in the mind of the reader about the role of the variable within a function definition (e.g., outer_counter) or an association with the information contained in the variable (e.g., max_fruit).

Code is not always read like prose text; developers might quickly scan through the source looking for something. In this case short identifier names are best because they reduce the number of characters that need to be scanned.

If you want to make life difficult for anyone who has to read your code, add a visually boring common prefix to every identifier, e.g., uacc. Readers start looking up a word in memory based on the first few characters while they process the remaining characters in a word, and eye tracking studies have found that character sequence information is used to plan eye saccades (where to move the eyes next). A short bland sequence can really throw a spanner in the works of our over-learned reading skills.

I once researched a detailed analysis of the issues involved in a cost/benefit analysis of identifier selection. The good news is that I think I covered everything; the bad news is that the various kinds of data on human character sequence usage needed to perform the analysis were/are not available.

Today I got my hands on lots of performance data on the effect of word length on visual word recognition; thanks to Boris New (code and data).

The plot below shows the mean response time of 819 subjects performing a lexical decision task (respond yes/no on whether a character sequence is a word or nonword); each subject was tested on a subset of around 3,000 out of 33,608 words.

Note, this data is for single words. There are bound to be all sorts of interaction effects when two words/nonwords occur together in an identifier, e.g., semantic priming.

Word length is only one of several factors that have been found to affect people’s performance in processing words; others include the word frequency effect and age of acquisition (when the word was learned, which is correlated with word frequency).

Have fun with this.

Operator assignment may be in the next Ada standard

WG9, the Ada Standard committee, are looking into adding support for operator assignment in the next revision of the language standard. Ada was designed back in the day when C’s operator assignments, such as +=, were a novelty (all the languages we know today that follow C’s lead, including choice of operator precedence, did not yet exist); developers using serious languages knew that typing everything out in full was good for you.

It’s amazing to read about “… those who have been damaged by terser languages…”, in the proposal comments. Do I only think that My_Package.My_Array(I).Field +:= 1; is so much easier to read than My_Package.My_Array(I).Field := My_Package.My_Array(I).Field + 1; because my brain has been damaged? In the second statement I have to parse two subexpressions and notice that they refer to the same object, while the first statement only requires me to parse one subexpression and the +:= operator tells me that something is being added to its value. The first statement requires a lot less effort and is likely to be less error prone (I bet Ada programmers make cut and paste errors just like the rest of us).
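The reader's double parse has a runtime analogue that is easy to show in a language that already has operator assignment. The following is a minimal sketch in Python, not Ada (nothing here reflects the proposed Ada syntax or semantics), and `next_index` and `fields` are made-up names: the long form evaluates the index subexpression twice, the operator form once.

```python
# Hypothetical illustration in Python, not Ada: count how often the
# index subexpression is evaluated by each form of the update.
calls = 0

def next_index():
    """Index expression with a visible side effect (a call counter)."""
    global calls
    calls += 1
    return 0

fields = [10]

# Long form: the target subexpression is written, and evaluated, twice.
fields[next_index()] = fields[next_index()] + 1
long_form_calls = calls

calls = 0
# Operator assignment: the target subexpression appears once.
fields[next_index()] += 1
short_form_calls = calls

print(long_form_calls, short_form_calls)
```

Writing the subexpression once also removes the opportunity for the two copies to drift apart after a cut and paste.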

Did you notice the use of round brackets to specify an array index? Yes, the eighties were the decade when the programming language world was badgered into being politically correct and only require the use of characters that appeared on every European country’s keyboard.

The Ada proposal started out following the C convention, e.g., +=, *=, etc, then switched to :+, :*, etc. The assignment token in Ada is := and there is a logic to switching out the = for another operator. However, I would argue that +:, *:, etc would be a less error prone ordering of characters. People pay more attention to the first character in a sequence and somebody in a hurry may not notice that the colon is not followed by a =; putting the ‘unexpected’ character first is more likely to catch reader attention.

Another proposal is for the @ token to act as a placeholder. The only place I can see this being useful on any regular basis is in bitwise manipulation: My_Package.My_Array(I).Field := (@ << 16) or @ ; (Ada does not support << as an operator, so reality is a bit more complicated than this). I don't think there are enough situations where placeholders would be useful to warrant using a solution that is more complicated than operator assignment.

One proposed advantage of the new fangled operators is making life easier for non-Ada users (see the discussion in the proposal comments). The “I have put lots of effort into Ada, why should others have it easy” die-hards are fighting a rear guard action to make sure life is not too easy for newcomers.

Researching just to create software patents

I was recently reading the paper “ARC++: Effective Typestate and Lifetime Dependency Analysis” (cannot find a public pdf now). What caught my attention was the fact it involved static analysis of C++ source, something that few researchers get involved in because of the technical complexity of parsing C++. I wanted to know more and guess what popped up near the top of a Google search, a patent with almost the same title as the paper and with many of the paper’s authors listed as the inventors!

As far as I can tell the Claims section of the patent does not list anything new or novel (in fact the only prior art listed was added by the patent examiner). It is not hard to understand why this patent exists; the main paper author works in an NEC research laboratory and commercial research labs have to ‘pay’ their way by producing patents that the parent company can brandish at competitors.

At least it looks like the patent was applied for before the paper was published. The most extreme case of paper published followed by patent application, sometime later, I have encountered was during my spell as an adviser to the Monitoring Trustee appointed by the European Commission in the EU/Microsoft competition court case. One of the non-patented innovations being claimed by Microsoft (or perhaps the patent had been granted, my memory is fuzzy) was for something described in a PhD thesis whose author went to work for Microsoft, after completing his PhD; this was back in the day when typing a couple of technical terms and the name listed as the patent inventor into a search engine was still something new for bean counters.

Lisp and functional languages discourage free riders

While many developers have a favorite programming language, there are a few who believe they have found the One True Language and refuse to even consider coding in another language.

Why is the One True Language invariably a dialect of Lisp or a functional language?

I think the reason is the same as why strict churches are strong: both these language families make life difficult for free riders, i.e., the casual programmer.

Superficially Lisp-like languages look unwelcoming because of all those brackets and the tiresome prefix notation, but once past these surface speed bumps life is not a bed of roses; there are mind bending language challenges to master at every abstraction level. Developers get sucked into the community working on mastering each level (this suggests an alternative explanation that coding in Lisp is a way of continuing to play Dungeons & Dragons while appearing to work).

True functional languages don’t have global variables and certainly don’t let you create stateful information. The self-flagellating no-global-variable languages have a limited clientèle; it’s the writing of programs that don’t make use of side-effects (e.g., iteration via recursion, not explicit loops) that marks out the true community members; a short conversation with a developer is enough to tell whether they are one-of-us who joyfully tells the world of their latest assignment-free solution to an apparently intractable problem (intractable in the sense of appearing to require the use of assignment statements). There are always umpteen different ways of writing something in functional languages, providing plenty of scope for sects to splinter off by requiring disciples to follow a particular approved style.
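For anybody who has not met the style, here is a minimal sketch of iteration via recursion with no assignment statements. It is written in Python, which is not a functional language, and `total` is a made-up name; a true community member would no doubt have umpteen other ways of writing it.

```python
def total(values):
    """Sum a sequence by recursion: no loop and no variable is reassigned."""
    if not values:
        return 0
    return values[0] + total(values[1:])

print(total([1, 2, 3, 4]))
```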

Why have Lisp and functional programming continued to survive for so long? Some interesting research on communal societies has found a correlation between the number of costly requirements entailed by community membership and community longevity: the greater the number of costly requirements, the longer a community survives. Having sunk so much time and effort into the costly signaling required for community membership, people are loath to leave it all behind.

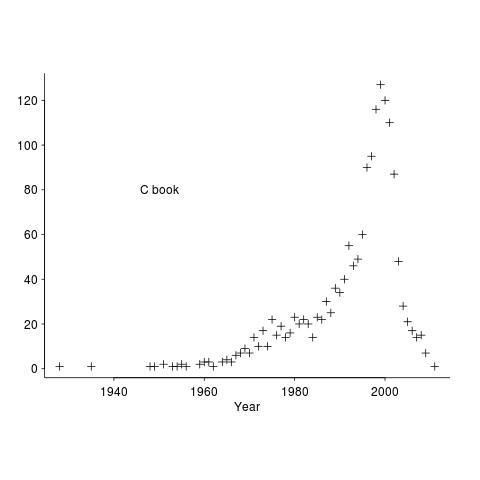

Citation patterns in my two books

When writing my C book, I cited any paper or book whose material I made use of and/or that I thought would be useful to the reader. One of the rules for academic papers is to cite the paper that ‘invented’ the idea; this is intended to incentivize researchers to work hard to discover new things that will be cited many times (citation count is a measure of the importance of the work and these days a metric used when deciding promotions and awarding grants).

When I started writing the C book the premier search engine was AltaVista, with Google becoming number one a few years before the book was completed. Finding papers online was still a wondrous experience and Citeseer was a godsend.

The plot below shows the numbers of works cited by year of publication, for the C book.

These days all the information we could possibly need is said to be online. I don’t think this is true, but it might be a good enough approximation. But being online does not mean being available for free; a lot of academic papers remain behind paywalls.

It used to be possible to visit a good University library and copy papers of interest (I have a filing cabinet full of paper from the C book). Those days are gone, with libraries moving their reference stock off-site (the better ones offer on-premise online access).

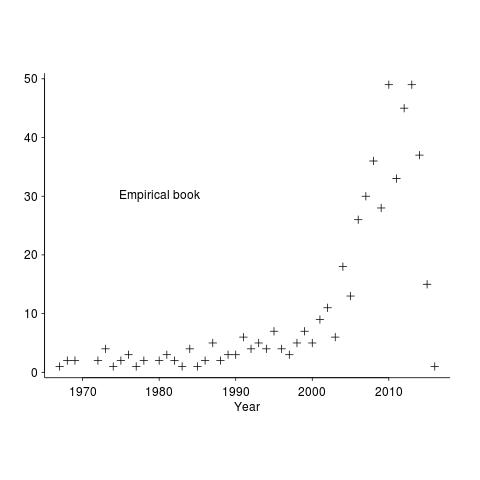

My book on empirical software engineering is driven by what data is available, which means most cited work is going to be relatively new. There is another big factor driving the work I cite this time around; I am fed up with tax payer funded research ending up behind paywalls, so I am only citing papers that can be downloaded for free (in practice they also have to be found by a search engine or linked to from somewhere or other) and when the ‘discovery’ paper is not available for download I cite a later work that is.

The plot below shows the numbers of works cited by year of publication, for the empirical software engineering draft book.

The rising slope is much sharper for the latest book. I think most of the difference is driven by the newness of the subject; software researchers tend to be very good (at least the non-business department ones) at making pdfs available for download.

Another factor might be how Citeseer and Google Scholar cross reference papers; Citeseer links to works cited by a paper (i.e., link back in time), while Google Scholar links to works that cite the paper (i.e., link forward in time).

Forces driving patterns found in call graphs of human written code

It has been a while since I posted anything about generating source that mimics the characteristics of human written code.

Generating function definitions is the easy bit, although variable selection is fiddly and naming needs to be handled.

The hardest part of mimicking human written code is linking functions together, via calls, in apparently meaningful ways. Within one source file it is probably possible to get away with individual calls to other local functions (most function definitions are called once) and a couple of calls to third party libraries. At the complete program level the code needs to tell a story, and doing this is very hard.

I think function calls can be divided into three categories, all based on the relationship between the developer who writes the code that does the call and the developer(s) who wrote the called function:

- Caller developer is the same person as the callee developer. One person gets to decide how things are done,

- Caller developer works on the same team as the callee developer. Here an interface needs to be negotiated with one or more other people,

- Caller developer is making use of a third-party library. The interface is pre-decided, take it or leave it (the same principle applies to library updates, the caller has to decide whether to stay with the existing version or make the changes needed to upgrade to the new version; Android is the (in)famous example of a frequently changed library that developers are under strong pressure to continually adapt to).

Calls to functions in third-party libraries tend to follow stylized sequences, e.g., open_* occurs before read_*/write_* and close_* appears last. Most of the time a generator could get away with not calling functions from third-party libraries, because the number of such calls is often small, but when they occur they had better look correct.
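A generator can get the stylized ordering right with very little machinery. The following is a minimal sketch, assuming a made-up library whose functions are named `open_X`, `read_X`, `write_X` and `close_X`; `library_call_sequence` is a hypothetical helper and the random mix of reads and writes is my choice, not something measured.

```python
import random

def library_call_sequence(resource, rng, max_ops=4):
    """Return a stylized sequence of calls on one (hypothetical) resource:
    open_* first, then some read_*/write_* calls, close_* last."""
    middle = [rng.choice(["read_", "write_"]) + resource
              for _ in range(rng.randint(1, max_ops))]
    return ["open_" + resource] + middle + ["close_" + resource]

rng = random.Random(42)  # fixed seed so runs are repeatable
for call in library_call_sequence("file", rng):
    print(call + "();")
```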

Needing to call a function written by another developer on the team opens up all sorts of possibilities: there is always the option of them modifying the function to make life easier for the new call, but the cost of modifying all the existing call sites (plus any associated call chains) may be too high, perhaps a new global variable can be used to communicate the desired information, or perhaps the proposed usage is a small cog in a large wheel and has to make do (or perhaps they don’t like the person asking for the change and reasons are invented for staying as-is).

Then there is correlation in time and space (this has a big impact on patterns of evolution, at least I think so; models of forest fires, i.e., growth, death, fires of various sizes creating space for new growth, is the obvious parallel):

- The longer a function exists the more likely it is to accumulate callers. A function definition can remain unchanged and yet over time become more and more difficult to change.

- It can be very expensive to make changes to an existing function definition when there are lots of calls to it.
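The accumulation of callers with age can be illustrated with a toy growth model. This is a sketch under an assumption of my own, not a measured result: functions are added one at a time, and each new function calls exactly one existing function chosen uniformly at random. Under that assumption the expected number of callers of function i, once n functions exist, is the sum of 1/j for j from i+1 to n-1.

```python
from fractions import Fraction

def expected_callers(i, n):
    """Expected number of callers of function i after n functions exist,
    assuming function j (j >= 1) calls one of functions 0..j-1 uniformly."""
    return sum(Fraction(1, j) for j in range(i + 1, n))

n = 100
for i in (0, 9, 49, 98):
    print(i, float(expected_callers(i, n)))
```

Even with no preference for any particular function, the oldest functions end up with the most callers, simply because they have been available to be called for longest.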

What do measurements of code have to say? Almost nothing; existing studies mostly do weird things like treating a system’s call graph as-if it were a social network (using a currently trendy metaphor is good for getting papers published, even if the mapping is all wrong), plotting power law-like graphs and spouting portentous nonsense.

What is really needed is measurements of forked systems; comparing systems derived from a common past will tell us how much natural variation exists due to individual choice.

FreeBSD, NetBSD and OpenBSD are the obvious poster children of forked, common heritage, systems written in C; I cannot think of any such systems written in Java or C++.

Workshop on analyzing software engineering data

I am teaching a workshop, analyzing software engineering data, on 16 January 2016. If you meet the assumed level of know-how (basic understanding of maths to GCSE level, fluent in at least one programming language {i.e., written 10k+ lines of code} and will turn up with a laptop that has R installed), then you are welcome to sign up, it’s free. The event is being organized by ACCU London.

The focus is on extracting information that is useful to practicing software developers for creating software systems; statistics is used as a tool to find patterns in the data (R is used for this and the programs have the form: read_data(); format_data(); appropriate_statistical_function(); plot_results() and are usually contained in 10-30 lines).
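To give a feel for the read/format/analyze/plot shape of these programs, here is a minimal sketch. It is written in Python rather than the R used in the workshop, the estimate/actual numbers are invented purely to make it runnable, the median stands in for whatever statistical function is appropriate, and the 'plot' is a crude text bar chart.

```python
import csv
import io
import statistics

# Invented numbers, purely to make the sketch runnable.
RAW = """project,estimate_hours,actual_hours
a,10,14
b,20,22
c,5,9
d,8,8
"""

def read_data(text):
    """Parse the CSV text into a list of dictionaries, one per row."""
    return list(csv.DictReader(io.StringIO(text)))

def format_data(rows):
    """Convert the string fields to an actual/estimate ratio per project."""
    return {r["project"]: float(r["actual_hours"]) / float(r["estimate_hours"])
            for r in rows}

def plot_results(ratios):
    """Crude text 'plot': one bar per project, one # per 0.1 of ratio."""
    for name, ratio in ratios.items():
        print(f"{name} {'#' * round(ratio * 10)} {ratio:.2f}")

ratios = format_data(read_data(RAW))
print("median actual/estimate:", statistics.median(ratios.values()))
plot_results(ratios)
```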

The maths/programming requirements are there because the focus is on the software engineering ideas implied by the data; people need to implicitly understand how an equation fits together (not because there will be lots of algebra, there isn’t) and to be able to pick up and use a new language quickly.

The material is based on a book I am working on.

It’s a hands-on workshop, with me talking for an hour or so and then everybody analyzing data for an hour, repeating until end-of-day. I have plenty of data for you to work on, but if you do have some software engineering data that you are willing to share with everybody, please bring it along.

The workshop is something of an experiment; as far as I know there are no books or courses aimed at software developers interested in analyzing software engineering data (there are a few books containing an assortment of academic papers). If the material is too easy I can speed up, if it is too hard then I will slow down; if the material is of no practical use we can all leave early.

The plan is to start at the beginning and cover all the important topics in software engineering. Obviously this requires more than a one day workshop. If there is enough interest there will be more workshops covering different topics (assuming I have time to organize the material and an available venue permitting).

The Ockham Sound Analysis Criteria

Yesterday a headline on a tool vendor blog caught my eye “… meets NIST high assurance standards”. What is this high assurance standard that I had not heard about before?

It turns out to be the Ockham Sound Analysis Criteria (which is not a standard, but is sort of connected to assurance at some level). I have been following the NIST group (now known as SAMATE) behind this work since their first meeting (the only one I have attended); their Static Analysis Tool Exposition (SATE) work is a great idea, but I imagine it has been an uphill battle convincing tool vendors to publicly expose the strengths and weaknesses of their tools.

Passing the Ockham Sound Analysis Criteria requires that a static analysis tool detect “…a minimum of 60% of buggy sites OR of non-buggy sites.”, with no false-positives (which I take to mean that no incorrect warnings are generated, i.e., the tool cannot incorrectly say “there is a fault here” or “there are no faults here”).
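My reading of the requirement can be written down as a small check. This is a sketch of that reading only, not SAMATE's actual procedure; `meets_criteria`, the site names and the data layout are all made up for illustration.

```python
def meets_criteria(truth, claims, threshold=0.6):
    """truth: site -> True if the site really is buggy.
    claims: site -> True ("buggy") or False ("not buggy"); sites the
    tool says nothing about are simply absent.
    One reading of the quoted requirement: every claim must be correct,
    and the claims must cover at least `threshold` of the buggy sites
    OR at least `threshold` of the non-buggy sites."""
    if any(truth[site] != verdict for site, verdict in claims.items()):
        return False  # an incorrect claim, in either direction, fails
    buggy = [s for s in truth if truth[s]]
    clean = [s for s in truth if not truth[s]]
    buggy_found = sum(1 for s in buggy if s in claims)
    clean_found = sum(1 for s in clean if s in claims)
    return ((bool(buggy) and buggy_found / len(buggy) >= threshold) or
            (bool(clean) and clean_found / len(clean) >= threshold))

# Hypothetical test suite: two buggy sites, three non-buggy ones.
truth = {"s1": True, "s2": True, "s3": False, "s4": False, "s5": False}
print(meets_criteria(truth, {"s3": False, "s4": False}))  # 2 of 3 non-buggy sites
print(meets_criteria(truth, {"s1": True, "s3": True}))    # one incorrect claim
```

Note that under this reading a tool can pass without flagging a single fault, provided it correctly declares enough sites to be fault free.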

The obvious low cost tool implementation is to pattern detect the known problems in the known test suite (called the Juliet test suite) and output warnings about them. The only way for SAMATE to stop companies doing this (or at least tuning their tools to pass the suite) is to regularly change the test cases used.

I think I understand the rationale behind the no false-positive requirement (SAMATE are using the marketing term “sound”). NIST want static analysis tools to be usable by people who don’t know anything about software; a strange idea I know, but the Nuclear Regulatory Commission have wanted to do this in the past.

Not being willing to accept false-positives kills innovation. New analysis techniques invariably start out being unreliable and improve over time.

I suspect that few vendors will have any interest in claiming to meet the Ockham Sound Analysis Criteria (apart from the ones that pattern match on the tests to satisfy some contract requirement). There is too much downside (new tests could put a vendor in the position of having to make a big investment just to continue to meet the criteria) for almost no upside (does anybody make purchase decisions based on these criteria?).

I think that the tool vendor (TrustinSoft) found they could make the claim and being relatively new in the tools market thought it might mean something to customers (I doubt it will, as their sales people are probably finding out). Of course what customers really want tool vendors to tell them is that their code does not contain any problems.

Recent formal methods and C papers (Sep 2015)

I have been catching up on my reading of papers from this year’s Programming Language Design and Implementation conference (whose organizers have not yet figured out that linking to pdfs of the papers might be useful).

Needless to say there are a few papers on formal methods and C:

- “A Formal C Memory Model Supporting Integer-Pointer Casts” is a truly awful paper. It starts out: “The ISO C standard famously does not give semantics to a significant subset of syntactically valid C programs.” and goes downhill from there. As far as I know only one language, Algol 68, defines semantic requirements using syntax; all other languages specify a syntax which is a very large superset of the set of semantically valid programs. The paper goes on to define a C-like language that is also Java-like, C#-like and *-like most languages created in the last 20 years. I have no idea why this paper got accepted; is PLDI now a third tier conference?

- Defining the undefinedness of C from the C-semantics guys. I could only find a version from 2012 online. Come on guys, you’re letting down one of your cheer-leaders. Update: pdf now available.

- A Formal C Memory Model for Separation Logic (not at PLDI, but popped up on arXiv today). This is one of those annoying papers that could have been great, but shoots itself in the foot. The first 20 pages show that the author is aware of some of the complications involved in modeling C’s behavior. This is followed by pages and pages of definitions, a scattering of lemmas and Facts; at page 51 The Theorems start, blah, blah, blah. Then we are almost done, there is a discussion of related work.

Where is it shown that any of this stuff is connected to the requirements contained in the C Standard? The source of the implementation is provided, let’s look at that; hmmm, no cross references to the C Standard here (in fact it is almost comment free). What about testing, processing source code to see what happens? The only mention of testing appears while discussing what the competition do (C-semantics; those pesky Americans again, not only not using Coq but testing their formal tools, don’t they know that anything written using mathematics must be correct).

The author’s draft PhD thesis says something about testing; but I get the feeling that he only says something about it because the competition does, even mostly using their+others tests rather than coming up with lots of his own.

While this work (part of the CH2O project) has clearly created a system that handles a chunk of real C, I don’t think it is anywhere close to being a very accurate model of C semantics. The author appears to be so much more interested in doing interesting mathematical stuff and finds it rather tiresome that the realities of C semantics disrupt the idealism.

Showing that they have clearly not learned how things are done in the formal semantics community, those pesky Americans have gone and produced a formal semantics for Javascript and tested it against the ECMAScript 5.1 conformance test suite (passing all 2,782 core language tests, Chrome V8 is the only other implementation that does this).

The compiler/interpreter distinction

What is the difference between compiled and interpreted programs?

In the good-old-days the distinction was easy to make: compiled code is executed by hardware while interpreted code is executed by software.

These days it can be very difficult to decide whether a program will be executed by hardware or software, and in some cases both may occur. More complicated cpus implement some of their instructions in micro-code (software control of very low level hardware resources) and the virtual machines specified for software execution can be implemented in hardware (an interesting project for a group of talented students in their summer holidays wanting to learn about ASICs).

Some people make a distinction based on the abstraction level of the cpu specification, e.g., very high level abstraction means the code must be interpreted. In practice the implementation of a cpu specification in hardware or software is an economic decision (software may be slow, but it’s a lot cheaper to implement).

I think there is a compiler/interpreter distinction, but the difference is not about how code is executed (the hardware/software distinction is a convenient difference that is easy to explain).

The compiler/interpreter distinction is a difference of responsibility. Compilers treat programs like the Spartans treated their children: they are bundled into a file of the appropriate format and left for the operating system to load into memory and point the cpu at the first instruction (a cpu’s one interest is executing the sequences of instructions pointed to by the program counter). Interpreters are more like doting nannies, organizing the provision of memory and on call to provide access to the desired resources.
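The nanny role is easy to see in even the smallest interpreter. The following is a minimal sketch of an interpreter for a made-up stack machine (the `push`/`add`/`print` opcodes and the `run` function are invented for illustration): the program never allocates its own storage or touches an output device; the interpreter does both on its behalf.

```python
def run(program):
    """Interpret a list of (opcode, operand) pairs for a made-up stack VM.
    The interpreter, not the program, owns the stack and does the output."""
    stack = []    # memory provided on the program's behalf
    output = []   # stands in for an output resource
    for opcode, operand in program:
        if opcode == "push":
            stack.append(operand)
        elif opcode == "add":
            right, left = stack.pop(), stack.pop()
            stack.append(left + right)
        elif opcode == "print":
            output.append(stack.pop())   # resource access goes via the nanny
        else:
            raise ValueError(f"unknown opcode: {opcode}")
    return output

print(run([("push", 2), ("push", 3), ("add", None), ("print", None)]))
```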

Sometimes a language is classified as an interpreted language. There is no such thing as an interpreted language, only languages which are much more easily implemented using an interpreter than a compiler.

The performance of the Spartan approach may be very desirable, but the cost of achieving it can be very high.
