A book of wrongheadedness from O’Reilly

Writers of recommended practice documents usually restrict themselves to truisms, platitudes and suggestions that doing so and so might not be a good idea. However, every now and again somebody is foolish enough to specify limits on things like lines of code in a function/method body or some complexity measure.

The new O’Reilly book “Building Maintainable Software Ten Guidelines for Future-Proof Code” (free pdf download until 25th January) is a case study in wrongheaded guideline thinking; probably not the kind of promotional vehicle for the Software Improvement Group, where the authors work, that was intended.

A quick recap of some wrongheaded guideline thinking:

- if something causes problems, recommend against it,

- if something has desirable behavior, recommend using it,

- ignore the possibility that any existing usage is the least worst way of doing things,

- if small numbers are involved, talk about the number 7 and human short term memory,

- discuss something that sounds true and summarize by repeating the magical things that will happen if developers follow your rules.

Needless to say, despite a breathless enumeration of how many papers the authors have published, no actual experimental evidence is cited as supporting any of the guidelines.

Let’s look at the first rule:

Limit the length of code units to 15 lines of code

Various advantages of short methods are enumerated; this looks like a case of wrongheaded item 2. Perhaps splitting up a long method will create lots of small methods with desirable properties. But what of the communication overhead of what presumably is a tightly coupled collection of methods? There is a reason long methods are long (apart from the person writing the code not knowing what they are doing): having everything together in one place can be a more cost-effective use of developer resources than lots of tiny, tightly coupled methods.

This is a much lower limit than usually specified; where did it come from? The authors cite a study of 28,000 lines of Java code (yes, thousand not million), which found that 95.4% of the methods contained at most 15 lines. Methinks that methods with 14 or fewer lines came in just under 95%.

Next chapter/rule:

Limit the number of branch points per unit to 4

I think wrongheaded items 2, 3 & 5 cover this.

Next:

Do not copy code

Wrongheaded items 1 & 3 for sure. Oh, yes, there is empirical research showing that most code is never changed and that cloned code contains fewer faults (but not replicated as far as I know).

Next:

Limit the number of parameters per unit to at most 4

Wrongheaded item 2. The alternatives are surely much worse. I have mostly seen this kind of rule applied to embedded systems code, where the number of parameters can be a performance issue. Definitely not a top 10 guideline issue.

Next…: left as an exercise for the reader…

What were the authors thinking when they wrote this nonsense book?

Of course any thrower of stones should give the location of his own glass house. Which is 10 times longer, measures a lot more than 28k of source and cites loads of stuff, but only manages to provide a handful of nebulous guidelines. Actually the main guideline output is that we know almost nothing about developers’ cognitive functioning (apart from the fact that people are sometimes very different, which is not very helpful) or the comparative advantages/disadvantages of various language constructs.

subset vs array indexing: which will cause the least grief in R?

The comments on my post outlining recommended R usage for professional developers were universally scornful, with my proposal recommending subset receiving the greatest wrath. The main argument against using subset appeared to be that it went against existing practice, one comment linked to Hadley Wickham suggesting it was useful in an interactive session (and by implication not useful elsewhere).

The commenters appeared to be knowledgeable R users and I suspect might have fallen into the trap of thinking that, having invested time in obtaining expertise in language intricacies, they ought to use these intricacies. Big mistake; the best way to make use of language expertise is to use it to avoid the intricacies, aiming to write simple, easy to understand code.

Let’s use Hadley’s example to discuss the pros and cons of subset vs. array indexing (normally I have lots of data to help make my case, but usage data for R is thin on the ground).

Some data to work with, which would normally be read from a file.

    sample_df = data.frame(a = 1:5, b = 5:1, c = c(5, 3, 1, 4, 1))

The following are two of the ways of extracting all rows for which a >= 4:

    subset(sample_df, a >= 4)
    # has the same external effect as:
    sample_df[sample_df$a >= 4, ]

The subset approach has the advantages:

- The array name, sample_df, only appears once. If this code is cut-and-pasted or the array name changes, the person editing the code may omit changing the second occurrence.

- Omitting the comma in the array access is an easy mistake to make (and it won’t get flagged).

- The person writing the code has to remember that in R data is stored in row-column order (it is in column-row order in many languages in common use). This might not be a problem for developers who only code in R, but my target audience are likely to be casual R users.
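The omitted-comma problem can be sketched as follows. This is a minimal illustration using the same sample data; the condition a <= 2 and the variable names are chosen here for the example and are not from the original post. With this condition R returns a result without complaint; with other conditions it may instead stop with an "undefined columns selected" error, so the silent case is the one worth worrying about.

```r
# Same data as in the post.
sample_df = data.frame(a = 1:5, b = 5:1, c = c(5, 3, 1, 4, 1))

# Intended: the rows where a <= 2.
rows_ok = sample_df[sample_df$a <= 2, ]

# Comma omitted: the logical vector now selects COLUMNS, not rows.
# TRUE TRUE FALSE FALSE FALSE picks columns a and b and keeps all 5 rows.
cols_by_mistake = sample_df[sample_df$a <= 2]

# subset has no comma to forget.
rows_subset = subset(sample_df, a <= 2)
```

Printing cols_by_mistake shows a five-row data frame with only columns a and b, which is easy to overlook further down a script.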

The case for subset is not all positive; there is a use case where it will produce the wrong answer. Let’s say I want all the rows where b has some computed value and I have chosen to store this computed value in a variable called c.

    c=3
    subset(sample_df, b == c)

I get the surprising output:

    >   a b c
    > 1 1 5 5
    > 5 5 1 1

because the code I have written is actually equivalent to:

    sample_df[sample_df$b == sample_df$c, ]

The problem is caused by the data containing a column having the same name as the variable used to hold the computed value that is tested.
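A small sketch of the clash, and of one way to sidestep it, is given below. The variable name wanted_b is hypothetical; the only point is that it does not match any column name in the data.

```r
sample_df = data.frame(a = 1:5, b = 5:1, c = c(5, 3, 1, 4, 1))

# Clash: inside subset, c refers to the column, not the variable.
c = 3
clash = subset(sample_df, b == c)            # rows 1 and 5

# Hypothetical workaround: use a name that is not a column name.
wanted_b = 3
no_clash = subset(sample_df, b == wanted_b)  # the single row with b == 3
```

This only helps when the column names are known at the time of writing the code, which is the assumption made in the discussion that follows.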

So both subset and array indexing are a source of potential problems. Which of the two is likely to cause the most grief?

Unless the files being processed each potentially contain many columns having unknown (at time of writing the code) names, I think the subset name clash problem is much less likely to occur than the array indexing problems listed earlier.

It’s a shame that assignment to subset is not supported (something to consider for a future release), but reading is the common case and that is what we are interested in.

Yes, subset is restricted to 2-dimensional objects, but most data is 2-dimensional (at least in my world). Again concentrate recommendations on the common case.

When a choice is available, developers should pick the construct that is least likely to cause problems, and trivial mistakes are the most common cause of problems.

Does anybody have a convincing argument why array indexing is to be preferred over subset (not common usage is the reason of last resort for the desperate)?

R recommended usage for professional developers

R is not one of those languages where there is only one way of doing something, the language is blessed/cursed with lots of ways of doing the same thing.

Teaching R to professional developers is easy in the sense that their fluency with other languages will enable them to soak up this small language like a sponge, on the day they learn it. The problems will start a few days after they have been programming in another language and go back to using R; what they learned about R will have become entangled in their general language knowledge and they will be reduced to trial and error, to figure out how things work in R (a common problem I often have with languages I have not used in a while, is remembering whether the if-statement has a then keyword or not).

My Empirical software engineering book uses R and is aimed at professional developers; I have been trying to create a subset of R specifically for professional developers. The aims of this subset are:

- behave like other languages the developer is likely to know,

- not require knowing which way round the convention is in R, e.g., are 2-D arrays indexed in row-column or column-row order,

- reduce the likelihood that developers will play with the language (there is a subset of developers who enjoy exploring the nooks and crannies of a language, creating completely unmaintainable code in the process).

I am running a workshop based on the book in a few weeks and plan to teach them R in 20 minutes (the library will take somewhat longer).

Here are some of the constructs in my subset:

- Use subset to extract rows meeting some condition. Indexing requires remembering to do it in row-column order and weird things happen when commas accidentally get omitted.

- Always call read.csv with the argument as.is=TRUE. Computers now have lots of memory and this factor nonsense needs to be banished to history.

- Try not to use for loops. These will probably contain array/data.frame indexing, which provides ample opportunities for making mistakes; use the *apply or *ply functions (which have the added advantage of causing code to die quickly and horribly when a mistake is made, making it easier to track down problems).

- Use head to remove the last N elements from an object, e.g., head(x, -1) returns x with the last element removed. Indexing with the length minus one is a disaster waiting to happen.
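The constructs listed above can be sketched in a few lines. The CSV text, column names and variable names are made up for illustration; only read.csv, sapply and head are standard R.

```r
# read.csv with as.is=TRUE keeps strings as character vectors, not factors.
csv_text = "name,count\nfoo,1\nbar,2"
counts_df = read.csv(text = csv_text, as.is = TRUE)
name_class = class(counts_df$name)

# sapply in place of a for loop with explicit indexing.
col_max = sapply(counts_df["count"], max)

# head(x, -1) drops the last element, even for a length-one vector.
x = 5
dropped = head(x, -1)             # an empty vector
indexed = x[1:(length(x) - 1)]    # 1:0 is c(1, 0), so this returns 5
```

The last two lines show why indexing with the length minus one is risky: for a vector of length one, 1:0 counts down rather than being empty, so the element that should have been removed is returned.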

It’s a shame that R does not have any mechanism for declaring variables. Experience with other languages has shown that requiring variables to be declared before use catches lots of coding errors (this could be an optional feature so that those who want their ‘freedom’ can have it).

We now know that support for case-sensitive identifiers is a language design flaw, but many in my audience will not have used a language that behaves like this and I have no idea how to help them out.

There are languages in common use whose array bounds start at one. I will introduce R as a member of this club. Not much I can do to help out here, except the general suggestion not to do array indexing.

Suggestions based on readers’ experiences welcome.

2015: the year I started regularly talking to researchers in China

For me 2015 is when I started having regular email discussions with researchers in China; previously I only had regular discussions with Chinese researchers in other countries. Given the number of researchers in China the volume of discussion will likely increase.

I have found researchers in China to be as friendly and helpful as researchers in other countries. The main problem I have experienced is slow or intermittent access to websites based in China.

In the past Chinese researchers I met were very good and there was a consensus that Asians were very good academically. I was told that we in the west were seeing a very skewed sample. Well, recently I have started to meet Chinese researchers who are not that good (or perhaps not even very good at all, I did not have time to find out). Perhaps the flow of Chinese researchers in the west now exceeds the volume of available really good people, or perhaps more of the good people are staying in China.

I am looking forward to learning about the Chinese view of software engineering (whatever it might be). From what I can tell it is a very practical approach, which I am very pleased to see.

Christmas books: 2015

The following is a list of the really interesting books I read this year (only one of which was actually published in 2015, everything has to work its way through several piles and being available online is a shortcut to the front of the queue). The list is short because I did not read many books.

The best way to learn about something is to do it and The Language Construction Kit by Mark Rosenfelder ought to be required reading for all software developers. It is about creating human languages and provides a very practical introduction into how human languages are put together.

The Righteous Mind by Jonathan Haidt. Yet more ammunition for moving Descartes’s writings on philosophy into the same category as astrology and flat Earth theories.

I’m still working my way through Mining of Massive Datasets by Jure Leskovec, Anand Rajaraman and Jeff Ullman.

If you are thinking of learning R, then the best book (and the one I am recommending for a workshop I am running) is still: The R Book by Michael J. Crawley.

There are books piled next to my desk that might get mentioned next year.

I spend a lot more time reading blogs these days and Ben Thompson’s blog Stratechery is definitely my best find of the year.

So you found a bug in my compiler: Whoopee do

The hardest thing about writing a compiler is getting someone to pay you to do it. Having found somebody to pay you to write, update or maintain a compiler, why would you want to fix a fault reported by some unrelated random Joe user?

If Random Joe is a customer of a commercial compiler company he can expect a decent response to bug reports, because paying customers are hard to find and companies want to hold onto them.

If Random Joe is a user of an open source compiler, then what incentive does anybody paid to work on the compiler have to do anything about the reported problem?

The most obvious reason is reputation, developers want to feel that they are creating a high quality piece of software. Given that there are not enough resources to spend time investigating all reported problems (many are duplicates or minor issues), it is necessary to prioritize. When reputation is a major factor, the amount of publicity attached to a problem report has a big impact on the priority assigned to that report.

When compiler fuzzers started to attract a lot of attention, a few years ago, the teams working on gcc and llvm were quick to react and fix many of the reported bugs (Csmith is the fuzzer that led the way).

These days finding bugs in compilers using fuzzing is old news and I suspect that the teams working on gcc and llvm don’t need to bother too much about new academic papers claiming that the new XYZ technique or tool finds lots of compiler bugs. In fact I would suggest that compiler developers stop responding to researchers working toward publishing papers on these techniques/tools; responses from compiler maintainers are becoming a metric for measuring the performance of techniques/tools, so responding just encourages the trolls.

Just because you have been using gcc or llvm since you wore short trousers does not mean they owe you anything. If you find a bug in the compiler and you care, then fix it or donate some money so others can make a living working on these compilers (I don’t have any commercial connection or interest in gcc or llvm). At the very least, don’t complain.

Hackathon New Year’s resolution

The problem with being a regular hackathon attendee is needing a continual stream of interesting ideas for something to build; the idea has to be sold to others (working in a team of one is not what hackathons are about), be capable of being implemented in 24 hours (if only in the flimsiest of forms) and make use of something from one of the sponsors (they are paying for the food and drink and it would be rude to ignore them).

I think the best approach for selecting something to build is to have the idea and mold one of the supplied data sets/APIs/sponsor interests to fit it; looking at what is provided and trying to come up with something to build is just too hard (every now and again an interesting data set or problem pops up, but this is not a regular occurrence).

My resolution for next year is to only work on Wow projects at hackathons. This means that I will not be going to as many hackathons (because I cannot think of a Wow idea to build) and will be returning early from many that I attend (because my Wow idea gets shot down {a frustratingly regular occurrence} and nobody else manages to sell me their idea, or the data/API turns out to be seriously deficient {I’m getting better at spotting the likelihood of this happening before attending}).

Machine learning in SE research is a bigger train wreck than I imagined

I am at the CREST Workshop on Predictive Modelling for Software Engineering this week.

Magne Jørgensen, who virtually single-handedly continues to move software cost estimation research forward, kicked off proceedings. Unfortunately he is not a natural speaker and I think most people did not follow the points he was trying to get over; don’t panic, read his papers.

In the afternoon I learned that use of machine learning in software engineering research is a bigger train wreck than I had realised.

Machine learning is great for situations where you have data from an application domain that you don’t know anything about. Let’s say you want to do fault prediction but don’t have any practical experience of software engineering (because you are an academic who does not write much code); what do you do? Well, you could take some source code measurements (usually on a per-file basis, which is a joke given that many of the metrics often used only have meaning on a per-function basis, e.g., Halstead and cyclomatic complexity) and information on the number of faults reported in each of these files, and throw it all into a machine learner to figure out the patterns and build a predictor (e.g., to predict which files are most likely to contain faults).

There are various ways of measuring the accuracy of the predictions made by a model and there is a growing industry of researchers devoted to publishing papers showing that their model does a better job at prediction than anything else that has been published (yes, they really do argue over a percent or two; use of confidence bounds is too technical for them and would kill their goose).
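To see why a difference of a percent or two means little without confidence bounds, here is a rough sketch using base R’s binom.test. The counts are invented for illustration (two hypothetical models, each scored on 500 files), and treating the two accuracies as independent binomial proportions is a simplifying assumption, not a recommended analysis.

```r
# Hypothetical numbers: two models, each evaluated on 500 files.
n_files = 500
correct_a = 405   # model A: 81% of files classified correctly
correct_b = 415   # model B: 83% of files classified correctly

# Exact binomial 95% confidence intervals for each accuracy.
ci_a = binom.test(correct_a, n_files)$conf.int
ci_b = binom.test(correct_b, n_files)$conf.int

# TRUE when model A's upper bound exceeds model B's lower bound,
# i.e. the two intervals overlap.
overlap = ci_a[2] > ci_b[1]
```

With samples of this size each interval is several percentage points wide, so a two-point gap between models sits comfortably inside the noise.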

I long ago learned to ignore papers on machine learning in software engineering. Yes, sooner or later somebody will do something interesting and I will miss it, but will have retained my sanity.

Today I learned that many researchers have been using machine learning “out of the box”, that is, using whatever default settings the code comes with. How did I learn this? Well, one of the speakers talked about using R’s caret package to tune the options available in many machine learners to build models with improved predictive performance. Some slides showed that the performance of caret-tuned models was often substantially better than that of the untuned models, and many people in the room were aghast; “If true, this means that all existing papers [based on machine learning] are dead” (because somebody will now come along and build a better model using caret; cannot recall whether “dead” or some other term was used, but you get the idea), “I use the defaults because of concerns about breaking the code by using inappropriate options” (obviously somebody untroubled by knowledge of how machine learning works).

I think that use of machine learning, for the purpose of prediction (using it to build models to improve understanding is ok), in software engineering research should be banned. Of course there are too many clueless researchers who need the crutch of machine learning to generate results that can be included in papers that stand some chance of being published.

The Empirical Investigation of Perspective-Based Reading: Data analysis

Questions about the best way to perform code reviews go back almost to the start of software development. The perspective-based reading approach focuses reviewers’ attention on the needs of the users of the document/code, e.g., tester, user, designer, etc, and “The Empirical Investigation of Perspective-Based Reading” is probably the most widely cited paper on the subject. This paper is so widely cited I decided it was worth taking the time to email the authors of a 20 year old paper asking if the original data was available and could I have a copy to use in a book I am working on. Filippo Lanubile’s reply included two files containing the data (original files, converted files+code)!

How do you compare the performance of different approaches to finding problems in documents/code? Start with experienced subjects, to minimize learning effects during the experiment (doing this also makes any interesting results an easier sell; professional developers know how unrealistic student performance tends to be); the performance of subjects using what they know has to be measured first, learning another technique first would contaminate any subsequent performance measurements.

In this study subjects reviewed four documents over two days; the documents were two NASA specifications and two generic domain specifications (bank ATM and parking garage); the documents were seeded with faults. Subjects were split into two groups and read documents in the following sequences:

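|       | Group 1 | Group 2 |
|-------|---------|---------|
| Day 1 | NASA A | NASA B |
|       | ATM | PG |
| Day 2 | Perspective-based reading training | Perspective-based reading training |
|       | PG | ATM |
|       | NASA B | NASA A |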

The data contains repeated measurements of the same subject (i.e., their performance on different documents using one of two techniques), so mixed-model regression has to be used to build a model.
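As a rough sketch of what a mixed-model fit for repeated measurements looks like in R, the following uses lme from the nlme package (which ships with standard R installations) on simulated stand-in data. The column names, the number of subjects and the simulated values are all assumptions made for illustration; this is not the study’s data or the model I actually fitted.

```r
library(nlme)

set.seed(42)
# Simulated stand-in data: 12 subjects, each reviewing 4 documents.
subjects = 12
review = data.frame(
  subject   = factor(rep(1:subjects, each = 4)),
  doc_kind  = rep(c("NASA", "generic", "generic", "NASA"), times = subjects),
  technique = rep(c("usual", "usual", "PBR", "PBR"), times = subjects)
)
# Per-subject ability plus noise; no technique effect is built in.
ability = rnorm(subjects, mean = 50, sd = 10)
review$pct_detected = ability[as.integer(review$subject)] +
                      rnorm(nrow(review), sd = 5)

# A random intercept per subject accounts for the repeated measurements.
pbr_mod = lme(pct_detected ~ technique + doc_kind,
              random = ~ 1 | subject, data = review)
fixed_effects = fixef(pbr_mod)
```

Calling summary(pbr_mod) lists the fixed-effect estimates along with the between-subject variation that an ordinary regression would wrongly fold into the residual.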

I built two models, one for the number of faults detected and the other for the number of false positive faults flagged (i.e., something that was not a fault flagged as a fault).

The two significant predictors of percentage of known faults detected were kind of document (higher percentage detected in the NASA documents) and order of document processing on each day (higher percentage reported on the first document; switching document kind ordering across groups would have enabled more detail to be teased out).

The false positive model was more complicated, predictors included number of pages reviewed (i.e., more pages reviewed more false positive reports; no surprise here), perspective-based reading technique used (this also included an interaction with number of years of experience) and kind of document.

So use of perspective-based reading did not make a noticeable difference (the false positive impact was in amongst other factors). Possible reasons that come to mind include subjects not being given enough time to switch reading techniques (people need time to change established habits) and some of the other reading techniques used may have been better/worse than perspective-based reading and overall averaged out to no difference.

This paper is worth reading for the discussion of the issues involved in trying to control factors that may have a noticeable impact on experimental results and the practical issues of using professional developers as subjects (the authors clearly put a lot of effort into doing things right).

Please let me know if you build any interesting model using the data.

Peak memory transfer rate is best SPECint performance predictor

The idea that cpu clock rate is the main driver of system performance on the SPEC cpu benchmark is probably well entrenched in developers’ psyche. Common knowledge, or folklore, is slow to change. The apparently relentless increase in cpu clock rate, a side-effect of Moore’s law, stopped over 10 years ago, but many developers still behave as if it is still happening today.

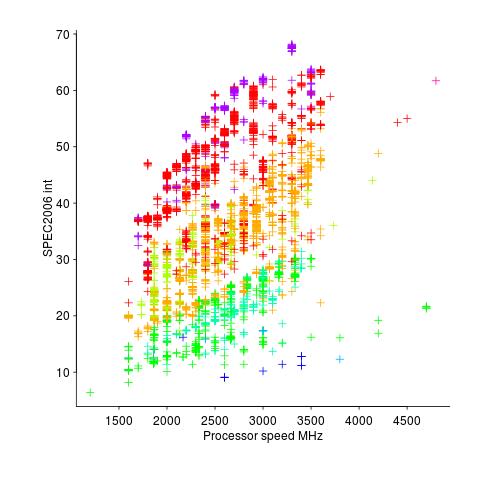

The plot below shows the SPEC cpu integer performance of 4,332 systems running at various clock rates; the colors denote the different peak memory transfer rates of the memory chips in these systems (code and data).

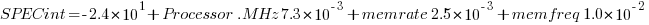

Fitting a regression model to this data, we find that the following equation predicts 80% of the variance (more complicated models fit better, but let’s keep it simple):

where: Processor.MHz is the processor clock rate, memrate the peak memory transfer rate and memfreq the frequency at which the memory is clocked.

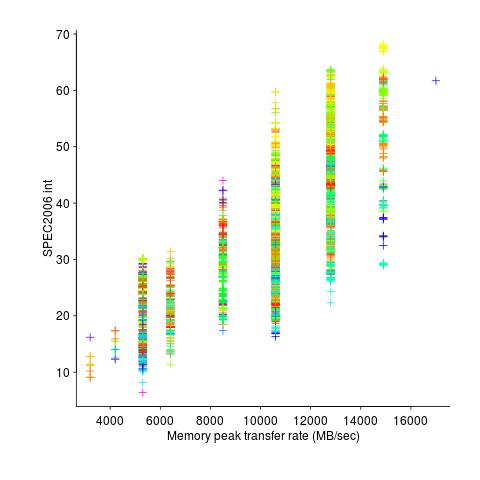

Analysing the relative contribution of each of the three explanatory variables turns up the surprising answer that peak memory transfer rate explains significantly more of the variation in the data than processor clock rate (by around a factor of five).

If you are in the market for a new computer and are interested in relative performance, you can obtain a much more accurate estimate of performance by using the peak memory transfer rate of the DIMMs contained in the various systems you are considering. Good luck finding out these numbers; I bet the first response to your question will be “What is a DIMM?”

Developer focus on cpu clock rate is no accident; there is one dominant supplier who is willing to spend billions on marketing, with programs such as Intel Inside and rebates to manufacturers for using their products. There is no memory chip supplier enjoying the dominance that Intel has in cpus, hence nobody is willing to spend the billions in marketing needed to create customer awareness of the importance of memory performance to their computing experience.

The plot below shows the SPEC cpu integer performance against peak memory transfer rates; the colors denote the different cpu clock rates in these systems.
