Array bound checking in C: the early products

Tools to support array bound checking in C have been around for almost as long as the language itself.

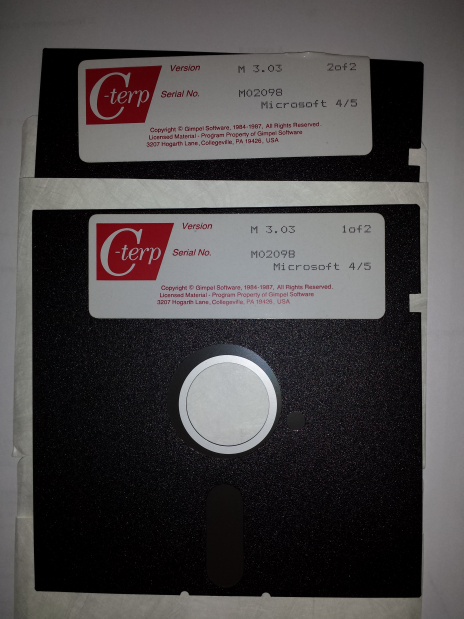

I recently came across product disks for C-terp; at 360k per 5 1/4 inch floppy, that is a C compiler, library and interpreter shipped on 720k of storage (the 3.5 inch floppies with 720k and then 1.44M came along later; Microsoft 4/5 is the version of MS-DOS supported). This was Gimpel Software’s first product in 1984.

The Model Implementation C checker work was done in the late 1980s and validated in 1991.

Purify from Pure Software was a well-known (at the time) checking tool in the Unix world, first available in 1991. There were a few other vendors producing tools on the back of Purify’s success.

Richard Jones (no relation) added bounds checking to gcc for his PhD. This work was done in 1995.

As a fan of bounds checking (finding bugs early saves lots of time) I was always on the lookout for C checking tools. I would be interested to hear about any bounds checking tools that predated Gimpel’s C-terp; as far as I know it was the first such commercially available tool.

Hedonism: The future economics of compiler development

In the past developers paid for compilers, then gcc came along (followed by llvm) and now a few companies pay money to have a bunch of people maintain/enhance/support a new cpu; developers get a free compiler. What will happen once the number of companies paying money shrinks below some critical value?

The current social structure has an authority figure (ISO committees for C and C++, individuals or companies for other languages) specifying the language, gcc/llvm people implementing the specification and everybody else going along for the ride.

Looking after an industrial strength compiler is hard work and requires lots of know-how; hobbyists have little chance of competing against those paid to work full time. But as the number of companies paying for support declines, the number of people working full-time on the compilers will shrink. Compiler support will become a part-time job or hobby.

What are the incentives for a bunch of people to get together and spend their own time maintaining a compiler? One very powerful incentive is being able to decide what new language features the compiler will support. Why spend several years going to ISO meetings arguing with everybody about whether the next version of the standard should support your beloved language construct? It’s quicker and easier to add it directly to the compiler (if somebody else wants their construct implemented, let them write the code).

The C++ committee is populated by bored consultants looking for an outlet for their creative urges (the production of 1,600 page documents and six extension projects is driven by the 100+ people who attend meetings; a lot fewer people would produce a simpler C++). What is the incentive for those 100+ (many highly skilled) people to attend meetings, if the compilers are not going to support the specification they produce? Cut out the middle man (ISO) and organize to support direct implementation in the compiler (one downside is not getting to go to Hawaii).

The future of compiler development is groups of like-minded hedonists (in the sense of agreeing what features should be in a language) supporting a compiler for the bragging rights of having created/designed the language features used by hundreds of thousands of developers.

Average maintenance/development cost ratio is less than one

Part of the (incorrect) folklore of software engineering is that more money is spent on maintaining an application than was spent on the original development.

Bossavit’s The Leprechauns of Software Engineering does an excellent job of showing that the probable source of this folklore did not base their analysis on any cost data (I’m not going to add to an already unwarranted number of citations by listing the source).

I have some data, actually two data sets, each measuring a different part of the problem, i.e., 1) system lifetime and 2) maintenance/development costs. Both sets of measurements apply to IBM mainframe software, so a degree of relatedness can be claimed.

Analyzing this data suggests that the average maintenance/development cost ratio, for IBM applications, is around 0.81 (code+data). The data also provides a possible explanation for the existing folklore in terms of survivorship bias, i.e., most applications do not survive very long (and have a maintenance/development cost ratio much less than one), while a few survive a long time (and have a maintenance/development cost ratio much greater than one).

At any moment around 79% of applications currently being maintained will have a maintenance/development cost ratio greater than one, 68% a ratio greater than two and 51% a ratio greater than five.
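The survivorship effect can be illustrated with a toy calculation. This is a minimal sketch using made-up numbers, not the IBM data: the cohort sizes, lifetimes and the 20% annual maintenance cost are all hypothetical, and the ratio computed is the eventual lifetime ratio of each application.

```python
from fractions import Fraction

# Hypothetical cohort started every year: (number of apps, lifetime in years).
COHORT = [(9, 1), (1, 20)]
# Hypothetical: each year of maintenance costs 20% of the development cost.
ANNUAL_MAINT_FRACTION = Fraction(1, 5)

def lifetime_ratio(years):
    """Maintenance/development cost ratio over an app's whole lifetime."""
    return ANNUAL_MAINT_FRACTION * years

def mean_ratio_all_apps(cohort):
    """Average lifetime ratio over every app ever started."""
    total = sum(n for n, _ in cohort)
    return sum(n * lifetime_ratio(y) for n, y in cohort) / total

def share_alive_with_ratio_above(cohort, threshold):
    """Share of apps alive at a given moment whose lifetime ratio exceeds threshold."""
    # In steady state, the number of apps of one kind alive at any moment
    # is (number started per year) * (lifetime in years).
    alive = [(n * y, lifetime_ratio(y)) for n, y in cohort]
    total_alive = sum(count for count, _ in alive)
    above = sum(count for count, r in alive if r > threshold)
    return Fraction(above, total_alive)

print(float(mean_ratio_all_apps(COHORT)))              # average over all apps
print(float(share_alive_with_ratio_above(COHORT, 1)))  # share of apps in maintenance
```

With these made-up numbers the average over all applications is below one, yet most of the applications being maintained at any moment are the long-lived ones whose ratio is well above one.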

Another possible cause of incorrect analysis is the fact we are dealing with ratios; the harmonic mean has to be used, not the arithmetic mean.
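A small sketch of how far apart the two means can be; the three ratios are hypothetical values chosen for illustration, not taken from the data sets.

```python
from statistics import harmonic_mean, mean

# Hypothetical maintenance/development cost ratios for three applications.
ratios = [0.25, 0.5, 4.0]

arithmetic = mean(ratios)
harmonic = harmonic_mean(ratios)

print(f"arithmetic mean: {arithmetic:.3f}")
print(f"harmonic mean:   {harmonic:.3f}")
```

The arithmetic mean is pulled above one by the single long-lived application, while the harmonic mean stays below one.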

Existing industry practice of not investing in creating maintainable software probably has a better cost/benefit than the alternative because most software is not maintained for very long.

Early compiler history

Who wrote the early compilers, when did they do it, and what were the languages compiled?

Answering these questions requires defining what we mean by ‘compiler’ and deciding what counts as a language.

Donald Knuth does a masterful job of covering the early development of programming languages. People were writing programs in high level languages well before the 1950s, and Konrad Zuse is the forgotten pioneer from the 1940s (it did not help that Germany had just lost a war and people were not inclined to give Germans much public credit).

What is the distinction between a compiler and an assembler? Some early ‘high-level’ languages look distinctly low-level from our much later perspective; some assemblers support fancy subroutine and macro facilities that give them a high-level feel.

Glennie’s Autocode is from 1952 and depending on where the compiler/assembler line is drawn might be considered a contender for the first compiler. The team led by Grace Hopper produced A-0, a fancy link-loader, in 1952; they called it a compiler, but today we would not consider it to be one.

Talking of Grace Hopper, from a biography of her technical contributions she sounds like a person who could be technical but chose to be management.

Fortran and Cobol hog the limelight of early compiler history.

Work on the first Fortran compiler started in the summer of 1954 and was completed 2.5 years later, say the beginning of 1957. A very solid claim to being the first compiler (assuming Autocode and A-0 are not considered to be compilers).

Compilers were created for Algol 58 in 1958 (a long dead language implemented in Germany with the war still fresh in people’s minds; not a recipe for wide publicity) and 1960 (perhaps the first one-man-band implemented compiler; written by Knuth, an American, but still a long dead language). The 1958 implementation sounds like it has a good claim to being the second compiler after the Fortran compiler.

In December 1960 there were at least two Cobol compilers in existence (one of which was created by a team containing Grace Hopper in a management capacity). I think the glory attached to early Cobol compiler work is the result of having a female lead involved in the development team of one of these compilers. Why isn’t the other Cobol compiler ever mentioned? Why is the Algol 58 compiler implementation that occurred before the Cobol compiler never mentioned?

What were the early books on compiler writing?

“Algol 60 Implementation” by Russell and Randell (from 1964) still appears surprisingly modern.

Principles of Compiler Design, the “Dragon book”, came out in 1977 and was about the only easily obtainable compiler book for many years. The first half dealt with the theory of parser generators (the audience was CS undergraduates and parser generation used to be a very trendy topic with umpteen PhD theses written about it); the book morphed into Compilers: Principles, Techniques, and Tools. Old-timers will reminisce about how their copy of the book has a green dragon on the front, rather than the red dragon on later, trendier (with less parser theory and more code generation) editions.

Collecting all of the publicly available source code

Collecting together all the software ever written is impossible, but collecting everything that can be found would create something very useful (since I have always been interested in source code analysis, my opinion is biased).

Collecting together source available on the internet is easy. Creating a copy of Github is one of the first actions of anybody with ambitions of collecting lots of source (back in the day it was collecting shareware 5 1/4″, then 3 1/2″ floppy discs, then CDs and then DVDs).

The very hard to collect items “exist” on line-printer paper, punched cards, rolls of punched tape and mag tape. These are the items where serious collectors should concentrate their efforts (NASA lost a lot of Voyager data when magnetic particles fell off the plastic strips of tape reels in storage, because the adhesive had degraded).

The Internet Archive is doing a great job of collecting and making available ‘antique’ source code (old computer games is a popular genre; other collectors concentrate on being able to execute ROM images of games), but they are primarily US based.

Collecting the World’s source code requires collection organizations in every country. Collecting old code is a people intensive business and requires lots of local knowledge.

A new source code collection organization has recently been set up in France; Software Heritage currently aims to collect all software that is publicly available. So far they have done what everybody else does, made a copy of Github and a couple of the well-known source repos.

I hope this organization is not just the French government throwing money at another one-upmanship US vs. France project.

If those involved are serious about collecting source code, rather than enjoying the perks of a tax-funded show project, they will realise that lots of French specific source code is dotted around the country needing to be collected now (before the media decomposes and those who know how to read it die).

Gentleman scientists in software engineering

The Royal Society was formed in 1660 as a “College for the Promoting of Physico-Mathematical Experimental Learning”. A lot of very important research was done by members of this society, who were independently wealthy or held a university post.

For a while now, I have thought that the only way software engineering is going to advance to become a real engineering/scientific discipline is via gentleman scientists (unless industry really does need more clueless button pushers).

I was talking at the LondonR meeting on Tuesday (slides) and got chatting with familiar faces from hackathons. It seems that they had also had ideas for researching particular problems in software engineering, and liked the idea of a group of Gentleman scientists.

The problem we have is that none of us wants to do the organizing (a common problem). We must be able to do better than meeting in a pub.

I think the main qualifications for being a member of the group of Gentleman scientists for the “Promoting of Software Experimental Learning” would be something like:

- enjoying the pleasure of gaining knowledge about how the world works, i.e., no flights of fancy,

- interested in finding answers to questions whose answers are not yet known, i.e., doing real research, not personal learning,

- have the funds to support what you do, i.e., you want funding, you find it,

- being proficient in a necessary skill, i.e., you cannot be a beginner in all the required skills.

If the Danish Gentlemen scientists can send rockets into space, I’m sure the inhabitants of London and surrounds (no nationality restrictions here) can make major discoveries in software engineering (nobody has really found any yet, they are all waiting to be found).

Doing software research is not expensive, in monetary terms. It requires that those involved know something about real-life software issues and have the time and inclination to research possible solutions. People in industry are ideally placed to do the research. There are academic research groups doing interesting work in this area (they are in the minority). There are no groups we could join that are within easy traveling distance of London’ish based people (I would claim none in the UK).

The rationale for having a group of like-minded people meeting together includes: it provides a structure and focus, sharing ideas is interesting and helps refine them, it’s an enjoyable night out, and a network is good for sharing/finding resources.

What might be the outputs of this group/network/society/asylum? Blog posts, talks, reports, books: the intent is to produce stuff that practicing software developers will find useful.

When the Royal Society started, Latin was the language of scholars. Its motto ‘Nullius in verba’ catches the sentiment, but ‘take nobody’s word for it’ does not sound catchy. Something to work on.

I will keep readers posted on any progress (e.g., finding a venue and organizing a night). If any reader knows of an existing group like this, please let me know (not looking to build an empire).

Economics chapter added to “Empirical software engineering using R”

The Economics chapter of my Empirical software engineering book has been added to the draft pdf (download here).

This is a slim chapter; it might grow a bit, but I suspect not by a huge amount. Reasons include lots of interesting data being confidential and me not having spent a lot of time on this topic over the years (so my stash of accumulated data is tiny). Also, a significant chunk of the economics data I have is used to discuss issues in the Ecosystems and Projects chapters; perhaps some of this material will migrate back once these chapters are finalized.

You might argue that Economics is more important than Human cognitive characteristics and should have appeared before it (in chapter order). I would argue that hedonism by those involved in producing software is the important factor that pushes (financial) economics into second place (still waiting for data to argue my case in print).

Some of the cognitive characteristics data I have been waiting for arrived, and has been added to this chapter (some still to be added).

As always, if you know of any interesting software engineering data, please tell me.

I am after a front cover. A woodcut of alchemists concocting a potion appeals, perhaps with various software references discreetly included, or astronomy related (the obvious candidate has already been used). The related modern stuff I have seen does not appeal. Suggestions welcome.

Ecosystems next.

Happy 30th birthday to GCC

Thirty years ago today Richard Stallman announced the availability of a beta version of gcc on the mod.compilers newsgroup.

Everybody and his dog was writing C compilers in the late 1980s and early 1990s (a C compiler validation suite vendor once told me they had sold over 150 copies; a compiler vendor has to be serious to fork out around $10,000 for a validation suite). Did gcc become the dominant open source compiler because one compiler would inevitably become dominant, or was there some collection of factors that gave gcc a significant advantage?

I think gcc’s market dominance was driven by two environmental factors, with some help from a technical compiler implementation decision.

The technical implementation decision was the use of RTL as the optimization+code generation strategy. Jack Davidson’s 1981 PhD thesis (and much later the LCC book) describe the gory details. The code generators for nearly every other C compiler were closely tied to the machine being targeted (because the implementers were focused on getting a job done, not producing a portable compiler system). Had they been so inclined, Davidson and Christopher Fraser could have been the authors of the dominant C compiler.

The first environment factor was the creation of a support ecosystem around gcc. The glue that nourished this ecosystem was the money made writing code generators for the never ending supply of new cpus that companies were creating (that needed a C compiler). In the beginning Cygnus Solutions were the face of gcc+tools; Michael Tiemann, a bright affable young guy, once told me that he could not figure out why companies were throwing money at them and that perhaps it was because he was so tall. Richard Stallman was not the easiest person to get along with and was probably somebody you would try to avoid meeting (I don’t know if he has mellowed). If Cygnus had gone with a different compiler (they had created 175 host/target combinations by 1999), gcc would be as well-known today as Hurd.

Yes, people writing Masters and PhD theses were using gcc as the scaffolding for their fancy new optimization techniques (e.g., here, here and here), but this work essentially played the role of an R&D group trying to figure out where effort ought to be invested writing production code.

Sun’s decision to unbundle the development environment (i.e., stop shipping a C compiler with every system) caused some developers to switch to another compiler, some choosing gcc.

The second environment factor was the huge leap in available memory on developer machines in the 1990s. Compiler vendors cannot ship compilers that do fancy optimization if developers don’t have computers with enough memory to hold the optimization information (many, many megabytes). Until developer machines contained lots of memory, a one-man band could build a compiler producing code that was essentially as good as everybody else’s. An open source market leader could not emerge until the man+dog compilers could be clearly seen to be inferior.

During the 1990s the amount of memory likely to be available in developers’ computers grew dramatically, allowing gcc to support more and more optimizations (donated by a myriad of people targeting some aspect of code generation that they found interesting). Code generation improved dramatically and man+dog compilers became obviously second/third rate.

Would things be different today if Linus Torvalds had not selected gcc? If Linus had chosen a compiler licensed under a more liberal license than copyleft, things might have turned out very differently. LLVM started life in 2003 and one of my predictions for 2009 was its demise in the next few years; I failed to see the importance of licensing to Apple (who essentially funded its development).

Eventually, success.

With success came new existential threats, in particular death by a thousand forks.

A serious fork occurred in 1997. Stallman was clogging up the works; fortunately he saw the writing on the wall and in 1999 stepped aside.

Money is what holds together the major development teams supporting gcc and llvm. What happens when customers wanting support for new back-ends dry up, what happens when major companies stop funding development? Do we start seeing adverts during compilation? Chris Lattner, the driving force behind llvm, recently moved to Tesla; will it turn out that his continuing management was as integral to the continuing success of llvm as getting rid of Stallman was to the continuing success of gcc?

Will a single mainline version of gcc still be the dominant compiler in another 30 years’ time?

Time will tell.

Learning from some legal decisions

The British and Irish Legal Information Institute provides “Access to Freely Available British and Irish Public Legal Information”. Searching the England and Wales High Court (Technology and Construction Court) Decisions throws up some interesting reading (when searching on software).

For those who have never seen a decent sized project go wrong from the inside, DE BEERS UK LIMITED (Formerly: THE DIAMOND TRADING COMPANY LIMITED) vs. ATOS ORIGIN IT SERVICES UK LIMITED provides a well written example. De Beers contracted Atos to write some software. The development of the software did not go well. Were the original requirements/spec underdone or were subsequent personnel not up to the job? Difficult to tell from the Decision, as is the reason Atos thought they had a chance of winning a court case.

SAP UK LIMITED vs. DIAGEO GREAT BRITAIN LIMITED was a licensing dispute, or more accurately an example of why it is important to check what your third-party software gets up to. Diageo had signed a licensing agreement with SAP and 5,800 Diageo users had used a Salesforce.com app which, unknown to them, made use of SAP. The end result was a bill for £55 million, which Diageo had not been expecting.

There are probably more interesting cases to learn from, but I am supposed to be writing a book in my ‘spare’ time.

Uncovering the undefined behaviors

I think that all programming languages contain some constructs that have undefined behavior.

The C Standard explicitly lists various constructs as having undefined behavior. It also specifies that: “Undefined behavior is otherwise indicated in this International Standard by the words ‘undefined behavior’ or by the omission of any explicit definition of behavior”; the second half of the sentence refers to what might be called implicit undefined behavior. Implicit undefined behavior can be subdivided into intentional and unintentional. Intentional undefined behavior applies to constructs that the committee considered and decided (and continues to decide) to say nothing about (e.g., question 19), while unintentional undefined behavior applies to constructs that the committee did not explicitly consider (when discovered, these often end up as defect reports, which are sometimes resolved as intentionally undefined behavior).

Fans of some languages claim that ‘their’ language does not contain any undefined behaviors.

Ada does not explicitly specify any construct as having undefined behavior, but it does specify that some constructs generate a bounded error; a rose by any other name…

I sometimes bump into language inventors claiming that ‘their’ language is fully specified, i.e., does not contain any undefined behaviors. My first question to them, about the behavior of division involving negative values, invariably requires me to explain that there are two possible ways of doing it (ignorance is bliss when fully specifying a language). The invariable answer is that the behavior is whatever the underlying implementation does (which is often written in C). In other words, they have imported all the undefined behaviors of the implementation language.
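The two ways of dividing negative values are rounding the quotient toward negative infinity (flooring) and rounding it toward zero (truncation). A minimal sketch, with hypothetical helper names `floor_div` and `trunc_div`; Python’s `//` operator floors, while C99 specifies truncation toward zero for integer division.

```python
def floor_div(a, b):
    """Quotient rounded toward negative infinity (what Python's // does)."""
    return a // b

def trunc_div(a, b):
    """Quotient rounded toward zero (what C99 specifies for integer /)."""
    q = abs(a) // abs(b)
    return q if (a < 0) == (b < 0) else -q

for a, b in [(7, 2), (-7, 2), (7, -2), (-7, -2)]:
    fq, tq = floor_div(a, b), trunc_div(a, b)
    # In both schemes the remainder r satisfies a == q*b + r.
    print(a, b, "floor:", fq, a - fq * b, "trunc:", tq, a - tq * b)
```

The two schemes agree when both operands have the same sign and differ, in both quotient and remainder, when the signs differ and the division is inexact.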

Follow-up questions include: what is the order of expression evaluation (e.g., left-to-right, right-to-left, inside out…), what is the order of function argument evaluation (often driven by the direction of stack growth), what is the order of initialization and other order related questions that come to mind. Their fully specified language quickly turns out to be a sham.
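Evaluation order only becomes visible when operands have side effects. The sketch below uses a hypothetical `traced` helper to record the order in which operands and arguments are evaluated. Python’s language reference specifies left-to-right evaluation, so the recorded order is fixed here; C leaves the order of function argument evaluation unspecified, so the equivalent C program could record either order.

```python
log = []

def traced(name, value):
    """Record that this operand was evaluated, then return its value."""
    log.append(name)
    return value

def combine(x, y):
    return x - y

# Operands of a binary operator.
log.clear()
total = traced("left", 1) + traced("right", 2)
operand_order = list(log)

# Arguments of a function call.
log.clear()
result = combine(traced("first", 10), traced("second", 3))
argument_order = list(log)

print(operand_order, argument_order)
```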

A recent post by John Regehr talks about Gödel’s incompleteness Theorem as a source of undefined behavior. My understanding is that the underlying argument is built on non-termination. How is it possible to tell the difference between non-termination and lasting longer than the age of the universe? In itself I don’t think this theorem is a source of undefined behavior; more enlightenment welcome.
