Almost all published analysis of fault data is worthless

Faults are the subject of more published papers than any other subject in empirical software engineering. Unfortunately, over 98.5% of these fault related papers are at best worthless and at worst harmful, i.e., make recommendations whose impact may increase the number of faults.

The reason most fault papers are worthless is the data they use and the data they don’t use.

The data used

Data on faults in programs used to be hard to obtain; a friend in a company that maintained a fault database was needed. Open source changed this. Now public fault tracking systems are available containing tens, or even hundreds, of thousands of reported faults. Anybody can report a fault, and unfortunately anybody does; there is a lot of noise mixed in with the signal. One study found 43% of reported faults were enhancement requests, the same underlying fault is reported multiple times (most eventually get marked as duplicate, at the cost of much wasted time) and …

Fault tracking systems don’t always contain all known faults. One study found that the really important faults are handled via email discussion lists, i.e., they are important enough to require involving people directly.

Other problems with fault data include: biased reporting of problems, reported problems caused by a fault in a third-party library, and reported problems being intermittent or not reproducible.

Data cleaning is the essential first step that many of those who analyse fault data fail to perform.
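A minimal sketch of what a first cleaning pass might look like. The `Report` record and its field names are hypothetical (real trackers expose different fields), and the filter only covers the noise sources listed above: enhancement requests, duplicates, non-reproducible reports and third-party faults.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Report:
    # Hypothetical fields; a real fault tracker will name these differently.
    report_id: int
    kind: str                    # "fault" or "enhancement"
    duplicate_of: Optional[int]  # id of the original report, if a duplicate
    reproducible: bool
    third_party: bool            # root cause is in a third-party library


def clean(reports):
    """Keep original, reproducible faults in the project's own code."""
    return [r for r in reports
            if r.kind == "fault"
            and r.duplicate_of is None
            and r.reproducible
            and not r.third_party]


reports = [
    Report(1, "fault", None, True, False),
    Report(2, "enhancement", None, True, False),  # not a fault
    Report(3, "fault", 1, True, False),           # duplicate of report 1
    Report(4, "fault", None, False, False),       # not reproducible
    Report(5, "fault", None, True, True),         # third-party library
    Report(6, "fault", None, True, False),
]

kept = clean(reports)
print([r.report_id for r in kept])
```

Only two of the six sample reports survive, which gives a feel for how much an uncleaned count can overstate the number of distinct faults.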

The data not used

Users cause faults, i.e., if nobody ever used the software, no faults would be reported. This statement is as accurate as saying: “Source code causes faults”.

Reported faults are the result of software being used with a set of inputs that causes the execution of some sequence of tokens in the source code to have an effect that was not intended.

The number and kind of reported faults in a program depend on the variety of the input and the number of faults in the code.
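A small worked example may make the point concrete. The usage profiles, input categories and percentages below are all invented for illustration; the only thing held fixed is the code, which contains the same two faults in every case.

```python
def expected_reports(usage_percent, faulty_inputs, uses):
    """Expected number of fault experiences for one usage profile.

    usage_percent maps each kind of input to the percentage of uses
    that supply it; faulty_inputs is the set of input kinds that
    trigger a fault in the code.
    """
    failing_percent = sum(pct for kind, pct in usage_percent.items()
                          if kind in faulty_inputs)
    return uses * failing_percent / 100


# The same code in all three cases: two faults exist (invented example).
FAULTY_INPUTS = {"large file", "empty file"}

# Three hypothetical user populations, as percentages of uses.
cautious_users = {"small file": 90, "large file": 9, "empty file": 1}
varied_users = {"small file": 50, "large file": 30, "empty file": 20}
narrow_users = {"small file": 100, "large file": 0, "empty file": 0}

for name, usage in [("cautious", cautious_users),
                    ("varied", varied_users),
                    ("narrow", narrow_users)]:
    print(name, expected_reports(usage, FAULTY_INPUTS, uses=1000))
```

The narrow population experiences no faults at all, even though the faults are still in the code; an analysis that only counts reports cannot tell this program apart from a fault-free one.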

Most fault related studies do not include any user related usage data in their analysis (the few that do really stand out from the crowd), which can lead to very wrong conclusions being drawn.

User usage data is very hard to obtain, but without it many kinds of evidence-based fault analysis are doomed to fail (giving completely misleading answers).

The first compiler was implemented in itself

I have been reading about the world’s first actual compiler (i.e., not a paper exercise), described in Corrado Böhm’s PhD thesis (French version from 1954, an English translation by Peter Sestoft). The thesis, submitted in 1951 to the Federal Technical University in Zurich, takes some untangling; when you are inventing a new field, ideas tend to be expressed using existing concepts and terminology, e.g., computer peripherals are called organs and registers are denoted by the symbol  .


Böhm had worked with Konrad Zuse and must have known about his language, Plankalkül. The language also has an APL feel to it (but without the vector operations).

Böhm’s language does not have a name; his thesis is really about translating mathematical expressions to machine code. The expressions are organised by what we today call basic blocks (Böhm calls them groups). The compiler for the unnamed language (along with a loader) is written in itself; a Java implementation is being worked on.

Böhm’s work is discussed in Donald Knuth’s early development of programming languages, but there is nothing like reading the actual work (if only in translation) to get a feel for it.

Update (3 days later): Correspondence with Donald Knuth.

Update (3 days later): A January 1949 memo from Haskell Curry (he of Curry fame and more recently of Haskell association) also uses the term organ. Might we claim, based on two observations on different continents, that it was in general use?

The shadow of the input distribution

Two things need to occur for a user to experience a fault in a program:

- a fault has to exist in the code,

- the user has to provide input that causes program execution to include the faulty code in a way that exhibits the incorrect behavior.

Data on the distribution of user input values is extremely rare, and we are left having to look for the shadows that the input distribution creates.

Csmith is a well-known tool for generating random C source code. I spotted an interesting plot in a compiler fuzzing paper and Yang Chen kindly sent me a copy of the data. In compiler fuzzing, source code is automatically generated and fed to the compiler; various techniques are used to figure out when the compiler gets things wrong.

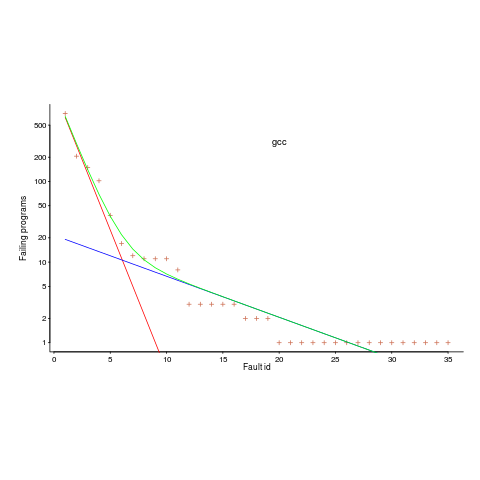

The plot below is a count of the number of times each fault in gcc has been triggered (code+data). Multiple occurrences of the same fault are experienced because the necessary input values occur multiple times in the generated source code (usually in different files).

The green line is a fitted regression model; it’s a bi-exponential, i.e., the sum of two exponentials (the straight lines in red and blue).
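For readers who have not met a bi-exponential before, here is a minimal sketch of its shape. The parameter values are invented (they are not the values fitted to the gcc data), and decaying exponentials are assumed for illustration. Each component is a straight line on a log scale, and the sum follows whichever component is larger, bending near the crossover point.

```python
import math


def bi_exponential(x, a, b, c, d):
    """Sum of two decaying exponentials: a*exp(-b*x) + c*exp(-d*x)."""
    return a * math.exp(-b * x) + c * math.exp(-d * x)


def crossover(a, b, c, d):
    """Value of x at which both components contribute equally."""
    return math.log(a / c) / (b - d)


# Invented parameters: a fast-decaying and a slow-decaying component.
A, B = 1000.0, 1.0
C, D = 50.0, 0.1

x_cross = crossover(A, B, C, D)
for x in (0.0, x_cross, 20.0):
    fast = A * math.exp(-B * x)
    slow = C * math.exp(-D * x)
    total = bi_exponential(x, A, B, C, D)
    print(f"x={x:6.2f} fast={fast:12.6f} slow={slow:10.6f} total={total:12.6f}")
```

To the left of the crossover the fast component dominates the total, to the right the slow one does, which is why the fitted curve looks like two straight-line segments joined by a bend.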

The obvious explanation for this bi-exponential behavior (explanations invented after seeing the data can have the flavor of just-so stories, which is patently not true here 🙂) is that one exponential is driven by the presence of faults in the code and the other exponential is driven by the way in which Csmith meanders over the possible C source.

So, which exponential is generated by the faults and which by Csmith? I’m still trying to figure this out; suggestions welcome, along with alternative explanations.

Is the same pattern seen in duplicates of user reported faults? It is in the small amount of data I have; more data welcome.

Christmas books for 2017

Some suggestions for books this Christmas. As always, the timing of books I suggest is based on when they reach the top of my books-to-read pile, not when they were published.

“Life ascending: The ten great inventions of evolution” by Nick Lane. The latest thinking (as of 2010) on the major events in the evolution of life. Full of technical detail, very readable, and full of surprises (at least for me).

“How buildings learn” by Stewart Brand. Yes, I’m very late on this one. So buildings are just like software: people want to change them in ways not planned by their builders, they get put to all kinds of unexpected uses, some of them cannot keep up and get thrown away and rebuilt, while others age gracefully.

“Dead Man Working” by Cederström and Fleming is short and to the point (having an impact on me earlier in the year), while “No-Collar: The humane workplace and its hidden costs” by Andrew Ross is longer (first half is general, second a specific instance involving one company). Both have a coherent view of work in the knowledge economy.

If you are into technical books on the knowledge economy, have a look at “Capitalism without capital” by Haskel and Westlake (the second half meanders off, covering alleged social consequences), and “Antitrust law in the new economy” by Mark R. Patterson (existing antitrust thinking is having a very hard time grappling with knowledge-based companies).

If you are into linguistics, then “Constraints on numerical expressions” by Chris Cummins (his PhD thesis is free) provides insight into implicit assumptions contained within numerical expressions (of the human conversation kind). A must read for anybody interested in automated fact checking.

ISO/IEC JTC 1/SC 42 Artificial intelligence

What has been preventing Artificial Intelligence being a success? Yes, you guessed it, until now ISO has not had an SC (Subcommittee) dealing with AI. Well, the votes are in and JTC 1/SC 42 Artificial intelligence is go.

Countries pay ISO to be members of an SC and the tax payers of: Austria, Canada, Finland, Germany, Ireland, Switzerland and United States have the pleasure of being founding member countries of SC42.

What standards/technical-reports are those attending SC42 meetings going to work on?

The two document titles I have seen so far are: “Artificial Intelligence Concepts and Terminology” and “Framework for Artificial Intelligence (AI) Systems Using Machine Learning (ML)”.

I hope the terminology document arrives in plenty of time, before the machines take over. The ISO Standard for Year 2000 terminology arrived in December 1999 (there was a flurry of emails desperately trying to row-back on this document).

Want to join up? Wael William Diab is the chairperson.

Vanity project or real research?

I gave a talk at the CREST Open Workshop yesterday. Many of those attending and speaking were involved in empirical work of one kind or another. My experience is that researchers involved in empirical work, in software engineering, feel the need to justify using this approach to research, because it is different from what many others in the field do. I want to reverse this perception; those not doing empirical work are the ones that should feel the need to justify their approach to research.

Evidence obtained from experiments and measurements is the basis of the scientific method.

I started by contrasting a typical software engineering researcher’s view of their work (both images from Wikipedia under a Creative Commons Attribution-ShareAlike License):


with a common industry view of academic researchers:

The reputation of those doing evidence based research is being completely overshadowed by those who use ego and bluster to promote their claims. We needed an effective label for work that is promoted using ego and bluster, and I proposed vanity projects (the work done by the ego and bluster crowd does not deserve to be referred to as research). Yes, there are plenty of snake-oil salesmen in industry, but that is another issue.

Vanity projects being passed off as research should be named and shamed.

Next time you are in the audience listening to claims made by the speaker about the results of his/her research that are not backed up by experiments or measurements, ask them why they decided to pursue a vanity project rather than proper research.

Data-set update to “Empirical software engineering using R”

The pile of papers, books and data-sets, relating to previously released draft chapters of my Empirical software engineering book, has been growing, and cluttering up my mind. I decided to have a clear-out.

A couple of things stood out.

There are around 25 data-sets that have been promised but not yet arrived. If you encounter anybody who mentions they promised to send me data, please encourage them to spend some time doing this. I don’t want to add a new category, promised but never delivered, to the list of email responses.

There has been an increase in data-sets not being used because I already have something better. This is a good sign; data quality is increasing. One consequence is that a growing number of ‘historical’ data-sets have fallen by the wayside. This is a good thing; most data-sets analysed in papers are very low quality and only used because nothing else was available.

One of my reasons for making draft releases was to prompt people to suggest data I had missed. This has not happened yet; come on people, suggest some data I don’t yet know about.

About a third of the pile got included in the latest draft, a third had been superseded by something better, and a third are still waiting for promised data.

Now, back to the reliability chapter.

Grace Hopper: Manager, after briefly being a programmer

In popular mythology, Grace Hopper is a programmer who wrote one of the first compilers. I think the reality is that Hopper did some programming, but quickly moved into management; a common career path for freshly minted PhDs and older people entering computing (Hopper was in her 40s when she started); her compiler management work occurred well after many other compilers had been written.

What is the evidence?

Hopper is closely associated with Cobol. There is a lot of evidence for at least 28 compilers in 1957, well before the first Cobol compiler (can a compiler written after the first 28 be called one of the first?)

The A-0 tool, which Hopper worked on as a programmer in 1951-52, has been called a compiler. However, the definition of Compile used sounds like today’s assembler and the definition of Assemble used sounds like today’s link-loader (also see: section 7 of Digital Computers – Advanced Coding Techniques for Hopper’s description of what A-2, a later version, did).

The ACM’s First Glossary of Programming Terminology, produced by a committee chaired by Hopper in June 1954, contains the following definitions:

Routine – a set of coded instructions arranged in proper sequence to direct the computer to perform a desired operation or series of operations. See also Subroutine.

Compiler (Compiling Routine) – an executive routine which, before the desired computation is started, translates a program expressed in pseudo-code into machine code (or into another pseudo-code for further translation by an interpreter). In accomplishing the translation, the compiler may be required to:

…

Assemble – to integrate the subroutines (supplied, selected, or generated) into the main routine, i.e., to:

- Adapt – to specialize to the task at hand by means of preset parameters.

- Orient – to change relative and symbolic addresses to absolute form.

- Incorporate – to place in storage.

Hopper’s name is associated with work on the MATH-MATIC and ARITH-MATIC Systems, but her name does not appear in the list of people who wrote the manual in 1957. A programmer working on these systems is likely to have been involved in producing the manual.

After the A-0 work, all of Hopper’s papers relate to talks she gave, committees she sat on and teams she led, i.e., the profile of a manager.

À la carte Entropy

My observation that academics treat Entropy as the go-to topic, when they have no idea what else to talk about, has ruffled a few feathers. David Clark, one of the organizers of a workshop on Information Theory and Software Testing has invited me to give a talk on Entropy (the title is currently Entropy for the uncertain, but this state might change :-).

Complaining about the many ways entropy is currently misused in software engineering would be like shooting fish in a barrel, and equally pointless. I want to encourage people to use entropy in a meaningful way, and to stop using Shannon entropy just because it is the premium brand of entropy.

Shannon’s derivation of the iconic formula $H = -\sum_i p_i \log p_i$ depends on various assumptions being true. While these conditions look like they might hold for some software engineering problems, they clearly don’t hold for others. It may be possible to use other forms of entropy for some of these other problems; Shannon became the premium brand of entropy because it was first to market, the other entropy products have not had anyone championing their use, and academics follow each other like sheep (it’s much easier to get a paper published by using the well-known brands).

Shannon’s entropy has been generalized, with the two most well-known being (in the limit $q \to 1$, both converge to Shannon entropy):

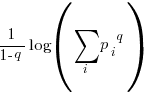

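Rényi entropy in 1961: $H_q = \frac{1}{1-q}\log\left(\sum_i p_i^q\right)$

Tsallis entropy in 1988: $S_q = \frac{1}{q-1}\left(1-\sum_i p_i^q\right)$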

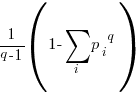
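A minimal sketch of the three entropies, using the standard textbook definitions with natural logarithms. The function names, the parameter name `q` and the sample probabilities are my own choices for illustration. It checks numerically that Rényi and Tsallis entropy approach Shannon entropy as `q` approaches 1.

```python
import math


def shannon(probs):
    """Shannon entropy: -sum(p * log(p)), skipping zero probabilities."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def renyi(probs, q):
    """Renyi entropy: log(sum(p^q)) / (1 - q); Shannon when q == 1."""
    if q == 1:
        return shannon(probs)
    return math.log(sum(p ** q for p in probs if p > 0)) / (1 - q)


def tsallis(probs, q):
    """Tsallis entropy: (1 - sum(p^q)) / (q - 1); Shannon when q == 1."""
    if q == 1:
        return shannon(probs)
    return (1 - sum(p ** q for p in probs if p > 0)) / (q - 1)


# Invented example distribution (must sum to 1).
probs = [0.5, 0.25, 0.125, 0.125]

print("Shannon       ", shannon(probs))
for q in (0.5, 0.999999, 2.0):
    print(f"q={q:<9} Renyi={renyi(probs, q):.6f} Tsallis={tsallis(probs, q):.6f}")
```

Away from `q = 1` the three measures give different values for the same list of probabilities, which is the practical reason the choice of entropy deserves some thought.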

All of these formulas reduce a list of probabilities to a single value. A weighting is applied to each probability, and this weighted value is summed to produce a single value that is further manipulated. The probability weighting functions are plotted below:

Under what conditions might one of these two forms of entropy be used (there are other forms)? I have been rummaging around looking for example uses, and could not find many.

There are some interesting papers about possible interpretations of the $q$ parameter in Tsallis entropy: the most interesting paper I have found shows a connection with the correlation between states, e.g., preferential attachment in networks. This implies that Tsallis entropy is the natural first candidate to consider for systems exhibiting power law characteristics. Another paper suggests $q$ derives from variation in the parameter of an exponential equation.

Some computer applications: a discussion of Tsallis entropy and the concept of non-extensive entropy, along with an analysis of statistical properties of hard disc workloads, the same idea applied to computer memory.

Some PhD theses: Rényi entropy (with a specific parameter value) for error propagation in software architectures, comparing various measures of entropy as a metric for the similarity of program execution traces, plus using Rényi entropy in cryptography.

As you can see, I don’t have much to talk about. I’m hoping my knowledgeable readers can point me at some uses of entropy in software engineering where the author has put some thought into which entropy to use (which may have resulted in Shannon entropy being chosen; I’m only against this choice when it is made for brand name reasons).

Registration for the workshop is open, so turn up and cheer me on.

Roll your own weighting plot:

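    # Probability values to plot (starting just above zero, where log is defined)
    p_vals=seq(0.001, 1.001, by=0.01)

    # Shannon weighting: -p*log(p)
    plot(p_vals, -p_vals*log(p_vals), type="l", col="red",
         ylim=c(0, 1), xaxs="i", yaxs="i",
         xlab="Probability", ylab="Weight")

    # Rényi/Tsallis weighting: p^q, for two values of q
    q=0.5
    lines(p_vals, p_vals^q, type="l", col="blue")
    q=2
    lines(p_vals, p_vals^q, type="l", col="green")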

Double exponential performance hit in C# compiler

Yesterday I was reading a blog post on the performance impact of using duplicated nested types in a C# generic class, such as the following:

    class Class<A, B, C, D, E, F>
    {
        class Inner : Class<Inner, Inner, Inner, Inner, Inner, Inner>
        {
            Inner.Inner inner;
        }
    }

Ah, the joys of exploiting a language specification to create perverse code 🙂

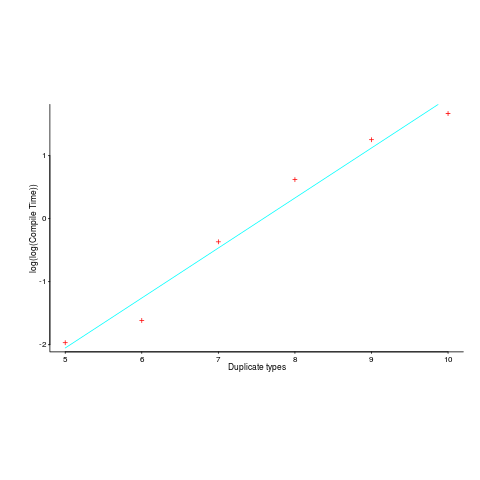

The author, Matt Warren, had benchmarked the C# compiler for increasing numbers of duplicate types; good man. However, the exponential fitted to the data, in the blog post, is obviously not a good fit. I suspected a double exponential might be a better fit and gave it a go (code+data).

Compile-time data points and fitted double-exponential below:

Yes, a double exponential is the better fit for both compile time and binary size (each with its own fitted constants), as a function of the number of duplicates.
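To show how a double exponential differs from an ordinary exponential, here is a minimal sketch. The functional form `a * 2**(2**(b*n))` and the constants are assumptions chosen for illustration; they are not the fitted model or values from the benchmark data.

```python
import math


def single_exponential(n, a, b):
    """Ordinary exponential growth: a * 2^(b*n)."""
    return a * 2 ** (b * n)


def double_exponential(n, a, b):
    """Double exponential growth: a * 2^(2^(b*n)) (assumed general form)."""
    return a * 2 ** (2 ** (b * n))


# Invented constants, chosen so the values are easy to follow.
a, b = 1, 1

for n in range(5):
    single = single_exponential(n, a, b)
    double = double_exponential(n, a, b)
    # Taking log2 twice turns the double exponential into a straight line in n.
    linearised = math.log2(math.log2(double / a))
    print(n, single, double, linearised)
```

One quick check on measured data is to take logarithms twice (after dividing out the scale constant): a double exponential then falls on a straight line, while an ordinary exponential needs only one logarithm to do so.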

I had no idea what might cause this pathological compiler performance problem (apart from the pathological code) and did some rummaging around. No luck; I could not find any analysis of generic type implementation that might be used to shine some light on this behavior.

Ideas anybody?
