Polished statistical analysis chapters in evidence-based software engineering

I have completed the polishing/correcting/fiddling of the eight statistical analysis related chapters of my evidence-based software engineering book, and an updated draft pdf is now available (download here).

The material was in much better shape than I recalled, after abandoning it to the world 2-years ago, to work on the software engineering chapters.

Changes include moving more figures into the margin (which is responsible for a lot of the reduction in page count), fixing grammatical typos, removing place-holders for statistical techniques that are unlikely to be of general interest to software engineers, and mostly minor shuffling around (the only big change was moving a lot of material from the Experiments chapter to the Statistics chapter).

There is still some work to be done, in places (most notably the section on surveys).

What next? My collection of data waiting to be analysed has been piling up, so I will spend the next month reducing the backlog.

The six chapters covering the major areas of software engineering need to be polished and fleshed out, from their current bare-bones state. All being well, this time next year a beta release will be ready.

While working on the statistical material, I have been making monthly updates to the pdf+data available. If it makes sense to do this for the rest of the material, then it will happen. I’m not going to write a blog post every month; perhaps a post after what look like important milestones.

As always, if you know of any interesting software engineering data, please tell me.

Waiting for the funerals: culture in software engineering research

A while ago I changed my opinion about why software engineering academics very rarely got/get involved in empirical/experimental based research.

I used to think it was because commercial data was so hard to get hold of.

In practice commercial data does not seem to be that hard to get hold of. At least for academics in business schools, and I have not experienced problems gaining access to commercial data (but it is very hard finding a company willing to allow me to make an anonymised version of its data public). There are many evidence-based papers published using confidential data (i.e., data that cannot be made public).

I now think the reasons for non-evidence-based research are culture and preference for non-people based research.

In the academic world the software side of computing often has a strong association is mathematics departments (I know that in some universities it is in engineering). I have had several researchers tell me that it would raise eyebrows, if they started doing more people oriented research, because this kind of research is viewed as being the purview of other departments.

Software had its algorithm era, which is now long gone; but unfortunately, many academics still live in a world where the mindset of TEOCP holds sway.

Baffled looks are common, when I talk to software engineering academics. They are baffled by the idea that it is possible to run experiments in software engineering, and they are baffled by the idea of evidence-based theories. I am still struggling to understand the mindset that produces the arguments they make against the possibility of experiments and evidence being useful.

In the past I know that some researchers have had problems getting experiment-based papers published. Hopefully this problem is now in the past, given that empirical/experimental papers are becoming more common.

Max Planck, one of the founders of quantum mechanics, found that physicists trained in what we now call classical physics, were not willing to teach or adopt a quantum mechanics world view; Planck observed: “Science advances one funeral at a time”.

Some pair programming benefits may be mathematical artefacts

Many claims are made about the advantages of pair programming. The claim that the performance of pairs is better than the performance of individuals may actually be the result of the mathematical consequences of two people working together, rather than working independently (at least for some tasks).

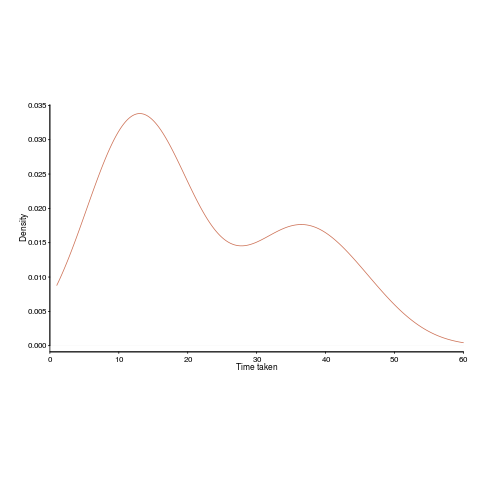

Let’s say that individuals have to find a fault in code, and then fix it. Some people will find the fault and then its fix much more quickly than others. The data for the following analysis comes from the report Experimental results on software debugging (late Rome period), via Lutz Prechelt and shows the density of the time taken by each developer to find and fix a fault in a short Fortran program.

Fixing faults is different from many other development tasks in that if often requires a specific insight to spot the mistake; once found, the fixing task tends to be trivial.

The mean time taken, for task t1, is 22.2 minutes (standard deviation 13).

How long might pairs of developers have taken to solve the same problem. We can take the existing data, create pairs, and estimate (based on individual developer time) how long the pair might take (code+data).

Averaging over every pair of 17 individuals would take too much compute time, so I used bootstrapping. Assuming the time taken by a pair was the shortest time taken by the two of them, when working individually, sampling without replacement produces a mean of 14.9 minutes (sd 1.4) (sampling with replacement is complicated…).

By switching to pairs we appear to have reduced the average time taken by 30%. However, the apparent saving is nothing more than the mathematical consequence of removing larger values from the sample.

The larger the variability of individuals, the larger the apparent saving from working in pairs.

When working as a pair, there will be some communication overhead (unless one is much faster and ignores the other developer), so the saving will be slightly less.

If the performance of a pair was the mean of their individual times, then pairing would not change the mean performance, compared to working alone. The performance of a pair has to be less than the mean of the performance of the two individuals, for pairs to show an improved performance.

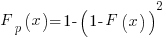

There is an analytic solution for the distribution of the minimum of two values drawn from the same distribution. If  is a probability density function and

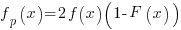

is a probability density function and  the corresponding cumulative distribution function, then the corresponding functions for the minimum of a pair of values drawn from this distribution is given by:

the corresponding cumulative distribution function, then the corresponding functions for the minimum of a pair of values drawn from this distribution is given by:  and

and  .

.

The presence of two peaks in the above plot means the data is not going to be described by a single distribution. So, the above formula look interesting but are not useful (in this case).

When pairs of values are drawn from a Normal distribution, a rough calculation suggests that the mean is shifted down by approximately half the standard deviation.

Christmas books for 2018

The following are the really interesting books I read this year (only one of which was actually published in 2018, everything has to work its way through several piles). The list is short because I did not read many books and/or there is lots of nonsense out there.

The English and their history by Robert Tombs. A hefty paperback, at nearly 1,000 pages, it has been the book I read on train journeys, for most of this year. Full of insights, along with dull sections, a narrative that explains lots of goings-on in a straight-forward manner. I still have a few hundred pages left to go.

The mind is flat by Nick Chater. We experience the world through a few low bandwidth serial links and the brain stitches things together to make it appear that our cognitive hardware/software is a lot more sophisticated. Chater’s background is in cognitive psychology (these days he’s an academic more connected with the business world) and describes the experimental evidence to back up his “mind is flat” model. I found that some of the analogues dragged on too long.

In the readable social learning and evolution category there is: Darwin’s unfinished symphony by Leland and The secret of our success by Henrich. Flipping through them now, I cannot decide which is best. Read the reviews and pick one.

Group problem solving by Laughin. Eye opening. A slim volume, packed with data and analysis.

I have already written about Experimental Psychology by Woodworth.

The Digital Flood: The Diffusion of Information Technology Across the U.S., Europe, and Asia by Cortada. Something of a specialist topic, but if you are into the diffusion of technology, this is surely the definitive book on the diffusion of software systems (covers mostly hardware).

Is it worth attending an academic conference or workshop?

If you work in industry, is it worth attending an academic conference or workshop?

The following observations are based on my attending around 50 software engineering and compiler related conferences/workshops, plus discussion with a few other people from industry who have attended such events.

Short answer: No.

Slightly longer answer: Perhaps, if you are looking to hire somebody knowledgeable in a particular domain.

Much longer answer: Academics go to conferences to network. They are looking for future collaborators, funding, jobs, and general gossip. What is the point of talking to somebody from industry? Academics will make small talk and be generally friendly, but they don’t know how to interact, at the professional level, with people from industry.

Why are academics generally hopeless at interacting, at the professional level, with people from industry?

Part of the problem is lack of practice, many academic researchers live in a world that rarely intersects with people from industry.

Impostor syndrome is another. I have noticed that academics often think that people in industry have a much better understanding of the realities of their field. Those who have had more contact with people from industry might have noticed that impostor syndrome is not limited to academia.

Talking of impostor syndrome, and feeling of being a fraud, academics don’t seem to know how to handle direct criticism. Again I think it is a matter of practice. Industry does not operate according to: I won’t laugh at your idea, if you don’t laugh at mine, which means people within industry are practiced at ‘robust’ discussion (this does not mean they like it, and being good at handling such discussions smooths the path into management).

At the other end of the impostor spectrum, some academics really do regard people working in industry as simpletons. I regularly have academics express surprise that somebody in industry, i.e., me, knows about this-that-or-the-other. My standard reply is to say that its because I paid more for my degree and did not have the usual labotomy before graduating. Not a reply guaranteed to improve industry/academic relations, but I enjoy the look on their faces (and I don’t expect they express that opinion again to anyone else from industry).

The other reason why I don’t recommend attending academic conferences/workshops, is that lots of background knowledge is needed to understand what is being said. There is no point attending ‘cold’, you will not understand what is being presented (academic presentations tend to be much better organized than those given by people in industry, so don’t blame the speaker). Lots of reading is required. The point of attending is to talk to people, which means knowing something about the current state of research in their area of interest. Attending simply to learn something about a new topic is a very poor use of time (unless the purpose is to burnish your c.v.).

Why do I continue to attend conferences/workshops?

If a conference/workshop looks like it will be attended by people who I will find interesting, and it’s not too much hassle to attend, then I’m willing to go in search of gold nuggets. One gold nugget per day is a good return on investment.

Practical ecosystem books for software engineers

So you have read my (draft) book on evidence-based software engineering and want to learn more about ecosystems. What books do I suggest?

Biologists have been studying ecosystems for a long time, and more recently social scientists have been investigating cultural ecosystems. Many of the books written in these fields are oriented towards solving differential equations and are rather subject specific.

The study of software ecosystems has been something of a niche topic for a long time. Problems for researchers have included gaining access to ecosystems and the seeming proliferation of distinct ecosystems. The state of ecosystem research in software engineering is rudimentary; historians are starting to piece together what has happened.

Most software ecosystems are not even close to being in what might be considered a steady state. Eventually most software will be really old, and this will be considered normal (“Shock Of The Old: Technology and Global History since 1900” by Edgerton; newness is a marketing ploy to get people to buy stuff). In the meantime, I have concentrated on the study of ecosystems in a state of change.

Understanding ecosystems is about understanding how the interaction of participant’s motivation, evolves the environment in which they operate.

“Modern Principles of Economics” by Cowen and Tabarrok, is a very readable introduction to economics. Economics might be thought of as a study of the consequences of optimizing the motivation of maximizing return on investment. “Principles of Corporate Finance” by Brealey and Myers, focuses on the topic in its title.

“The Control Revolution: Technological and Economic Origins of the Information Society” by Beniger: the ecosystems in which software ecosystems coexist and their motivations.

“Evolutionary dynamics: exploring the equations of life” by Nowak, is a readable mathematical introduction to the subject given in the title.

“Mathematical Models of Social Evolution: A Guide for the Perplexed” by McElreath and Boyd, is another readable mathematical introduction, but focusing on social evolution.

“Social Learning: An Introduction to Mechanisms, Methods, and Models” by Hoppitt and Laland: developers learn from each other and from their own experience. What are the trade-offs for the viability of an ecosystem that preferentially contains people with specific ways of learning?

“Robustness and evolvability in living systems” by Wagner, survival analysis of systems built from components (DNA in this case). Rather specialised.

Books with a connection to technology ecosystems.

“Increasing returns and path dependence in the economy” by Arthur, is now a classic, containing all the basic ideas.

“The red queen among organizations” by Barnett, includes a chapter on computer manufacturers (has promised me data, but busy right now).

“Information Foraging Theory: Adaptive Interaction with Information” by Pirolli, is an application of ecosystem know-how, i.e., how best to find information within a given environment. Rather specialised.

“How Buildings Learn: What Happens After They’re Built” by Brand, yes building are changed just like software and the changes are just as messy and expensive.

Several good books have probably been omitted, because I failed to spot them sitting on the shelf. Suggestions for books covering topics I have missed welcome, or your own preferences.

Practical psychology books for software engineers

So you have read my (draft) book on evidence-based software engineering and want to learn more about human psychology. What books do I suggest?

I wrote a book about C that attempted to use results from cognitive psychology to understand developer characteristics. This work dates from around 2000, and some of my book choices may have been different, had I studied the subject 10 years later. Another consequence is that this list is very weak on social psychology.

I own all the following books, but it may have been a few years since I last took them off the shelf.

There are two very good books providing a broad introduction: “Cognitive psychology and its implications” by Anderson, and “Cognitive psychology: A student’s handbook” by Eysenck and Keane. They have both been through many editions, and buying a copy that is a few editions earlier than current, saves money for little loss of content.

“Engineering psychology and human performance” by Wickens and Hollands, is a general introduction oriented towards stuff that engineering requires people to do.

Brain functioning: “Reading in the brain” by Dehaene (a bit harder going than “The number sense”). For those who want to get down among the neurons “Biological psychology” by Kalat.

Consciouness: This issue always comes up, so let’s kill it here and now: “The illusion of conscious will” by Wegner, and “The mind is flat” by Chater.

Decision making: What is the difference between decision making and reasoning? In psychology those with a practical orientation study decision making, while those into mathematical logic study reasoning. “Rational choice in an uncertain world” by Hastie and Dawes, is a general introduction; “The adaptive decision maker” by Payne, Bettman and Johnson, is a readable discussion of decision making models. “Judgment under Uncertainty: Heuristics and Biases” by Kahneman, Slovic and Tversky, is a famous collection of papers that kick started the field at the start of the 1980s.

Evolutionary psychology: “Human evolutionary psychology” by Barrett, Dunbar and Lycett. How did we get to be the way we are? Watch out for the hand waving (bones can be dug up for study, but not the software of our mind), but it weaves a coherent’ish story. If you want to go deeper, “The Adapted Mind: Evolutionary Psychology and the Generation of Culture” by Barkow, Tooby and Cosmides, is a collection of papers that took the world by storm at the start of the 1990s.

Language: “The psychology of language” by Harley, is the book to read on psycholinguistics; it is engrossing (although I have not read the latest edition).

Memory: I have almost a dozen books discussing memory. What these say is that there are a collection of memory systems having various characteristics; which is what the chapters in the general coverage books say.

Modeling: So you want to model the human brain. ACT-R is the market leader in general cognitive modeling. “Bayesian cognitive modeling” by Lee and Wagenmakers, is a good introduction for those who prefer a more abstract approach (“Computational modeling of cognition” by Farrell and Lewandowsky, is a big disappointment {they have written some great papers} and best avoided).

Reasoning: The study of reasoning is something of a backwater in psychology. Early experiments showed that people did not reason according to the rules of mathematical logic, and this was treated as a serious fault (whose fault it was, shifted around). Eventually most researchers realised that the purpose of reasoning was to aid survival and reproduction, not following the recently (100 years or so) invented rules of mathematical logic (a few die-hards continue to cling to the belief that human reasoning has a strong connection to mathematical logic, e.g., Evans and Johnson-Laird; I have nearly all their books, but have not inflicted them on the local charity shop yet). Gigerenzer has written several good books: “Adaptive thinking: Rationality in the real world” is a readable introduction, also “Simple heuristics that make us smart”.

Social psychology: “Social learning” by Hoppitt and Laland, analyzes the advantages and disadvantages of social learning; “The Secret of Our Success: How Culture Is Driving Human Evolution, Domesticating Our Species, and Making Us Smarter” by Henrich, is a more populist book (by a leader in the field).

Vision: “Visual intelligence” by Hoffman is a readable introduction to how we go about interpreting the photons entering our eyes, while “Graph design for the eye and mind” by Kosslyn is a rule based guide to visual presentation. “Vision science: Photons to phenomenology” by Palmer, for those who are really keen.

Several good books have probably been omitted, because I failed to spot them sitting on the shelf. Suggestions for books covering topics I have missed welcome, or your own preferences.

Practical statistics books for software engineers

So you have read my (draft) book on evidence-based software engineering and want to learn more about the statistical techniques used, but are not interested lots of detailed mathematics. What books do I suggest?

All the following books are sitting on the shelf next to where I write (not that they get read that much these days).

Before I took the training wheels off my R usage, my general go to book was (I still look at it from time to time): “The R Book” by Crawley, second edition; “R in Action” by Kabacoff is a good general read.

In alphabetical subject order:

Categorical data: “Categorical Data Analysis” by Agresti, the third edition is a weighty tomb (in content and heaviness). Plenty of maths+example; more of a reference.

Compositional data: “Analyzing compositional data with R” by van den Boogaart and Tolosana-Delgado, is more or less the only book of its kind. Thankfully, it is quite good.

Count data: “Modeling count data” by Hilbe, may be more than you want to know about count data. Readable.

Circular data: “Circular statistics in R” by Pewsey, Neuhauser and Ruxton, is the only non-pure theory book available. The material seems to be there, but is brief.

Experiments: “Design and analysis of experiments” by Montgomery.

General: “Applied linear statistical models” by Kutner, Nachtsheim, Neter and Li, covers a wide range of topics (including experiments) using a basic level of mathematics.

Machine learning: “An Introduction to Statistical Learning: with Applications in R” by James, Witten, Hastie and Tibshirani, is more practical (but not dumbed down, like some) and less maths (a common problem with machine learning books, e.g., “The Elements of Statistical Learning”). Watch out for the snake-oil salesmen using machine learning.

Mixed-effects models: “Mixed-effects models in S and S-plus” by Pinheiro and Bates, is probably the book I prefer; “Mixed effects models and extensions in ecology with R” by Zuur, Ieno, Walker, Saveliev and Smith, is another view on an involved topic (plus lots of ecological examples).

Modeling: “Statistical rethinking” by McElreath, is full of interesting modeling ideas, using R and Stan. I wish I had some data to try out some of these ideas.

Regression analysis: “Applied Regression Analysis and Generalized Linear Models” by Fox, now in its third edition (I also have the second edition). I found this the most useful book, of those available, for a more detailed discussion of regression analysis. Some people like “Regression modeling strategies” by Harrell, but this does not appeal to me.

Survival analysis: “Introducing survival and event history analysis” by Mills, is a readable introduction covering everything; “Survival analysis” by Kleinbaum and Klein, is full of insights but more of a book to dip into.

Time series: The two ok books are: “Time series analysis and its application: with R examples” by Shumway and Stoffler, contains more theory, while “Time series analysis: with applications in R” by Cryer and Chan, contains more R code.

There are lots of other R/statistics books on my shelves (just found out I have 31 of Springer’s R books), some ok, some not so. I have a few ‘programming in R’ style books; if you are a software developer, R the language is trivial to learn (its library is another matter).

Suggestions for books covering topics I have missed welcome, or your own preferences (as a software developer).

The first commercially available (claimed) verified compiler

Yesterday, I read a paper containing a new claim by some of those involved with CompCert (yes, they of soap powder advertising fame): “CompCert is the first commercially available optimizing compiler that is formally verified, using machine assisted mathematical proofs, to be exempt from miscompilation”.

First commercially available; really? Surely there are earlier claims of verified compilers being commercial availability. Note, I’m saying claims; bits of the CompCert compiler have involved mathematical proofs (i.e., code generation), so I’m considering earlier claims having at least the level of intellectual honesty used in some CompCert papers (a very low bar).

What does commercially available mean? The CompCert system is open source (but is not free software), so I guess it’s commercially available via free downloading licensing from AbsInt (the paper does not define the term).

Computational Logic, Inc is the name that springs to mind, when thinking of commercial and formal verification. They were active from 1983 to 1997, and published some very interesting technical reports about their work (sadly there are gaps in the archive). One project was A Mechanically Verified Code Generator (in 1989) and their Gypsy system (a Pascal-like language+IDE) provided an environment for doing proofs of programs (I cannot find any reports online). Piton was a high-level assembler and there was a mechanically verified implementation (in 1988).

There is the Danish work on the formal specification of the code generators for their Ada compiler (while there was a formal specification of the Ada semantics in VDM, code generators tend to be much simpler beasts, i.e., a lot less work is needed in formal verification). The paper I have is: “Retargeting and rehosting the DDC Ada compiler system: A case study – the Honeywell DPS 6” by Clemmensen, from 1986 (cannot find an online copy). This Ada compiler was used by various hardware manufacturers, so it was definitely commercially available for (lots of) money.

Are then there any earlier verified compilers with a commercial connection? There is A PRACTICAL FORMAL SEMANTIC DEFINITION AND VERIFICATION SYSTEM FOR TYPED LISP, from 1976, which has “… has proved a number of interesting, non-trivial theorems including the total correctness of an algorithm which sorts by successive merging, the total correctness of the McCarthy-Painter compiler for expressions, …” (which sounds like a code generator, or part of one, to me).

Francis Morris’s thesis, from 1972, proves the correctness of compilers for three languages (each language contained a single feature) and discusses how these features may be combined into a more “realistic” language. No mention of commercial availability, but I cannot see the demand being that great.

The definition of PL/1 was written in VDM, a formal language. PL/1 is a huge language and there were lots of subsets. Were there any claims of formal verification of a subset compiler for PL/1? I have had little contact with the PL/1 world, so am not in a good position to know. Anybody?

Over to you dear reader. Are there any earlier claims of verified compilers and commercial availability?

Publishing information on project progress: will it impact delivery?

Numbers for delivery date and cost estimates, for a software project, depend on who you ask (the same is probably true for other kinds of projects). The people actually doing the work are likely to have the most accurate information, but their estimates can still be wildly optimistic. The managers of the people doing the work have to plan (i.e., make worst/best case estimates) and deal with people outside the team (i.e., sell the project to those paying for it); planning requires knowledge of where things are and where they need to be, while selling requires being flexible with numbers.

A few weeks ago I was at a hackathon organized by the people behind the Project Data and Analytics meetup. The organizers (Martin Paver & co.) had obtained some very interesting project related data sets. I worked on the Australian ICT dashboard data.

The Australian ICT dashboard data was courtesy of the Queensland state government, which has a publicly available dashboard listing digital project expenditure; the Victorian state government also has a dashboard listing ICT expenditure. James Smith has been collecting this data on a monthly basis.

What information might meaningfully be extracted from monthly estimates of project delivery dates and costs?

If you were running one of these projects, and had to provide monthly figures, what strategy would you use to select the numbers? Obviously keep quiet about internal changes for as long as possible (today’s reduction can be used to offset a later increase, or vice versa). If the client requests changes which impact date/cost, then obviously update the numbers immediately; the answer to the question about why the numbers changed is that, “we are responding to client requests” (i.e., we would otherwise still be on track to meet the original end-points).

What is the intended purpose of publishing this information? Is it simply a case of the public getting fed up with overruns, with publishing monthly numbers is seen as a solution?

What impact could monthly publication have? Will clients think twice before requesting an enhancement, fearing public push back? Will companies doing the work make more reliable estimates, or work harder?

Project delivery dates/costs change because new functionality/work-to-do is discovered, because the appropriate staff could not be hired and other assorted unknown knowns and unknowns.

Who is looking at this data (apart from half a dozen people at a hackathon on the other side of the world)?

Data on specific projects can only be interpreted in the context of that project. There is some interesting research to be done on the impact of public availability on client and vendor reporting behavior.

Will publication have an impact on performance? One way to get some idea is to run an A/B experiment. Some projects have their data made public, others don’t. Wait a few years, and compare project performance for the two publication regimes.

Recent Comments