Readability: a scientific approach

Readability, as applied to software development today, is a meaningless marketing term. Readability is promoted as a desirable attribute, and is commonly claimed for favored programming languages, particular styles of programming, or ways of laying out source code.

Whenever somebody I’m talking to, or listening to in a talk, makes a readability claim, I ask what they mean by readability, and how they measured it. The speaker invariably fumbles around for something to say, with some dodging and weaving before admitting that they have not measured readability. There have been a few studies that asked students to rate the readability of source code (no guidance was given about what readability might be).

If somebody wanted to investigate readability from a scientific perspective, how might they go about it?

The best way to make immediate progress is to build on what is already known. There has been over a century of research on eye movement during reading, and two model of eye movement now dominate, i.e., the E-Z Reader model and SWIFT model. Using eye-tracking to study developers is slowly starting to be adopted by researchers.

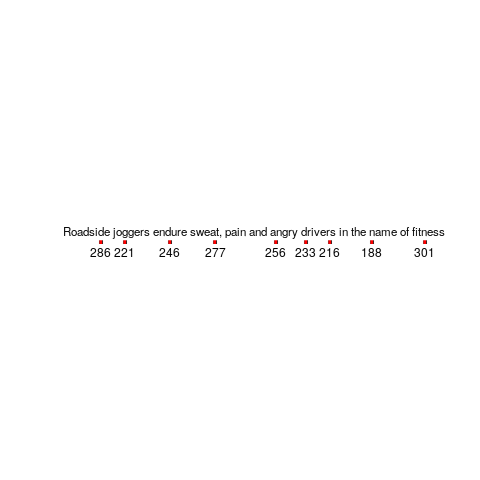

Our eyes don’t smoothly scan the world in front of us, rather they jump from point to point (these jumps are known as a saccade), remaining fixed long enough to acquire information and calculate where to jump next. The image below is an example from an eye tracking study, where subjects were asking to read a sentence (see figure 770.11). Each red dot appears below the center of each saccade, and the numbers show the fixation time (in milliseconds) for that point (code):

Models of reading are judged by the accuracy of their predictions of saccade landing points (within a given line of text), and fixation time between saccades. Simulators implementing the E-Z Reader and SWIFT models have found that these models have comparable performance, and the robustness of these models are compared by looking at the predictions they make about saccade behavior when reading what might be called unconventional material, e.g., mirrored or scarmbeld text.

What is the connection between the saccades made by readers and their understanding of what they are reading?

Studies have found that fixation duration increases with text difficulty (it is also affected by decreases with word frequency and word predictability).

It has been said that attention is the window through which we perceive the world, and our attention directs what we look at.

A recent study of the SWIFT model found that its predictions of saccade behavior, when reading mirrored or inverted text, agreed well with subject behavior.

I wonder what behavior SWIFT would predict for developers reading a line of code where the identifiers were written in camelCase or using underscores (sometimes known as snake_case)?

If the SWIFT predictions agreed with developer saccade behavior, a raft of further ‘readability’ tests spring to mind. If the SWIFT predictions did not agree with developer behavior, how might the model be updated to support the reading of lines of code?

Until recently, the few researchers using eye tracking to investigate software engineering behavior seemed to be having fun playing with their new toys. Things are starting to settle down, with some researchers starting to pay attention to existing models of reading.

What do I predict will be discovered?

Lots of studies have found that given enough practice, people can become proficient at handling some apparently incomprehensible text layouts. I predict that given enough practice, developers can become equally proficient at most of the code layout schemes that have been proposed.

The important question concerning text layout, is: which one enables an acceptable performance from a wide variety of developers who have had little exposure to it? I suspect the answer will be the one that is closest to the layout they have had the most experience,i.e., prose text.

Software development is an “expert level” thing.

The expectation is you’re working on a code base for months or years.

So I expect and it matches my experience of working simultaneously on two code bases that CamelCaps or snakes makes no difference.

The debate of the day is, given larger (and more) monitors, for expert users that spend months in a code base, what line length is best?

Decades of experience tells me one thing… there are many (different), but VERY strongly held opinions as to what the Correct layout style is.

But I note two things…. if there was a compelling style, there wouldn’t be such disagreement,

And if it was really important (and objective), every project would have a pre-commit hook that reformatted every file.

I only know of one project that does that, and the formatter was a HUGE saga to develop to everybodies satisfaction.

Here is the saga I was referring to.. http://journal.stuffwithstuff.com/2015/09/08/the-hardest-program-ive-ever-written/

@John Carter

Ideally, software development is an “expert level” thing. In practice, there are not enough experts to go around.

Yes, “experts” have been there and done that, so they are at home with the common forms of organizing code, i.e., camel or snake makes no difference (although I can feel myself ‘changing gear’, perhaps I am not expert enough in the camel form). So those interested in reducing effort/cost need to target those with little experience, and there might be lots of these.

Optimal line length is a complicated subject. First, we need to ask why the code is being read, e.g., are people reading it in detail, or quickly scanning it for a rough idea of what is going on.

Why must source code have one layout? Couldn’t the editor/ide have a command to switch the layout to adapt to the purpose a developer is reading the code? Code might best be written using format F1, read for understanding by a native English speaker (trained on text that reads left to right) using format F2, and by a native Hebrew speaker (trained on text that reads right to left) using format F3.

I was unaware of the saccade work — interesting.

Our code base was written in C, C++, Java, and VHDL; supporting and build scripts were written in numerous languages (Python, Perl, csh). Each had its own set of rules and guidelines.

New and modified code went through (informal) group reviews with moderating greybeards present — the group decided on readability. Subjective, perhaps, but it worked well. I would argue that inconsistency within files is what hampers comprehension.

I had heard a while ago the use of eye-tracking for measuring readability, but I’ve not followed up with that research. Thanks for bringing this up again!

For me, readability is not just a “style” (layout, variable names case convention) thing. Style helps to keep consistency within a project and a programming language, making it – I believe – easier to understand (its comprehension as Nemo said above). When diving into a new project, I find myself tunning my way of reading at first for some time to understand how something is written (trying to find patterns in their style that convene some meaning, as Python style suggests for class and variable names). As a non-native English speaker, I find this also happens to me when I meet a new person whose accent I’m not familiar with. At first, my brain sweats trying to understand what they are saying… but once I tune in to their pronunciation, if they are talking clearly (in meaning) I have no problem understanding what they say. Of course, that “tuning” time varies depending on their complexity.

I find in programming there are these two aspects too for readability: a style that may encode some type of meaning, and readability of the variables and functions (eg., `x` vs `bird_speed`, `can_see(hawk, starling)` vs `hawk.can_see(starling)`). If I have to keep track of what `x` means, I think my eyes will be up and down trying to remember where it came from. Is that what you mean by reading code as “prose text”? Because, in prose, there’s also some style rules (grammar and punctuation rules, like for example quotes and/or dashes for dialogues – which, I’ve just realised, are different between languages too! [1]).

[1] https://litreactor.com/columns/talk-it-out-how-to-punctuate-dialogue-in-your-prose

@David PS

Source code differs from prose in that it is likely to contain lots of ‘words’ that the reader has not encountered before, so identifier naming is a much more important reading issue.

Yes, developers write source code with an accent, just as they speak a native language with an accent.

As discussed here, identifier naming is a complicated topic, not least because triggering an appropriate semantic association in the reader’s mind is heavily dependent on the associations already present in that mind.

Not really related to eye movements, but related to “jumping around in code”: I was kinda wondering if theres any established metric of code readability that crudely measures the distance ones “gaze” would have to travel to “take in” a pice of code? Here, say, a script that’s broken up into many small functions would get a low score (you would have to “jump around” in the source a lot to understand what’s going on) while a jupyter notebook you can read from top to bottom would get a high score.

While a crappy metric in many ways, I believe it could capture the difficulty I personally feel when reading a new codebase that’s heavily abstracted (functions, calling functions, calling functions in other files, often badly named) rather than being able to read code “from start to finish”.

Btw, love your blog!

@Rasmus Bååth

“Gaze” moves in discrete jumps, saccades, with the length of the jump based on maximizing the information gained (based on experience of character frequencies and the characters the eye’s parafoveal detect to the right; assuming left to right reading). Regressions occur when the jump was too long, and what is seen is not what was expected, also higher levels of processing may have to reset context.

How to predict number of saccades?

The Mr. Chips model is one approach, see page 214 of this pdf http://knosof.co.uk/cbook/

Surely a high percentage of regressions indicates less readability, i.e., difficulty reading in one continuous scan.

Perhaps a heatmap of eye landing positions should not contain any hotspots. See figure 2.17 of this pdf http://knosof.co.uk/ESEUR/

Software researchers are starting to do studies using eye trackers, but I’m not aware of any major findings, yet.