Replicating results using research software

The reproducibility of results, from scientific studies, has always been an important issue. Over the last few years software has become a hot topic in reproducibility circles; many researchers have an expectation that if they run the original researcher’s software, they will replicate the results. Reality has not lived up to their expectations and there has been a lot of flapping around looking for a solution. There is a solution, but first, why does the problem exist?

I have spent a lot of time porting software to different compilers (when I was in the compiler business, I wanted everybody to port their applications to the compiler I was working), different hardware (oh, the days when every major vendor had at least one distinct cpu; not like today where it’s x86, ARM, or embedded), different operating systems (umpteen flavors of Unix, all with slightly different header file contents and library behavior; the Unix wars were good for those in the porting business) and every now and again different languages (by translating).

The Wintel alliance wiped out variation in cpus and operating systems (they can still be found lurking in dark corners) and open source compilers created a near monoculture of compilers for the major languages.

The major software portability problems of 30 years ago have become rather minor. But software portability problems that once tended to be minor (at least for scientific software), have grown to become a major headache. Today’s major portability problems center around evolution of the libraries/packages being used, and longer term the evolution of the language(s) used.

Evolution has created development ecosystems where there are rampant dependencies on specific, or earlier than, or later than versions of libraries/packages. I have been out of the porting business for several decades, but talking to those doing it today, the story is the same; experience in porting from A to B is everything, second best is talking to somebody else who has gone in that direction and third best are the one-line forums such as stackoverflow.

Researchers are doing research on who-knows-what and probably have need-to-know knowledge of software and the libraries they are using, the researchers receiving a copy of the original software might know less. What is the probability that the originating and receiving researchers have exactly the same versions of libraries installed? The receiving researcher may not have any of the required libraries installed, and promptly install the latest version (which may well be more recent than the ones used by the original researcher).

A solution is available; distribute a duplicate of the researchers complete system as a container, e.g., a Docker image.

Containers solve the replication problem. But these days people want more, they actually think it should be possible to take research software and modify it to suite their own needs. Good luck with that.

Research software is written to solve a problem, often by people writing their first non-trivial programs (i.e., they are novices), with no incentive to produce something that is easy for others to use. When software is written by experienced developers, who have an incentive to build something that is easy for others to work with, multiple reimplementations are often still required to achieve something of decent quality. Creating robust software, that others can use, is very hard.

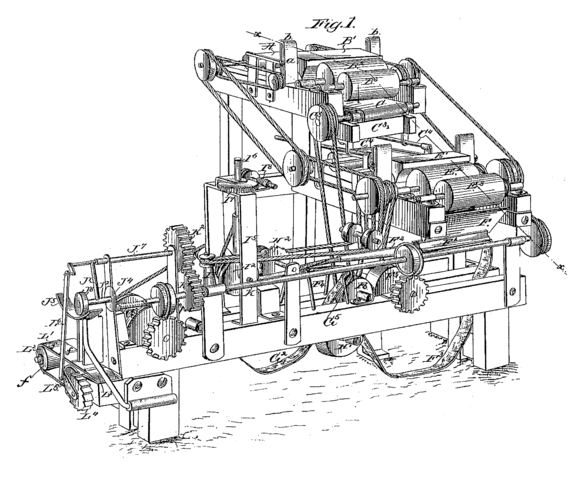

The problem with software is its invisibility; the difficulties are not visible. When the internal operations are visible, the difficulties of making changes are easier to see.

James Albert Bonsack’s cigarette rolling machine (from Wikipedia).

Recent Comments